Cheerio vs Puppeteer: Guide to Choosing the Best Web Scraping Tool

Suciu Dan on Apr 11 2023

Are you trying to decide which tool to use for web scraping? It can be tough to choose between all of the different options out there, but don't worry – I’m here to help. In this article, we'll be taking a closer look at Cheerio vs Puppeteer, two popular tools for web scraping.

Cheerio is a library for parsing and manipulating HTML documents, while Puppeteer is a library for controlling a headless Chrome browser. Cheerio allows you to select elements using a syntax similar to jQuery, while Puppeteer can be used for tasks such as web scraping, testing, and automating form submissions.

So, let's dive in and get scrappy!

What is Cheerio?

Cheerio is a JavaScript library that parses and manipulates HTML documents. It lets you select, modify, and act on elements within a document using a jQuery-like syntax.

Cheerio is lightweight and easy to use, making it a good choice for simple web scraping tasks. It is also faster than driving a full browser like Chrome or Firefox, because it only parses the markup you give it: it does not render the page, execute JavaScript, or load external assets. This makes it ideal for extracting data from static HTML, but it cannot see content that a page builds with client-side JavaScript.

Cheerio has many features and benefits that make it a popular choice for web scraping. Some of the main features and benefits of Cheerio include:

- Lightweight and easy to use: Cheerio is designed to be lightweight and easy to use, making it a great choice for simple web scraping tasks. Its syntax is similar to jQuery, which is familiar to many developers, and it allows you to select and manipulate elements in an HTML document with just a few lines of code.

- Fast: Cheerio is faster than using a full-fledged browser for web scraping, as it doesn't have to load all the assets and resources that a browser would. This makes it a good choice for tasks where speed is important.

- Support for HTML and XML documents: Cheerio can parse and manipulate both HTML and XML documents, giving you the flexibility to work with different types of documents as needed.

- Can be used in combination with other tools: Cheerio can be used in combination with other tools such as the Fetch API or Axios to perform web scraping tasks. This allows you to tailor your workflow to your specific needs and use the best tools for the job.
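As a minimal sketch of the jQuery-like syntax described above, the snippet below reads and modifies a small HTML fragment. The sample markup, the `summarizeList` name, and the `done` class are illustrative assumptions. `load` stands for Cheerio's `load` function, passed in as a parameter so the function does not care where it came from.

```javascript
// Hypothetical sample markup, used only for illustration.
const SAMPLE_HTML =
  '<ul id="fruits"><li class="apple">Apple</li><li class="pear">Pear</li></ul>';

// `load` is expected to be Cheerio's `load` function (require('cheerio').load).
function summarizeList(load, html) {
  const $ = load(html);

  // Select with CSS selectors, much as you would in jQuery.
  const firstItem = $('#fruits li').first().text();

  // Modify the parsed document in memory; the original string is untouched.
  $('li.apple').addClass('done');

  return {
    firstItem,
    itemCount: $('#fruits li').length,
    appleClasses: $('li.apple').attr('class'),
  };
}

// Usage, assuming `npm install cheerio` has been run:
//   const { load } = require('cheerio');
//   console.log(summarizeList(load, SAMPLE_HTML));
```

Nothing here touches the network: Cheerio works on whatever string it is given, which is why it pairs naturally with an HTTP client such as Axios or the Fetch API.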

What is Puppeteer?

Puppeteer is a Node.js library that provides a high-level API for controlling Chrome or Chromium, by default in headless mode. It allows you to automate tasks in the browser without opening a visible window, which reduces the amount of resources your scraper consumes.

You can use Puppeteer to perform actions such as filling out forms, clicking buttons, and extracting data from websites.

One of the main benefits of Puppeteer is that it allows you to interact with websites in a way that is similar to how a human user would. This makes it a good choice for tasks that require more complex interactions with a website, such as logging in, navigating through pages, and filling out forms.

Puppeteer has several features and benefits that make it a popular choice for web scraping and automation. Some of the main features and benefits of Puppeteer include:

- High-level API: Puppeteer provides a high-level API that is easy to use and understand. This makes it a good choice for developers who are new to web scraping or automation.

- Control over a headless Chrome browser: Puppeteer allows you to control a headless Chrome browser, which means you can automate tasks in Chrome without actually opening a Chrome window. This makes it suitable for servers and other environments without a display.

- Mimics human behavior: Puppeteer can mimic human behavior, such as clicking buttons, scrolling, and filling out forms. This makes it a good choice for tasks that require more complex interactions with a website.

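- Support for modern web features: Because Puppeteer drives a real browser, pages run their JavaScript and handle cookies just as they would for a regular visitor. CAPTCHAs are rendered as well, but Puppeteer does not solve them for you. This makes it a good choice for sites that depend on these features.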

- Can be used in combination with other tools: Puppeteer can be used in combination with other tools such as Cheerio to perform web scraping tasks. This allows you to tailor your workflow to your specific needs and use the best tools for the job.
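To make the "interacts like a human user" point concrete, here is a hedged sketch of a login flow. The URL, the selectors in `LOGIN`, and the `logIn` name are hypothetical; the Puppeteer calls used (`page.goto`, `page.type`, `page.click`, `page.waitForNavigation`, `page.url`) are part of its Page API.

```javascript
// Hypothetical selectors and URL; adjust them to the site you automate.
const LOGIN = {
  url: 'https://example.com/login',
  userField: '#username',
  passField: '#password',
  submit: 'button[type="submit"]',
};

// `page` is a Puppeteer Page, e.g. from `await browser.newPage()`.
async function logIn(page, username, password, config = LOGIN) {
  await page.goto(config.url);

  // page.type() sends one key event per character, like a person typing.
  await page.type(config.userField, username);
  await page.type(config.passField, password);

  // Start waiting for the navigation before clicking, so the event is not missed.
  await Promise.all([
    page.waitForNavigation(),
    page.click(config.submit),
  ]);

  // URL of the page we landed on after submitting the form.
  return page.url();
}

// Usage inside an async function, assuming `npm install puppeteer` has been run:
//   const puppeteer = require('puppeteer');
//   const browser = await puppeteer.launch();
//   const page = await browser.newPage();
//   console.log(await logIn(page, 'user', 'secret'));
//   await browser.close();
```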

Differences between Cheerio and Puppeteer

Cheerio and Puppeteer are both popular tools for web scraping, but they have some key differences that make them better suited for certain tasks. Here are some of the main differences between Cheerio and Puppeteer:

- Performance: Cheerio is generally faster than Puppeteer because it doesn't have to load all the assets and resources that a browser would. Puppeteer is slower per page, but it can reach content that only appears after JavaScript runs or after a user interaction, which Cheerio alone cannot see.

- Functionality: Cheerio is good for simple web scraping tasks that involve extracting data from HTML or XML documents. Puppeteer is more powerful and can automate tasks in a headless Chrome browser, such as logging in, navigating pages, and filling out forms.

- Ease of use: Cheerio has a syntax that is similar to jQuery, which is familiar to many developers. This makes it easy to use for those who are already familiar with jQuery. Puppeteer also has a high-level API that is easy to use, but it requires more setup and configuration than Cheerio.

Overall, the choice between Cheerio and Puppeteer depends on the specific needs of your web scraping task. If you just need to extract data from an HTML document and performance is a concern, Cheerio is likely the better choice. If you need to run JavaScript, log in, or otherwise interact with the page, Puppeteer is the right choice.
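One rough way to apply this rule of thumb is to check whether the data you want is already present in the HTML the server sends. The sketch below is an assumption-laden heuristic, not a definitive test: `selectorInStaticHtml` and `suggestTool` are hypothetical names, `load` is Cheerio's `load` function, and `fetchImpl` defaults to the global `fetch` available in Node 18.

```javascript
// Returns true when `selector` matches in the HTML the server sends,
// i.e. before any client-side JavaScript has run.
async function selectorInStaticHtml(url, selector, load, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  const $ = load(await response.text());
  return $(selector).length > 0;
}

// Maps the check to a tool name. A rule of thumb, not a guarantee:
// a site may still block plain HTTP clients or require interaction.
async function suggestTool(url, selector, load, fetchImpl) {
  const found = await selectorInStaticHtml(url, selector, load, fetchImpl);
  return found ? 'cheerio' : 'puppeteer';
}

// Usage inside an async function, assuming `npm install cheerio` has been run:
//   const { load } = require('cheerio');
//   console.log(await suggestTool('https://example.com/', 'h1', load));
```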

Checking or installing Node.js

Before we start, make sure Node.js is installed on your machine. The Node version used in this article is 18.9.0. Run this command to check your local version:

node -v

If you’re getting an error, download and install Node.js from the official website. This will also install `npm`, the package manager for Node.js.
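The same check can be done from inside a script, which is handy at the top of a scraper. This is a small sketch; treating major version 18 as the minimum is an assumption based on the version used in this article, and the helper names are made up for illustration.

```javascript
// Assumed minimum: the article was written against Node 18.9.0.
const REQUIRED_MAJOR = 18;

// Extracts the major version from strings such as "v18.9.0" or "18.9.0".
function parseMajor(version) {
  return Number.parseInt(version.replace(/^v/, '').split('.')[0], 10);
}

function isSupported(version, requiredMajor = REQUIRED_MAJOR) {
  return parseMajor(version) >= requiredMajor;
}

// process.version holds the running interpreter's version, e.g. "v18.9.0".
if (!isSupported(process.version)) {
  console.warn(`Node ${process.version} is older than v${REQUIRED_MAJOR}; consider upgrading.`);
}
```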

Setting up the project

Open the terminal and create a new folder called `scraper`. Access the directory and run the `npm init` command. This will create a `package.json` file for your project containing metadata such as the name, version, and dependencies.

Follow the prompts to enter information about your project. You can press `Enter` to accept the default value for each prompt, or you can enter your own values as desired.

You can now start installing dependencies and creating files for your project:

npm install cheerio puppeteer

The dependencies will be installed in your project's `node_modules` directory and will also be added to the dependencies section of your `package.json` file.

Defining a target

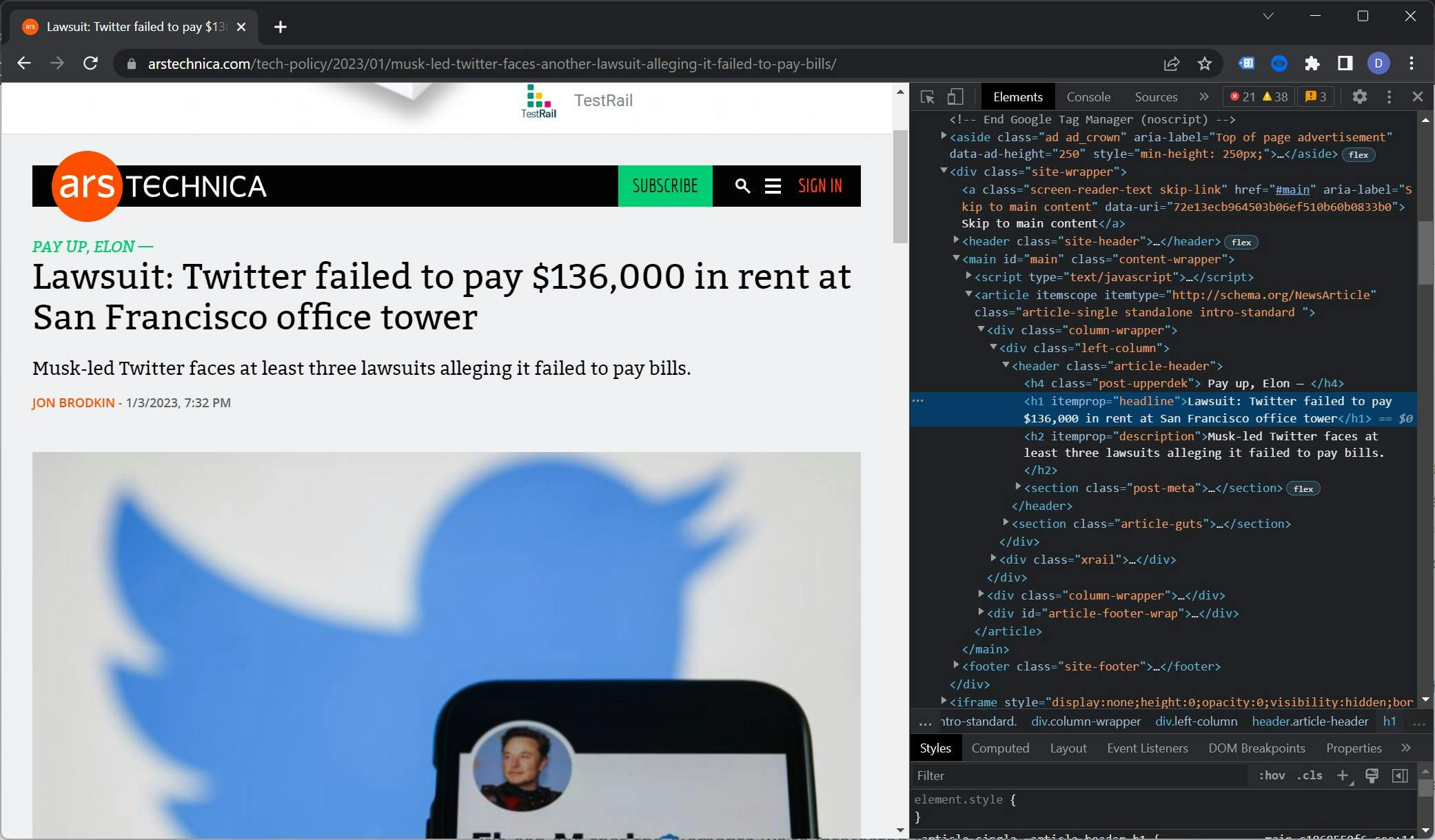

For this article, we will scrape an article from ArsTechnica and extract the article title, the cover image URL, and the first paragraph from the article body.

Basic crash course for data extraction

To begin, go to the target website and open an article. Right-click on the article title and select 'Inspect'. This will open the Developer Tools and highlight the HTML element for the heading tag.

Following good SEO practice, a page should have only one `h1` element. Therefore, `h1` can be used as a reliable selector for the title.

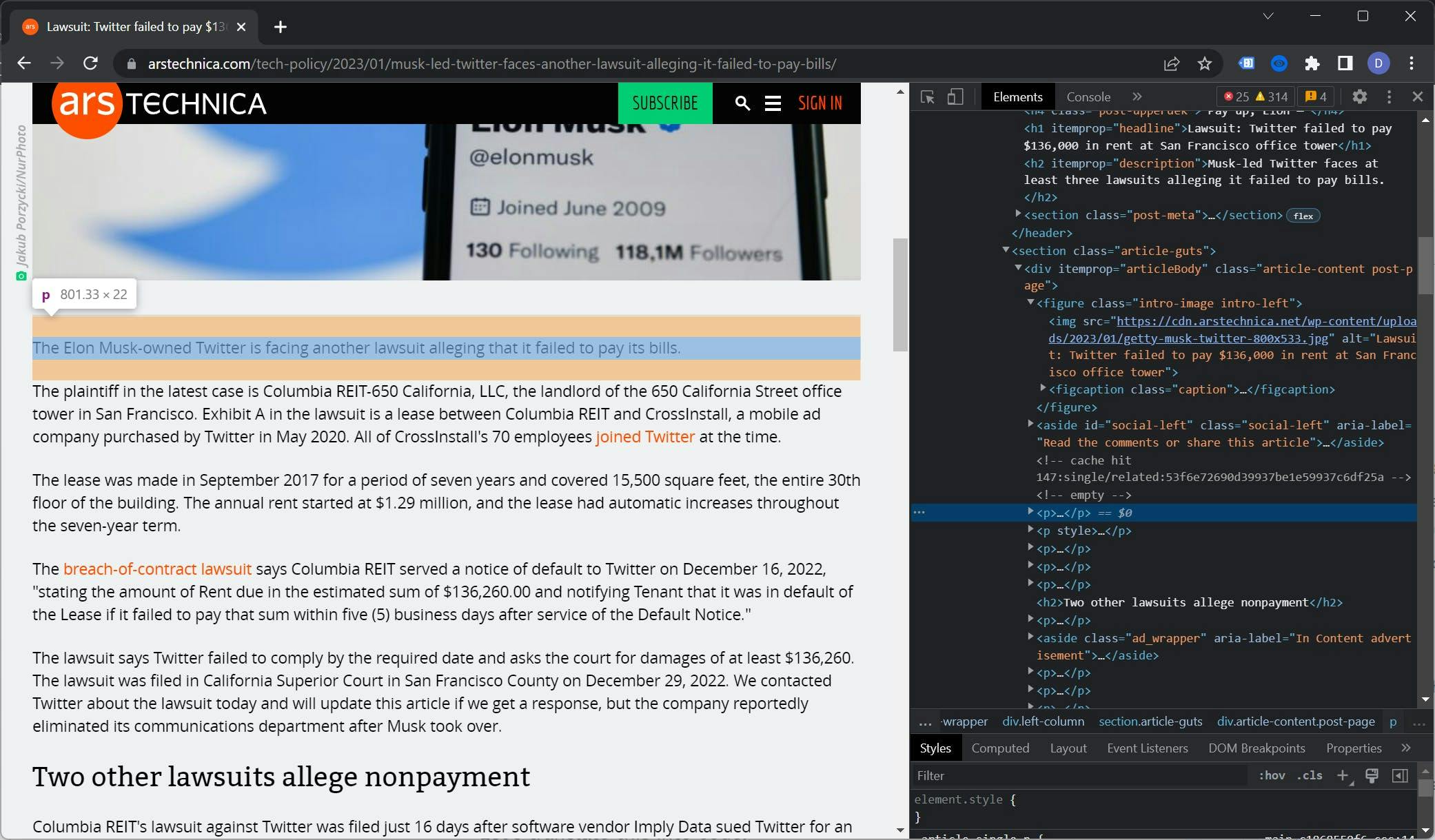

To pick the selector for the cover image, use the Inspect tool. The image is wrapped in a `figure` tag, so it is a good idea to include the parent element in the selector. The final selector is `figure img`.

Last, but not least, we need to find the selector for the first paragraph.

Since the first paragraph is not the first child of its parent, `:first-child` would not match it, so we select by element type instead. The parent element has a class called `article-content`. We can use this class together with the `:first-of-type` selector to create our final selector: `.article-content p:first-of-type`.

Here’s the final list with selectors:

- Title: `h1`

- Cover image: `figure img`

- First paragraph: `.article-content p:first-of-type`
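The selectors above can be kept in one place and applied with Cheerio, as in the sketch below. `extractArticle` is a hypothetical helper, and `$` is the function returned by Cheerio's `load(html)`. One caveat worth knowing: `p:first-of-type` matches the first `p` inside every container under `.article-content`, so the sketch adds `.first()` to keep only the first match.

```javascript
// Selectors chosen in this section, kept together for easy maintenance.
const SELECTORS = {
  title: 'h1',
  coverImage: 'figure img',
  firstParagraph: '.article-content p:first-of-type',
};

// `$` is the function returned by Cheerio's `load(html)`.
function extractArticle($, selectors = SELECTORS) {
  return {
    title: $(selectors.title).first().text().trim(),
    // .first() guards against several matches, e.g. paragraphs in nested containers.
    paragraph: $(selectors.firstParagraph).first().text().trim(),
    // attr() returns undefined when the image is missing; normalize that to null.
    coverImage: $(selectors.coverImage).first().attr('src') ?? null,
  };
}

// Usage, assuming `npm install cheerio` has been run and `html` holds the page markup:
//   const { load } = require('cheerio');
//   console.log(extractArticle(load(html)));
```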

Building the scraper

Now that we have the dependencies in the project, we'll take a look at how to use Cheerio and Puppeteer together to build a web scraper. By combining these two tools, you can create a scraper that is both fast and powerful, so let's get started!

Create a file named `scrape.js` and paste the following code in it:

    const puppeteer = require('puppeteer');
    const cheerio = require('cheerio');

    async function scrape() {
      // Launch a headless Chrome browser
      const browser = await puppeteer.launch();

      // Create a new page
      const page = await browser.newPage();

      // Navigate to the website you want to scrape
      await page.goto('https://arstechnica.com/tech-policy/2023/01/musk-led-twitter-faces-another-lawsuit-alleging-it-failed-to-pay-bills/');

      // Wait for the page to load
      await page.waitForSelector('h1');

      // Extract the HTML of the page
      const html = await page.evaluate(() => document.body.innerHTML);

      // Use Cheerio to parse the HTML
      const $ = cheerio.load(html);

      // Extract the title, cover image, and paragraph using Cheerio's syntax
      const title = $('h1').text();
      const paragraph = $('.article-content p:first-of-type').text();
      const coverImage = $('figure img').attr('src');

      // Display the data we scraped
      console.log({
        title,
        paragraph,
        coverImage
      });

      // Close the browser
      await browser.close();
    }

    scrape();

You can run the code using the `node scrape.js` command. The output should display the title, the first paragraph, and the cover image URL of the article, and should look like this:

    {
      title: 'Lawsuit: Twitter failed to pay $136,000 in rent at San Francisco office tower',
      paragraph: 'The Elon Musk-owned Twitter is facing another lawsuit alleging that it failed to pay its bills.',
      coverImage: 'https://cdn.arstechnica.net/wp-content/uploads/2023/01/getty-musk-twitter-800x533.jpg'
    }
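If navigation or `waitForSelector` throws in a script like this, the browser is never closed and a Chrome process can be left running. One possible hardening, sketched below, wraps the work in `try`/`finally`. `withPageHtml` is a hypothetical helper name, `puppeteer` is the object returned by `require('puppeteer')`, and `handler` is any function you supply to process the HTML (for example, one that calls `cheerio.load`). It also uses `page.content()`, which returns the full serialized document rather than only the body.

```javascript
// `puppeteer` is the object returned by require('puppeteer').
// `handler` receives the page HTML and returns whatever you want to keep.
async function withPageHtml(puppeteer, url, handler, waitFor = 'h1') {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: 'domcontentloaded' });
    await page.waitForSelector(waitFor);

    // page.content() returns the full serialized document, including <head>.
    const html = await page.content();
    return await handler(html);
  } finally {
    // Runs on success and on failure, so no browser process is left behind.
    await browser.close();
  }
}

// Usage inside an async function, assuming both packages are installed:
//   const puppeteer = require('puppeteer');
//   const cheerio = require('cheerio');
//   const title = await withPageHtml(puppeteer, url, (html) => cheerio.load(html)('h1').text());
```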

Taking it to the next level

Now that you've learned how to scrape basic data from a single webpage using Puppeteer and Cheerio, it's time to take your web scraping skills to the next level. Here’s what you can do to turn this basic scraper into a state-of-the-art one:

- Scraping an entire category: By modifying the URL and the selectors in your code, you can scrape the articles from a category. This is useful for scraping large amounts of data or for keeping track of updates to a specific category of content.

- Using a different user agent with each request: By changing the user agent of your scraper, you can bypass restrictions and better mimic human behavior. This can be useful for scraping websites that block or throttle requests based on the user agent.

- Integrating a proxy network: A proxy network can help you rotate IP addresses and avoid detection. This is especially useful for scraping websites that block IPs or rate limit requests.

- Solving captchas: Some websites use captchas to prevent automated scraping. There are several ways to bypass captchas, such as using a captcha solving service or implementing a machine learning model to recognize and solve captchas.
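Two of the ideas above, rotating the user agent and routing traffic through a proxy, can be sketched with Puppeteer as follows. The user-agent strings are example values, and the helper names and option names (`requestIndex`, `proxyServer`, `credentials`) are hypothetical. The calls relied on are `page.setUserAgent`, `page.authenticate`, and Chromium's `--proxy-server` command-line switch.

```javascript
// Example user-agent strings; replace them with ones that match real, current browsers.
const USER_AGENTS = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
  'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
];

// Round-robin selection: request 0 gets the first agent, request 1 the second, and so on.
function pickUserAgent(requestIndex, agents = USER_AGENTS) {
  return agents[requestIndex % agents.length];
}

// Chromium takes the proxy as a command-line switch.
function buildLaunchOptions(proxyServer) {
  return proxyServer ? { args: [`--proxy-server=${proxyServer}`] } : {};
}

// `puppeteer` is the object returned by require('puppeteer').
async function openPage(puppeteer, { requestIndex = 0, proxyServer, credentials } = {}) {
  const browser = await puppeteer.launch(buildLaunchOptions(proxyServer));
  const page = await browser.newPage();
  if (credentials) {
    // Answers the proxy's HTTP authentication challenge.
    await page.authenticate(credentials);
  }
  await page.setUserAgent(pickUserAgent(requestIndex));
  return { browser, page };
}

// Usage inside an async function (the proxy address is a placeholder):
//   const { browser, page } = await openPage(require('puppeteer'), {
//     requestIndex: 3,
//     proxyServer: 'http://proxy.example.com:8080',
//     credentials: { username: 'user', password: 'secret' },
//   });
```

Keeping `pickUserAgent` and `buildLaunchOptions` as small pure functions makes it easy to swap in a different rotation strategy, such as random selection, without touching the browser code.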

Wrapping things up

By now, you should have a good understanding of the strengths and limitations of Cheerio and Puppeteer, and you should be able to make an informed decision about which one is the best fit for your needs. There is a web scraping tool for you, whether you're a beginner or an experienced developer.

If you want to dive deeper into Cheerio, check out this article written by one of my colleagues. It covers topics such as identifying nodes using the Inspect Element, saving the results to disk, and scraping a large site like Reddit in more detail.

We've only just touched on the capabilities of Puppeteer. If you want to learn more, this article goes into more depth and covers topics such as taking a screenshot, submitting a form, and scraping multiple pages.

We hope that this article has helped you to understand the choices available to you and to make the best decision for your web scraping needs.

An Alternative That's Even Easier

While we've discussed the strengths and limitations of Cheerio and Puppeteer, there is another option that you might consider: using a scraper as a service like WebScrapingAPI.

Using a service like this comes with several advantages:

- You can trust that the scraper is reliable and well-maintained: the scraper has dedicated resources to ensure it is up-to-date and working correctly. This can save you a lot of time and effort compared to building and maintaining your own scraper.

- A web scraper service can often be more cost-effective than building your own: you won't have to invest in the development and maintenance of the scraper, and you'll be able to take advantage of any special features or support that the company offers.

- Being detected won’t be an issue anymore: a premium web scraper avoids detection and can often scrape websites more effectively and efficiently than a scraper you build yourself. This saves you time and allows you to focus on analyzing and utilizing the scraped data.

We encourage you to try out our web scraper and see for yourself the benefits of using a trusted and reliable tool. Create a free account now.
