How Web Scraping in R Makes Data Science Fun

Raluca Penciuc on Oct 19 2022

As the web evolves, more and more data is generated dynamically, which makes it harder to obtain through an API. Web scraping in R comes to the aid of data scientists whose projects need huge amounts of data.

Luckily, programming languages also evolved to handle these situations. A popular example of such a language is R, designed to better manage high-volume tasks.

In this tutorial, I will go over the basic principles by talking about R's real-life utility. I will also describe one of the most popular packages used in web scraping with R, rvest. Then I will show how R extracts a website’s data in practice.

Introduction to R

R is an open-source implementation of the S programming language, combined with lexical scoping semantics inspired by Scheme. It first appeared in mid-1993, became open-source in 1995 and had its first stable release (version 1.0.0) in 2000.

Ross Ihaka and Robert Gentleman designed R in the spirit of S, whose goal, in the words of its creator John Chambers, was "to turn ideas into software, quickly and faithfully".

R is a functional programming language and it’s well-known among data scientists. Its most popular use cases are:

- banking;

- finance;

- e-commerce;

- machine learning;

- any other sector that uses large amounts of data.

In comparison with SAS and SPSS, R is the most used analytics tool in the world. Its active and supportive community reaches almost 2 million users.

If we look at some of the companies that use R in their businesses and how they do it, we see:

- Facebook: to update status and its social network graph;

- Google: predicting economic activity and improving the efficiency of online advertising;

- Foursquare: for its recommendations engine;

- Trulia: to predict house prices and local crime rates.

Among other languages, R's main competitor is Python. Both offer web scraping tools and have active communities.

The differences appear when we take a look at the target audience. Python has a very easy-to-learn syntax and many high-level features. This makes it more appealing to beginners and non-technical users.

R can seem a bit intimidating at first, but it’s more focused on statistical analysis. It provides a larger set of built-in data analysis and data visualization tools. Thus it can be a better option for projects where you handle large amounts of data, like web scraping.

About rvest

Rvest is among the most popular packages used for web scraping in R. It offers powerful, yet simple parsing features. It is inspired by Python’s BeautifulSoup and is part of the tidyverse collection.

Cool, but why use rvest when R has native libraries that do the same job? The first good reason is the fact that rvest is a wrapper over the httr and xml2 packages. This means that it handles both the GET request and the HTML parsing.

Thus you use one library instead of two and your code will be much cleaner and shorter. More than this, rvest can also receive a string as input and handle the XML parsing and file downloading as well.
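As a minimal sketch of the string input mentioned above, the snippet below parses a made-up HTML fragment instead of a live page. The fragment and the variable names are assumptions for illustration only.

```r
library(rvest)

# A made-up HTML fragment, used here instead of a live page
html_string <- "<html><body><h1>1984</h1><p class='author'>George Orwell</p></body></html>"

# read_html() accepts a literal string as well as a URL or a file path
page <- read_html(html_string)

# Extract the text of two elements with CSS selectors
title <- page %>% html_element("h1") %>% html_text()
author <- page %>% html_element("p.author") %>% html_text()

print(title)
print(author)
```

Parsing a string this way is handy for trying out selectors without sending any request.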

Yet keep in mind that more and more websites generate their content dynamically. The reasons are diverse: performance, user experience, and many others. Rvest cannot execute JavaScript, so for such pages you should look for an alternative.

Scraping with R

Okay, enough theory. Let’s see how R behaves in a real-life use case. For this tutorial, I picked the Goodreads page of a very famous book: George Orwell’s 1984. You can find the website here: https://www.goodreads.com/book/show/61439040-1984.

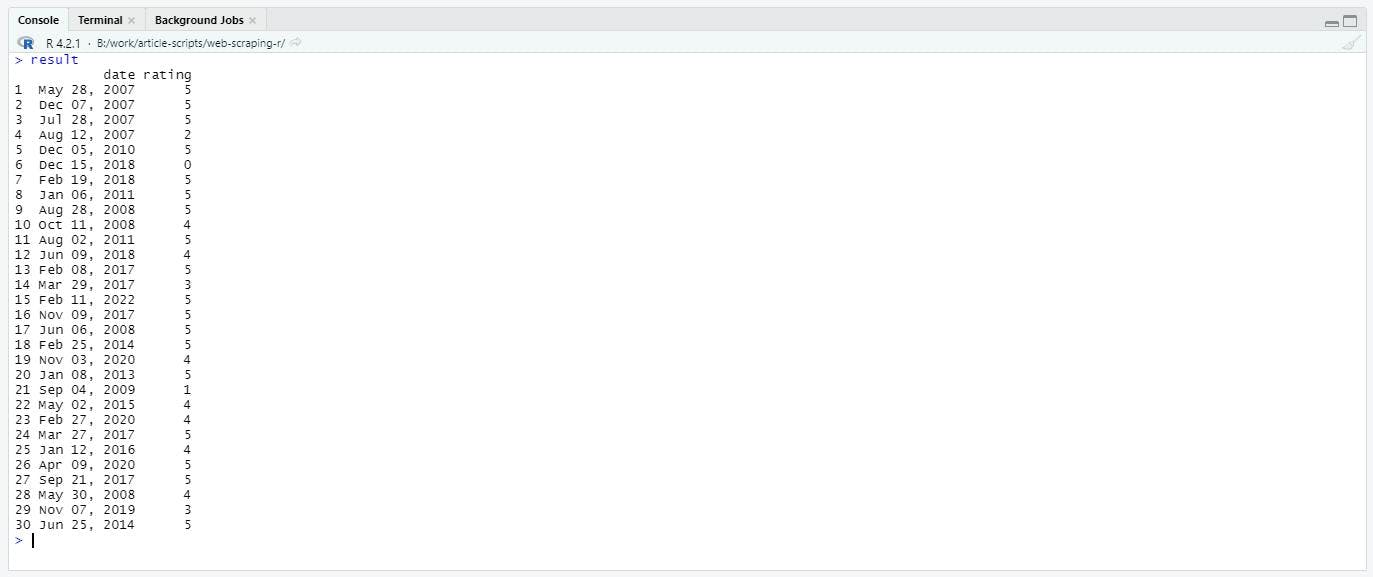

I want to see how the popularity of this book evolved over the years. To estimate this, I will scrape the reviews list and extract the date and the rating for each review. As the final step, I will save the data in an external file that can be later processed by other programs.

Set up the environment

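But first, you have to make sure that you have everything you need to write the code. That starts with R itself, which you can download from CRAN (the Comprehensive R Archive Network) and install by following the instructions for your operating system.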

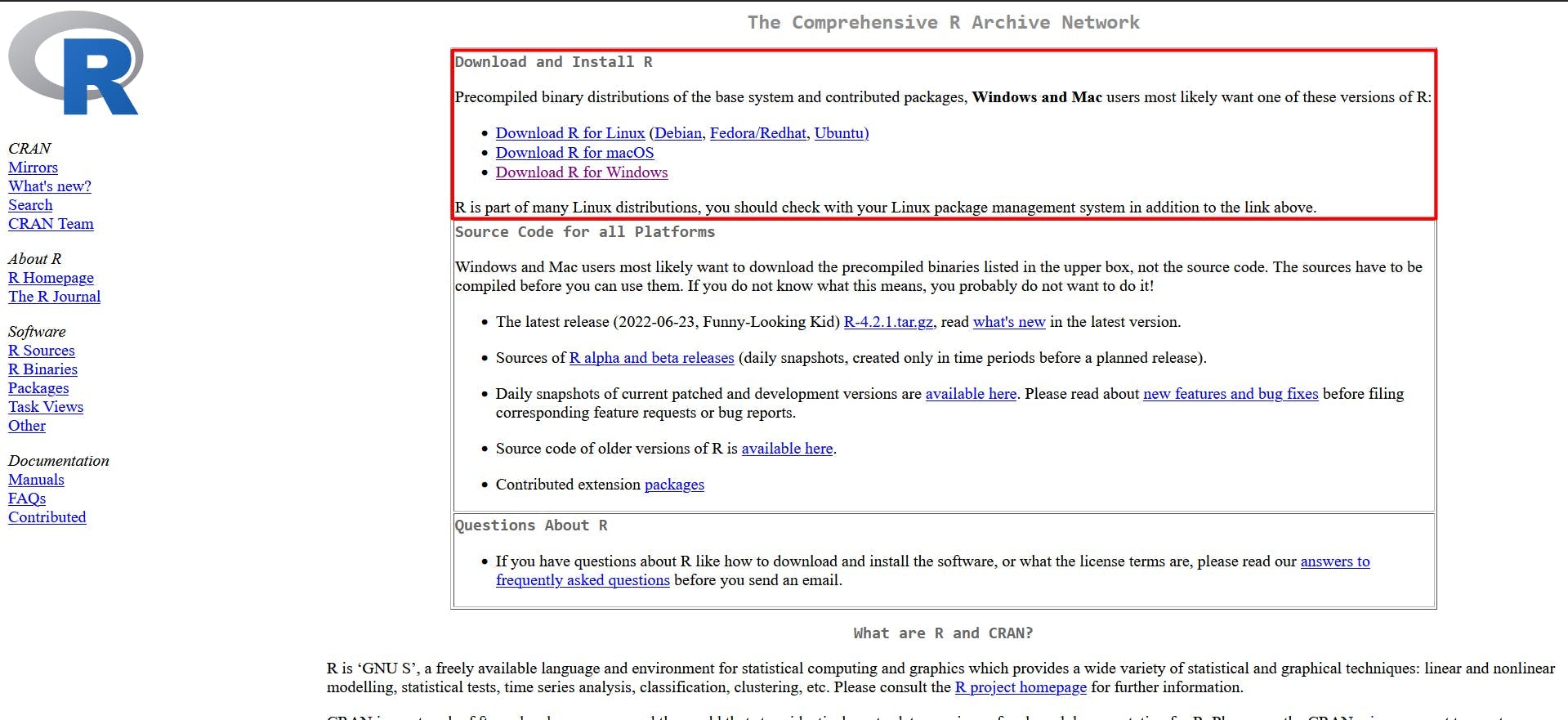

As for the IDE, you have two options:

- install an R plugin for Visual Studio Code;

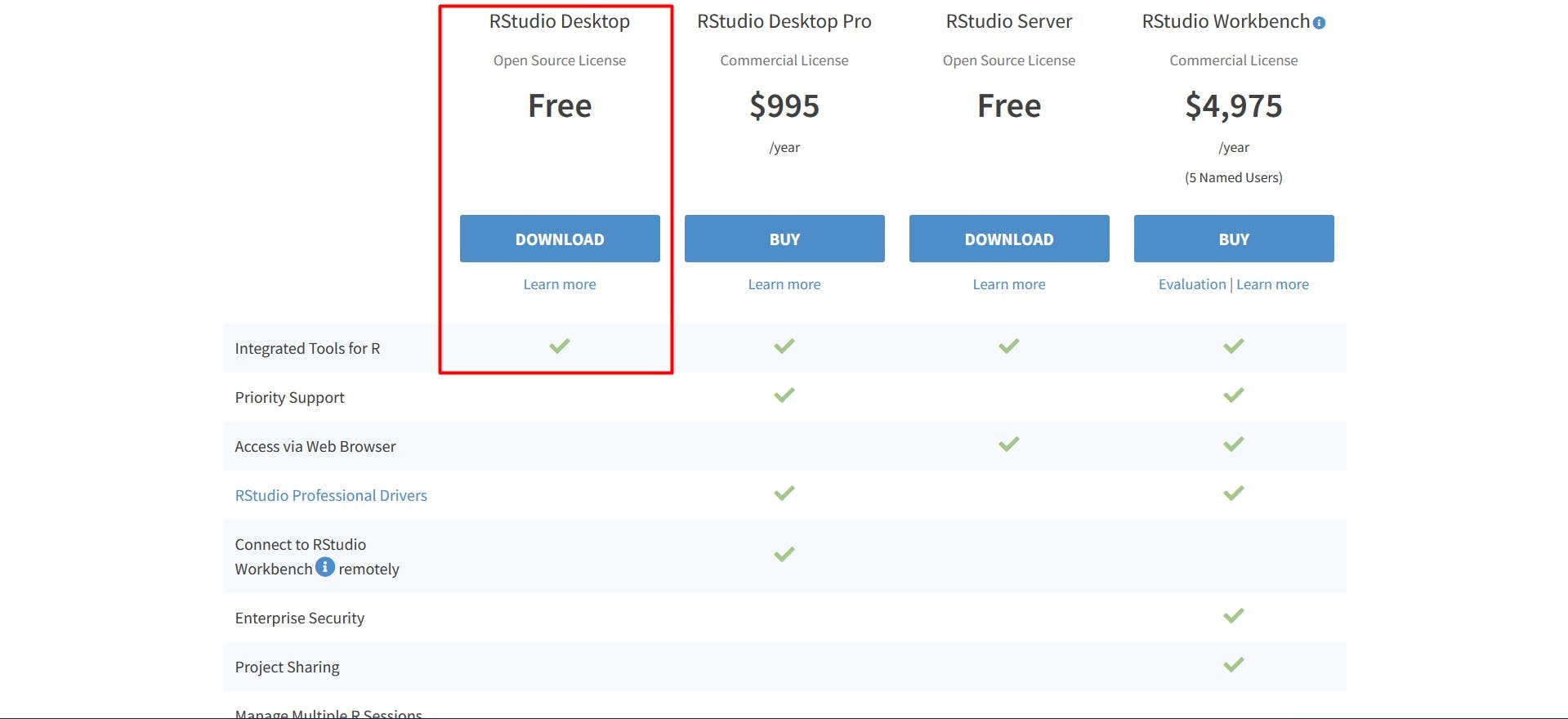

- download RStudio, designed to make coding in R effortless.

In this tutorial, I will be using the latter. You can download it here: https://www.rstudio.com/products/rstudio/download/.

RStudio Desktop's free version is enough to get you comfortable with the basics. Like before, follow the installation instructions.

Open RStudio and create a new empty directory. I will write the code in a new file named "goodreads-rvest.r".

Presenting the browser

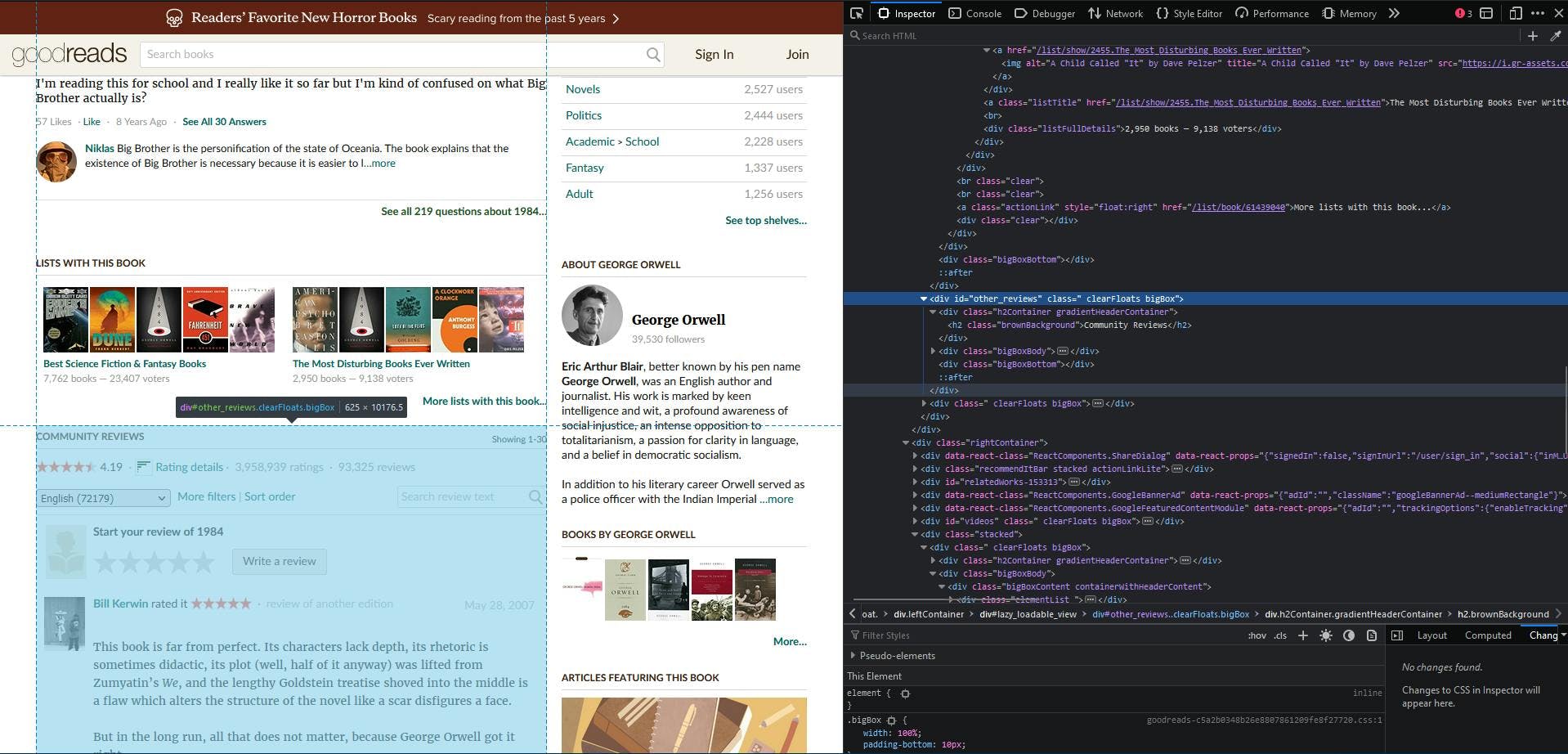

Now, before extracting the data, you have to know exactly what data you want. Rvest handles both CSS and XPath selectors, so pick your fighter.
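To make the choice concrete, here is a small sketch that selects the same elements once with a CSS selector and once with XPath. The markup is invented for the example and is not taken from Goodreads.

```r
library(rvest)

# Invented markup, wrapped into a full document by minimal_html()
page <- minimal_html('
  <div id="books">
    <p class="title">1984</p>
    <p class="title">Animal Farm</p>
  </div>
')

# CSS selector: paragraphs with the "title" class inside the #books container
css_titles <- page %>%
  html_elements("div#books p.title") %>%
  html_text()

# XPath expression that targets the same paragraphs
xpath_titles <- page %>%
  html_elements(xpath = "//div[@id='books']/p[@class='title']") %>%
  html_text()

# Both approaches should return the same character vector
print(identical(css_titles, xpath_titles))
```

For simple class and id lookups like these, CSS selectors tend to be shorter, while XPath can express conditions that CSS cannot.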

If you plan on starting more complex scraping projects, I recommend a basic knowledge of HTML and CSS. Here is a good playground to get started.

If you are not familiar with the whole HTML thing, there are some non-technical options as well. For example, Chrome offers the browser extension SelectorGadget. It allows you to click anywhere on the page and it shows you the CSS selector to get the data.

Yet not every website is as simple as Goodreads. I will choose to retrieve the data using CSS selectors found by manually inspecting the HTML.

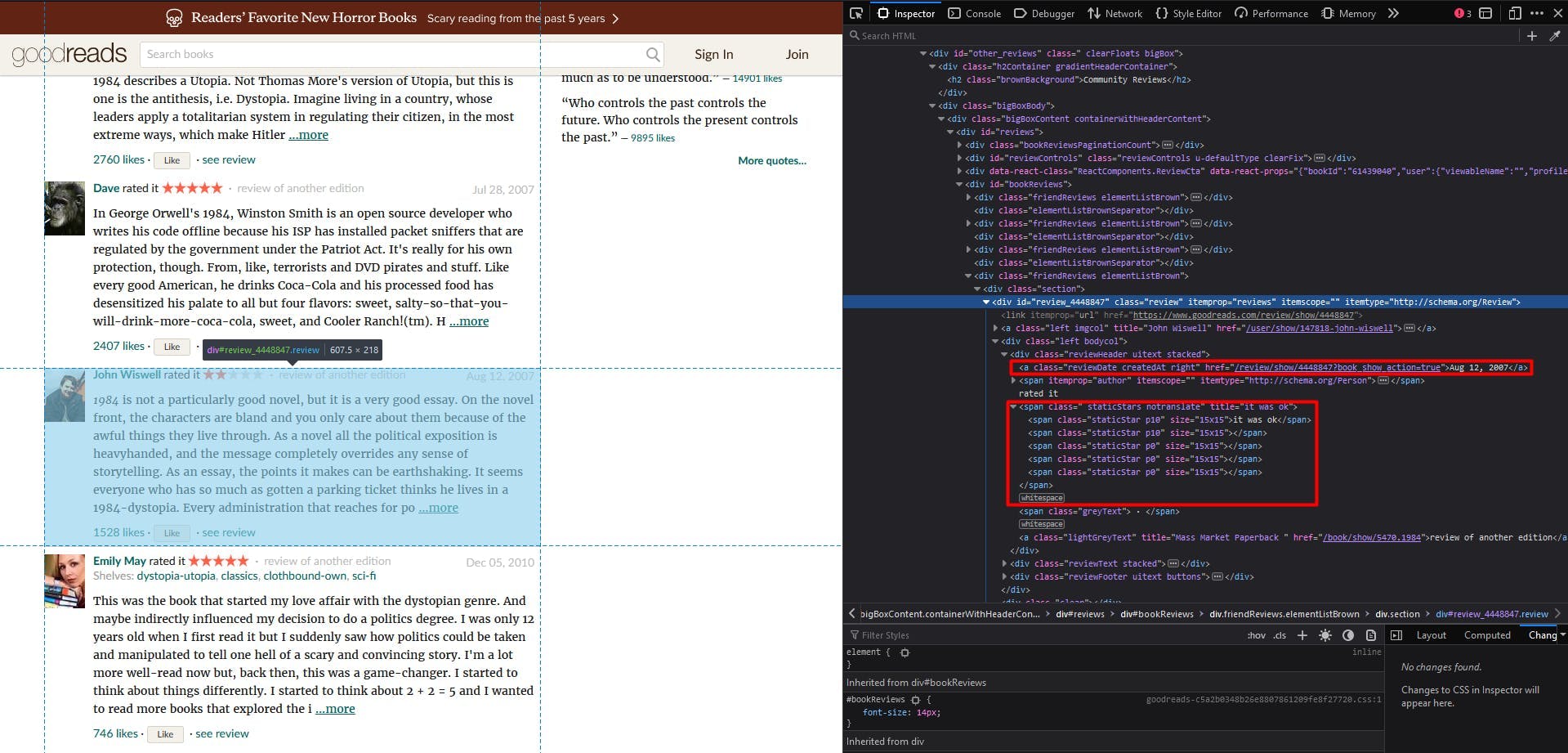

Navigate to our target URL in your browser and scroll down to the “Community Reviews” section. Then right-click on it and choose "Inspect" to open the Developer Tools.

The container with the “other_reviews” id is the one I will focus on. Now use the “Inspect” tool to find the CSS selectors for a review’s date and rating.

So, you can notice the following:

- each individual review is a div container with the “review” class;

- the review’s date is a single anchor element with the “reviewDate” class;

- the review’s rating is a span element with the “staticStars” class. It has five span elements as children, the number of stars that a user can set. We will look at the coloured ones, which have the “p10” class.
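The structure listed above can be tried out offline first. The sketch below builds a hypothetical fragment that mimics it (the real Goodreads markup contains far more attributes and nesting) and checks that the selectors pick up a date and a star count.

```r
library(rvest)

# Hypothetical markup that only mimics the structure described above
mock_page <- minimal_html('
  <div id="other_reviews">
    <div class="review">
      <a class="reviewDate" href="#">Oct 19, 2022</a>
      <span class="staticStars">
        <span class="p10"></span><span class="p10"></span>
        <span class="p10"></span><span class="p10"></span>
        <span class="p0"></span>
      </span>
    </div>
    <div class="review">
      <a class="reviewDate" href="#">Jan 03, 2021</a>
      <span class="staticStars">
        <span class="p10"></span><span class="p10"></span>
        <span class="p0"></span><span class="p0"></span>
        <span class="p0"></span>
      </span>
    </div>
  </div>
')

# Every review container inside the reviews section
reviews <- mock_page %>% html_elements("#other_reviews div.review")

# Inspect the first review: its date and the number of coloured stars
first_review <- reviews[[1]]
first_date <- first_review %>% html_element("a.reviewDate") %>% html_text()
first_stars <- first_review %>%
  html_element("span.staticStars") %>%
  html_elements("span.p10") %>%
  length()

print(first_date)
print(first_stars)
```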

Extracting the reviews

After checking all the prerequisites, you can finally start writing the code.

install.packages('rvest')

Place the cursor at the end of the line and press the “Run” button above the code editor. You will see in your console the progress of the package’s installation.

The installation happens once, so now you can comment or delete the previous line:

#install.packages('rvest')

Now you have to load (or import) the library:

library(rvest)

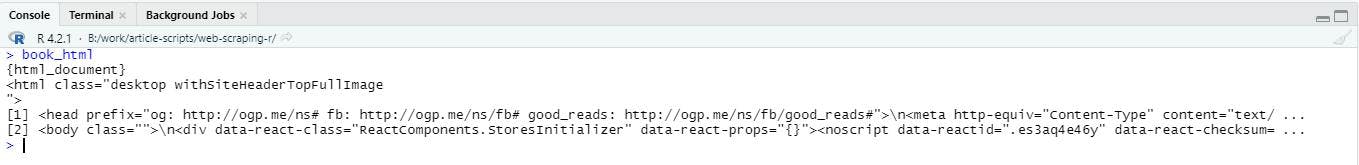

I will use the read_html function to send a GET request to the target website and download the needed HTML document:

book_html <- read_html("https://www.goodreads.com/book/show/61439040-1984")

The result is now stored in the book_html variable, which you can also see by simply typing in the console:

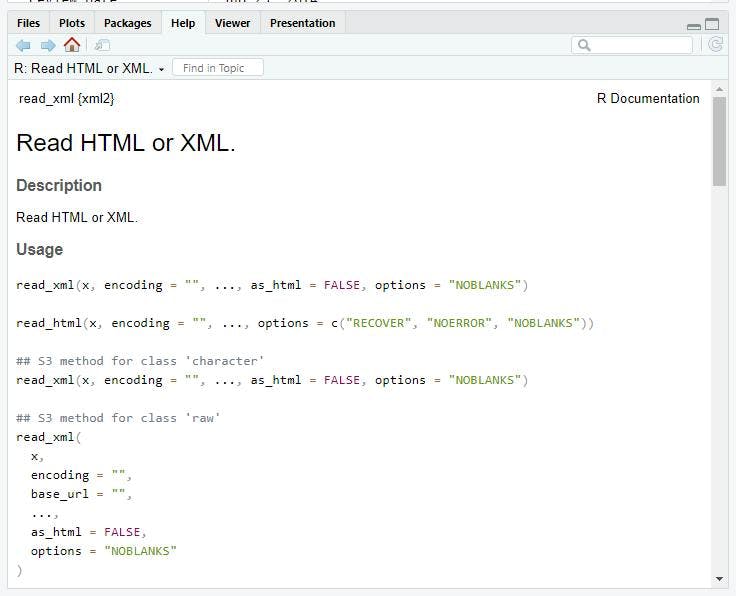
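A request can fail, for example when the network is down or the address is wrong. One possible way to guard against that is sketched below. Note that safe_read_html is a hypothetical helper written for this example and not part of rvest, and the file name is made up so that the call fails on purpose.

```r
library(rvest)

# safe_read_html is a hypothetical helper, not an rvest function:
# it returns the parsed document, or NULL if reading fails
safe_read_html <- function(source) {
  tryCatch(
    read_html(source),
    error = function(e) {
      message("Could not read the document: ", conditionMessage(e))
      NULL
    }
  )
}

# A made-up path that does not exist triggers the error branch
missing_page <- safe_read_html("no-such-file-for-this-sketch.html")

# A literal HTML string is parsed normally
ok_page <- safe_read_html("<html><body><p>ok</p></body></html>")

print(is.null(missing_page))
```

With a helper like this, the rest of the script can check for NULL before trying to extract anything.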

If you need at any moment to check out the official documentation for a function you want to use, type in the console:

help(function_name)

RStudio will open an HTTP server with a direct link to the docs. For read_html the output will be:

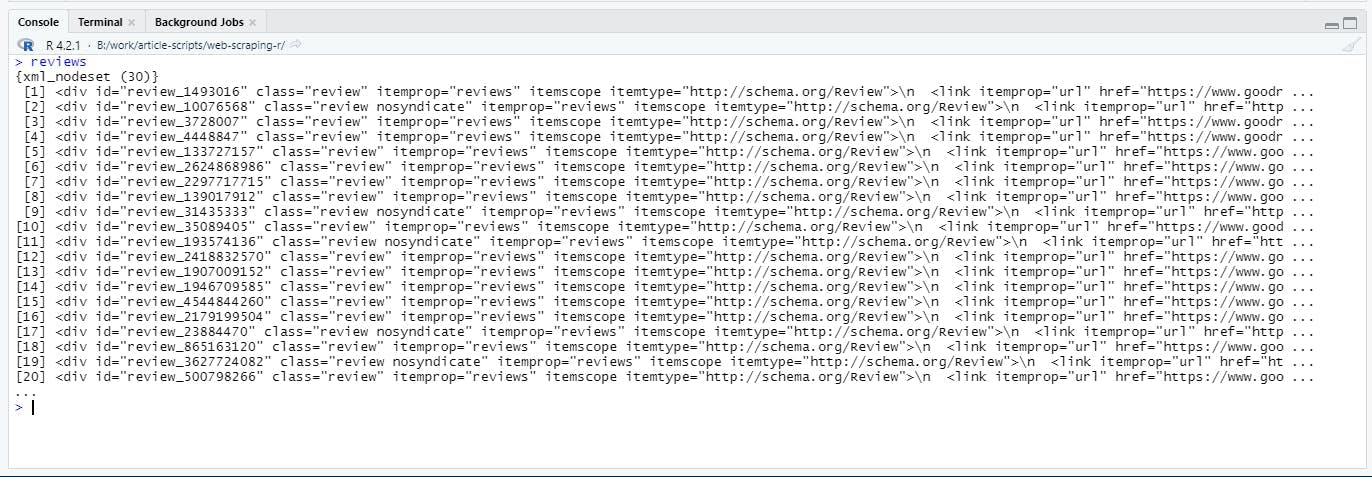

To get the reviews list, I will use the html_elements function. It will receive as input the CSS selector I found earlier:

reviews <- book_html %>% html_elements('div.review')

The result will be a list of XML nodes, which I will iterate over to get the date and the rating of each review.

R programmers use the pipe operator “%>%” to make code easier to read. Its role is to pass the value of the left operand as the first argument to the function on the right.

You can chain several calls (as you will see later in this guide), which helps you eliminate plenty of local variables. The previous line of code written without the pipe operator would look like this:

reviews <- html_elements(book_html, 'div.review')
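The benefit becomes clearer with a longer chain. In the sketch below, the same steps are written once as nested calls and once with the pipe. The one-paragraph page is made up for the example.

```r
library(rvest)  # rvest re-exports the %>% operator from magrittr

# A made-up one-paragraph page
page <- minimal_html("<p>  Nineteen Eighty-Four  </p>")

# Nested calls: they have to be read from the inside out
nested <- toupper(trimws(html_text(html_element(page, "p"))))

# Piped calls: they read from left to right, with no intermediate variables
piped <- page %>%
  html_element("p") %>%
  html_text() %>%
  trimws() %>%
  toupper()

# Both forms compute the same value
print(identical(nested, piped))
```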

To gather the data, I will initialize two vectors outside the loop. By taking a quick look at the website, I can guarantee that both vectors will have the same length.

dates <- vector()

ratings <- vector()

Now, while iterating through the reviews list, I look for two values: date and rating. As you saw before, the date is an anchor element that has the reviewDate class.

The rating is a span element with the staticStars class, and it contains five span elements, one for each star. If the user awarded a star, then the span element will have the p10 class name while the rest of them will have the p0 class name.

The code will look like this:

for (review in reviews) {
  # The date is the text of the anchor with the reviewDate class
  review_date <- review %>% html_element('a.reviewDate') %>% html_text()
  dates <- c(dates, review_date)

  # The rating is the number of coloured stars (span elements with the p10 class)
  review_rating_element <- review %>% html_element('span.staticStars')
  valid_stars <- review_rating_element %>% html_elements('span.p10')
  review_rating <- length(valid_stars)
  ratings <- c(ratings, review_rating)
}

Note the html_element function; it is not a typo. You can use html_elements when you want to extract a list of XML nodes and html_element for a single one.

In this case, I applied the latter for a smaller section of the HTML document (a review). I also used the html_text function to help me get the text content of the element I found.
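As an alternative to growing the vectors inside a loop, the same data can be collected in a vectorized way. This is only a sketch on hypothetical markup that mimics the review structure, not the approach used in the rest of this tutorial.

```r
library(rvest)

# Hypothetical markup mimicking two reviews
mock_page <- minimal_html('
  <div class="review">
    <a class="reviewDate">Oct 19, 2022</a>
    <span class="staticStars">
      <span class="p10"></span><span class="p10"></span><span class="p10"></span>
      <span class="p10"></span><span class="p0"></span>
    </span>
  </div>
  <div class="review">
    <a class="reviewDate">Jan 03, 2021</a>
    <span class="staticStars">
      <span class="p10"></span><span class="p10"></span><span class="p0"></span>
      <span class="p0"></span><span class="p0"></span>
    </span>
  </div>
')

reviews <- mock_page %>% html_elements("div.review")

# html_element() applied to a node set returns one match per review
dates <- reviews %>% html_element("a.reviewDate") %>% html_text()

# Count the p10 spans of each review; vapply guarantees an integer vector
ratings <- vapply(
  seq_along(reviews),
  function(i) {
    reviews[[i]] %>%
      html_element("span.staticStars") %>%
      html_elements("span.p10") %>%
      length()
  },
  integer(1)
)

print(data.frame(date = dates, rating = ratings))
```

Because html_element returns exactly one result per review, even when nothing matches, the two vectors keep the same length by construction.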

Finally, I will merge the two vectors in a single data frame to centralize the data:

result <- data.frame(date = dates, rating = ratings)

And the final result will look like this:

Saving the results

Scraping is of little use if the results are not stored somewhere. In R, writing to a CSV file takes a single line:

write.csv(result, "reviews.csv")

The first argument has to be a matrix or a data frame (which result already is); otherwise, write.csv attempts a conversion. Run the code and check the project directory. You will see that you can open the table in a text editor, Excel, etc.
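By default, write.csv also writes the row names as an extra first column. If you do not want them, the sketch below shows one way to drop them and to check the file by reading it back. The sample data and the temporary file are assumptions that stand in for the scraped result and for reviews.csv.

```r
# Sample data standing in for the scraped result
result <- data.frame(
  date = c("Oct 19, 2022", "Jan 03, 2021"),
  rating = c(4L, 2L)
)

# A temporary file keeps this sketch out of the project directory
csv_path <- tempfile(fileext = ".csv")

# row.names = FALSE drops the extra column of row numbers
write.csv(result, csv_path, row.names = FALSE)

# Read the file back to confirm that the round trip preserves the data
restored <- read.csv(csv_path, stringsAsFactors = FALSE)
print(restored)
```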

You may have noticed that our data list has only 30 entries. The website shows over 90,000 reviews and over 3 million ratings. So what happened? Well, pagination.

More than that, go back to your browser and click the second page. You will notice that the list changes but the URL does not. This means that the website keeps the current page in client-side state and loads the next section of the list dynamically.

In these situations, rvest may not be helpful. Instead, an automated browser can help mimic the click behavior to load the rest of the list. An example of such a library is RSelenium, but I will leave this subject as a follow-up exercise.

Conclusion

I hope this tutorial has given you a solid starting point in web scraping with R. Now you can decide more easily on your next project's tech stack.

Yet please note that this article did not cover web scraping's many challenges. You can find them described in more detail in this self-explanatory guide.
