Web Scraper with C# in Just a Few Minutes!

Suciu Dan on Oct 12 2022

The importance of information gathering has been known since ancient times, and people who used it to their advantage have prospered.

Today we can do that much more easily and quickly by using a scraping tool, and creating your own scraper isn’t difficult, either. The ability to gather leads faster, keep an eye on both the competition and your own brand, and learn more before investing in ideas is at your fingertips.

You may already know all of this.

If you want to learn more about web scraping, or how to build your own tool in C#, tag along!

Is web scraping legal?

Well, it is generally fine as long as the website you wish to scrape is ok with it. You can check that by appending “/robots.txt” to the site’s root URL, like so: http://httpbin.org/robots.txt, and reading the permissions listed there, or by looking through the site’s Terms of Service (TOS).
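As a rough illustration of what reading those permissions involves, the sketch below scans the text of a robots.txt file for the Disallow rules that apply to all crawlers. The names RobotsCheck, DisallowedForAll, and IsAllowed are made up for this example, and the sample rules are typed in by hand rather than fetched from a site. The logic is deliberately simplified: it ignores Allow rules, wildcards, and groups aimed at specific crawlers.

```csharp
using System;
using System.Collections.Generic;

public static class RobotsCheck
{
    // Collects the Disallow paths listed under "User-agent: *".
    // Simplified: ignores Allow rules, wildcards, and crawler-specific groups.
    public static List<string> DisallowedForAll(string robotsTxt)
    {
        var disallowed = new List<string>();
        bool inWildcardGroup = false;

        foreach (var rawLine in robotsTxt.Split('\n'))
        {
            // Drop comments and surrounding whitespace.
            var line = rawLine.Split('#')[0].Trim();
            int colon = line.IndexOf(':');
            if (colon < 0) continue;

            var field = line.Substring(0, colon).Trim().ToLowerInvariant();
            var value = line.Substring(colon + 1).Trim();

            if (field == "user-agent")
                inWildcardGroup = value == "*";
            else if (field == "disallow" && inWildcardGroup && value.Length > 0)
                disallowed.Add(value);
        }
        return disallowed;
    }

    // True when no collected Disallow rule is a prefix of the given path.
    public static bool IsAllowed(string robotsTxt, string path)
    {
        foreach (var rule in DisallowedForAll(robotsTxt))
        {
            if (path.StartsWith(rule, StringComparison.Ordinal)) return false;
        }
        return true;
    }
}

public static class Program
{
    public static void Main()
    {
        // Hand-written sample rules, used only for illustration.
        string sample = "User-agent: *\nDisallow: /deny\n";
        Console.WriteLine(RobotsCheck.IsAllowed(sample, "/deny/page")); // False
        Console.WriteLine(RobotsCheck.IsAllowed(sample, "/html"));      // True
    }
}
```

A check like this only covers robots.txt; the site’s TOS still has to be read by a person.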

What is web scraping with C#?

Web scraping is an automated technique used by companies of all sizes to extract data for various purposes, such as price optimization or email gathering. Researchers use web scraping to collect data reports and statistics, and developers get large amounts of data for machine learning.

How does it work? Well, for most web scraping tools, all you need to do is specify the URL of the website from which you wish to extract data. Depending on the scraper's abilities, it will extract that web page’s information in a structured manner, ready for you to parse and manipulate in any way you like.

Keep in mind that some scrapers only read the raw HTML that the server returns, so they miss the content that a dynamic web page fills in with JavaScript. In this case, a more sophisticated web scraping tool is needed to complete the job.

Using a web scraper can greatly reduce the amount of time you’d normally spend on this task. Manually copying and pasting data doesn’t sound like a fun thing to do over and over again. Think about how long it would take to gather vast amounts of data to train an AI by hand! If you are interested in knowing more about why data extraction is useful, have a look!

Let’s see how we can create our web scraping tool in just a few minutes.

Creating your own web scraper in C#

In this tutorial, I will show you how a web scraper can be written in C#. I know that a different programming language such as Python can be more convenient for this task, but that doesn’t mean it is impossible to do it in C#.

Coding in C# has its advantages, such as:

- It is object-oriented;

- It integrates and interoperates well with other technologies;

- It is cross-platform.

1. Choosing the page you want to scrape

First things first, you need to decide what web page to scrape. In this example, I will scrape the Wikipedia article on Greece and see which subjects are listed in its Table of Contents. This is an easy example, but you can scale it to other web pages as well.

2. Inspecting the code of the website

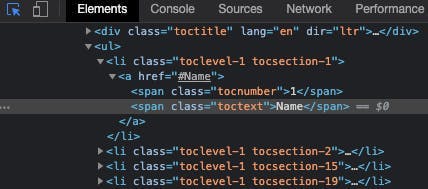

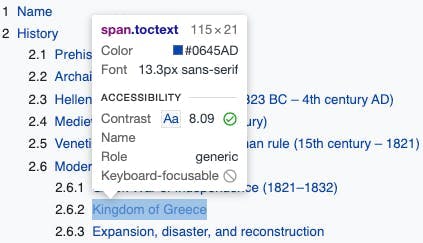

Using the developer tools, you can inspect each element to see which tag holds the information you need. Simply right-click on the web page and select “Inspect”, and a “Browser Inspector Box” will pop up.

You can search for the class directly in the Elements section, or use the inspect tool to pick the element on the web page, as in the screenshots above.

You have now found that the data you need is located within the span tags that have the class toctext. What you’ll do next is extract the whole HTML of the page, parse it, and select only the data within that specific class. Let’s make some quick preparations first!
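To make the parse-and-select idea concrete, here is a minimal sketch that runs the selection on a small hand-written HTML fragment instead of the live page. It assumes the HtmlAgilityPack package (installed in step 3) is available. The TocSelector class and its Extract method are hypothetical names used only here, and the fragment merely imitates a table of contents.

```csharp
using System;
using System.Collections.Generic;
using HtmlAgilityPack;

public static class TocSelector
{
    // Returns the text of every <span> whose class attribute is exactly "toctext".
    public static List<string> Extract(string html)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);

        var titles = new List<string>();
        var nodes = doc.DocumentNode.SelectNodes("//span[@class='toctext']");

        // SelectNodes returns null (not an empty collection) when nothing matches.
        if (nodes == null) return titles;

        foreach (var node in nodes)
        {
            titles.Add(node.InnerText);
        }
        return titles;
    }
}

public static class Program
{
    public static void Main()
    {
        // Hand-written fragment that imitates a table of contents.
        string html =
            "<ul>" +
            "<li><span class='tocnumber'>1</span> <span class='toctext'>History</span></li>" +
            "<li><span class='tocnumber'>2</span> <span class='toctext'>Geography</span></li>" +
            "</ul>";

        foreach (var title in TocSelector.Extract(html))
        {
            Console.WriteLine(title); // prints History, then Geography
        }
    }
}
```

Note that the expression `@class='toctext'` compares the whole attribute value, so a span carrying several classes would not match it.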

3. Prepare the workspace

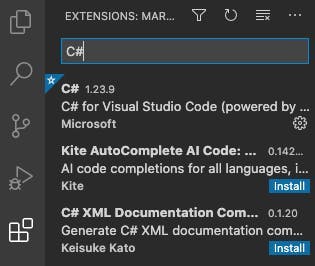

You can use whatever IDE is comfortable for you. In this example, I will use Visual Studio Code. You will also need to install the .NET SDK.

Now you need to create your project. To do so, open Visual Studio Code, go to the Extensions menu, and install C# for Visual Studio Code.

You need a place to write and run your code. In the menu bar, select File > Open Folder (File > Open… on macOS) and, in the dialog, create a folder that will serve as your workspace.

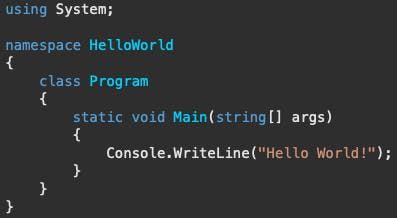

After you have created the workspace, you can create a simple “Hello World” application template by entering the following command in your project’s terminal:

dotnet new console

Your new project should look like this:

Next, you need to install these two packages:

- HtmlAgilityPack is an HTML parser written in C# to read/write DOM.

- CsvHelper is a package that’s used to read and write CSV files.

You can install them by running these commands in your project’s terminal:

dotnet add package csvhelper

dotnet add package htmlagilitypack

4. Writing the code

Let’s import the packages you installed a few minutes ago, along with some other helpful namespaces for later use:

using CsvHelper;
using HtmlAgilityPack;
using System.IO;
using System.Collections.Generic;
using System.Globalization;

Outside your Main function, you will create a public class for your table of contents titles.

public class Row
{
    // One table-of-contents entry; the property name becomes the CSV column header.
    public string Title { get; set; }
}
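To see what CsvHelper does with a class like this, the sketch below writes two sample rows to an in-memory string instead of a file. It assumes the CsvHelper package is installed. CsvDemo and ToCsv are hypothetical names for this example, and the titles are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using CsvHelper;

public class Row
{
    public string Title { get; set; }
}

public static class CsvDemo
{
    // Serializes the rows to CSV text in memory rather than to a file.
    public static string ToCsv(IEnumerable<Row> rows)
    {
        using (var writer = new StringWriter())
        using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))
        {
            csv.WriteRecords(rows);
            csv.Flush(); // push any buffered output into the StringWriter
            return writer.ToString();
        }
    }
}

public static class Program
{
    public static void Main()
    {
        // Sample titles made up for the demonstration.
        var rows = new List<Row>
        {
            new Row { Title = "History" },
            new Row { Title = "Geography" },
        };

        // The first line of the output is the header, taken from the property name "Title".
        Console.Write(CsvDemo.ToCsv(rows));
    }
}
```

Adding more properties to Row would add more columns, one per property.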

Now, coming back to the Main function, you need to load the page you wish to scrape. As I mentioned before, we will look at what Wikipedia has to say about Greece!

HtmlWeb web = new HtmlWeb();

HtmlDocument doc = web.Load("https://en.wikipedia.org/wiki/Greece");

Our next step is to parse and select the nodes containing the information you are looking for, which is located in the span tags with the class toctext.

var HeaderNames = doc.DocumentNode.SelectNodes("//span[@class='toctext']");

What should you do with this information now? Let’s store it in a .csv file for later use. To do that, you first need to iterate over each node you extracted earlier and store its text in a list.

CsvHelper will do the rest of the job, creating and writing the extracted information into a file.

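var titles = new List<Row>();

// SelectNodes returns null when nothing matches, so guard before looping.
if (HeaderNames != null)
{
    foreach (var item in HeaderNames)
    {
        // InnerText is the node's text without any HTML tags.
        titles.Add(new Row { Title = item.InnerText });
    }
}

// Replace "your_path_here" with a real folder on your machine.
using (var writer = new StreamWriter("your_path_here/example.csv"))
using (var csv = new CsvWriter(writer, CultureInfo.InvariantCulture))
{
    // Writes a header row ("Title") followed by one line per record.
    csv.WriteRecords(titles);
}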

5. Running the code

The code is done, and now you just have to run it! Enter this command in the terminal. Be sure you have saved your file first!

dotnet run

You’re done!

I hope this article helped you better understand the basics of web scraping using C#.

It is very useful to have a scraper of your own, but keep in mind that this one scrapes a single web page at a time, and you still have to manually select the tags inside the website’s HTML code.

If you want to scrape several other pages, a scraper will be a lot faster than selecting the information manually, yes, but not all websites can be scraped using this method. Some websites are dynamic, and this example won’t extract all their data.
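Scraping one page at a time still lends itself to a loop. The following is a minimal sketch of that idea, not part of the tutorial’s code: MultiPageScraper and ScrapeAll are hypothetical names, the second URL is chosen only for illustration, and the one-second pause is an arbitrary courtesy delay. It assumes HtmlAgilityPack is installed.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using HtmlAgilityPack;

public static class MultiPageScraper
{
    // Runs the same XPath query against each page, one page at a time.
    // 'load' turns a URL into a parsed document, so the network call can be
    // swapped for canned HTML while experimenting.
    public static Dictionary<string, List<string>> ScrapeAll(
        IEnumerable<string> urls,
        Func<string, HtmlDocument> load,
        string xpath,
        TimeSpan delay)
    {
        var results = new Dictionary<string, List<string>>();
        foreach (var url in urls)
        {
            var texts = new List<string>();
            var nodes = load(url).DocumentNode.SelectNodes(xpath);
            if (nodes != null) // null means the query matched nothing on this page
            {
                foreach (var node in nodes)
                {
                    texts.Add(node.InnerText.Trim());
                }
            }
            results[url] = texts;

            // Pause between requests to avoid hammering the server.
            Thread.Sleep(delay);
        }
        return results;
    }
}

public static class Program
{
    public static void Main()
    {
        var web = new HtmlWeb();
        var urls = new[]
        {
            "https://en.wikipedia.org/wiki/Greece",
            "https://en.wikipedia.org/wiki/Italy", // second URL chosen only for illustration
        };

        var results = MultiPageScraper.ScrapeAll(
            urls, url => web.Load(url), "//span[@class='toctext']", TimeSpan.FromSeconds(1));

        foreach (var page in results)
        {
            Console.WriteLine($"{page.Key}: {page.Value.Count} titles");
        }
    }
}
```

Passing the loader in as a function keeps the loop itself free of network code, which makes it easy to try out with hand-written HTML first.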
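The limitation with dynamic pages can be illustrated with a small, hand-written example. In the sketch below, the page’s visible text would only appear once a browser runs the script. HtmlAgilityPack parses the markup but does not execute JavaScript, so the element is still empty. StaticHtmlDemo and TextOf are hypothetical names, and the page content is invented for the demonstration.

```csharp
using System;
using HtmlAgilityPack;

public static class StaticHtmlDemo
{
    // Returns the text of the first node matching the XPath query,
    // or an empty string when no node matches.
    public static string TextOf(string html, string xpath)
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(html);
        var node = doc.DocumentNode.SelectSingleNode(xpath);
        return node == null ? "" : node.InnerText;
    }
}

public static class Program
{
    public static void Main()
    {
        // Invented page: the <div> is filled in by the script only when a
        // browser executes it.
        string html =
            "<html><body>" +
            "<div id='prices'></div>" +
            "<script>document.getElementById('prices').textContent = '42 EUR';</script>" +
            "</body></html>";

        string text = StaticHtmlDemo.TextOf(html, "//div[@id='prices']");

        // The parser never ran the script, so the <div> has no text.
        Console.WriteLine(text.Length); // 0
    }
}
```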

Have you ever thought about using a pre-made and more advanced tool to help you scrape en masse?

How about checking out what an API can do for you? Here is a guide written by WebScrapingAPI to help you choose an API that might fit your needs.

See you next time!
