The Beginner-friendly Guide to Web Scraping With Rust

Mihai Maxim on Oct 17 2022

Is Rust a good fit for web scraping?

Rust is a programming language designed for speed and efficiency. Unlike C or C++, Rust features an integrated package manager and build tool. It also has excellent documentation and a friendly compiler with helpful error messages. It does take a while to get used to the syntax, but once you do, you can write complex functionality in just a few lines of code. Web scraping with Rust is an empowering experience. You gain access to powerful scraping libraries that do most of the heavy lifting for you. As a result, you get to spend more time on the fun parts, like designing new features. In this article, I will walk you through the process of building a web scraper with Rust.

How to install Rust

Installing Rust is a pretty straightforward process. Visit the Install Rust page on rust-lang.org and follow the recommended tutorial for your operating system. The page displays different content depending on the operating system you are using. At the end of the installation, open a brand new terminal and run rustc --version. If everything went right, you should see the version number of the installed Rust compiler.

Since we will be building a web scraper, let’s create a Rust project with Cargo. Cargo is Rust’s build system and package manager. If you used the official installers provided by rust-lang.org, Cargo should already be installed. To check, enter the following into your terminal: cargo --version. If you see a version number, you have it! If you see an error, such as command not found, look at the documentation for your method of installation to determine how to install Cargo separately. To create a project, navigate to the desired project location and run cargo new <project name>.

This is the default project structure:

- You write code in .rs files.

- You manage dependencies in the Cargo.toml file.

- Visit crates.io, the Rust package registry, to find packages (crates) for Rust.

Building a web scraper with Rust

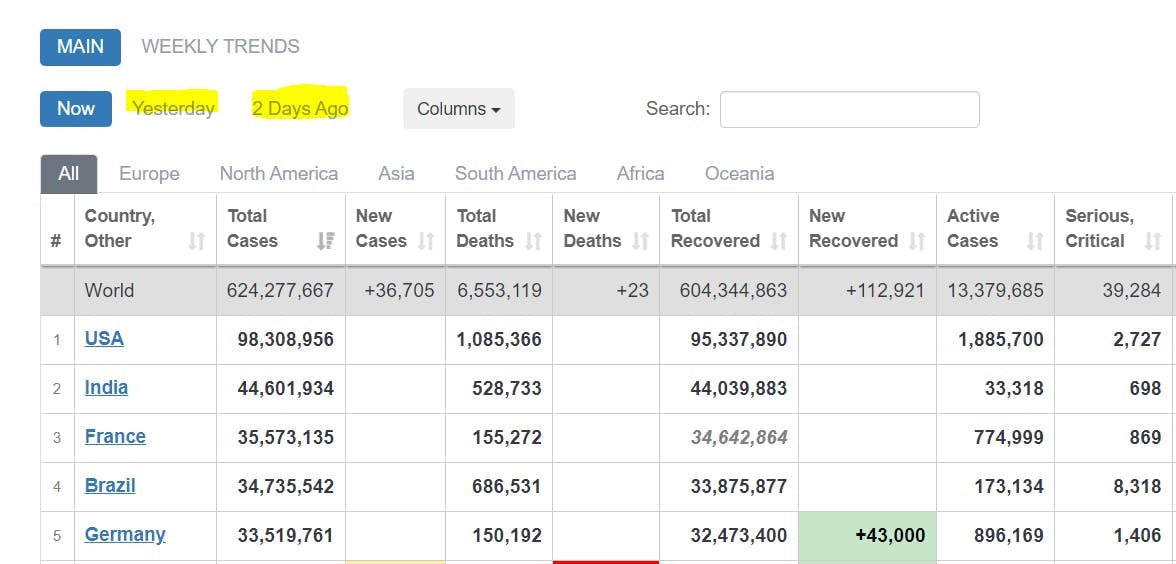

Now let’s have a look at how you could use Rust to build a scraper. The first step is defining a clear purpose: what do you want to extract? The next one is deciding how you want to store the scraped data. Most people save it as .json, but you should pick the format that best suits your needs. With these two requirements figured out, you can confidently move forward with implementing any scraper. To better illustrate this process, I propose we build a small tool that extracts Covid data from the Worldometer COVID Live page (worldometers.info). It should parse the reported cases tables and store the data as .json. We will create this scraper together in the following chapters.

Fetching HTML with HTTP requests

To extract the tables, you'll first need to get the HTML that’s inside the web page. We will use the “reqwest” crate/library to fetch raw HTML from the website.

First, add it as a dependency in the Cargo.toml file, under the [dependencies] section:

reqwest = { version = "0.11", features = ["blocking", "json"] }

Then define your target url and send your request:

let url = "https://www.worldometers.info/coronavirus/";

let response = reqwest::blocking::get(url).expect("Could not load url.");

The "blocking" feature makes the request synchronous: the program waits for the response before it continues with the next instruction. Once the response arrives, read its body as a string:

let raw_html_string = response.text().unwrap();

Using CSS selectors to locate data

You now have all the necessary raw data. Next, you have to find a way to locate the reported cases tables. The most popular Rust library for this type of task is called “scraper”. It enables HTML parsing and querying with CSS selectors.

Add this dependency to your Cargo.toml file:

scraper = "0.13.0"

Import these types at the top of your main.rs file:

use scraper::Selector;

use scraper::Html;

Now use the raw HTML string to create an HTML fragment:

let html_fragment = Html::parse_fragment(&raw_html_string);

We’ll select the tables that display the reported cases for today, yesterday, and two days ago.

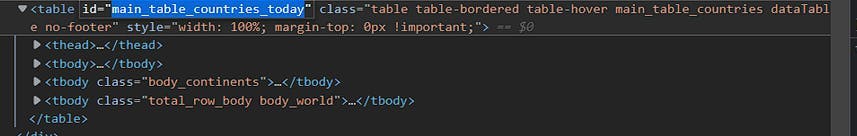

Open the developer console and identify the table ids:

At the time of writing this article, the id for today is: “main_table_countries_today”.

The other two table ids are:

“main_table_countries_yesterday” and “main_table_countries_yesterday2”

Now let’s define some selectors:

let table_selector_string = "#main_table_countries_today, #main_table_countries_yesterday, #main_table_countries_yesterday2";

let table_selector = Selector::parse(table_selector_string).unwrap();

let head_elements_selector = Selector::parse("thead>tr>th").unwrap();

let row_elements_selector = Selector::parse("tbody>tr").unwrap();

let row_element_data_selector = Selector::parse("td, th").unwrap();

Pass the table_selector to the html_fragment select method to get an iterator over all the matching tables:

let all_tables = html_fragment.select(&table_selector);

Using the tables references, create a loop that parses the data from each table.

for table in all_tables{

let head_elements = table.select(&head_elements_selector);

for head_element in head_elements{

//parse the header elements

}

let row_elements = table.select(&row_elements_selector);

for row_element in row_elements{

for td_element in row_element.select(&row_element_data_selector){

//parse the individual row elements

}

}

}

Parsing the data

The format you store the data in dictates the way you parse it. For this project, it is .json. Consequently, we need to put the table data in key-value pairs. We can use the table header names as keys and the table rows as values.

Use the .text() function to extract the headers and store them in a Vector:

//for table in tables loop

let mut head:Vec<String> = Vec::new();

let head_elements = table.select(&head_elements_selector);

for head_element in head_elements{

let mut element = head_element.text().collect::<Vec<_>>().join(" ");

element = element.trim().replace("\n", " ");

head.push(element);

}

//head

["#", "Country, Other", "Total Cases", "New Cases", "Total Deaths", ...]
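One detail worth knowing: joining the text fragments with a space and then replacing "\n" with a space can leave double spaces when a newline sits next to a fragment boundary. The sketch below shows one possible alternative that collapses every run of whitespace instead. It uses only the standard library, and `clean_cell` is a hypothetical helper name, not something the scraper crate provides.

```rust
/// Joins the text fragments of one cell and collapses every run of
/// whitespace (spaces, tabs, newlines) into a single space.
/// `clean_cell` is a hypothetical helper name used for illustration.
fn clean_cell<'a>(fragments: impl IntoIterator<Item = &'a str>) -> String {
    fragments
        .into_iter()
        // split_whitespace also drops leading and trailing whitespace
        .flat_map(|fragment| fragment.split_whitespace())
        .collect::<Vec<_>>()
        .join(" ")
}

fn main() {
    // Fragments as they might come out of a header cell with a line break.
    let header = clean_cell(["Total", "\n", "Cases"]);
    println!("{:?}", header);

    // A single fragment with padding and an embedded newline.
    let padded = clean_cell(["  New\nDeaths  "]);
    println!("{:?}", padded);
}
```

In the scraper itself, the iterator returned by head_element.text() yields string slices, so it could be passed to such a helper directly.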

Extract the row values in a similar manner:

//for table in tables loop

let mut rows:Vec<Vec<String>> = Vec::new();

let row_elements = table.select(&row_elements_selector);

for row_element in row_elements{

let mut row = Vec::new();

for td_element in row_element.select(&row_element_data_selector){

let mut element = td_element.text().collect::<Vec<_>>().join(" ");

element = element.trim().replace("\n", " ");

row.push(element);

}

rows.push(row)

}

//rows

[...

["", "World", "625,032,352", "+142,183", "6,555,767", ...]

...

["2", "India", "44,604,463", "", "528,745", ...]

...]

Use the zip() function to create a matching between header and row values:

for row in rows {

let zipped_array = head.iter().zip(row.iter()).map(|(a, b)|

(a,b)).collect::<Vec<_>>();

}

//zipped_array

[

...

[("#", ""), ("Country, Other", "World"), ("Total Cases", "625,032,352"), ("New Cases", "+142,183"), ("Total Deaths", "6,555,767"), ...]

...

]

Now store the zipped_array (key, value) pairs in an IndexMap. Add these dependencies to your Cargo.toml file:

serde = {version="1.0.0",features = ["derive"]}

indexmap = {version="1.9.1", features = ["serde"]}

Then import IndexMap in main.rs:

use indexmap::IndexMap;

//use this to store all the IndexMaps

let mut table_data:Vec<IndexMap<String, String>> = Vec::new();

for row in rows {

let zipped_array = head.iter().zip(row.iter()).map(|(a, b)|

(a,b)).collect::<Vec<_>>();

let mut item_hash:IndexMap<String, String> = IndexMap::new();

for pair in zipped_array{

//we only want the non empty values

if !pair.1.to_string().is_empty(){

item_hash.insert(pair.0.to_string(), pair.1.to_string());

}

}

table_data.push(item_hash);

}

//table_data

[

...

{"Country, Other": "North America", "Total Cases": "116,665,220", "Total Deaths": "1,542,172", "Total Recovered": "111,708,347", "New Recovered": "+2,623", "Active Cases": "3,414,701", "Serious, Critical": "7,937", "Continent": "North America"}

,

{"Country, Other": "Asia", "Total Cases": "190,530,469", "New Cases": "+109,009", "Total Deaths": "1,481,406", "New Deaths": "+177", "Total Recovered": "184,705,387", "New Recovered": "+84,214", "Active Cases": "4,343,676", "Serious, Critical": "10,640", "Continent": "Asia"}

...

]

IndexMap is a great choice for storing the table data because it preserves the insertion order of the (key, value) pairs, so every record keeps the column order of the table. The standard HashMap makes no such guarantee.
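The zip-and-filter step can also be tried in isolation. The following is a minimal sketch that uses only the standard library: a Vec of tuples stands in for IndexMap (both keep insertion order), and `row_to_pairs` is a hypothetical helper name. It also makes one property of zip() visible: zip stops at the shorter input, so a row with fewer cells than the header simply yields fewer pairs.

```rust
/// Pairs each header with the cell in the same column and drops empty cells.
/// A Vec of tuples stands in for IndexMap so the sketch needs no crates;
/// `row_to_pairs` is a hypothetical helper name.
fn row_to_pairs(head: &[String], row: &[String]) -> Vec<(String, String)> {
    head.iter()
        .zip(row.iter()) // stops at the shorter of the two slices
        .filter(|(_, value)| !value.is_empty()) // keep only non-empty values
        .map(|(key, value)| (key.clone(), value.clone()))
        .collect()
}

fn main() {
    let head: Vec<String> = ["#", "Country, Other", "Total Cases", "New Cases"]
        .iter()
        .map(|s| s.to_string())
        .collect();
    // The "New Cases" cell is empty, so it is left out of the result.
    let row: Vec<String> = ["2", "India", "44,604,463", ""]
        .iter()
        .map(|s| s.to_string())
        .collect();

    for (key, value) in row_to_pairs(&head, &row) {
        println!("{}: {}", key, value);
    }
}
```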

Serializing the data

Now that you can create json-like objects with table data, it is time to serialize them to .json. Before we begin, make sure that you have all these dependencies installed:

serde = {version="1.0.0",features = ["derive"]}

serde_json = "1.0.85"

indexmap = {version="1.9.1", features = ["serde"]}

Store each table_data in a tables_data Vector:

let mut tables_data: Vec<Vec<IndexMap<String, String>>> = Vec::new();

For each table:

//fill table_data (see previous chapter)

tables_data.push(table_data);

Define a struct container for the tables_data:

use serde::Serialize;

#[derive(Serialize)]

struct FinalTableObject {

//the type must match tables_data: one Vec of records per table

tables: Vec<Vec<IndexMap<String, String>>>,

}

Instantiate the struct:

let final_table_object = FinalTableObject{tables: tables_data};

Serialize the struct to a .json string:

let serialized = serde_json::to_string_pretty(&final_table_object).unwrap();

Write the serialized .json string to a .json file:

use std::fs::File;

use std::io::{Write};

let path = "out.json";

let mut output = File::create(path).unwrap();

let result = output.write_all(serialized.as_bytes());

match result {

Ok(()) => println!("Successfully wrote to {}", path),

Err(e) => println!("Failed to write to file: {}", e),

}
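If you prefer to propagate I/O errors instead of unwrapping and matching, the write step can be expressed with the `?` operator. This is only a sketch of that alternative: `write_output` is a hypothetical helper name, the string is a stand-in for the output of serde_json::to_string_pretty, and the file goes to the system temp directory purely so the example can run anywhere.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Writes the serialized string to `path`, creating or truncating the file.
/// `write_output` is a hypothetical helper name used for illustration.
fn write_output(path: &Path, serialized: &str) -> io::Result<()> {
    fs::write(path, serialized)
}

fn main() -> io::Result<()> {
    // Stand-in for the string produced by serde_json::to_string_pretty.
    let serialized = "{\n  \"tables\": []\n}";
    let path = std::env::temp_dir().join("out.json");

    // `?` returns early with the error instead of panicking.
    write_output(&path, serialized)?;
    println!("Successfully wrote to {}", path.display());
    Ok(())
}
```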

Aaaand, you’re done. If everything went right, your output .json should look like:

{

"tables": [

[ //table data for #main_table_countries_today

{

"Country, Other": "North America",

"Total Cases": "116,665,220",

"Total Deaths": "1,542,172",

"Total Recovered": "111,708,347",

"New Recovered": "+2,623",

"Active Cases": "3,414,701",

"Serious, Critical": "7,937",

"Continent": "North America"

},

...

],

[...table data for #main_table_countries_yesterday...],

[...table data for #main_table_countries_yesterday2...]

]

}

You can find the whole code for the project at [Rust][A simple <table> scraper] (github.com)

Making adjustments to fit other use cases

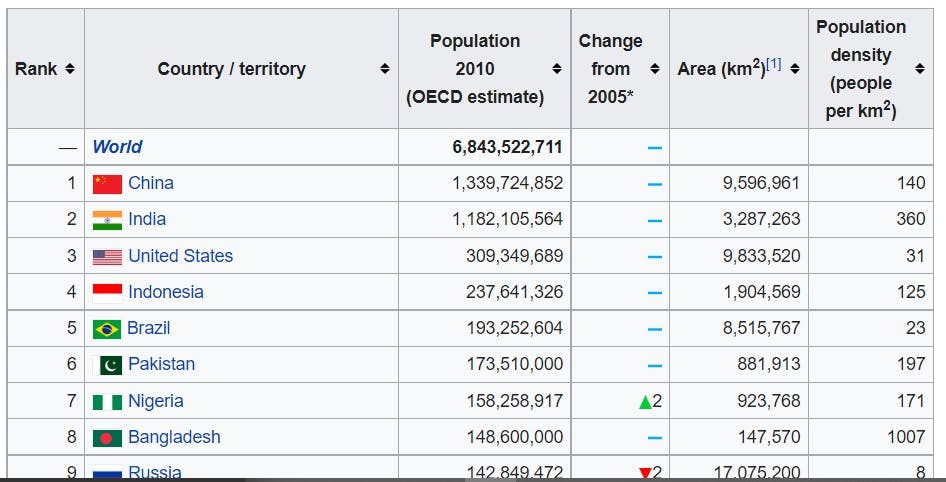

If you followed me this far, you've probably realized that you can use this scraper on other websites. The scraper is not bound to a specific table column count or naming convention. Also, it does not rely on many CSS selectors. So it should not take a lot of tweaking to make it work for other tables, right? Let's test this theory on a Wikipedia page: https://en.wikipedia.org/wiki/List_of_countries_by_population_in_2010.

We need a selector for the <table> tag.

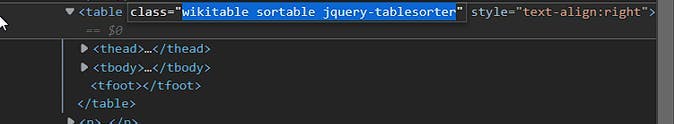

In the browser, the table has class="wikitable sortable jquery-tablesorter", so you could change the table_selector to:

let table_selector_string = ".wikitable.sortable.jquery-tablesorter";

let table_selector = Selector::parse(table_selector_string).unwrap();

This table has the same <thead> <tbody> structure, so there is no reason to change the other selectors.

The scraper should work now. Let’s give it a test run:

{

"tables": []

}

Web scraping with Rust is fun, isn’t it?

How could this fail?

Let’s dig a little deeper:

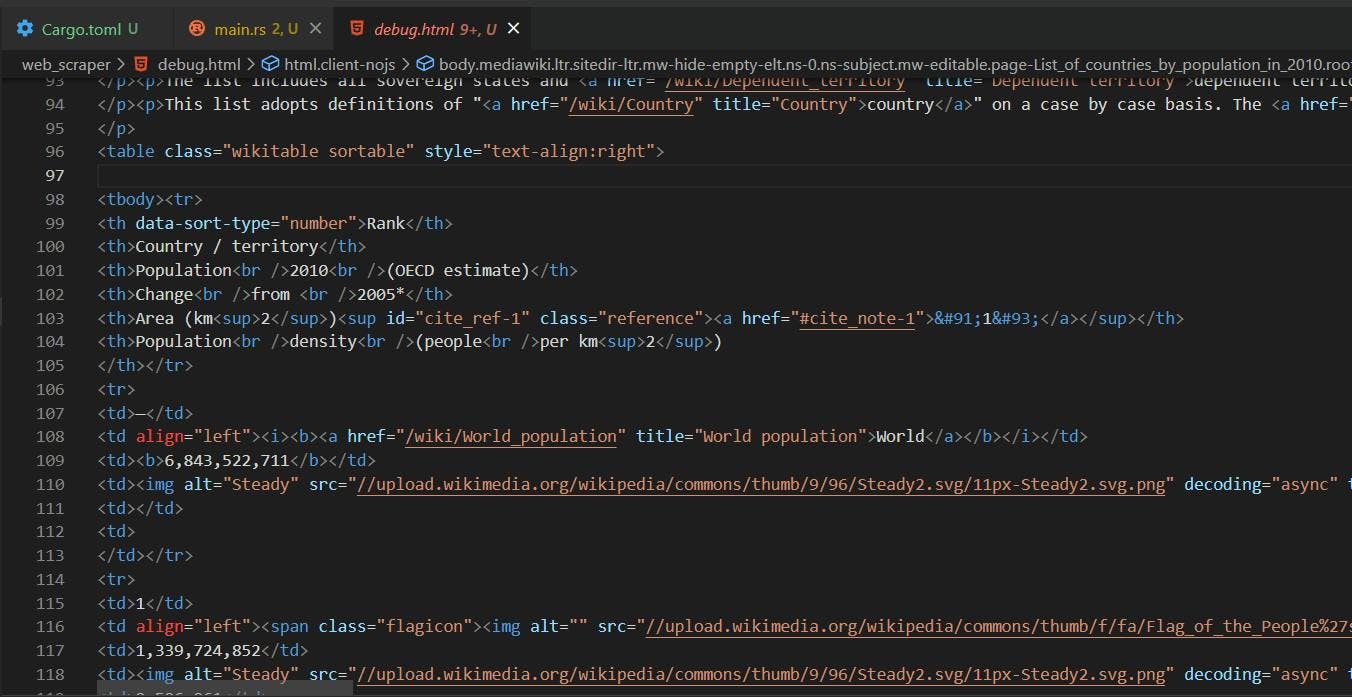

The easiest way to figure out what went wrong is to look at the HTML that is returned from the GET request:

let url = "https://en.wikipedia.org/wiki/List_of_countries_by_population_in_2010";

let response = reqwest::blocking::get(url).expect("Could not load url.");

let raw_html_string = response.text().unwrap();

let path = "debug.html";

let mut output = File::create(path).unwrap();

let result = output.write_all(raw_html_string.as_bytes());

The HTML returned from the GET request is different from the one we see on the actual website. The browser offers an environment for JavaScript to run and alter the page's layout. Our scraper does not run JavaScript, so it gets the unaltered version of the page.

Our table_selector did not work because the “jquery-tablesorter” class is injected dynamically by JavaScript. Also, you can see that the <table> structure is different. The <thead> tag is missing. The table head elements are now found in the first <tr> of the <tbody>. Thus, they will be picked up by the row_elements_selector.
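A quick way to confirm this kind of mismatch without opening debug.html is to check which class names actually occur in the raw response. The sketch below is a rough debugging aid under stated assumptions: it does a plain substring search rather than real HTML parsing, `classes_present` is a hypothetical helper name, and the HTML string is a shortened stand-in for the real response body.

```rust
/// Reports which of the given class names occur anywhere in the raw HTML.
/// This is a plain substring search, so treat it as a rough debugging aid;
/// `classes_present` is a hypothetical helper name.
fn classes_present<'a>(raw_html: &str, classes: &[&'a str]) -> Vec<&'a str> {
    classes
        .iter()
        .copied()
        .filter(|class| raw_html.contains(*class))
        .collect()
}

fn main() {
    // A shortened stand-in for the response body; the real page is much larger.
    let raw_html =
        r#"<table class="wikitable sortable"><tbody><tr><th>Rank</th></tr></tbody></table>"#;

    let found = classes_present(raw_html, &["wikitable", "sortable", "jquery-tablesorter"]);
    // Classes missing from this list were most likely added by JavaScript.
    println!("{:?}", found);
}
```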

Removing “jquery-tablesorter” from the table_selector is not enough. We also need to handle the missing <thead> case:

let table_selector_string = ".wikitable.sortable";

//for table in tables loop, after head and rows are filled

//take the first row values as head if there is no <thead>

if head.is_empty() {

head=rows[0].clone();

rows.remove(0);

}

Now let’s give it another spin:

{

"tables": [

[

{

"Rank": "--",

"Country / territory": "World",

"Population 2010 (OECD estimate)": "6,843,522,711"

},

{

"Rank": "1",

"Country / territory": "China",

"Population 2010 (OECD estimate)": "1,339,724,852",

"Area (km 2 ) [1]": "9,596,961",

"Population density (people per km 2 )": "140"

},

{

"Rank": "2",

"Country / territory": "India",

"Population 2010 (OECD estimate)": "1,182,105,564",

"Area (km 2 ) [1]": "3,287,263",

"Population density (people per km 2 )": "360"

},

...

]

]

}

That’s better!
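Note that the rows[0] fallback used above panics if a matched table has no rows at all. A slightly more defensive variant could look like the sketch below. It is one possible shape, not the project's actual code, and `promote_first_row` is a hypothetical helper name.

```rust
/// If no header cells were found, promotes the first row to the header.
/// Returns the inputs unchanged when there are no rows, so it never panics.
/// `promote_first_row` is a hypothetical helper name.
fn promote_first_row(
    head: Vec<String>,
    mut rows: Vec<Vec<String>>,
) -> (Vec<String>, Vec<Vec<String>>) {
    if head.is_empty() && !rows.is_empty() {
        // Vec::remove returns the removed element, so no clone is needed.
        let first = rows.remove(0);
        (first, rows)
    } else {
        (head, rows)
    }
}

fn main() {
    let rows = vec![
        vec!["Rank".to_string(), "Country / territory".to_string()],
        vec!["1".to_string(), "China".to_string()],
    ];
    // An empty head simulates a table without <thead>.
    let (head, rows) = promote_first_row(Vec::new(), rows);
    println!("head = {:?}", head);
    println!("rows = {:?}", rows);
}
```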

Summary

I hope that this article provides a good reference point for web scraping with Rust. Even though Rust’s rich type system and ownership model can be a little overwhelming, it is by no means unfit for web scraping. You get a friendly compiler that constantly points you in the right direction. You also get a lot of well-written documentation, such as The Rust Programming Language book (rust-lang.org).

Building a web scraper is not always a straightforward process. You will face JavaScript rendering, IP blocks, captchas, and many other setbacks. At WebScrapingAPI, we provide you with all the necessary tools to combat these common issues. Are you curious to find out how it works? You can try our product for free at WebScrapingAPI - Product. Or you can contact us at WebScrapingAPI - Contact. We are more than happy to answer all your questions!
