The Ultimate Guide On How To Start Web Scraping With Go

Sorin-Gabriel Marica on Oct 14 2022

Web scraping with Go is a great way to create a fast and powerful scraper, because Go is one of the best programming languages you can use for concurrency. But before jumping right into it, let me first explain what web scraping is and how it can help you.

Web scraping is the process of extracting data from websites. This process can be done manually, but this approach is not recommended when dealing with a lot of data. In this article we will explore how you can build your own automated web scraper from scratch with Go.

If you’re new to this, you may wonder what web scraping is used for. Here is a short list of some of the most common use cases:

- Price Comparison Tools - You can build many tools using a web scraper. One of the most common and useful ones is a price comparison tool. Such a tool would scrape the prices for a product from many sources, and display the best deal possible.

- Machine Learning - If you want to build a model using machine learning, you will need a training dataset. While sometimes you may find existing datasets that you can use, oftentimes you will need to do some extra work, and get the data that you need yourself.

- Market Research - A third use case is scraping information from the internet to find out who your competitors are and what they are doing. This way you can keep up or stay ahead of the competition, being aware of any new feature that they might have added.

What you will need for scraping data with Go

Before we begin, you will need to be able to run Go code on your machine. All that takes is installing Go, if you haven’t already. You can find more details on how to install Go, and how to check whether you already have it installed, over here.

The other thing you will need is an IDE or a text editor of your choice to write the code in. I prefer Visual Studio Code, but feel free to use whatever you see fit.

And that’s it. Pretty simple, right? Now let’s dive into the main topic of this article: web scraping with Go.

Build a web scraper using Go

To build our scraper, we first need a purpose: a set of data that we want to collect from a specific source. As the topic for our scraper, I chose scraping the weekly downloads of the first page of packages on npmjs.com that use the keyword “framework”. You can find them on this page: https://www.npmjs.com/search?q=keywords:framework&page=0&ranking=optimal

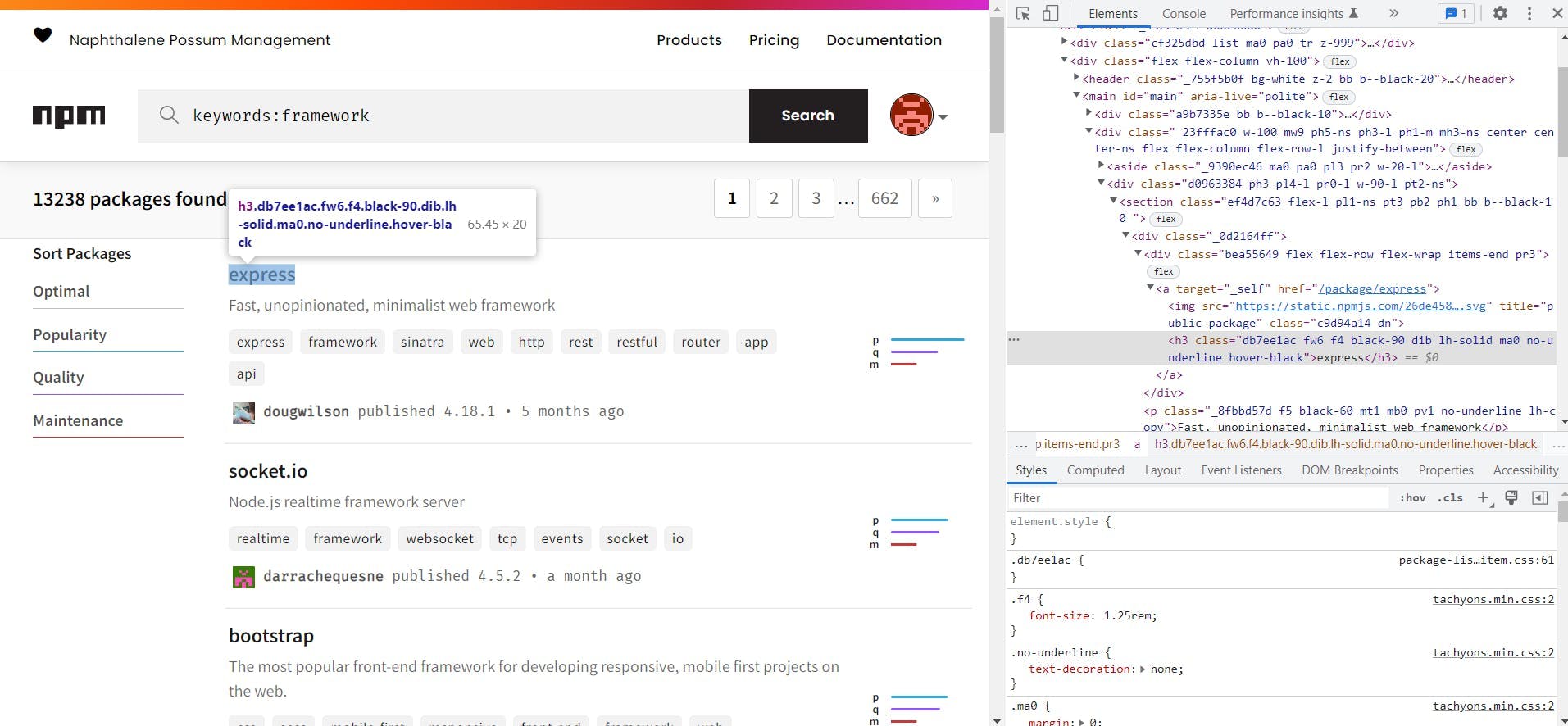
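The search URL keeps its parameters in the query string (q, page and ranking). If you later want to request other result pages, one option is to build the URL with Go's standard net/url package instead of formatting strings by hand. This is a minimal sketch. The searchURL helper is hypothetical, and it assumes that npm treats the percent-encoded q value (keywords%3Aframework) the same as the literal form shown above, which is what standard URL encoding implies.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// searchURL builds an npm search URL for a keyword and a zero-based results page.
// Hypothetical helper for illustration; it is not part of Colly or npm.
func searchURL(keyword string, page int) string {
	u := url.URL{Scheme: "https", Host: "www.npmjs.com", Path: "/search"}
	q := url.Values{}
	q.Set("q", "keywords:"+keyword)
	q.Set("page", strconv.Itoa(page))
	q.Set("ranking", "optimal")
	// Encode sorts the keys and percent-escapes reserved characters such as ':'
	u.RawQuery = q.Encode()
	return u.String()
}

func main() {
	fmt.Println(searchURL("framework", 0))
	// https://www.npmjs.com/search?page=0&q=keywords%3Aframework&ranking=optimal
}
```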

Inspect the contents of the page you want to scrape

To do scraping right, before you start actually extracting the data, you will need to see where the data is. By this I mean that you will need to build the HTML selectors to query the data, based on the HTML structure of the page.

To see the HTML structure of the page, you can use the developer tools available in most modern browsers. In Chrome, right-click the element you want to extract and choose “Inspect”. Once you do that, you will see something like this:

Based on the HTML you can see on the right (the inspect window), we can now build the selectors we will use. From this page, we only need the URL of each package.

Looking over the HTML, we can see that the CSS class names used by the website are auto-generated. That makes them unreliable for scraping, so we will use the HTML tags instead. On the page, each package sits in a <section> tag, and the link to the package is inside the first div of the first div of that section.

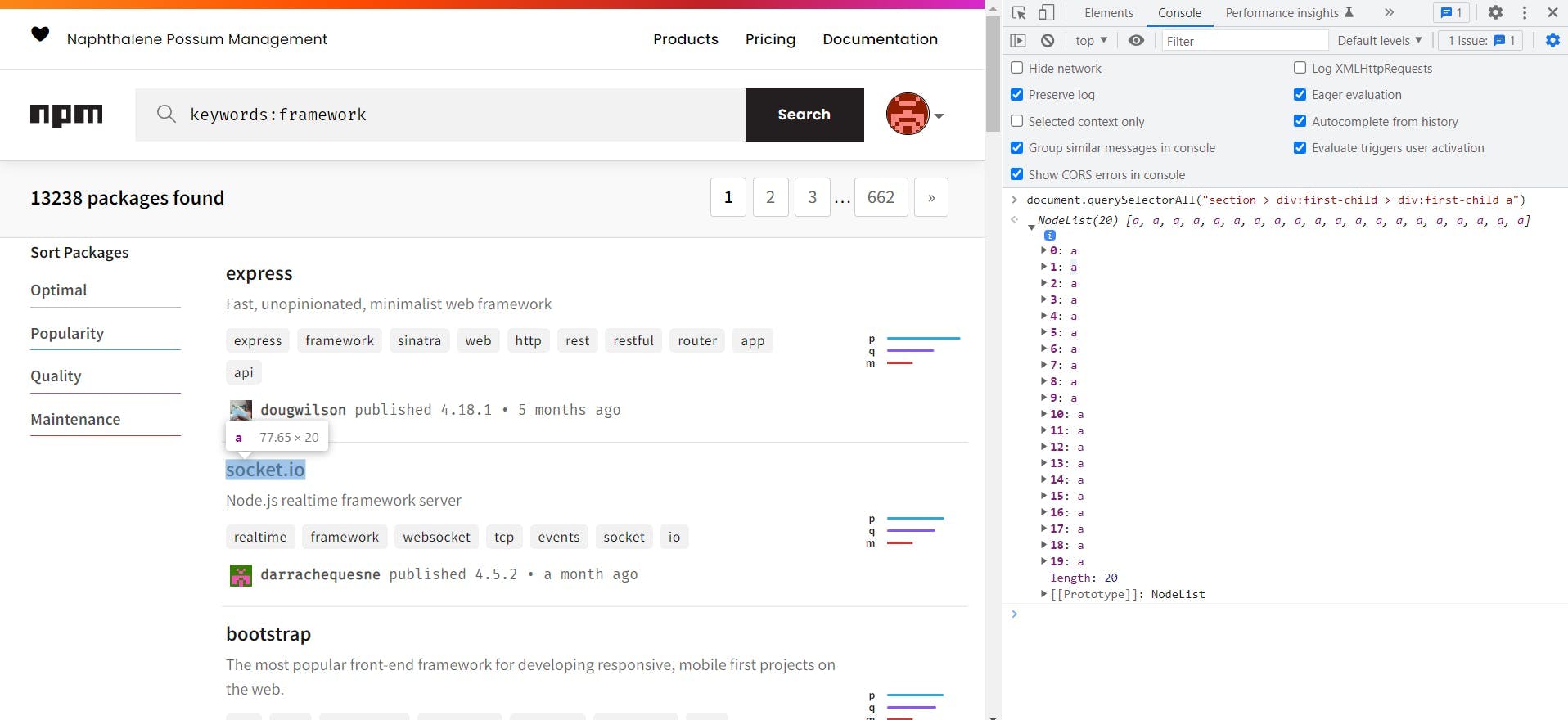

Knowing this, we can build the following selector to extract the links of all the packages: section > div:first-child > div:first-child a. Before trying it in code, we can test the selector in the browser's developer tools. To do this, go to the Console tab and run document.querySelectorAll("{{ SELECTOR }}"), replacing {{ SELECTOR }} with the selector above:

By hovering over each element in the returned NodeList, we can see that they are exactly the ones we were looking for, so we can use this selector.

Scraping a page using Go

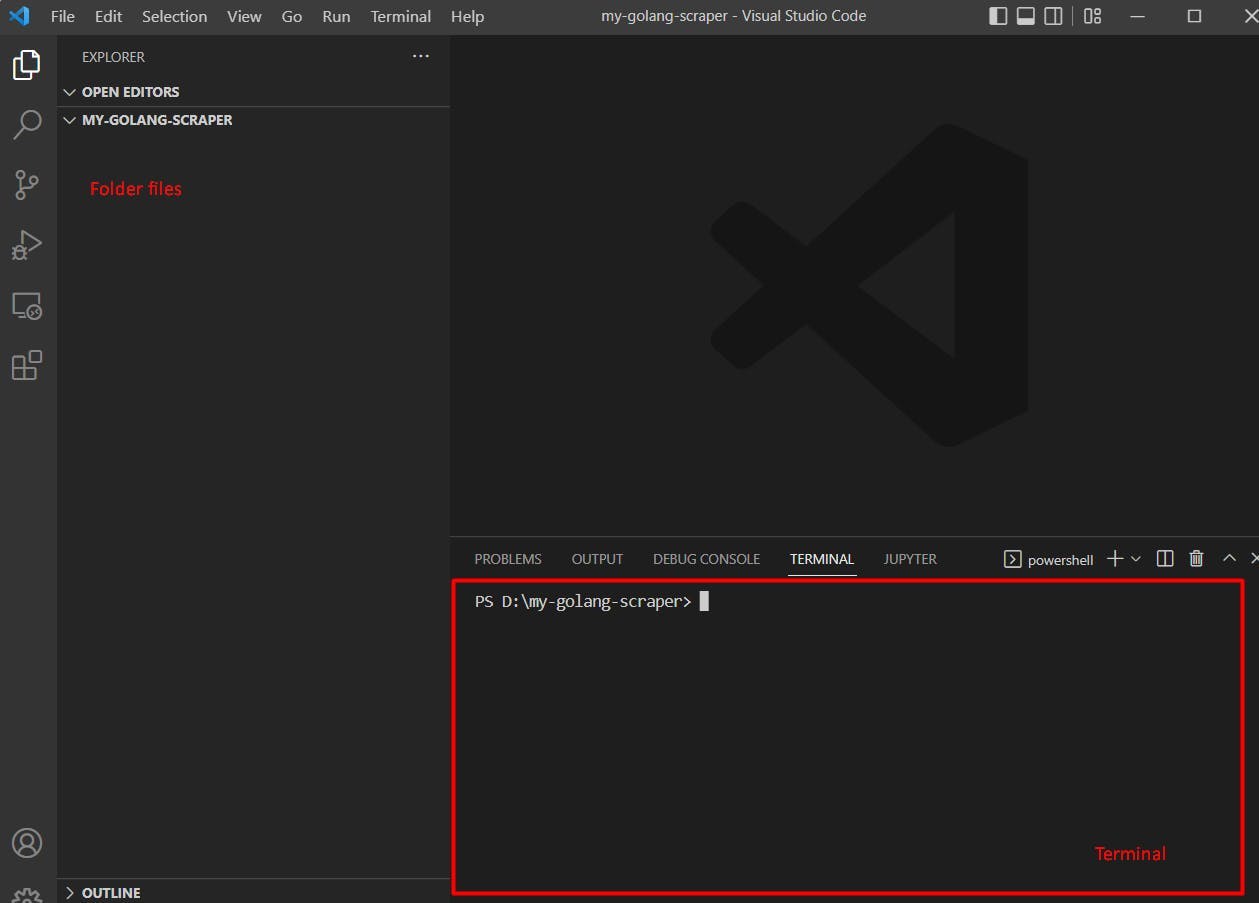

We’re finally starting to build the scraper! First, create a folder to hold all our code. Next, open a terminal window, either from your IDE or from your operating system, and navigate to that folder.

To open a terminal in the folder from Visual Studio Code, click Terminal -> New Terminal in the top bar.

Now that we have our terminal opened, it’s time to initialize the project. You can do this by running the command:

go mod init webscrapingapi.com/my-go-scraper

This will create a file called go.mod in your folder, with contents similar to the following (the go line depends on the Go version you have installed):

module webscrapingapi.com/my-go-scraper

go 1.19

To request the page and extract the elements that match our selectors, we will use Colly, a Go scraping framework (check out the Colly documentation for more info). To install this package, run:

go get github.com/gocolly/colly

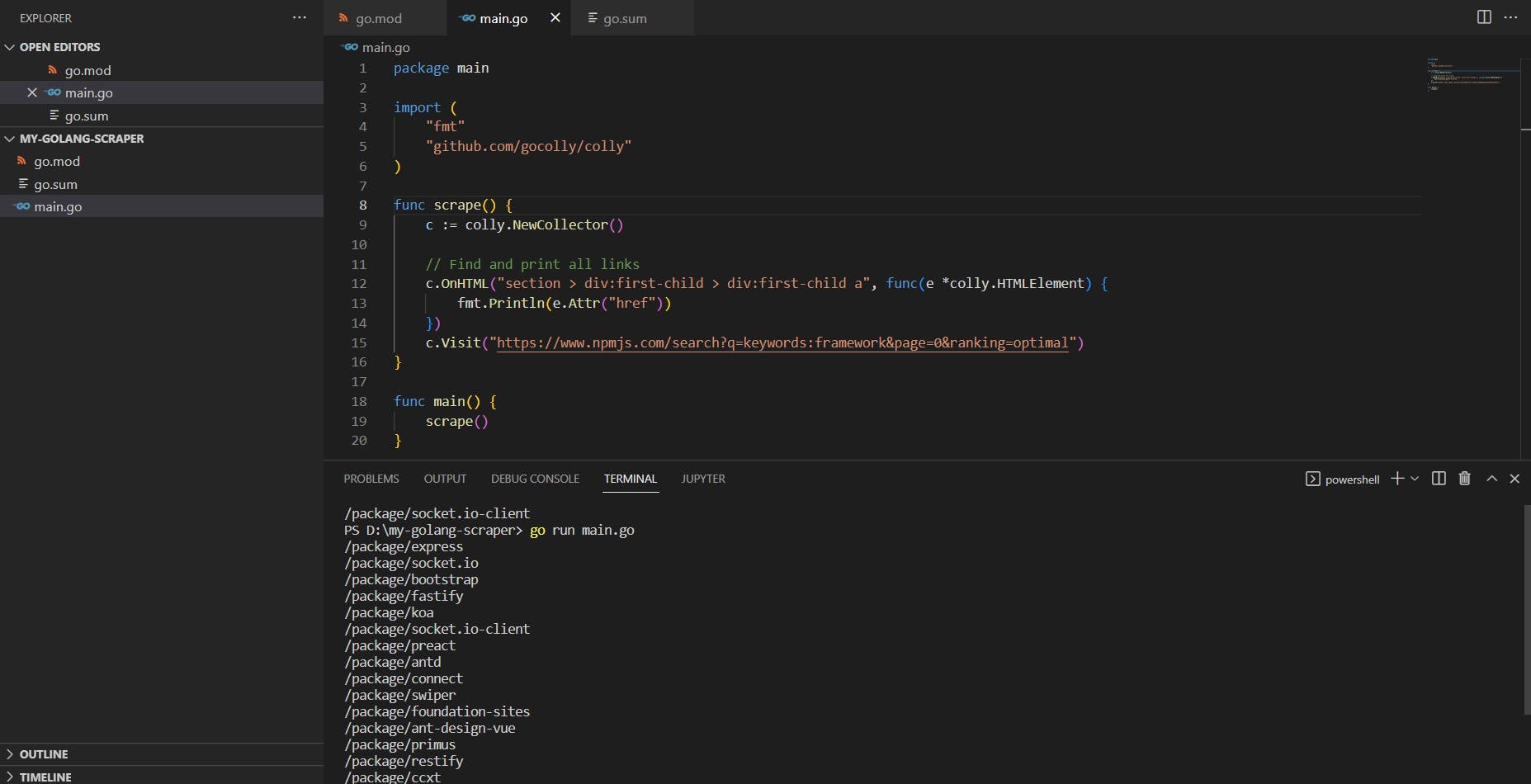

Now that everything is prepared, all we need to do is create our main.go file and write some code. The code for extracting all the links from the first page of npm frameworks is the following:

package main

import (
	"fmt"

	"github.com/gocolly/colly"
)

func scrape() {
	c := colly.NewCollector()

	// Find and print all package links
	c.OnHTML("section > div:first-child > div:first-child a", func(e *colly.HTMLElement) {
		fmt.Println(e.Attr("href"))
	})

	// Visit fetches the page and runs the OnHTML callback for every matching element
	if err := c.Visit("https://www.npmjs.com/search?q=keywords:framework&page=0&ranking=optimal"); err != nil {
		fmt.Println("visit failed:", err)
	}
}

func main() {
	scrape()
}

If this seems hard to read at first, don’t worry, we will break it down in the following paragraphs and explain it.

Every Go file starts with a package clause, followed by the imports it uses. In this case, the two packages we use are “fmt”, to print the links we scrape, and “colly”, for the actual scraping.

Next, we created the scrape() function, which takes care of scraping the links we need. It creates a Colly collector and registers an OnHTML callback for the selector we decided on. When c.Visit() fetches the search page, Colly runs that callback once for every element matching the selector, and the callback prints the element's href attribute.

The last part is the main function, the entry point that runs every time you execute a Go program. To execute the code, run go run main.go from your terminal, and you should get the following output:

As you can see, the href attributes contain relative paths, so we will need to prefix them with the npmjs URL (https://www.npmjs.com).
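String concatenation works for this page. A more general option is to resolve each href against the URL of the page it came from, which also handles links that are already absolute. Here is a minimal sketch using the standard net/url package. The absoluteURL helper is hypothetical, and /package/express is only an assumed example of the href form. Colly also provides e.Request.AbsoluteURL(href) for the same job inside a callback.

```go
package main

import (
	"fmt"
	"net/url"
)

// absoluteURL resolves a possibly relative href against the page it was found on.
func absoluteURL(pageURL, href string) (string, error) {
	base, err := url.Parse(pageURL)
	if err != nil {
		return "", err
	}
	ref, err := url.Parse(href)
	if err != nil {
		return "", err
	}
	// ResolveReference follows RFC 3986: a path like "/package/x" keeps the base scheme and host
	return base.ResolveReference(ref).String(), nil
}

func main() {
	page := "https://www.npmjs.com/search?q=keywords:framework&page=0&ranking=optimal"
	link, err := absoluteURL(page, "/package/express")
	if err != nil {
		fmt.Println("bad URL:", err)
		return
	}
	fmt.Println(link) // https://www.npmjs.com/package/express
}
```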

Use GoLang’s concurrency for efficiency

One of the coolest features of Go is goroutines, which are lightweight threads managed by the Go runtime. They let us scrape many URLs at the same time instead of one after another, which makes the scraper much faster.

Previously, we extracted the links of the first 20 packages for the keyword “framework” on npmjs.com. Now we will scrape all of these links at the same time and extract the weekly downloads for each of them. To do that, we will use goroutines and WaitGroups.

Here is the final code that extracts the weekly downloads using goroutines:

package main

import (
	"fmt"
	"sync"

	"github.com/gocolly/colly"
)

func scrapeWeeklyDownloads(url string, wg *sync.WaitGroup) {
	// Tell the WaitGroup this goroutine is finished when the function returns
	defer wg.Done()

	c := colly.NewCollector()

	// Find and print the weekly downloads value
	c.OnHTML("main > div > div:last-child > div:not([class]) p", func(e *colly.HTMLElement) {
		fmt.Printf("%s - %s\n", url, e.Text)
	})

	if err := c.Visit(url); err != nil {
		fmt.Println("visit failed:", url, err)
	}
}

func scrape() {
	c := colly.NewCollector()
	var wg sync.WaitGroup

	// For every package link, start a goroutine that scrapes the package page
	c.OnHTML("section > div:first-child > div:first-child a", func(e *colly.HTMLElement) {
		wg.Add(1)
		go scrapeWeeklyDownloads("https://www.npmjs.com"+e.Attr("href"), &wg)
	})

	if err := c.Visit("https://www.npmjs.com/search?q=keywords:framework&page=0&ranking=optimal"); err != nil {
		fmt.Println("visit failed:", err)
	}

	// Block until every scrapeWeeklyDownloads goroutine has called wg.Done
	wg.Wait()
}

func main() {
	scrape()
}

Now let’s discuss what was added to our previous code. First, you will notice a new import, the “sync” package. It provides WaitGroup, which lets the program wait for all the goroutines to finish before it stops executing.

The next addition is the new function called “scrapeWeeklyDownloads”. This function accepts two parameters: the URL of the page we’re going to scrape and a WaitGroup pointer. It visits the given URL and extracts the weekly downloads using the selector main > div > div:last-child > div:not([class]) p. The deferred wg.Done() call tells the WaitGroup that this goroutine has finished.

The last changes are in the scrape function, where we created a WaitGroup using var wg sync.WaitGroup. For each link on the packages page, we call wg.Add(1) and then start a goroutine that calls the scrapeWeeklyDownloads function. At the end of the function, wg.Wait() blocks until all the goroutines have finished executing.

For more information on WaitGroups please check out this example from golang.
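The weekly downloads value that scrapeWeeklyDownloads prints (e.Text) is a string. If you want to sort or compare packages by popularity, you need to convert it to a number first. Here is a minimal sketch, assuming the count is rendered with comma thousands separators (for example 1,234,567) and no other formatting. parseDownloads is a hypothetical helper and is not part of Colly.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseDownloads converts a count such as "1,234,567" into an int.
// Assumption: commas are the only separators and the text has no units or suffixes.
func parseDownloads(text string) (int, error) {
	cleaned := strings.ReplaceAll(strings.TrimSpace(text), ",", "")
	return strconv.Atoi(cleaned)
}

func main() {
	n, err := parseDownloads(" 1,234,567 ")
	if err != nil {
		fmt.Println("could not parse:", err)
		return
	}
	fmt.Println(n) // 1234567
}
```

If the page text ever has a different format, strconv.Atoi returns an error instead of a wrong number, so the scraper can log and skip that package.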

Why goroutines and WaitGroups?

By using concurrency in Go with goroutines and WaitGroups, we can create a very fast scraper. Running the previous code sample will print each package page along with its weekly downloads. However, because the pages are scraped concurrently, the order of the results is unpredictable: each goroutine prints as soon as its own request completes, and requests finish at different speeds.
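If you need a stable order, you can collect the results while the goroutines run and sort them after wg.Wait(). This is a sketch of that approach, not the article's code. It uses a mutex to protect the shared slice. fetchAll and the fetch parameter are hypothetical, and fetch stands in for the work scrapeWeeklyDownloads does, so the example runs without network access.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// result pairs a URL with the value scraped from it.
type result struct {
	URL   string
	Value string
}

// fetchAll runs fetch concurrently for every URL, then returns the results
// sorted by URL, so the output order no longer depends on goroutine timing.
func fetchAll(urls []string, fetch func(string) string) []result {
	var (
		wg      sync.WaitGroup
		mu      sync.Mutex
		results []result
	)
	for _, u := range urls {
		wg.Add(1)
		go func(u string) {
			defer wg.Done()
			v := fetch(u)
			// The mutex serializes appends coming from concurrent goroutines
			mu.Lock()
			results = append(results, result{URL: u, Value: v})
			mu.Unlock()
		}(u)
	}
	wg.Wait()
	sort.Slice(results, func(i, j int) bool { return results[i].URL < results[j].URL })
	return results
}

func main() {
	urls := []string{"https://example.com/c", "https://example.com/a", "https://example.com/b"}
	// Stand-in for a real page fetch
	fake := func(u string) string { return "downloads for " + u }
	for _, r := range fetchAll(urls, fake) {
		fmt.Printf("%s - %s\n", r.URL, r.Value)
	}
}
```

A channel that a single goroutine reads from would work equally well. The mutex version just keeps the change to the original structure small.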

If you’re running the code on Linux or Windows Subsystem for Linux (WSL), you can use time go run main.go to see the execution time of the whole script. For me, the execution time is around 5 to 6 seconds. This is very fast, considering we’re scraping 21 pages: first the page with the packages, and then the page of each package.

Other obstacles

Most scrapers rely on a simple HTTP request to the page to get the content they need. This approach works well, but some websites render their information with JavaScript. That means the website will first show you only part of its content, loading the rest dynamically through JavaScript.

To scrape such pages, you will need to control a real Chrome browser, for example through ChromeDriver. There are some options for doing this in Go, but the topic needs some extra research beyond this article.

Even with JavaScript rendering covered, there are still other obstacles when scraping a website. Some websites use anti-bot detection, IP blocking or CAPTCHAs to prevent bots from scraping their content. To scrape those websites anyway, you can try some tips and tricks for web scraping, such as slowing your scraper down and making it act more like a human visitor.

But if you want to keep your scraper fast and tackle these obstacles in an easy way, you can use WebScrapingAPI. WebScrapingAPI is an API designed to help you with scraping by rotating your IP and avoiding anti-bot detection. This way you can keep the speed that Go provides and scrape your data in no time.

Conclusion on Web Scraping with Go

Web scraping is a fast and convenient way to extract data from the internet, and it has many different use cases. You can extract data for your machine learning model, or build an application from scratch using the data you scraped.

GoLang is one of the best solutions out there when it comes to concurrency. Using Go and Colly, you can build a fast scraper that will bring you your data in no time. This makes web scraping with Go very easy and efficient once you get used to Go’s syntax.
