The Ultimate Guide to Web Scraping Walmart

Raluca Penciuc on Feb 23 2023

Web scraping Walmart is a popular topic among data enthusiasts and businesses alike. Walmart is one of the largest retail companies in the world, with a vast amount of data available on its website. By scraping this data, you can gain valuable insights into consumer behavior, market trends, and much more.

In this article, we will explore the process of web scraping Walmart using TypeScript and Puppeteer. We will go over the environment setup, identify the data we want, and extract it for use in your own projects. Finally, we will discuss how a professional scraper may be a more effective and reliable solution.

By the end of this guide, you'll have a solid understanding of the process and be able to use it to improve your business or research. Whether you are a data scientist, a marketer, or a business owner, this guide will help you harness the power of Walmart's data to drive your success.

Prerequisites

Before we begin, let's make sure we have the necessary tools in place.

First, download and install Node.js from the official website, making sure to use the Long-Term Support (LTS) version. This will also automatically install Node Package Manager (NPM) which we will use to install further dependencies.

For this tutorial, we will be using Visual Studio Code as our Integrated Development Environment (IDE) but you can use any other IDE of your choice. Create a new folder for your project, open the terminal, and run the following command to set up a new Node.js project:

npm init -y

This will create a package.json file in your project directory, which will store information about your project and its dependencies.

Next, we need to install TypeScript and the type definitions for Node.js. TypeScript offers optional static typing, which helps catch errors before the code runs. To install both, run the following in the terminal:

npm install typescript @types/node --save-dev

You can verify the installation by running:

npx tsc --version

TypeScript uses a configuration file called tsconfig.json to store compiler options and other settings. To create this file in your project, run the following command:

npx tsc --init

Make sure that the value for “outDir” is set to “dist”. This way we will separate the TypeScript files from the compiled ones. You can find more information about this file and its properties in the official TypeScript documentation.

Now, create an “src” directory in your project, and a new “index.ts” file inside it. This is where we will keep the scraping code. TypeScript code has to be compiled before it can be executed, so to make sure that we don’t forget this extra step, we can define a custom command.

Head over to the “package.json” file, and edit the “scripts” section like this:

"scripts": {
    "test": "npx tsc && node dist/index.js"
}

This way, when you want to execute the script, you just have to type “npm run test” in your terminal.

Finally, to scrape the data from the website we will be using Puppeteer, a headless browser library for Node.js that allows you to control a web browser and interact with websites programmatically. To install it, run this command in the terminal:

npm install puppeteer

Puppeteer is highly recommended when you want to ensure the completeness of your data, as many websites today contain dynamically generated content. If you’re curious, you can check out the Puppeteer documentation before continuing to see what it’s fully capable of.

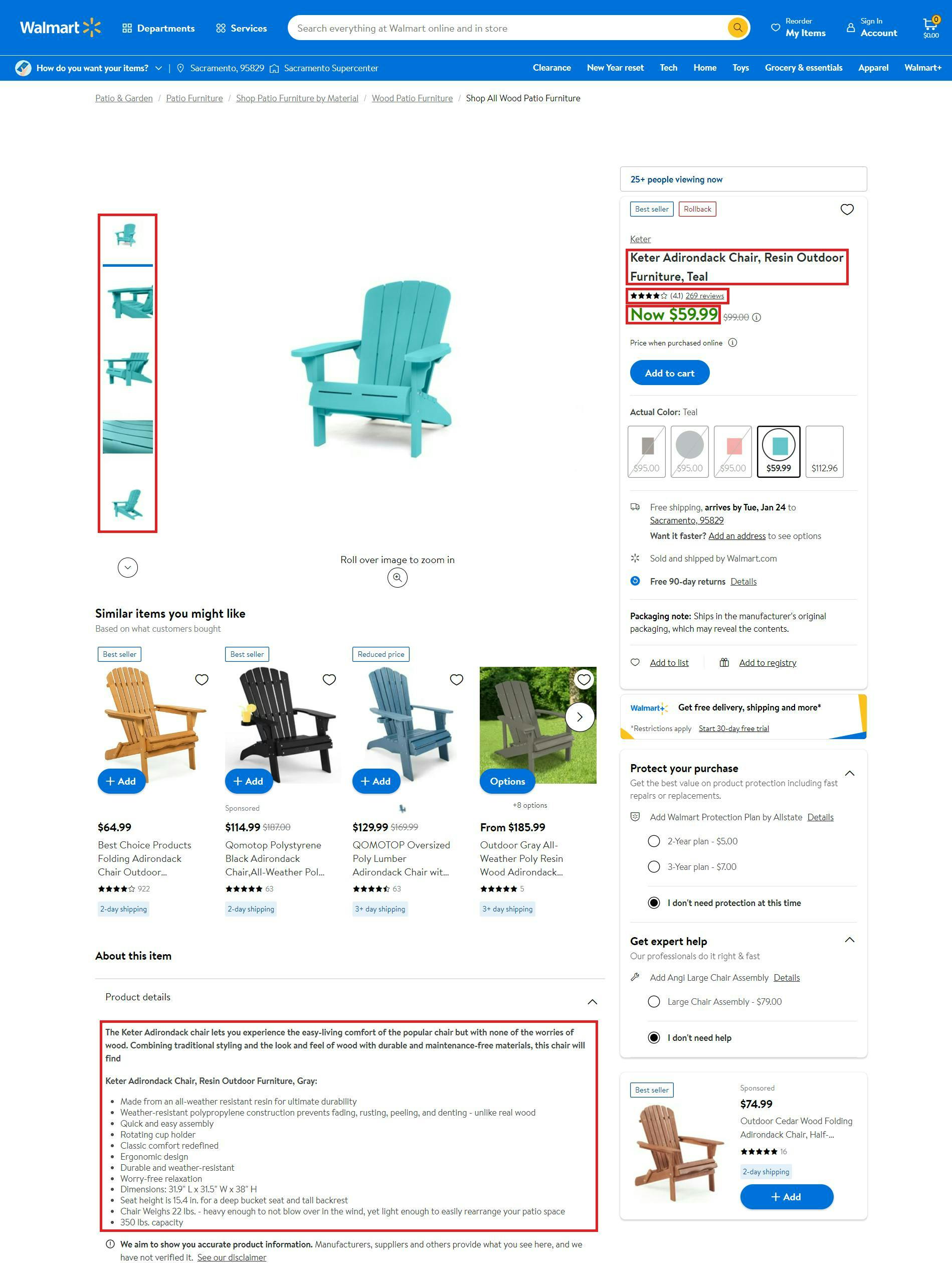

Locating the data

Now that you have your environment set up, we can start looking at extracting the data. For this article, I chose to scrape data from this product page: https://www.walmart.com/ip/Keter-Adirondack-Chair-Resin-Outdoor-Furniture-Teal/673656371.

We’re going to extract the following data:

- the product name;

- the product rating number;

- the product reviews count;

- the product price;

- the product images;

- the product details.

You can see all this information highlighted in the screenshot below:

By opening the Developer Tools and inspecting each of these elements, you will be able to see the CSS selectors that we will use to locate the HTML elements. If you’re fairly new to how CSS selectors work, feel free to refer to this beginner guide.

Data extraction

Before writing our script, let’s verify that the Puppeteer installation went well:

import puppeteer from 'puppeteer';

async function scrapeWalmartData(walmart_url: string): Promise<void> {
    // Launch Puppeteer
    const browser = await puppeteer.launch({
        headless: false,
        args: ['--start-maximized'],
        defaultViewport: null
    })

    // Create a new page
    const page = await browser.newPage()

    // Navigate to the target URL
    await page.goto(walmart_url)

    // Close the browser
    await browser.close()
}

scrapeWalmartData("https://www.walmart.com/ip/Keter-Adirondack-Chair-Resin-Outdoor-Furniture-Teal/673656371")

Here we open a browser window, create a new page, navigate to our target URL, and then close the browser. For the sake of simplicity and visual debugging, I open the browser window maximized in non-headless mode.
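If `page.goto` or any later step throws, the script above never reaches `browser.close()`, and the browser process may be left running. Below is a minimal sketch of one way to guard against that. The `withBrowser` helper and the `Closable` interface are hypothetical names introduced here for illustration; they are not part of Puppeteer.

```typescript
// Minimal shape of what the helper needs from a browser object.
// Puppeteer's Browser has a compatible close() method.
interface Closable {
  close(): Promise<void>;
}

// Hypothetical helper (not a Puppeteer API): launches a browser, runs the
// given task with it, and always closes the browser afterwards.
async function withBrowser<B extends Closable, T>(
  launch: () => Promise<B>,
  task: (browser: B) => Promise<T>
): Promise<T> {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    // Runs whether the task succeeded or threw.
    await browser.close();
  }
}
```

With Puppeteer, it could be called as `withBrowser(() => puppeteer.launch(), async (browser) => { /* scraping steps */ })`, keeping the extraction logic free of cleanup code.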

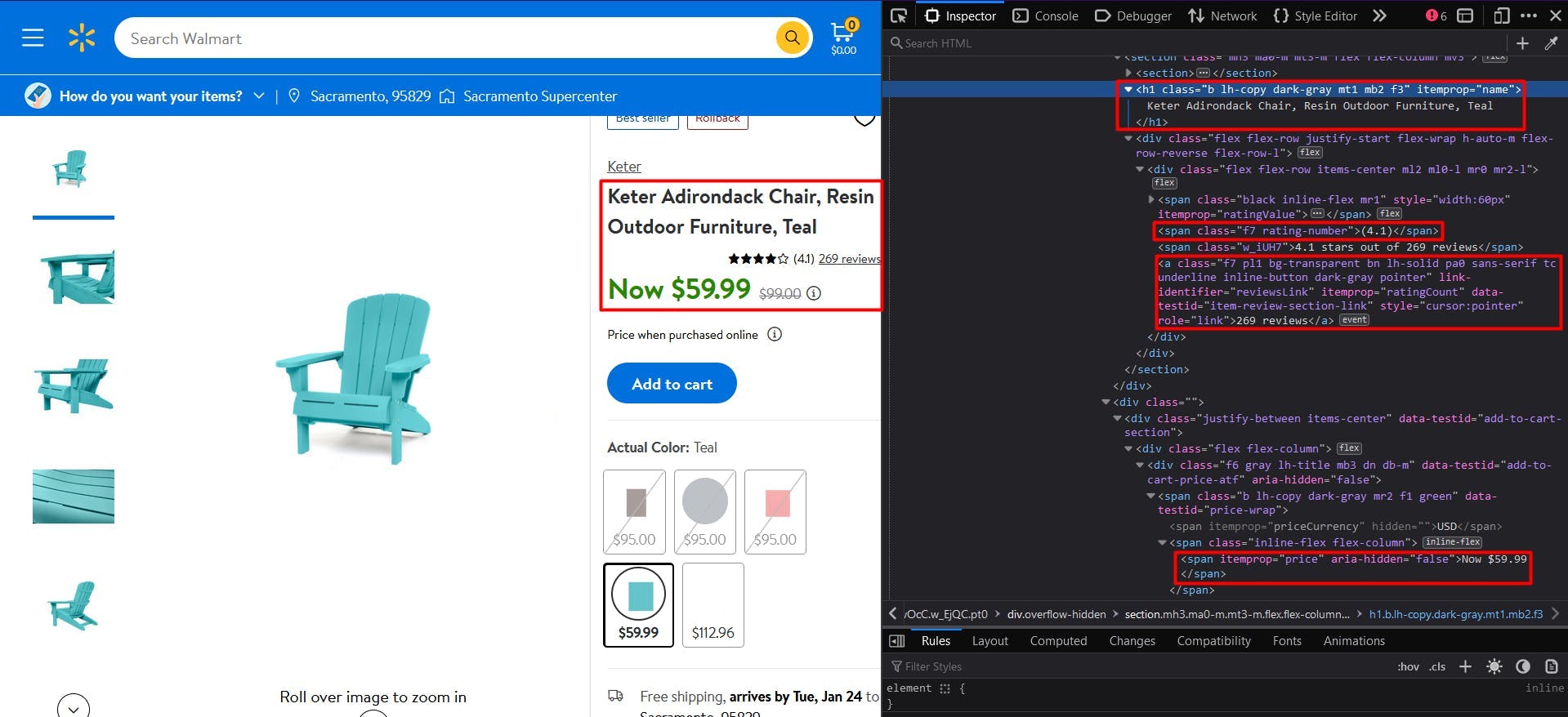

Now, let’s take a look at the website’s structure:

To get the product name, we target the “itemprop” attribute of the “h1” element. The result we’re looking for is its text content.

// Extract product name
const product_name = await page.evaluate(() => {
    const name = document.querySelector('h1[itemprop="name"]')
    return name ? name.textContent : ''
})

console.log(product_name)

For the rating number, the “span” element whose class name ends with “rating-number” proved to be a reliable target.

// Extract product rating number
const product_rating = await page.evaluate(() => {
    const rating = document.querySelector('span[class$="rating-number"]')
    return rating ? rating.textContent : ''
})

console.log(product_rating)

And finally (for the highlighted section), for the number of reviews and for the product price we rely on the “itemprop” attribute, just like above.

// Extract product reviews count
const product_reviews = await page.evaluate(() => {
    const reviews = document.querySelector('a[itemprop="ratingCount"]')
    return reviews ? reviews.textContent : ''
})

console.log(product_reviews)

// Extract product price
const product_price = await page.evaluate(() => {
    const price = document.querySelector('span[itemprop="price"]')
    return price ? price.textContent : ''
})

console.log(product_price)

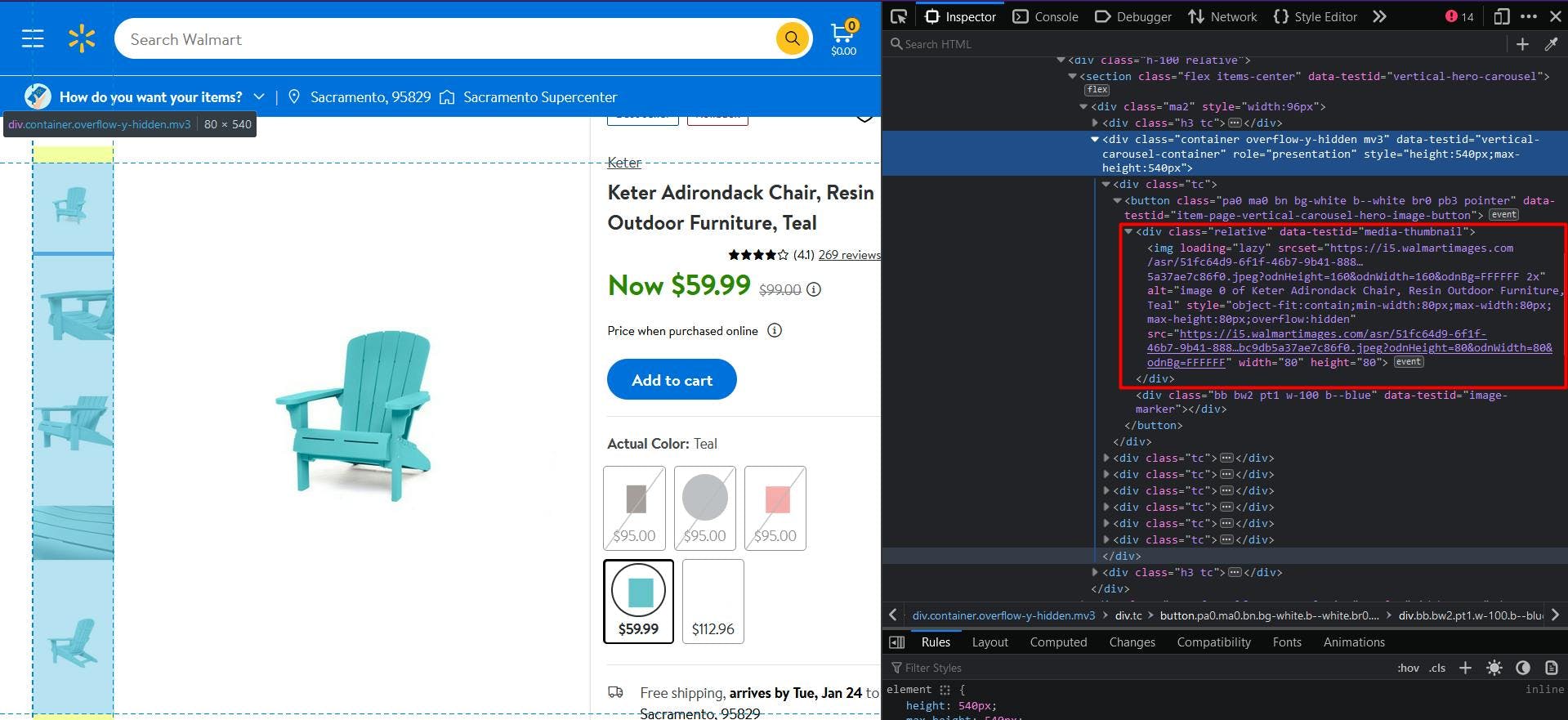

Moving on to the product images, we navigate further in the HTML document:

Slightly trickier, but not impossible. We cannot uniquely identify the images by themselves, so this time we will go through their parent elements. We select the “img” children of the “div” elements that have the attribute “data-testid” set to “media-thumbnail”.

Then, we convert the result to a JavaScript array, so we can map each element to its “src” attribute.

// Extract product images
const product_images = await page.evaluate(() => {
    const images = document.querySelectorAll('div[data-testid="media-thumbnail"] > img')
    const images_array = Array.from(images)
    return images ? images_array.map(a => a.getAttribute("src")) : []
})

console.log(product_images)
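Because `getAttribute("src")` can return `null`, and a thumbnail strip may repeat an image, the resulting array may need some tidying before it is stored. A small sketch of that post-processing follows. The `cleanImageUrls` helper is a hypothetical name that is not part of the original script.

```typescript
// Hypothetical post-processing helper: drops null or empty entries,
// discards strings that are not absolute URLs, and removes duplicates
// while keeping the original order.
function cleanImageUrls(sources: Array<string | null>): string[] {
  const unique = new Set<string>();
  for (const src of sources) {
    if (!src) continue;
    try {
      new URL(src); // throws on relative or malformed URLs
    } catch {
      continue;
    }
    unique.add(src); // a Set keeps insertion order and ignores repeats
  }
  return Array.from(unique);
}
```

In the script above, it could be applied as `cleanImageUrls(product_images)` right before logging.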

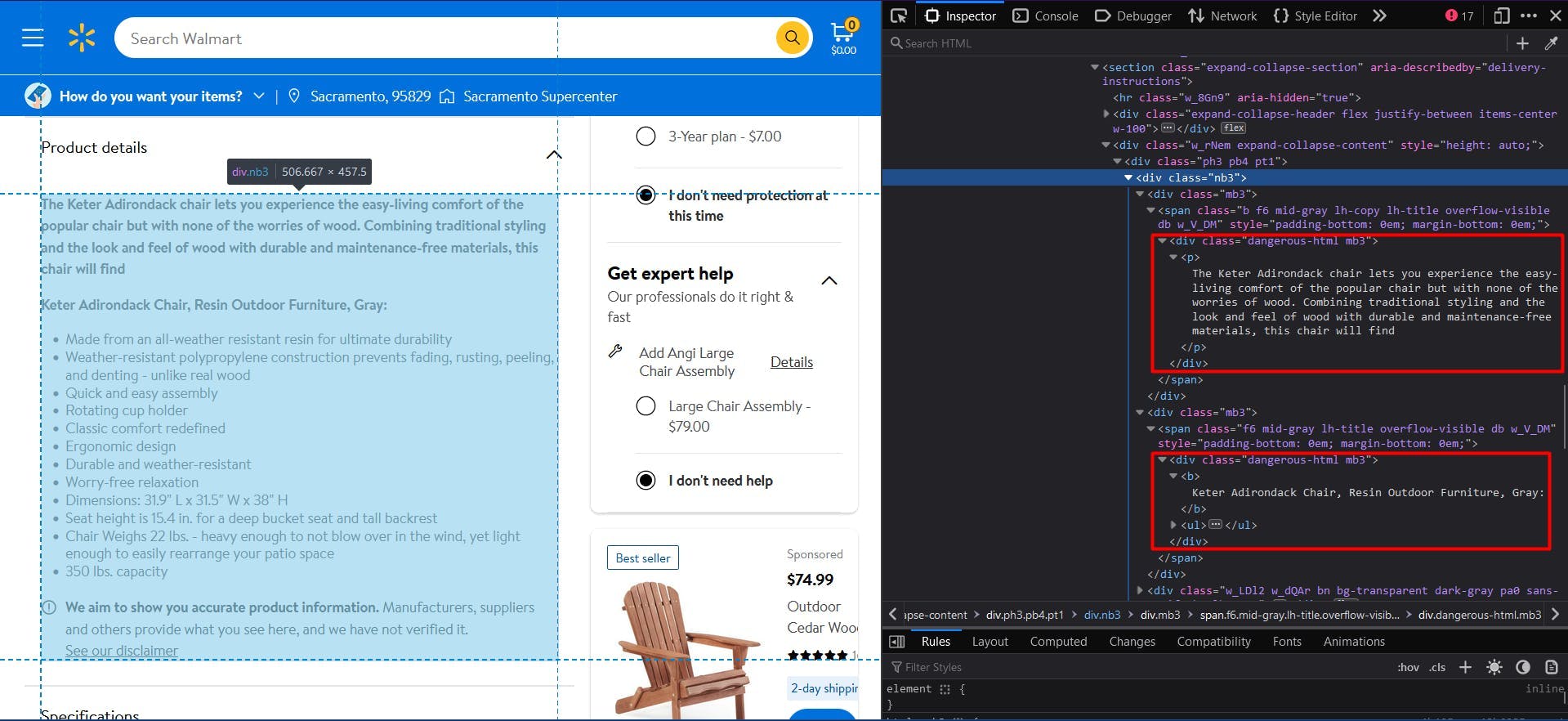

And last but not least, we scroll down the page to inspect the product details:

We apply the same logic as for the images, and this time we simply make use of the class name “dangerous-html”.

// Extract product details
const product_details = await page.evaluate(() => {
    const details = document.querySelectorAll('div.dangerous-html')
    const details_array = Array.from(details)
    return details ? details_array.map(d => d.textContent) : []
})

console.log(product_details)

The final result should look like this:

Keter Adirondack Chair, Resin Outdoor Furniture, Teal
(4.1)
269 reviews
Now $59.99
[
    'https://i5.walmartimages.com/asr/51fc64d9-6f1f-46b7-9b41-8880763f6845.483f270a12a6f1cbc9db5a37ae7c86f0.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/80977b5b-15c5-435e-a7d6-65f14b2ee9c9.d1deed7ca4216d8251b55aa45eb47a8f.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/80c1f563-91a9-4bff-bda5-387de56bd8f5.5844e885d77ece99713d9b72b0f0d539.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/fd73d8f2-7073-4650-86a3-4e809d09286e.b9b1277761dec07caf0e7354abb301fc.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/103f1a31-fbc5-4ad6-9b9a-a298ff67f90f.dd3d0b75b3c42edc01d44bc9910d22d5.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/120121cd-a80a-4586-9ffb-dfe386545332.a90f37e11f600f88128938be3c68dca5.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF',
    'https://i5.walmartimages.com/asr/47b8397f-f011-4782-bbb7-44bfac6f3fcf.bb12c15a0146107aa2dcd4cefba48c38.jpeg?odnHeight=80&odnWidth=80&odnBg=FFFFFF'
]
[
    'The Keter Adirondack chair lets you experience the easy-living comfort of the popular chair but with none of the worries of wood. Combining traditional styling and the look and feel of wood with durable and maintenance-free materials, this chair will find',
    'Keter Adirondack Chair, Resin Outdoor Furniture, Gray: Made from an all-weather resistant resin for ultimate durability Weather-resistant polypropylene construction prevents fading, rusting, peeling, and denting - unlike real wood Quick and easy assembly Rotating cup holder Classic comfort redefined Ergonomic design Durable and weather-resistant Worry-free relaxation Dimensions: 31.9" L x 31.5" W x 38" H Seat height is 15.4 in. for a deep bucket seat and tall backrest Chair Weighs 22 lbs. - heavy enough to not blow over in the wind, yet light enough to easily rearrange your patio space 350 lbs. capacity '
]
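The rating, reviews count, and price arrive as display strings such as "(4.1)", "269 reviews", and "Now $59.99", which are awkward to compare or aggregate. The sketch below shows one possible way to turn them into numbers. The `WalmartProduct` interface and the `firstNumber` and `toProduct` functions are hypothetical names introduced here, and the parsing assumes the text formats shown in the output above.

```typescript
// Typed shape for the scraped values (field names are illustrative).
interface WalmartProduct {
  name: string;
  rating: number | null;
  reviews: number | null;
  price: number | null;
}

// Pulls the first decimal number out of a string, after removing thousands
// separators. Returns null when the text is missing or contains no digits.
function firstNumber(text: string | null): number | null {
  if (!text) return null;
  const match = text.replace(/,/g, "").match(/\d+(\.\d+)?/);
  return match ? Number(match[0]) : null;
}

// Converts the raw strings returned by page.evaluate into a typed record.
function toProduct(
  name: string | null,
  rating: string | null,
  reviews: string | null,
  price: string | null
): WalmartProduct {
  return {
    name: (name ?? "").trim(),
    rating: firstNumber(rating),
    reviews: firstNumber(reviews),
    price: firstNumber(price),
  };
}

const product = toProduct(
  "Keter Adirondack Chair, Resin Outdoor Furniture, Teal",
  "(4.1)",
  "269 reviews",
  "Now $59.99"
);
console.log(product);
```

Returning `null` instead of `0` for missing values keeps "no rating yet" distinguishable from a genuine zero.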

Bypass bot detection

While scraping Walmart may seem easy at first, the process can become more complex and challenging as you scale up your project. The retail website implements various techniques to detect and prevent automated traffic, so a scaled-up scraper is likely to start getting blocked.
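Pacing your requests is one modest way to avoid scraping too aggressively. It will not defeat the fingerprinting techniques described below, but it keeps the traffic pattern less bursty. Here is a minimal sketch, assuming a hypothetical `scrapeOne` callback that wraps the extraction logic for a single URL; the default delay values are arbitrary placeholders, not recommendations from Walmart or Puppeteer.

```typescript
// Resolves after the given number of milliseconds.
const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: visits URLs one at a time, waiting a base delay plus
// a random jitter between consecutive requests.
async function scrapeSequentially<T>(
  urls: string[],
  scrapeOne: (url: string) => Promise<T>,
  minDelayMs = 2000,
  jitterMs = 1000
): Promise<T[]> {
  const results: T[] = [];
  let first = true;
  for (const url of urls) {
    if (!first) {
      // No pause before the very first request.
      await sleep(minDelayMs + Math.random() * jitterMs);
    }
    first = false;
    results.push(await scrapeOne(url));
  }
  return results;
}
```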

Walmart uses the “Press & Hold” model of CAPTCHA, offered by PerimeterX, which is known to be almost impossible to solve from your code. Besides this, the website also uses protections offered by Akamai and ThreatMetrix and collects a range of browser data to generate a unique fingerprint and associate it with you.

Among the collected browser data we find:

- properties from the Navigator object (deviceMemory, hardwareConcurrency, languages, platform, userAgent, webdriver, etc.)

- canvas fingerprinting

- timing and performance checks

- plugin and voice enumeration

- web workers

- screen dimensions checks

- and many more
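To see why these signals matter, it helps to picture how a handful of properties can be folded into a single identifier. The sketch below is purely illustrative and is not how PerimeterX, Akamai, or ThreatMetrix actually compute fingerprints. The `BrowserSignals` shape and the `fingerprint` function are hypothetical, and the FNV-1a hash is used only because it is short.

```typescript
// Hypothetical subset of the signals listed above.
interface BrowserSignals {
  userAgent: string;
  platform: string;
  languages: string[];
  hardwareConcurrency: number;
  deviceMemory?: number;
  webdriver: boolean;
  screen: { width: number; height: number };
}

// 32-bit FNV-1a hash over UTF-16 code units, as an 8-character hex string.
function fnv1a(input: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return ("0000000" + (hash >>> 0).toString(16)).slice(-8);
}

// Joins the signals into one string and hashes it. Any change in any
// signal changes the input string, and almost always the resulting hash.
function fingerprint(s: BrowserSignals): string {
  const parts = [
    s.userAgent,
    s.platform,
    s.languages.join(","),
    String(s.hardwareConcurrency),
    String(s.deviceMemory ?? "n/a"),
    String(s.webdriver),
    `${s.screen.width}x${s.screen.height}`,
  ];
  return fnv1a(parts.join("|"));
}
```

A scraper that always reports the same combination of values produces the same identifier on every visit, which is what makes it easy to recognize and block.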

One way to overcome these challenges and continue scraping at a large scale is to use a scraping API. These kinds of services provide a simple and reliable way to access data from websites like walmart.com, without the need to build and maintain your own scraper.

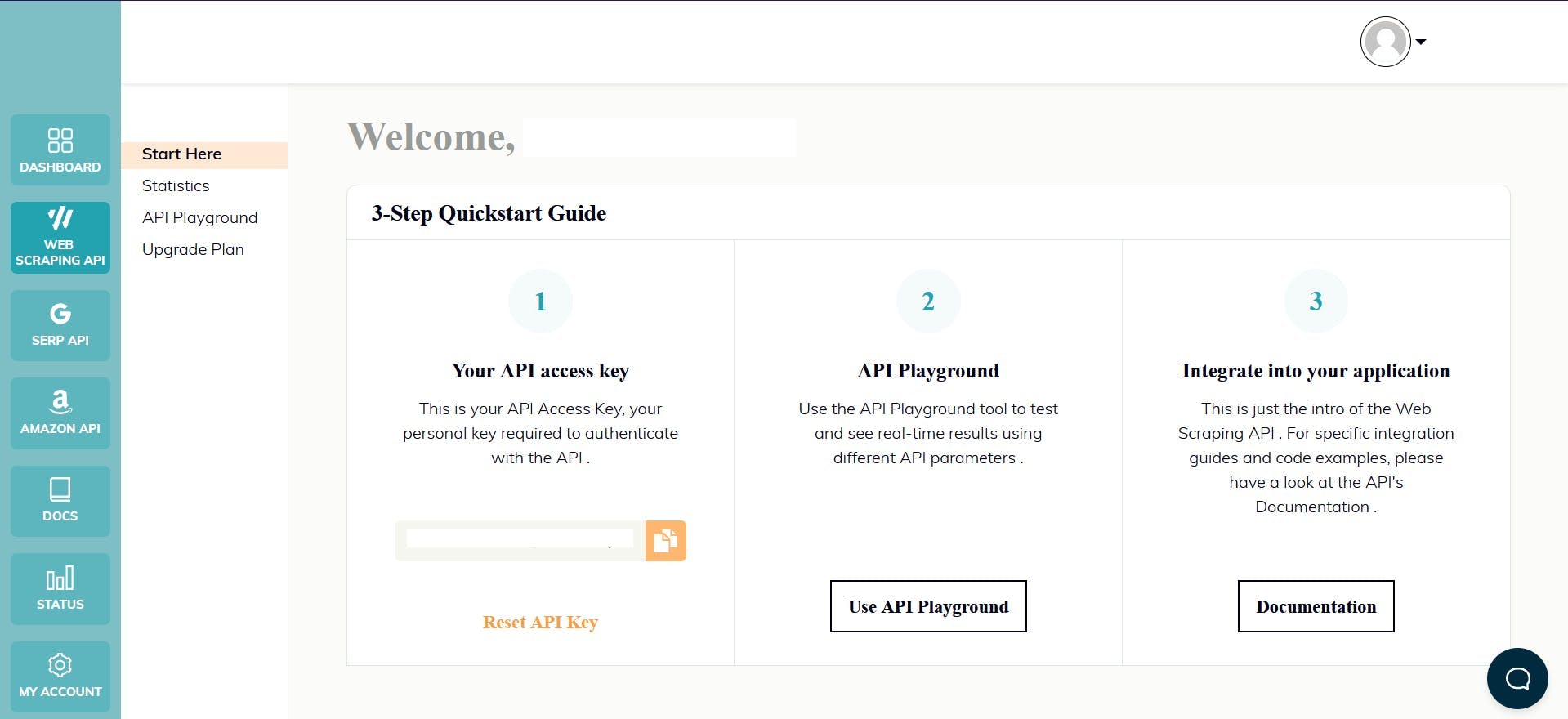

WebScrapingAPI is an example of such a product. Its proxy rotation mechanism is designed to avoid CAPTCHAs, and its extended knowledge base makes it possible to randomize the browser data so that requests look like they come from a real user.

The setup is quick and easy. All you need to do is register an account to receive your API key. It can be accessed from your dashboard, and it’s used to authenticate the requests you send.

As you have already set up your Node.js environment, we can make use of the corresponding SDK. Run the following command to add it to your project dependencies:

npm install webscrapingapi

Now all that’s left to do is to adapt the previous CSS selectors to the API. Its extraction rules feature makes it possible to parse the data without significant modifications.

import webScrapingApiClient from 'webscrapingapi';

const client = new webScrapingApiClient("YOUR_API_KEY");

async function exampleUsage() {
    const api_params = {
        'render_js': 1,
        'proxy_type': 'residential',
        'timeout': 60000,
        'extract_rules': JSON.stringify({
            name: {
                selector: 'h1[itemprop="name"]',
                output: 'text',
            },
            rating: {
                selector: 'span[class$="rating-number"]',
                output: 'text',
            },
            reviews: {
                selector: 'a[itemprop="ratingCount"]',
                output: 'text',
            },
            price: {
                selector: 'span[itemprop="price"]',
                output: 'text',
            },
            images: {
                selector: 'div[data-testid="media-thumbnail"] > img',
                output: '@src',
                all: '1'
            },
            details: {
                selector: 'div.dangerous-html',
                output: 'text',
                all: '1'
            }
        })
    }

    const URL = "https://www.walmart.com/ip/Keter-Adirondack-Chair-Resin-Outdoor-Furniture-Teal/673656371"

    const response = await client.get(URL, api_params)

    if (response.success) {
        console.log(response.response.data)
    } else {
        console.log(response.error.response.data)
    }
}

exampleUsage();

Conclusion

This article has presented you with an overview of web scraping Walmart using TypeScript along with Puppeteer. We have discussed the process of setting up the necessary environment, identifying and extracting the data, and provided code snippets and examples to help guide you through the process.

The advantages of scraping Walmart's data include gaining valuable insights into consumer behavior, market trends, price monitoring, and much more.

Furthermore, opting for a professional scraping service can be a more efficient solution, as it ensures that the process is completely automated and handles the possible bot detection techniques encountered.

By harnessing the power of Walmart's data, you can drive your business to success and stay ahead of the competition. Remember to always respect the website's terms of service and not to scrape too aggressively in order to avoid being blocked.
