Web Scraping API Quick Start Guide

Mihnea-Octavian Manolache on Jul 14 2023

WebScrapingAPI offers a suite of web scraping solutions. Our main product is a general-purpose web scraper designed to collect real-time data from any website. As you will discover throughout this guide, the tool is packed with features that help it go undetected by anti-bot systems. Because the feature set is extensive, this guide is designed to help you get started with Web Scraping API. Here is what we will cover:

- Working with the frontend dashboard

- Understanding the API’s parameters and features

- Sending basic requests to the API

- Setting up a complex web scraper using the SDKs

Why Sign Up With Web Scraping API

There are many reasons to use Web Scraping API instead of a regular scraper, but we rely on customer feedback to decide what makes us the best choice. Here are some of the reasons our customers chose us:

- Professional Support - Our support is covered by actual software engineers working hands-on with the API. So when you reach out to us for help, we make sure you get the best answers possible. Moreover, if you encounter any issue, the engineer talking to you will most likely be able to fix it in little to no time, and push the fix into production.

- Ease-of-use - Building a web scraper can be challenging. You have to account for proxies, evasions, CAPTCHAs, browsers and more. With Web Scraping API, you get all that (and much more) at the “click of a button”.

- Scalability - When we built our product, one of our first priorities was to ensure we deliver results regardless of the total number of requests we’re receiving. When you sign up for Web Scraping API, you sign up for our entire infrastructure. And that includes proxies, browsers, HTTP clients and more.

Signing Up For A Free Cloud Based Scraper

To sign up with Web Scraping API, all you need is a valid email address. Every user is entitled to a 7-day free trial with full access to the API’s features, limited to 1000 API credits. After these 7 days, you still get access to a free tier, which offers 1000 API credits per month but with limited API features. If you want to keep using the full version of our cloud-based web scraper, we offer flexible pricing plans. You can check the Pricing page for up-to-date information. At a high level, we offer:

- a Starter plan, with up to 100,000 API credits and 20 concurrent calls

- a Grow plan, with up to 1,000,000 API credits and 50 concurrent calls

- a Business plan, with up to 3,000,000 API credits and 100 concurrent calls

- a Pro plan, with up to 10,000,000 API credits and 500 concurrent calls

- a tailored Enterprise plan that is to be discussed based on your particular needs

To get started, please visit our Sign Up page and create a free account.

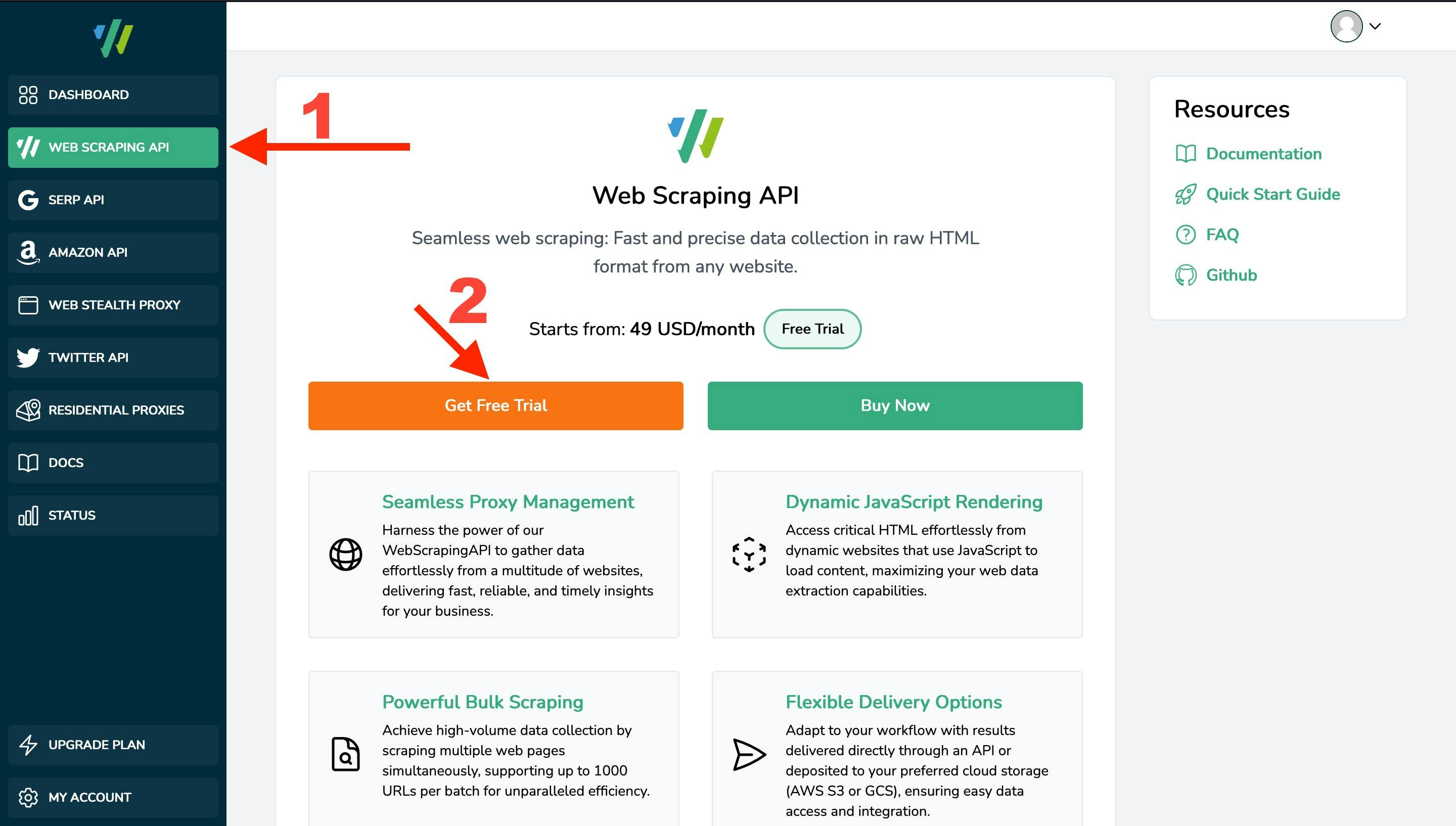

Understanding the Dashboard

Signing up on our Dashboard does not automatically give you access to our products. As you will see, the left sidebar displays a few options, one for each of our products. Since this guide covers the general-purpose web scraper, create a subscription for this service by navigating to Web Scraping API and clicking the “Get Free Trial” button. Once the subscription is created, you will be provided with an API key. Make sure to keep it safe, as it is your unique identifier in our systems. You will then also be able to access the statistics page and the Playground.

The Basics of Our Web Scraper API

There are three ways to interact with our web scraper: two are “programmatic” and one is more “beginner friendly”. The first two involve accessing the API via HTTP clients or via our SDKs. The third is the Playground provided inside the Dashboard. We’ll get to them shortly, but first it is important to understand how the API works. Only then will you be able to use the full power of Web Scraping API. So let’s start with the basics:

Authenticating Requests

We authenticate requests coming from registered users through the `api_key` URL parameter. The unique API key is linked to your account and holds information about permissions, usage, etc.

Please note that each product you sign up for has a unique API key associated with it. For example, you cannot use the API key from your general-purpose web scraper on the SERP API or vice versa.

This being said, in order to scrape a URL as an authenticated user, you will need to access the following resource:

https://api.webscrapingapi.com/v1?api_key=<YOUR_UNIQUE_API_KEY>

API Parameters

Within our API, query parameters are used to customize the scraper based on your needs. Understanding how each parameter works will enable you to use the full power of our web scraper API. We keep up-to-date documentation of the API parameters here. However, we will also go through them in this guide, to give you a better understanding of how query parameters work with Web Scraping API. There are three types of parameters: required, default and optional. The required ones are quite simple:

- The `api_key` parameter that we’ve discussed above

- The `url` parameter, which represents the URL you want to scrape

Please note that the `url` parameter’s value should be a valid URL, not a domain name, and it should ideally be URL encoded (e.g., https%3A%2F%2Fwebscrapingapi.com).
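As a minimal sketch of the two required parameters, the snippet below builds a request URL with Python's standard library. The helper name `build_request_url` and the placeholder key are illustrative assumptions, not part of any official SDK; only the endpoint and the parameter names come from this guide.

```python
from urllib.parse import urlencode

# Endpoint as shown in the "Authenticating Requests" section of this guide.
API_ENDPOINT = "https://api.webscrapingapi.com/v1"


def build_request_url(api_key: str, target_url: str) -> str:
    """Return the API URL carrying the two required parameters.

    urlencode() percent-encodes each value, so "https://" in the
    target becomes "https%3A%2F%2F".
    """
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{API_ENDPOINT}?{query}"


# "YOUR_API_KEY" is a placeholder, not a real key.
print(build_request_url("YOUR_API_KEY", "https://webscrapingapi.com"))
```

The resulting string can be passed to any HTTP client as a plain GET request.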

When it comes to default parameters, we’ve used historical data to increase our API’s (and implicitly your project’s) success rate. Internal data shows that the best configuration for web scraping is using an actual web browser paired with a residential IP address. Hence, our API’s default parameters are:

- `render_js=1` - to fire up an actual browser (not a basic HTTP client)

- `proxy_type=residential` - to access the target via a residential IP address (enabled only if your current plan supports residential proxies)

Of course, you may also override the values of these parameters, though we don’t encourage it. Scraping with a basic HTTP client and datacenter proxies usually leads to the targeted website picking up on scraping activity and blocking access.
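To illustrate how explicit values take precedence over the defaults, here is a small sketch that merges caller-supplied parameters on top of the two defaults named above. The helper `merge_params` is hypothetical, and `datacenter` as a literal value for `proxy_type` is an assumption based on the wording of this guide; check the parameter documentation for the accepted values.

```python
from urllib.parse import urlencode

# Defaults as described in this guide.
DEFAULT_PARAMS = {"render_js": 1, "proxy_type": "residential"}


def merge_params(overrides=None):
    """Return the defaults with any caller-supplied values applied on top."""
    params = dict(DEFAULT_PARAMS)
    params.update(overrides or {})
    return params


# Assumed value: "datacenter" is inferred from the text, not confirmed here.
custom = merge_params({"render_js": 0, "proxy_type": "datacenter"})
print(urlencode(custom))
```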

Moving forward, we will be discussing the optional parameters. Since we’ve documented all parameters in our Documentation, we’re only going to discuss the most used parameters for now:

- Parameter: `render_js`

Description: By enabling this parameter, you will access the targeted URL via an actual browser. It has the advantage of rendering JavaScript files. It’s a great choice for scraping JavaScript-heavy sites (like those built with ReactJS, for example).

Documentation: [here]

- Parameter: `proxy_type`

Description: Used to access the targeted URL via a residential or a datacenter IP address.

Documentation: [here]

- Parameter: `stealth_mode`

Description: Web scraping is not an illegal activity. However, some websites tend to block access to automated software (including web scrapers). Our team has designed a set of tools that makes it almost impossible for anti-bot systems to detect our web scraper. You may enable these features by using the `stealth_mode=1` parameter.

Documentation: [here]

- Parameter: `country`

Description: Used to access your target from a specific geolocation. Check out the supported countries [here].

Documentation: [here]

- Parameter: `timeout`

Description: By default, we terminate a request after 10s (and do not charge you if it fails). With certain targets, you may want to increase this value to up to 60s.

Documentation: [here]

- Parameter: `device`

Description: You can use this to make your scraper look like a 'desktop', 'tablet', or 'mobile'.

Documentation: [here]

- Parameter: `wait_until`

Description: In simple terms, once the scraper reaches the targeted URL, this parameter pauses it until a certain event happens. The concept we follow is best described [here].

Documentation: [here]

- Parameter: `wait_for`

Description: This parameter pauses the scraper for a specified amount of time (that cannot exceed 60s).

Documentation: [here]

- Parameter: `wait_for_css`

Description: Pauses the scraper until a certain CSS selector (e.g., a class or ID) is visible on the page.

Documentation: [here]

- Parameter: `session`

Description: Enables you to use the same proxy (IP address) across multiple requests.

Documentation: [here]
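The optional parameters are appended to the query string in the same way as the required ones. The sketch below is one possible way to assemble such a URL in Python; `build_scrape_url` is a hypothetical helper, and the selector `.price` and target `https://example.com` are made-up illustration values. Only the `device` values are checked, because they are the only ones this guide enumerates.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.webscrapingapi.com/v1"

# The three device values listed in this guide.
ALLOWED_DEVICES = {"desktop", "tablet", "mobile"}


def build_scrape_url(api_key: str, target_url: str, **options) -> str:
    """Build a request URL from the required parameters plus optional ones."""
    device = options.get("device")
    if device is not None and device not in ALLOWED_DEVICES:
        raise ValueError(f"device must be one of {sorted(ALLOWED_DEVICES)}")
    params = {"api_key": api_key, "url": target_url, **options}
    return f"{API_ENDPOINT}?{urlencode(params)}"


# Placeholder key and example values, chosen only for illustration.
print(build_scrape_url(
    "YOUR_API_KEY",
    "https://example.com",
    device="mobile",
    wait_for_css=".price",
    stealth_mode=1,
))
```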

Response Codes

One of the most important aspects that you have to know about response codes is that we only charge for successful responses. So if your request results in anything other than status code 200, you won’t be charged. Apart from that, the API errors are documented here and as you will see, they follow the regular HTTP status codes. To name a few:

- 400: Bad Request - For example, when you send invalid parameters

- 401: Unauthorized - When you fail to send an `api_key` or the API key is invalid

- 422: Unprocessable Entity - When the API fails to fulfill the request (for example, when the CSS selector you waited for is not visible on the page)
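In client code, the status codes above can be turned into a readable message plus a flag telling whether the request was billed. This is only a sketch: `describe_response` is a hypothetical helper, and the table covers just the codes named in this guide.

```python
from typing import Tuple

# Only the codes named in this guide; the full list lives in the API docs.
STATUS_MEANINGS = {
    200: "OK",
    400: "Bad Request: invalid parameters",
    401: "Unauthorized: missing or invalid api_key",
    422: "Unprocessable Entity: the API could not fulfill the request",
}


def describe_response(status_code: int) -> Tuple[str, bool]:
    """Return (meaning, charged) for an API status code.

    Per this guide, only responses with status code 200 are charged.
    """
    meaning = STATUS_MEANINGS.get(status_code, "See the API error documentation")
    charged = status_code == 200
    return meaning, charged


for code in (200, 401, 422):
    print(code, describe_response(code))
```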

Interacting With the Web Scraper API

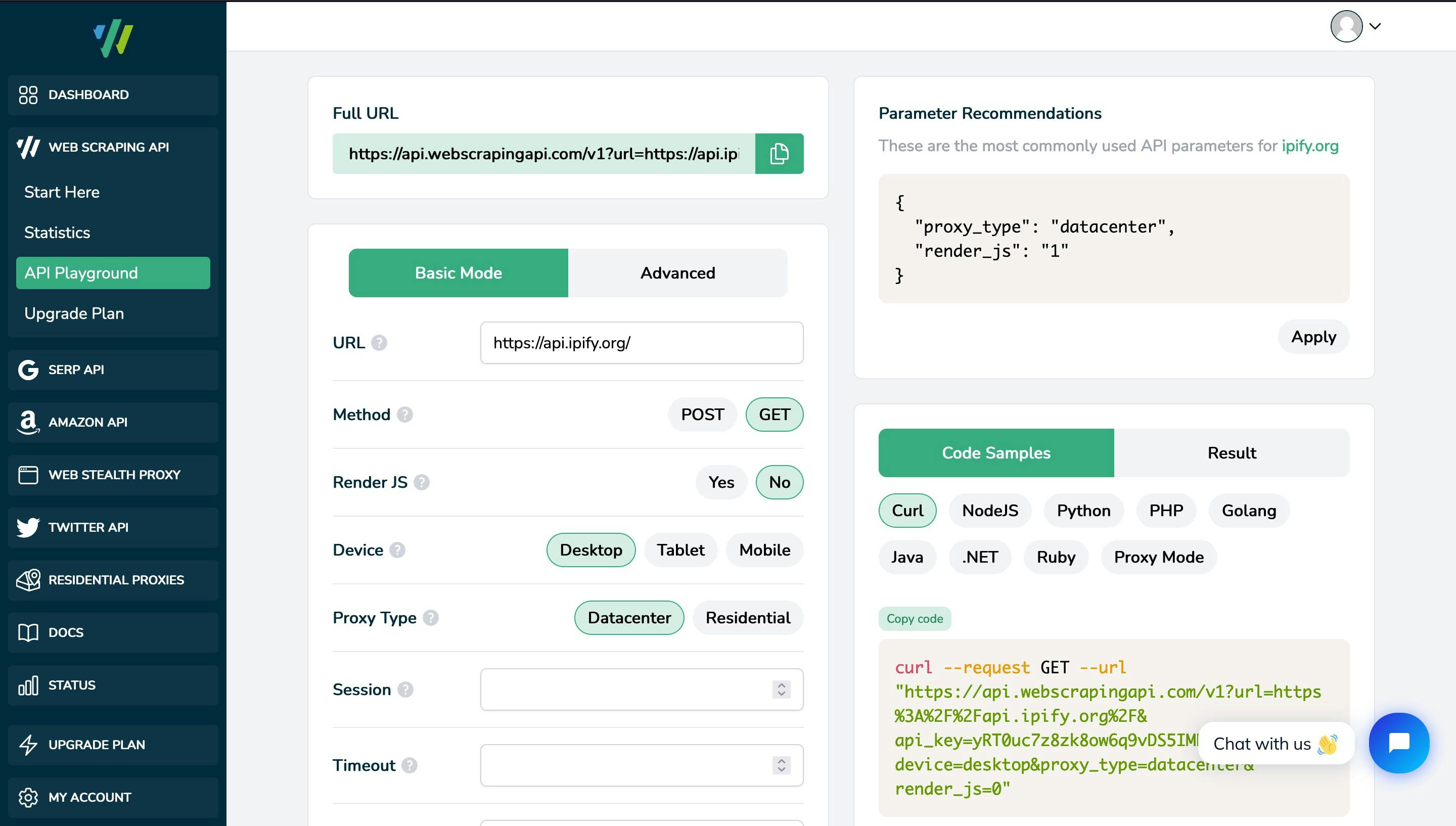

As mentioned, there are three main ways to interact with the web scraper API. Using the SDKs or accessing the API via HTTP clients is more language (or technology) dependent and requires some technical background. A more beginner-friendly interface is available within our Dashboard, under the API Playground. This tool lets you play with our web scraper, test it and get a grasp of how to use the parameters to your advantage, before going into programmatic implementations or advanced features. Some key aspects of the Playground are:

- It automatically fixes parameter incompatibilities (e.g., `stealth_mode=1` is incompatible with `render_js=0`)

- It provides actual code samples for various programming languages that you can use for your project

- It displays recommended parameters, based on our internal testing and historical data from previous requests, so you can increase your project’s success rate
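Outside the Playground, a similar check can be done in your own code before sending a request. The sketch below only detects the one incompatibility named above; it does not try to reproduce how the Playground resolves it. `find_conflicts` is a hypothetical helper, and treating `stealth_mode` as off when absent is an assumption.

```python
from typing import Dict, List, Union

ParamValue = Union[int, str]


def find_conflicts(params: Dict[str, ParamValue]) -> List[str]:
    """Return human-readable descriptions of incompatible parameter pairs."""
    conflicts = []
    # Assumption: stealth_mode is off unless explicitly set to 1.
    stealth_on = str(params.get("stealth_mode", 0)) == "1"
    # render_js defaults to 1 according to this guide.
    js_off = str(params.get("render_js", 1)) == "0"
    if stealth_on and js_off:
        conflicts.append("stealth_mode=1 is incompatible with render_js=0")
    return conflicts


print(find_conflicts({"stealth_mode": 1, "render_js": 0}))
print(find_conflicts({"stealth_mode": 1}))
```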

Advanced Web Scraping Features

For advanced users, our API is packed with various features making it customizable and ready for any scraping project. Again, a good source of information is our official documentation. However, here are some of the aspects we should highlight:

POST, PUT and PATCH

With Web Scraping API, you are not limited to GET requests. Should your scraping project need to create, replace or update resources, you can use POST, PUT or PATCH requests. A key aspect of these requests is that you can also use `render_js=1`, which means an actual web browser rather than a simple HTTP client. A POST request example is:

curl --request POST --url "https://api.webscrapingapi.com/v1?api_key=<YOUR_API_KEY>&url=https%3A%2F%2Fhttpbin.org%2Fpost" --data '{"foo": "bar"}'

Proxy Mode

You can also use our API as a proxy to scrape your targeted URL. To access the API as a proxy, you need to account for the following:

- The username to authenticate with the proxy is always set to `webscrapingapi`, followed by the parameters you want to enable, separated by dots.

- The password is always your personal API key

Here is an example URL you can use to access the web scraper via our Proxy Mode:

https://webscrapingapi.<parameter_1.parameter_2.parameter_n>:<YOUR_API_KEY>@proxy.webscrapingapi.com:8000
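The proxy URL can also be assembled programmatically. The sketch below assumes each parameter in the username is written as `name=value`; this guide only states that parameters are separated by dots, so verify the exact form in the Proxy Mode documentation. `build_proxy_url` is a hypothetical helper.

```python
PROXY_HOST = "proxy.webscrapingapi.com"
PROXY_PORT = 8000


def build_proxy_url(api_key: str, params: dict) -> str:
    """Return a Proxy Mode URL of the shape shown in this guide.

    Username: "webscrapingapi" followed by the parameters, separated by dots.
    Password: the API key.
    """
    # Assumption: each parameter is rendered as name=value.
    parts = ["webscrapingapi"] + [f"{name}={value}" for name, value in params.items()]
    username = ".".join(parts)
    return f"https://{username}:{api_key}@{PROXY_HOST}:{PROXY_PORT}"


# Placeholder key; parameter names are taken from this guide.
print(build_proxy_url("YOUR_API_KEY", {"render_js": 1, "device": "mobile"}))
```

Most HTTP clients accept a URL of this shape in their proxy settings, so the target site is then requested as usual while the traffic goes through the API.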

Conclusions

Web Scraping API offers a suite of powerful, ready-to-use scraping tools that are backed by a team of engineers. It is packed with dozens of features, making it a customisable web scraping solution. You can integrate the general-purpose cloud web scraper with any programming language or technology, as it returns either raw HTML or parsed JSON. In addition, our extensive documentation and public GitHub repositories should help you get started with your project in no time.

We hope this guide is a good starting point for you and please note that our support is always here if you have any questions. We look forward to being your partner in success!
