How to Web Scrape Yelp.com (2023 Update) - A Step-by-Step Guide

Raluca Penciuc on Mar 03 2023

Yelp is a platform that allows users to search for businesses, read reviews, and even make reservations. It is a popular website with millions of monthly visitors, making it an ideal target for data scraping.

Knowing how to web scrape Yelp can be a powerful tool for businesses and entrepreneurs looking to gather valuable information about the local market.

In this article, we will explore the advantages of web scraping Yelp and walk through how to set up the environment, locate the data, and extract it.

We will also look at the potential business ideas that can be created using this scraped data, and why using a professional scraper is better than creating your own. By the end of this article, you will have a solid understanding of how to web scrape Yelp.

Environment setup

Before we begin, let's ensure we have the necessary tools.

First, download and install Node.js from the official website, making sure to use the Long-Term Support (LTS) version. This will also automatically install Node Package Manager (NPM) which we will use to install further dependencies.

For this tutorial, we will be using Visual Studio Code as our Integrated Development Environment (IDE) but you can use any other IDE of your choice. Create a new folder for your project, open the terminal, and run the following command to set up a new Node.js project:

npm init -y

This will create a package.json file in your project directory, which will store information about your project and its dependencies.

Next, we need to install TypeScript and the type definitions for Node.js. TypeScript offers optional static typing which helps prevent errors in the code. To do this, run in the terminal:

npm install typescript @types/node --save-dev

You can verify the installation by running:

npx tsc --version

TypeScript uses a configuration file called tsconfig.json to store compiler options and other settings. To create this file in your project, run the following command:

npx tsc --init

Make sure that the value for “outDir” is set to “dist”. This way we will separate the TypeScript files from the compiled ones. You can find more information about this file and its properties in the official TypeScript documentation.

Now, create an “src” directory in your project, and a new “index.ts” file. Here is where we will keep the scraping code. To execute TypeScript code you have to compile it first, so to make sure that we don’t forget this extra step, we can use a custom-defined command.

Head over to the “package.json” file, and edit the “scripts” section like this:

"scripts": {
  "test": "npx tsc && node dist/index.js"
}

This way, to execute the script you just have to type “npm run test” in your terminal.

Finally, to scrape the data from the website, we will use Puppeteer, a headless browser library for Node.js that allows you to control a web browser and interact with websites programmatically. To install it, run this command in the terminal:

npm install puppeteer

Puppeteer is highly recommended when you want to ensure the completeness of your data, as many websites today contain dynamically generated content. If you’re curious, you can check out the Puppeteer documentation before continuing to see everything it’s capable of.

Data location

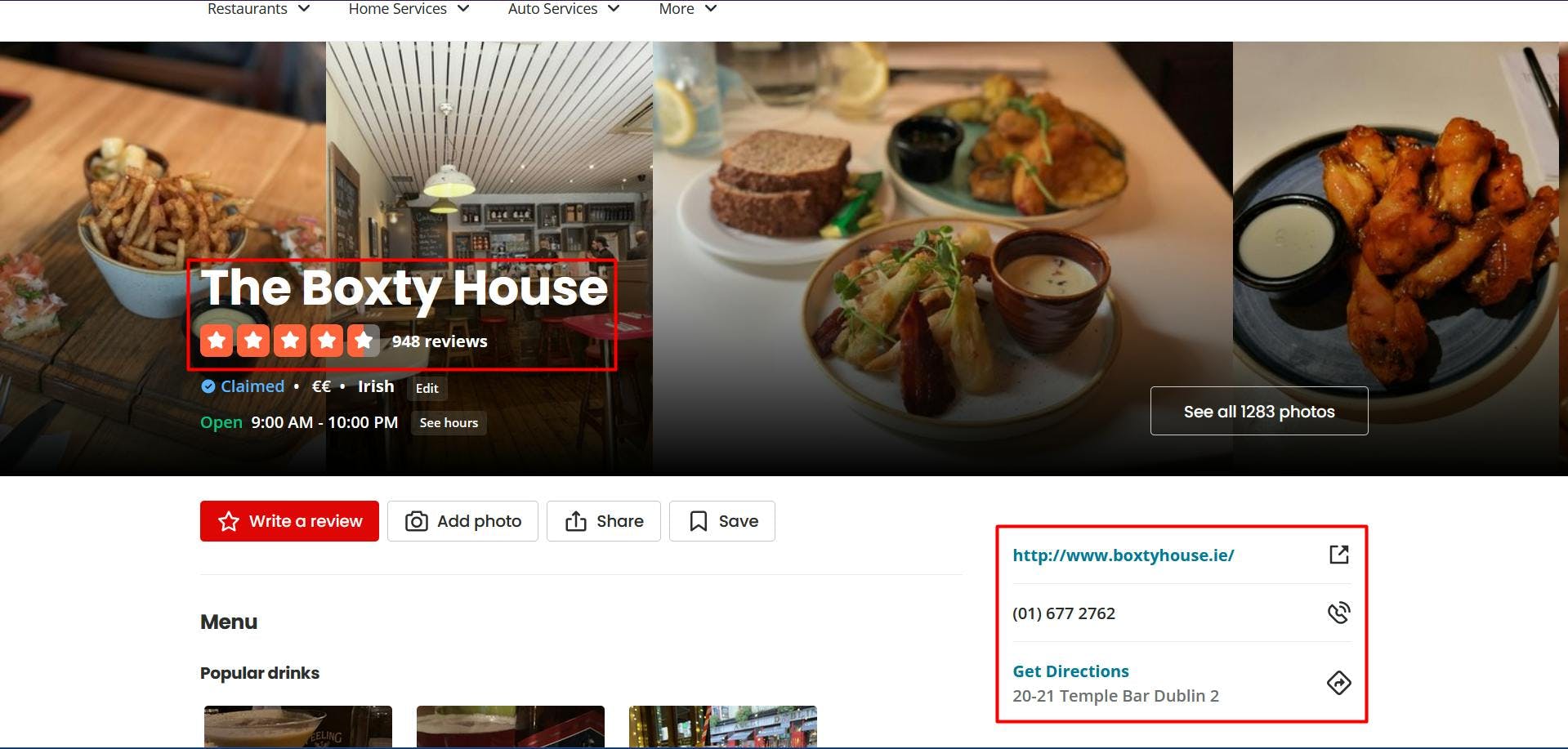

Now that you have your environment set up, we can start looking at extracting the data. For this article, I chose to scrape the page of an Irish restaurant from Dublin: https://www.yelp.ie/biz/the-boxty-house-dublin?osq=Restaurants.

We’re going to extract the following data:

- the restaurant name;

- the restaurant rating;

- the restaurant's number of reviews;

- the business website;

- the business phone number;

- the restaurant's physical address.

You can see all this information highlighted in the screenshot below:

By opening the Developer Tools on each of these elements, you will be able to see the CSS selectors that we will use to locate the HTML elements. If you’re fairly new to how CSS selectors work, feel free to refer to this beginner guide.

Extracting the data

Before writing our script, let’s verify that the Puppeteer installation went all right:

import puppeteer from 'puppeteer';

async function scrapeYelpData(yelp_url: string): Promise<void> {
  // Launch Puppeteer
  const browser = await puppeteer.launch({
    headless: false,
    args: ['--start-maximized'],
    defaultViewport: null
  })

  // Create a new page
  const page = await browser.newPage()

  // Navigate to the target URL
  await page.goto(yelp_url)

  // Close the browser
  await browser.close()
}

scrapeYelpData("https://www.yelp.ie/biz/the-boxty-house-dublin?osq=Restaurants")

Here we open a browser window, create a new page, navigate to our target URL, and close the browser. For the sake of simplicity and visual debugging, I open the browser window maximized in non-headless mode.
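One thing the script above does not handle is a failure between launching and closing: if the navigation throws, the call to close the browser is never reached and the browser process keeps running. Below is a minimal sketch of one way to guard against that. The helper name `withResource` and the `Closable` type are hypothetical (they are not part of Puppeteer); the helper accepts anything that exposes a `close()` method.

```typescript
// Anything that can be closed, such as a Puppeteer Browser.
// This structural type is an assumption made for the sketch.
interface Closable {
  close(): Promise<void>;
}

// Hypothetical helper: opens a resource, runs the task,
// and closes the resource whether the task succeeds or throws.
async function withResource<R extends Closable, T>(
  open: () => Promise<R>,
  task: (resource: R) => Promise<T>
): Promise<T> {
  const resource = await open();
  try {
    return await task(resource);
  } finally {
    // Runs on success and on failure, so nothing is left open.
    await resource.close();
  }
}
```

With Puppeteer, this could be called as `withResource(() => puppeteer.launch(), async (browser) => { ... })`, keeping the scraping logic inside the callback.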

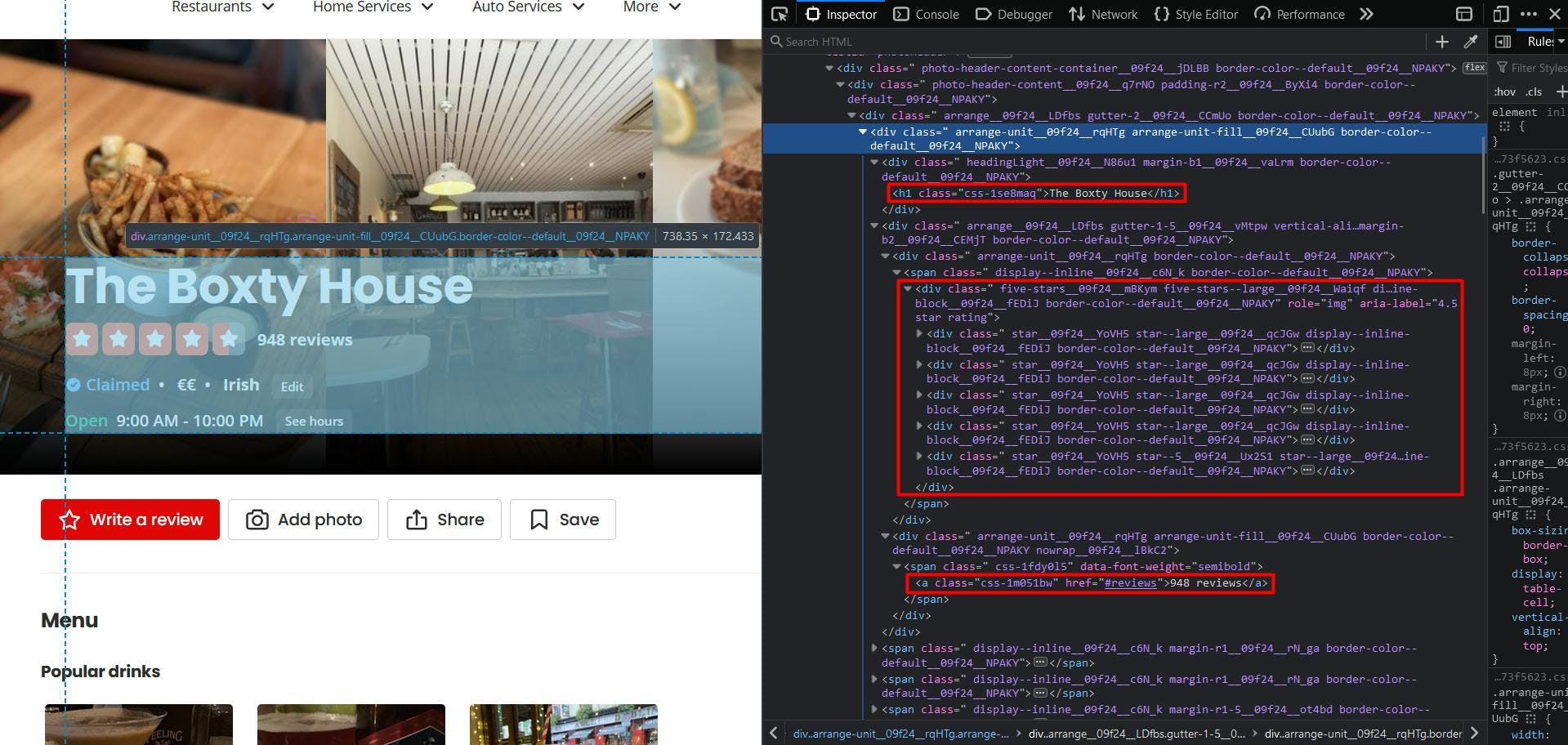

Now, let’s take a look at the website’s structure:

Yelp’s page structure is somewhat difficult to work with: the class names are randomly generated and very few elements have unique attribute values.

But fear not, we can get creative with the solution. Firstly, to get the restaurant name, we target the only “h1” element present on the page.

// Extract restaurant name
const restaurant_name = await page.evaluate(() => {
  const name = document.querySelector('h1')
  return name ? name.textContent : ''
})

console.log(restaurant_name)

Now, to get the restaurant rating, you can notice that beyond the star icons, the explicit value is present in the attribute “aria-label”. So, we target the “div” element whose “aria-label” attribute ends with the “star rating” string.

// Extract restaurant rating
const restaurant_rating = await page.evaluate(() => {
  const rating = document.querySelector('div[aria-label$="star rating"]')
  return rating ? rating.getAttribute('aria-label') : ''
})

console.log(restaurant_rating)

And finally (for this particular HTML section), we can easily get the number of reviews by targeting the highlighted anchor element.

// Extract restaurant reviews
const restaurant_reviews = await page.evaluate(() => {
  const reviews = document.querySelector('a[href="#reviews"]')
  return reviews ? reviews.textContent : ''
})

console.log(restaurant_reviews)
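The rating and the review count come back as display strings (for example “4.5 star rating” and “948 reviews”), which is inconvenient if you want to sort or average them later. Here is a small sketch of converting them to numbers. The helper names `parseRating` and `parseReviewCount` are my own, and stripping commas assumes that larger counts may use a thousands separator.

```typescript
// Parses a label such as "4.5 star rating" into the number 4.5.
// Returns null when the label is missing or contains no number.
function parseRating(label: string | null): number | null {
  const match = (label ?? '').match(/\d+(?:\.\d+)?/);
  return match ? parseFloat(match[0]) : null;
}

// Parses a text such as "948 reviews" into the number 948.
// Commas are removed first (assumed thousands separators).
function parseReviewCount(text: string | null): number | null {
  const match = (text ?? '').replace(/,/g, '').match(/\d+/);
  return match ? parseInt(match[0], 10) : null;
}
```

Both helpers accept null because `textContent` and `getAttribute` can return null when the element or attribute is missing.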

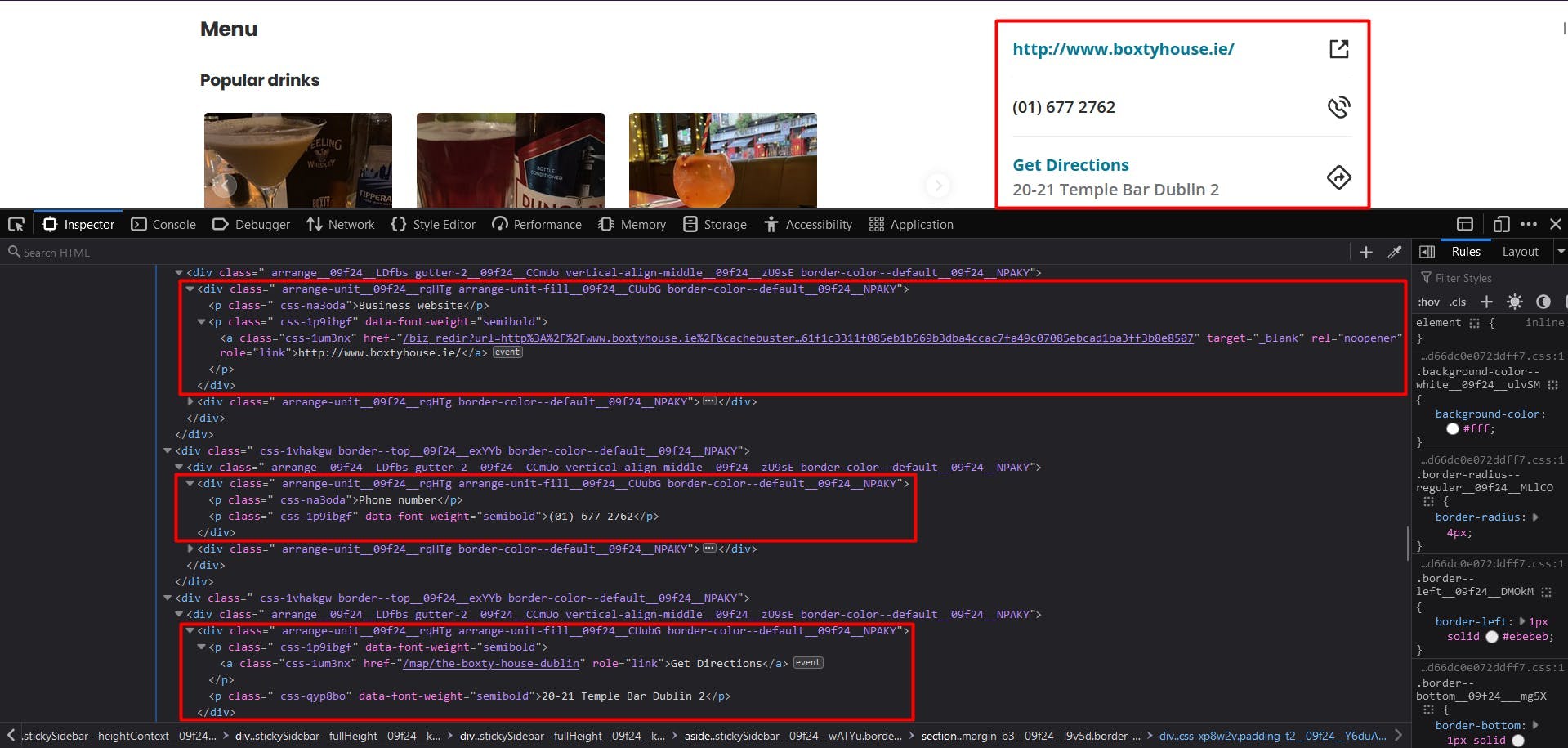

Easy peasy. Let’s take a look at the business information widget:

Unfortunately, in this situation, we cannot rely on CSS selectors. Luckily, we can make use of another method to locate the HTML elements: XPath. If you’re fairly new to how XPath works, feel free to refer to this beginner guide.

To extract the restaurant’s website, we apply the following logic:

- locate the “p” element that has “Business website” as text content;

- locate its following sibling;

- locate the anchor element and its “href” attribute.

// Extract restaurant website
// Note: page.$x() exists in Puppeteer versions before v22; later versions replaced it with the "xpath/" selector prefix.
const restaurant_website_element = await page.$x("//p[contains(text(), 'Business website')]/following-sibling::p/a/@href")

const restaurant_website = await page.evaluate(
  element => element.nodeValue,
  restaurant_website_element[0]
)

console.log(restaurant_website)

Now, for the phone number and the address we can follow the exact same logic, with 2 exceptions:

- for the phone number, we stop at the following sibling and extract its textContent property;

- for the address, we target the following sibling of the parent element.

// Extract restaurant phone number
const restaurant_phone_element = await page.$x("//p[contains(text(), 'Phone number')]/following-sibling::p")

const restaurant_phone = await page.evaluate(
  element => element.textContent,
  restaurant_phone_element[0]
)

console.log(restaurant_phone)

// Extract restaurant address
const restaurant_address_element = await page.$x("//a[contains(text(), 'Get Directions')]/parent::p/following-sibling::p")

const restaurant_address = await page.evaluate(
  element => element.textContent,
  restaurant_address_element[0]
)

console.log(restaurant_address)

The final result should look like this:

The Boxty House

4.5 star rating

948 reviews

/biz_redir?url=http%3A%2F%2Fwww.boxtyhouse.ie%2F&cachebuster=1673542348&website_link_type=website&src_bizid=EoMjdtjMgm3sTv7dwmfHsg&s=16fbda8bbdc467c9f3896a2dcab12f2387c27793c70f0b739f349828e3eeecc3

(01) 677 2762

20-21 Temple Bar Dublin 2
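Notice that the website comes back as a Yelp redirect link, with the real address percent-encoded in the `url` query parameter. Below is a minimal sketch of recovering it with the standard `URL` class. The helper name `resolveBusinessWebsite` and the default base origin are assumptions made for illustration; the base is only needed because the scraped href is relative.

```typescript
// Extracts the real destination from a redirect link such as "/biz_redir?url=...".
// Falls back to the absolute form of the href when there is no "url" parameter.
function resolveBusinessWebsite(
  href: string,
  base: string = 'https://www.yelp.ie' // assumed origin for relative links
): string | null {
  try {
    const parsed = new URL(href, base);
    // searchParams.get() already percent-decodes the value.
    return parsed.searchParams.get('url') ?? parsed.href;
  } catch {
    // The href (or the base) could not be parsed as a URL.
    return null;
  }
}
```

For the link shown in the output above, the helper returns `http://www.boxtyhouse.ie/`.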

Bypass bot detection

While scraping Yelp may seem easy at first, the process becomes more challenging as you scale up your project. The website uses various techniques to detect and prevent automated traffic, so a scaled-up scraper is likely to start getting blocked.

Yelp collects several pieces of browser data to build a unique fingerprint and associate it with you. Some of these are:

- properties from the Navigator object (deviceMemory, hardwareConcurrency, platform, userAgent, webdriver, etc.)

- timing and performance checks

- service workers

- screen dimensions checks

- and many more
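To make the idea of a fingerprint more concrete, here is a simplified illustration of how a handful of browser signals could be combined into a single identifier. This is not Yelp's actual method, which is not public. The signal names, the `BrowserSignals` type, and the choice of a 32-bit FNV-1a hash are all assumptions made for the sketch.

```typescript
// Hypothetical set of collected signals; real systems gather many more.
type BrowserSignals = { [signal: string]: string | number | boolean };

// Serializes the signals with sorted keys, so property order does not matter.
function canonicalize(signals: BrowserSignals): string {
  return Object.keys(signals)
    .sort()
    .map((key) => key + '=' + String(signals[key]))
    .join(';');
}

// 32-bit FNV-1a hash over the string's UTF-16 code units, returned as hex.
function fnv1a(input: string): string {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    // Multiply by the FNV prime 16777619 using shifts, then wrap to 32 bits.
    hash = (hash + (hash << 1) + (hash << 4) + (hash << 7) + (hash << 8) + (hash << 24)) >>> 0;
  }
  return hash.toString(16);
}

// Combines all signals into one short identifier.
function fingerprint(signals: BrowserSignals): string {
  return fnv1a(canonicalize(signals));
}
```

A call such as `fingerprint({ platform: 'Linux x86_64', hardwareConcurrency: 8, webdriver: true })` always yields the same identifier for the same values, which is why a scraper that sends identical browser data on every request is easy to recognize.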

One way to overcome these challenges and continue scraping at a large scale is to use a scraping API. These kinds of services provide a simple and reliable way to access data from websites like yelp.com, without the need to build and maintain your own scraper.

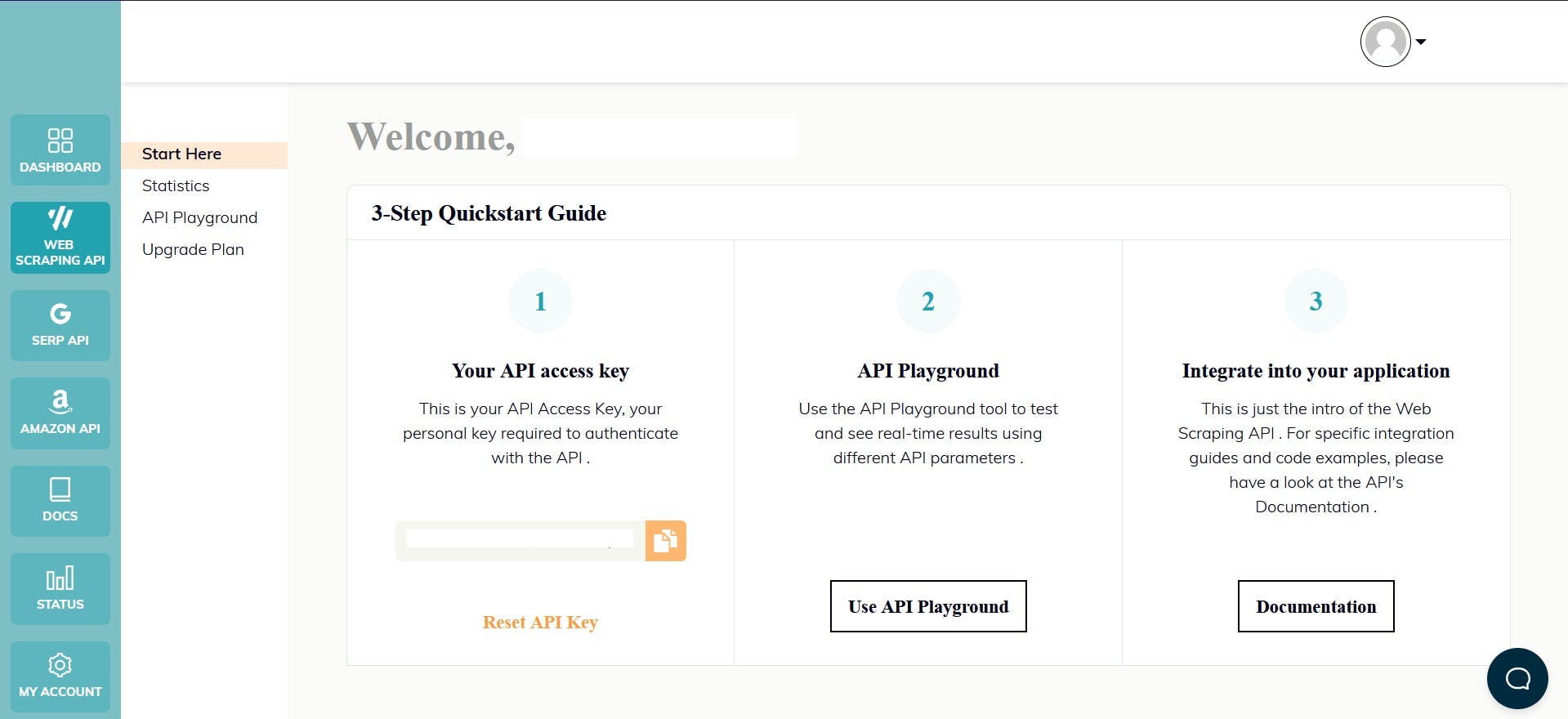

WebScrapingAPI is an example of such a product. Its proxy rotation mechanism avoids CAPTCHAs altogether, and its extended knowledge base makes it possible to randomize the browser data so it will look like a real user.

The setup is quick and easy. All you need to do is register an account to receive your API key. It can be accessed from your dashboard, and it’s used to authenticate the requests you send.

As you have already set up your Node.js environment, we can make use of the corresponding SDK. Run the following command to add it to your project dependencies:

npm install webscrapingapi

Now all that’s left to do is send a GET request to receive the website’s HTML document. Note that this is not the only way you can access the API.

import webScrapingApiClient from 'webscrapingapi';

const client = new webScrapingApiClient("YOUR_API_KEY");

async function exampleUsage() {
  const api_params = {
    'render_js': 1,
    'proxy_type': 'residential',
  }

  const URL = "https://www.yelp.ie/biz/the-boxty-house-dublin?osq=Restaurants"

  const response = await client.get(URL, api_params)

  if (response.success) {
    console.log(response.response.data)
  } else {
    console.log(response.error.response.data)
  }
}

exampleUsage();

By enabling the “render_js” parameter, we send the request using a headless browser, just as you did earlier in this tutorial.

After receiving the HTML document, you can use another library to extract the data of interest, like Cheerio. Never heard of it? Check out this guide to help you get started!

Conclusion

This article has presented a comprehensive guide on how to web scrape Yelp using TypeScript and Puppeteer. We have gone through setting up the environment, locating and extracting the data, and the reasons why a professional scraper can be a better solution than building your own.

The data scraped from Yelp can be used for various purposes such as identifying market trends, analyzing customer sentiment, monitoring competitors, creating targeted marketing campaigns, and many more.

Overall, web scraping Yelp.com can be a valuable asset for anyone looking to gain a competitive advantage in their local market and this guide has provided a great starting point to do so.
