How To Use A Proxy With Node Fetch And Build a Web Scraper

Mihnea-Octavian Manolache on Apr 24 2023

First of all, when building a web scraper, proxies are definitely very important. Secondly, node-fetch is one of the most popular JavaScript HTTP clients. So it makes sense one would combine the two and create a web scraper.

That is why today we are going to discuss how to use a proxy with node-fetch. And as a bonus, we’ll build a web scraper on top of this infrastructure.

So by the end of this article:

- You will have a solid understanding of how proxies work in web scraping

- You will learn how to integrate a proxy with node-fetch

- You will have a project added to your personal portofolio

What is a proxy and why use it for web scraping?

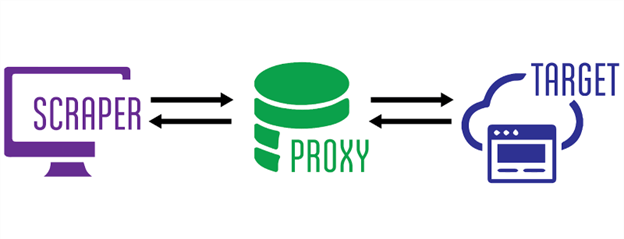

In computer networking, proxies act as “middlewares” between a client and a server. The architecture of a proxy server is quite complex, but on a high level this is what happens when you’re using a proxy:

- You “tap” into the proxy server and specify the destination (server) you are trying to reach (scrape)

- The proxy server connects to your target and retrieves the results (the HTML files of an website for example)

- The proxy server then serves you back the response it got from the destination server

In this chain, your IP remains hidden from the destination server, as you never actually connect to it. And that is mainly the main reason why proxies are such an important part of web scraping. They “hide” the web scraper’s IP address, so it won’t get blocked by antibot systems.

Why use node-fetch for web scraping?

If JavaScript is your favorite programming language, there are many HTTP clients you can use to build a web scraper. Among the most popular ones, there are axios, got and a couple more listed here. But node-fetch remains one of the most downloaded npm packages, and there is a reason for that.

First, it is the first package to implement the Fetch API in Node JS. Then, starting with v17.5.0 the Node JS team added the Fetch API, so it wouldn’t be required as a third party dependency. To this day yet, in Node JS version 19.3.0, fetch is still marked as experimental. So node-fetch remains the more stable solution.

Scraping specific, node-fetch is a great tool because, as the name implies, it is used to fetch resources from various sources. And that is maybe the most basic definition of scraping.

How to use node-fetch with proxies?

Long story short, there is no built-in method to use a proxy with node-fetch. So if you want to build a web scraper, but your infrastructure relies on node-fetch, then you might risk exposing your real IP. This means you risk getting blocked by antibot software.

Luckily, though, there are workarounds to it. One of them is offered to us by Nathan Rajlich, who built a module that implements http.Agent and it’s called https-proxy-agent. The installation is available via npm. Also, implementing it with node-fetch is pretty straightforward:

import fetch from 'node-fetch';

import HttpsProxyAgent from "https-proxy-agent";

const fetch_proxy = async () => {

const proxy = new HttpsProxyAgent('http://1.255.134.136:3128');

const response = await fetch('https://httpbin.org/ip', { agent: proxy});

const data = await response.text();

console.log(data);

}

fetch_proxy()

For the purposes of this test, I used a free proxy from Proxy Scrape. As expected, the response indicates that the request originated from the proxy IP, and not from my local IP:

"origin": "1.255.134.136"

Another option is to use node-fetch-with-proxy. This package uses proxy-agent as a dependency of its own on top of node-fetch. For this to work, all you need to do is set the ‘HTTP_PROXY’ environment variable. It takes your proxy server IP address and port number as a value. Then you can use the regular node-fetch syntax to make your calls, which will automatically be routed to the proxy server. Here is an example:

import fetch from "node-fetch-with-proxy";

fetch('http://httpbin.org/ip')

.then(res => res.json())

.then(json => console.log(json));

How to build a web scraper using a proxy with node-fetch?

On a high note, we’ve already built a web scraper. The code samples from above do exactly what any web scraper does, namely, they collect data from a website. However, in a real life scenario, a web scraper is a bit more complex. For example, the raw data needs to be processed, or we need to check and parse the collected headers or cookies. So let us dive a bit deeper and turn our first sample into a real scraper. Let’s set some expectations:

- We should be able to return the raw HTML

- We should be able to return the entire response as a JSON object

- We should be able to extract elements based on specific selectors

Assuming that you have already installed node-fetch and https-proxy-agent, we’ll need one more thing: an HTML parser. I always go for cheerio for web scraping. So please make sure you install it inside your project. This being said, let’s get started:

#1: Import dependencies

The first thing we want to do is import the packages we’ve discussed above. I guess this part needs no further explanation:

import fetch from 'node-fetch';

import HttpsProxyAgent from "https-proxy-agent";

import * as cheerio from 'cheerio';

#2: Scraper logic

We need our scraper to be able to perform three actions: return the row HTML, return the entire response, and return an element based on its CSS selector. One of them we’ve already partly implemented before. But let’s split everything into three functions:

const raw_html = async (proxyServer, targetURL) => {

const proxy = new HttpsProxyAgent(proxyServer);

const response = await fetch(targetURL, { agent: proxy});

const data = await response.text();

return data;

}

const json_response = async (proxyServer, targetURL) => {

const proxy = new HttpsProxyAgent(proxyServer);

const response = await fetch(targetURL, { agent: proxy});

const data = {

url: response.url,

status: response.status,

Headers: response.headers,

body: await response.text()

}

return data;

}

const select_css = async (proxyServer, targetURL, cssSelector) => {

const proxy = new HttpsProxyAgent(proxyServer);

const response = await fetch(targetURL, { agent: proxy});

const html = await response.text();

const $ = cheerio.load(html);

return $(cssSelector).text();

}#3: Argument parser

We can use terminal arguments to distinguish between the three options that we’ve implemented in our scraper. There are options you can use to parse terminal arguments in Node, but I like to keep things simple. That is why we’ll use process.argv, which generates an array of arguments. Note that the first two items of this array are ‘node’ and the name of your script. For example, if running `node scraper.js raw_html`, the arguments array will look like this:

[

'/usr/local/bin/node',

'path_to_directory/scraper.js',

'raw_html'

]

Ignoring the first two elements, we’ll use the following logic:

- the first argument will specify the function we want to run;

- the second will point to our target (the website we want to scrape);

- the third will point to the proxy server;

- and a fourth one will point to the css selector.

So the command to run our scraper should look like this:

~ » node scraper.js raw_html https://webscrapingapi.com http://1.255.134.136:3128

This simply translates to extract the raw html from WebScrapingAPI’s home page and use http://1.255.134.136 as a proxy middleware. Now the final part is to code the logic for these arguments, such that our code will understand the run command:

const ACTION = process.argv[2]

const TARGET = process.argv[3]

const PROXY = process.argv[4]

const SELECTOR = process.argv[5]

switch (ACTION) {

case 'raw_html':

console.log(await raw_html(PROXY, TARGET))

break

case 'json_response':

console.log(await json_response(PROXY, TARGET))

break

case 'select_css':

SELECTOR ? console.log(await select_css(PROXY, TARGET, SELECTOR)) : console.log('Please specify a CSS selector!')

break

default:

conssole.log('Please choose between `raw_html`, `json_response` and `select_css`')

}And that is basically it. Congratulations! You have successfully created a fully functional web scraper using a proxy with node-fetch. I now challenge you to add more functionality to this scraper, make your own version, and use it as an asset in your personal portfolio.

Using a proxy with node-fetch may not be enough for web scraping

As I like to say, there is more to stealthy scraping than just hiding your IP address. In reality, using a proxy server for your web scraper is only one layer of protection against antibot software. Another layer is changing your user agent by [setting custom headers to your request] (LINK https://trello.com/c/n8xZswSI/14-2-8-january-article-13-http-headers-with-axios).

At Web Scraping API for example, we have a dedicated team that works on custom evasion techniques. Some of them go as far as modifying the default values of the headless browser in order to avoid fingerprinting.

Moreover, as modern sites render content dynamically using JavaScript, a simple HTTP client such as node-fetch may not be enough. You may want to explore using a real web browser. Python’s selenium or Node’s puppeteer is just two options you could look into in that regard.

Conclusions

Using a proxy with node-fetch is a great place to start when building a web scraper. However, you have to account for the fact that the two are not ‘directly compatible’ and you will have to use a third party solution to link them. Luckily, there are plenty of possibilities and the JavaScript community is always happy to help newcomers.

Building a stealthy scraper is however more difficult and you’ll have to come up with more complex evasion techniques. But I like to see an opportunity in everything. Why not take what you’ve learned today and add to it. Hopefully, by the end, you'll come up with the best web scraper written with node-fetch and proxies. As always, my advice for you is to keep on learning!

News and updates

Stay up-to-date with the latest web scraping guides and news by subscribing to our newsletter.

We care about the protection of your data. Read our Privacy Policy.

Related articles

Learn what’s the best browser to bypass Cloudflare detection systems while web scraping with Selenium.

Discover 3 ways on how to download files with Puppeteer and build a web scraper that does exactly that.

Learn how to scrape Google Maps reviews with our API using Node.js. Get step-by-step instructions on setting up, extracting data, and overcoming potential issues.