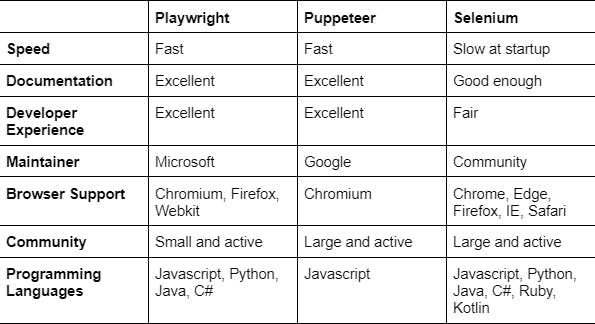

Find out how to scrape HTML tables with Golang

Andrei Ogiolan on Apr 24 2023

Introduction

Web scraping is a technique for extracting data from websites and can be a powerful tool for gathering information from the internet. This article will look at how to scrape HTML tables with Golang, a popular programming language known for its simplicity, concurrency support, and robust standard library.

What are HTML tables?

HTML tables are HTML (Hypertext Markup Language) elements used to represent tabular data on a web page. A table consists of rows and columns of cells that can contain text, images, or other HTML elements. Tables are created with the `<table>` element and structured with the `<tr>` (table row), `<td>` (table cell), `<th>` (table header), `<caption>`, `<col>`, `<colgroup>`, `<tbody>` (table body), `<thead>` (table head) and `<tfoot>` (table foot) elements. Let's go through each one in more detail:

- table element: Defines the start and end of an HTML table.

- tr (table row) element: Defines a row in an HTML table.

- td (table cell) element: Defines a cell in an HTML table.

- th (table header) element: Defines a header cell in an HTML table. Header cells are displayed in bold and centered by default and are used to label the rows or columns of the table.

- caption element: Defines a caption or title for an HTML table. Browsers display it above the table by default, and the CSS `caption-side` property can move it below.

- col and colgroup elements: Define the properties of the columns in an HTML table, such as the width or alignment.

- tbody, thead, and tfoot elements: Define the body, head, and foot sections of an HTML table, respectively. These elements can be used to group rows and apply styles or attributes to a specific section of the table.
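To make the role of each element concrete, here is a small, hand-written table that uses most of them, decoded with Go's `encoding/xml` package into structs that mirror the caption, head, body and foot sections. This is a swapped-in technique for illustration only: it works because the sample is well-formed XML, which real-world HTML usually is not (that is why we use an HTML parser later on). The sample data is made up.

```go
package main

import (
	"encoding/xml"
	"fmt"
	"strings"
)

// sampleTable is a made-up table using the elements described above.
// It is valid XML, which is the only reason encoding/xml can read it.
const sampleTable = `<table>
  <caption>Customers</caption>
  <colgroup><col/><col/><col/></colgroup>
  <thead><tr><th>Company</th><th>Contact</th><th>Country</th></tr></thead>
  <tbody>
    <tr><td>Acme Corp</td><td>Jane Doe</td><td>Germany</td></tr>
    <tr><td>Globex</td><td>John Roe</td><td>Mexico</td></tr>
  </tbody>
  <tfoot><tr><td colspan="3">2 companies</td></tr></tfoot>
</table>`

// Row holds the text of one <tr>: header cells (<th>) and data cells (<td>).
type Row struct {
	Headers []string `xml:"th"`
	Cells   []string `xml:"td"`
}

// Table mirrors the caption and the thead/tbody/tfoot sections.
type Table struct {
	Caption string `xml:"caption"`
	Head    []Row  `xml:"thead>tr"`
	Body    []Row  `xml:"tbody>tr"`
	Foot    []Row  `xml:"tfoot>tr"`
}

// parseTable decodes the XML string into a Table.
func parseTable(src string) (Table, error) {
	var tbl Table
	err := xml.NewDecoder(strings.NewReader(src)).Decode(&tbl)
	return tbl, err
}

func main() {
	tbl, err := parseTable(sampleTable)
	if err != nil {
		panic(err)
	}
	fmt.Println("caption:", tbl.Caption)
	fmt.Println("header:", strings.Join(tbl.Head[0].Headers, " | "))
	for _, r := range tbl.Body {
		fmt.Println("row:", strings.Join(r.Cells, " | "))
	}
	fmt.Println("footer:", tbl.Foot[0].Cells[0])
}
```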

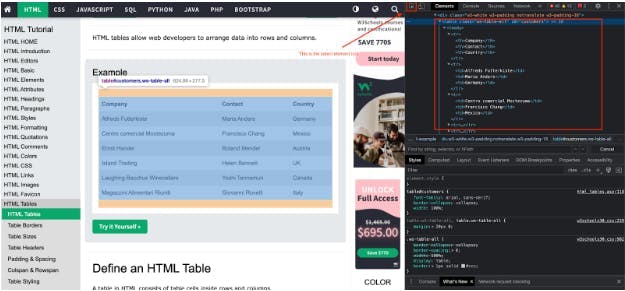

For a better understanding of this concept, let’s see what an HTML table looks like:

At first glance it looks like an ordinary table, and none of the elements described above are visible. That does not mean they are missing: the browser has already parsed the markup and rendered it for us. To see the underlying HTML structure, you need to go one step deeper and use the browser's developer tools: right-click the page, choose Inspect, click the select element tool, and then click the element whose structure you want to see (the table, in this case). The developer tools then highlight the table's markup, where you can expand the `<table>` element to see the rows and cells nested inside it.

HTML tables are commonly used to present data in a structured, tabular format, such as for tabulating results or displaying the contents of a database. They can be found on a wide variety of websites and are an important element to consider when scraping data from the web.

Setting up

Before we start scraping, we need to set up our Golang environment and install the necessary dependencies. Make sure you have Golang installed and configured on your system, and then create a new project directory and initialize a `go.mod` file:

```shell
$ mkdir scraping-project
$ cd scraping-project
$ go mod init <NAME-OF-YOUR-PROJECT>
$ touch main.go
```

Next, we need packages for making HTTP requests and parsing HTML. There are several options available, but for this article we will use the `net/http` package for HTTP and the `golang.org/x/net/html` package for parsing HTML (goquery, which we add below, is built on top of this parser). `net/http` is part of Go's standard library, so there is nothing to install for it; `golang.org/x/net/html` can be added to the module with:

```shell
$ go get golang.org/x/net/html
```

Now that our environment is set up, we are ready to start building our HTML table scraper using Golang.

Let’s start scraping

The first step in building a scraper is to send an HTTP request to the web page that contains the HTML table we want to scrape. We can use the `http.Get` function from the `net/http` package to send a GET request and retrieve the HTML content:

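```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
)

func main() {
	// Send a GET request to the page that contains the table
	resp, err := http.Get("https://www.w3schools.com/html/html_tables.asp")
	if err != nil {
		log.Fatal(err)
	}
	// Close the body when main returns so the connection is released
	defer resp.Body.Close()

	// Read the response body and convert it to a string
	// (io.ReadAll replaces ioutil.ReadAll, deprecated since Go 1.16)
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		log.Fatal(err)
	}

	html := string(body)
	fmt.Println(html)
}
```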
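The program above treats every response as a success: `http.Get` only returns an error for transport-level failures, so a 404 or 500 error page would be printed as if it were the table. A slightly more defensive sketch builds the request with `http.NewRequest`, sets a `User-Agent` header (the value here is a hypothetical placeholder), uses a client with a timeout, and checks the status code. A local `httptest` server stands in for the real site so the example runs offline.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// demoBody is what the stand-in server returns for /table.
const demoBody = "<table><tr><td>ok</td></tr></table>"

// fetchHTML downloads a page and returns its body as a string,
// failing on any status other than 200 OK.
func fetchHTML(client *http.Client, url string) (string, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return "", err
	}
	// Placeholder value: set whatever identifies your scraper.
	req.Header.Set("User-Agent", "my-table-scraper/0.1")

	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()

	// A 404 or 500 is still a normal response, so check it explicitly.
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("GET %s: unexpected status %s", url, resp.Status)
	}

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

// newDemoServer serves demoBody on /table and 404 everywhere else.
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/table" {
			http.NotFound(w, r)
			return
		}
		fmt.Fprint(w, demoBody)
	}))
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	client := &http.Client{Timeout: 10 * time.Second}
	page, err := fetchHTML(client, srv.URL+"/table")
	fmt.Println(page, err)

	_, err = fetchHTML(client, srv.URL+"/missing")
	fmt.Println(err)
}
```

Against the real site you would pass the w3schools URL instead of the test server's address.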

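Next, we can use the `goquery.NewDocumentFromReader` function from the goquery package to parse the HTML content and extract the data we need. As with any other third-party Go package, you need to install it first:

```shell
$ go get github.com/PuerkitoBio/goquery
```

Then add `github.com/PuerkitoBio/goquery` to the imports and parse the page. Because a response body can only be read once, the following code replaces the `ReadAll` call, its import, and the printing of the raw HTML from the previous program instead of being appended after them:

```go
// Parse the response body into a goquery document
doc, err := goquery.NewDocumentFromReader(resp.Body)
if err != nil {
	log.Fatal(err)
}
```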

With the document parsed, we can use goquery's `Find` method, which takes a CSS selector and returns every matching element, to locate the elements we are looking for, in this case tables. Combined with `Each`, which calls a function for every match, it can be used as follows:

doc.Find("table").Each(func(i int, sel * goquery.Selection) {

// For sake of simplicity taking the first table of the page

if i == 0 {

// Looping through headers

headers: = sel.Find("th").Each(func(_ int, sel * goquery.Selection) {

if sel != nil {

fmt.Print(sel.Text())

fmt.Print(" ")

}

})

fmt.Println()

// Looping through cells

sel.Find("td").Each(func(index int, sel * goquery.Selection) {

if sel != nil {

fmt.Print(sel.Text())

fmt.Print(" ")

}

// Printing columns nicely

if (index + 1) % headers.Size() == 0 {

fmt.Println()

}

})

}

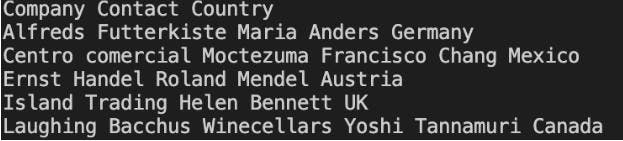

})That’s it, you are able now to scrape the table using Golang and you should be able to see it on the screen like this:

As you may notice, this output is hard to read. The good news is that you can do better and display the data in an easy-to-read tabular format. This is a perfect job for the tablewriter package. The code below uses the `SetHeader` method from its v0.0.x releases, so pin that version when installing it:

```shell
$ go get github.com/olekukonko/tablewriter@v0.0.5
```

Before passing the data to tablewriter, we need a few adjustments: define a struct for each row, collect the rows into a slice, and set the table headers. It should look something like this:

```go
package main

import (
	"log"
	"net/http"
	"os"
	"strings"

	"github.com/PuerkitoBio/goquery"
	"github.com/olekukonko/tablewriter"
)

// Company holds one row of the first table on the page
type Company struct {
	Company string
	Contact string
	Country string
}

func main() {
	resp, err := http.Get("https://www.w3schools.com/html/html_tables.asp")
	if err != nil {
		log.Fatal(err)
	}
	defer resp.Body.Close()

	// Parse the response body into a goquery document
	doc, err := goquery.NewDocumentFromReader(resp.Body)
	if err != nil {
		log.Fatal(err)
	}

	var companies []Company
	doc.Find("table").Each(func(i int, sel *goquery.Selection) {
		// Only handle the first table on the page
		if i != 0 {
			return
		}
		var e Company
		sel.Find("td").Each(func(index int, td *goquery.Selection) {
			text := strings.TrimSpace(td.Text())
			// The table has three columns, so the cell index modulo 3
			// tells us which field the cell belongs to
			switch index % 3 {
			case 0:
				e.Company = text
			case 1:
				e.Contact = text
			case 2:
				e.Country = text
				// The third cell completes a row: add it to our slice
				companies = append(companies, e)
			}
		})
	})

	table := tablewriter.NewWriter(os.Stdout)
	// Setting our headers
	table.SetHeader([]string{"Company", "Contact", "Country"})
	for _, c := range companies {
		table.Append([]string{c.Company, c.Contact, c.Country})
	}
	table.Render()
}
```

You should now see the data displayed as a neatly aligned table, with one row per company.

At this point, you have built a scraper in Golang that fetches a web page, stores the table data in a slice of structs, and displays it neatly. You can adapt the code to scrape tables from other websites, but keep in mind that not every site is this easy to scrape. Many use protection measures designed to prevent scraping, such as CAPTCHAs and IP blocking. Fortunately, third-party services such as WebScrapingAPI offer IP rotation and CAPTCHA bypass, enabling you to scrape those targets.
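If you would rather not add a dependency just for aligned output, the standard library's `text/tabwriter` package can produce a plainer, borderless version of the same table. A minimal sketch, reusing the shape of the `Company` struct from the program above with made-up sample rows:

```go
package main

import (
	"fmt"
	"strings"
	"text/tabwriter"
)

// Company has the same fields as in the tablewriter program.
type Company struct {
	Company string
	Contact string
	Country string
}

// renderCompanies lays the rows out in left-aligned columns separated
// by at least two spaces and returns the result as a string.
func renderCompanies(companies []Company) (string, error) {
	var sb strings.Builder
	// minwidth 0, tabwidth 0, padding 2, pad with spaces, no flags
	w := tabwriter.NewWriter(&sb, 0, 0, 2, ' ', 0)
	fmt.Fprintln(w, "COMPANY\tCONTACT\tCOUNTRY")
	for _, c := range companies {
		fmt.Fprintf(w, "%s\t%s\t%s\n", c.Company, c.Contact, c.Country)
	}
	// Column widths are only known once everything is buffered,
	// so nothing reaches sb until Flush is called.
	if err := w.Flush(); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := renderCompanies([]Company{
		{"Acme Corp", "Jane Doe", "Germany"},
		{"Globex", "John Roe", "Mexico"},
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(out)
}
```

Cells are separated with `\t`; the text after the last tab on each line is printed as-is, so the final column needs no padding.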

Diving deeper

While the technique we have described so far is sufficient for simple HTML tables, there are several ways in which it can be improved.

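One potential issue is that the HTML table structure may not be consistent across web pages. For example, a table may have a different number of columns, or the data may be nested in different HTML elements. To handle these cases, you can use more precise CSS selectors (goquery's `Find` accepts full CSS selectors, not just tag names) or XPath expressions (which require a separate library, since goquery only supports CSS selectors) to locate the data you want to extract, and avoid hard-coding assumptions such as exactly three cells per row.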
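One way to cope with tables whose column count or order varies is to read the header row first and key each cell by its header name, instead of relying on a fixed `index%3` layout. The sketch below uses only the standard library and assumes the header texts and each row's cell texts have already been extracted into string slices (for example by iterating over `tr` elements with goquery); the function name `rowsToRecords` is hypothetical.

```go
package main

import "fmt"

// rowsToRecords pairs each cell with the header in the same position.
// Missing cells become empty strings; extra cells beyond the header row
// are ignored. colspan/rowspan are not handled.
func rowsToRecords(headers []string, rows [][]string) []map[string]string {
	records := make([]map[string]string, 0, len(rows))
	for _, row := range rows {
		rec := make(map[string]string, len(headers))
		for i, h := range headers {
			if i < len(row) {
				rec[h] = row[i]
			} else {
				rec[h] = ""
			}
		}
		records = append(records, rec)
	}
	return records
}

func main() {
	headers := []string{"Company", "Contact", "Country"}
	rows := [][]string{
		{"Acme Corp", "Jane Doe", "Germany"},
		{"Globex", "John Roe"}, // short row, e.g. a missing cell
	}
	for _, r := range rowsToRecords(headers, rows) {
		fmt.Printf("%s | %q\n", r["Company"], r["Country"])
	}
}
```

Because fields are looked up by name, reordering the columns on the page only changes the header slice, not the code that reads the records.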

Another issue is that many web pages use AJAX or other client-side techniques to load data after the initial page load. Since `http.Get` only returns the HTML sent by the server and does not run any JavaScript, the table you scrape may be incomplete or even empty. To scrape these pages, you may need a headless browser, which executes JavaScript and renders the page just like a regular web browser. A good alternative is to use our scraper, which can return the data after JavaScript has rendered on the page. You can learn more about this in our docs.
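Before reaching for a headless browser, it can be worth checking the Network tab in the developer tools: pages that fill a table via AJAX often fetch the rows as JSON, and that endpoint can sometimes be called directly with `net/http` and `encoding/json`. This is a different technique from the headless-browser approach described above. In the sketch, the endpoint path `/api/companies` and the JSON field names are hypothetical, and a local `httptest` server stands in for the real site.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Company mirrors one table row. The JSON field names are hypothetical;
// copy the real ones from the response shown in the developer tools.
type Company struct {
	Company string `json:"company"`
	Contact string `json:"contact"`
	Country string `json:"country"`
}

// fetchCompanies calls the JSON endpoint directly instead of scraping
// the rendered table, and decodes the array it returns.
func fetchCompanies(client *http.Client, url string) ([]Company, error) {
	resp, err := client.Get(url)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("GET %s: %s", url, resp.Status)
	}
	var companies []Company
	if err := json.NewDecoder(resp.Body).Decode(&companies); err != nil {
		return nil, err
	}
	return companies, nil
}

// newDemoServer stands in for the site's data endpoint.
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `[{"company":"Acme Corp","contact":"Jane Doe","country":"Germany"}]`)
	}))
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	companies, err := fetchCompanies(srv.Client(), srv.URL+"/api/companies")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", companies)
}
```

Such endpoints are undocumented and may require the same cookies or headers the page sends, so this does not replace a headless browser in every case.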

Finally, it's important to consider the performance and scalability of your scraper. If you are scraping large tables or many pages, you may want concurrency to fetch pages in parallel and rate limiting to avoid overloading the target site or getting blocked.

Summary

I hope you found this resource a good starting point for scraping HTML tables with Golang. We walked through the process of scraping data from an HTML table using the Go programming language: retrieving the HTML content of a web page, printing it on the screen, and displaying it in a human-readable tabular format. We also discussed some of the challenges you may encounter when scraping HTML tables, including inconsistent table structures, client-side data loading, and performance and scalability issues.

While it is possible to build your own scraper using the techniques described in this article, it is often more efficient and reliable to use a professional scraping service. These services have the infrastructure, expertise, and security measures in place to handle large volumes of data and complex scraping tasks, and can often provide data in a more structured and convenient format such as CSV or JSON.
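For reference, if you do keep your own scraper, writing the scraped rows out as CSV only needs the standard library's `encoding/csv` package. A minimal sketch, assuming the same `Company` struct as in the tablewriter program and made-up sample rows; the helper name `companiesToCSV` is hypothetical.

```go
package main

import (
	"encoding/csv"
	"fmt"
	"strings"
)

// Company has the same fields as in the tablewriter program.
type Company struct {
	Company string
	Contact string
	Country string
}

// companiesToCSV writes a header row followed by one record per company.
// encoding/csv quotes fields that contain commas, quotes or newlines.
func companiesToCSV(companies []Company) (string, error) {
	var sb strings.Builder
	w := csv.NewWriter(&sb)
	if err := w.Write([]string{"Company", "Contact", "Country"}); err != nil {
		return "", err
	}
	for _, c := range companies {
		if err := w.Write([]string{c.Company, c.Contact, c.Country}); err != nil {
			return "", err
		}
	}
	// Flush pushes buffered records into sb; Error reports any failure.
	w.Flush()
	if err := w.Error(); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := companiesToCSV([]Company{
		{"Acme Corp", "Jane Doe", "Germany"},
		{"Globex, Inc.", "John Roe", "Mexico"},
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(out)
}
```

To save to disk instead, pass a file opened with `os.Create` to `csv.NewWriter` in place of the `strings.Builder`.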

In summary, scraping HTML tables can be a useful way to extract data from the web, but it is important to carefully consider the trade-offs between building your own scraper and using a professional service.
