Web Scraping With Scrapy: The Easy Way

Mihai Maxim on Jan 30 2023


Scrapy is a powerful Python library for extracting data from websites. It's fast, efficient, and easy to use – trust me, I've been there. Whether you're a data scientist, a developer, or someone who loves to play with data, Scrapy has something to offer you. And best of all, it's free and open-source.

Scrapy projects come with a file structure that helps you organize your code and data. It makes it easier to build and maintain web scrapers, so it's definitely worth considering if you're planning to do any serious web scraping. It's like having a helpful assistant (albeit a virtual one) by your side as you embark on your data extraction journey.

What we’re going to build

When you're learning a new skill, it's one thing to read about it and another thing to actually do it. That's why we've decided to build a scraper together as we go through this guide. It's the best way to get a hands-on understanding of how web scraping with Scrapy works.

So what will we be building, exactly? We’ll build a scraper that scrapes word definitions from the Urban Dictionary website. It's a fun target for scraping and will help make the learning process more enjoyable. Our scraper will be simple – it will return the definitions for various words found on the Urban Dictionary website. We'll be using Scrapy's built-in support for selecting and extracting data from HTML documents to pull out the definitions that we need.

So let's get started! In the next section, we'll go over the prerequisites that you'll need to follow along with this tutorial. See you there!

Prerequisites

Before we dive into building our scraper, there are a few things that you'll need to set up. In this section, we'll be covering how to install Scrapy and set up a virtual environment for our project. The Scrapy documentation suggests installing Scrapy in a dedicated virtual environment. By doing so, you will avoid any conflicts with your system packages.

I'm running Scrapy on Ubuntu 22.04.1 WSL (Windows Subsystem for Linux), so I'll be configuring a virtual environment for my machine.

I encourage you to go through the "Understanding the folder structure" chapter to fully understand the tools that we are working with. Also, have a look at “The Scrapy Shell” chapter; it will make your development experience a lot easier.

Setting up a Python virtual environment

To set up a virtual environment for Python in Ubuntu, you can use the mkvirtualenv command. First, make sure that you have virtualenv and virtualenvwrapper installed:

$ sudo apt-get install virtualenv virtualenvwrapper

Add these lines at the end of your .bashrc file:

export WORKON_HOME=$HOME/.virtualenvs

export PROJECT_HOME=$HOME/Deve

export VIRTUALENVWRAPPER_PYTHON='/usr/bin/python3'

source /usr/local/bin/virtualenvwrapper.sh

I used Vim to edit the file, but you can choose whatever editor you like:

vim ~/.bashrc

# Press i to enter insert mode, then use the down arrow to scroll to the bottom of the file.

# Paste the lines at the end of the file.

# Press Escape to exit insert mode, then type :wq and press Enter to save the changes and exit Vim.

Then create a new virtual environment with mkvirtualenv:

$ mkvirtualenv scrapy_env

Now you should see (scrapy_env) prepended to your terminal prompt.

To exit the virtual environment, type $ deactivate

To return to the scrapy_env virtual environment, type $ workon scrapy_env

Installing Scrapy

You can install Scrapy with the pip package manager:

$ pip install scrapy

This will install the latest version of Scrapy.
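If you want to confirm that the install landed in the active virtual environment, a quick check along these lines can help. It is only a sketch that relies on the standard library's importlib.metadata; the helper name installed_version is made up for this example.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Prints the Scrapy version when run inside scrapy_env, or None otherwise
print(installed_version("scrapy"))
```

If this prints None while scrapy_env is active, the package most likely went into a different Python environment.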

You can create a new project with the scrapy startproject command:

$ scrapy startproject myproject

This will initialize a new Scrapy project called "myproject". It should contain the default project structure.

Understanding the folder structure

This is the default project structure:

myproject
├── myproject
│   ├── __init__.py
│   ├── items.py
│   ├── middlewares.py
│   ├── pipelines.py
│   ├── settings.py
│   └── spiders
│       └── __init__.py
└── scrapy.cfg

items.py

items.py is a model for the extracted data. This model will be used to store the data that you extract from the website.

Example:

import scrapy


class Product(scrapy.Item):
    name = scrapy.Field()
    price = scrapy.Field()
    description = scrapy.Field()

Here we defined an Item called Product. It can be used by a Spider (see /spiders) to store information about the name, price and description of a product.
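Scrapy's documentation also lists plain dicts and dataclasses among the supported item types, so the same model could be sketched without subclassing scrapy.Item. The snippet below is a minimal illustration of that alternative, not the approach used in the rest of this guide; the field types and sample values are assumptions.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class Product:
    # Same three fields as the scrapy.Item version; the types are assumed
    name: Optional[str] = None
    price: Optional[str] = None
    description: Optional[str] = None


product = Product(name="Mug", price="9.99")
print(asdict(product))
```

Fields that were never set simply stay None, which makes missing data easy to spot later on.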

/spiders

/spiders is a folder containing Spider classes. In Scrapy, Spiders are classes that define how a website should be scraped.

Example:

import scrapy

from myproject.items import Product


class MySpider(scrapy.Spider):
    name = 'myspider'
    start_urls = ['<example_website_url>']

    def parse(self, response):
        # Extract the data for each product
        for product_div in response.css('div.product'):
            product = Product()
            product['name'] = product_div.css('h3.name::text').get()
            product['price'] = product_div.css('span.price::text').get()
            product['description'] = product_div.css('p.description::text').get()
            yield product

The “spider” goes through the start_urls, extracts the name, price and description of all the products found on the pages (using css selectors) and stores the data in the Product Item (see items.py). It then “yields” these items, which causes Scrapy to pass them to the next component in the pipeline (see pipelines.py).

Yield is a keyword in Python that allows a function to return a value without ending the function. Instead, it produces the value and suspends the function's execution until the next value is requested.

For example:

def count_up_to(limit):
    count = 1
    while count <= limit:
        yield count
        count += 1


for number in count_up_to(5):
    print(number)

# prints 1 2 3 4 5 (each on a new line)
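To watch the function pause and resume, you can drive the generator by hand with next(). This is a small illustration in plain Python; the variable names gen, first, second and exhausted are made up for the example.

```python
def count_up_to(limit):
    count = 1
    while count <= limit:
        yield count
        count += 1


gen = count_up_to(2)

first = next(gen)   # runs the function body until the first yield
second = next(gen)  # resumes right after that yield and loops once more
print(first, second)

# A third call finds nothing left to yield, so the generator signals the end
try:
    next(gen)
    exhausted = False
except StopIteration:
    exhausted = True
print(exhausted)
```

A for loop does the same thing behind the scenes: it keeps calling next() and stops quietly when StopIteration is raised.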

pipelines.py

Pipelines are responsible for processing the items (see items.py and /spiders) that are extracted by the spiders. You can use them to clean up the HTML, validate the data, and export it to a custom format or save it to a database.

Example:

import pymongo


class MongoPipeline:
    def __init__(self):
        # Connect to a local MongoDB instance and pick the target collections
        self.conn = pymongo.MongoClient('localhost', 27017)
        self.db = self.conn['mydatabase']
        self.product_collection = self.db['products']
        self.other_collection = self.db['other']

    def process_item(self, item, spider):
        if spider.name == 'product_spider':
            # Insert the item into the product collection
            self.product_collection.insert_one(dict(item))
        elif spider.name == 'other_spider':
            # Insert the item into the other collection
            self.other_collection.insert_one(dict(item))
        return item

We created a Pipeline named MongoPipeline. It connects to two MongoDB collections (product_collection and other_collection). The pipeline receives items (see items.py) from spiders (see /spiders) and processes them in the process_item function. In this example, the process_item function adds the items to their designated collections.
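If you don't have MongoDB running, the routing idea can be tried with plain lists standing in for the collections. The sketch below is a stand-in built on assumptions: InMemoryPipeline and the SimpleNamespace objects used as fake spiders are hypothetical, and only the process_item(item, spider) signature mirrors what Scrapy calls.

```python
from types import SimpleNamespace


class InMemoryPipeline:
    def __init__(self):
        # Lists stand in for the two MongoDB collections
        self.product_collection = []
        self.other_collection = []

    def process_item(self, item, spider):
        # Route the item by the name of the spider that produced it
        if spider.name == 'product_spider':
            self.product_collection.append(dict(item))
        elif spider.name == 'other_spider':
            self.other_collection.append(dict(item))
        # Returning the item hands it to the next pipeline
        return item


pipeline = InMemoryPipeline()
pipeline.process_item({'name': 'Mug'}, SimpleNamespace(name='product_spider'))
pipeline.process_item({'title': 'Misc'}, SimpleNamespace(name='other_spider'))
print(pipeline.product_collection)
print(pipeline.other_collection)
```

Items from a spider with any other name pass through untouched, because process_item still returns them.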

settings.py

settings.py stores a variety of settings that control the behavior of the Scrapy project, such as the pipelines, middlewares, and extensions that should be used, as well as settings related to how the project should handle requests and responses.

For example, you can use it to set the execution order of the pipelines (see pipelines.py):

ITEM_PIPELINES = {
    'myproject.pipelines.MongoPipeline': 300,
    'myproject.pipelines.JsonPipeline': 302,
}

# MongoPipeline will execute before JsonPipeline, because it has a lower order number (300)

Or set an export container:

FEEDS = {
    'file:///tmp/items.json': {'format': 'json'},
}

# each key in FEEDS is the output URI; this will save the scraped data to /tmp/items.json

scrapy.cfg

scrapy.cfg is the project's top-level configuration file. It tells Scrapy which settings module to use and holds the deployment options.

[settings]

default = [name of the project].settings

[deploy]

#url = http://localhost:6800/

project = [name of the project]

middlewares.py

There are two types of middlewares in Scrapy: downloader middlewares and spider middlewares.

Downloader middlewares are components that can be used to modify requests and responses, handle errors, and implement custom download logic. They sit between the engine and the downloader.

Spider middlewares are components that can be used to process the responses that go into a spider and the items and requests that come out of it. They sit between the engine and the spider.
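A downloader middleware is an ordinary Python class, and Scrapy calls its process_request method for each outgoing request. The sketch below shows the shape of such a class. HeaderMiddleware, the X-Example header, and the SimpleNamespace object standing in for a real scrapy.Request are all hypothetical and only here so the snippet runs on its own.

```python
from types import SimpleNamespace


class HeaderMiddleware:
    def process_request(self, request, spider):
        # Modify the outgoing request before it reaches the downloader
        request.headers['X-Example'] = 'demo'
        # Returning None tells Scrapy to keep processing the request
        return None


# Stand-in for a scrapy.Request: just enough to hold headers
fake_request = SimpleNamespace(headers={})
result = HeaderMiddleware().process_request(fake_request, spider=None)
print(fake_request.headers)
```

In a real project the class would live in middlewares.py and would only run once it is enabled in settings.py.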

The Scrapy Shell

Before we embark on the exciting journey of implementing our Urban Dictionary scraper, we should first familiarize ourselves with the Scrapy Shell. This interactive console allows us to test out our scraping logic and see the results in real-time. It's like a virtual sandbox where we can play around and fine-tune our approach before unleashing our Spider onto the web. Trust me, it'll save you a lot of time and headache in the long run. So let's have some fun and get to know the Scrapy Shell.

Opening the shell

To open the Scrapy Shell, you will first need to navigate to the directory of your Scrapy project in your terminal. Then, you can simply run the following command:

scrapy shell

This will open the Scrapy Shell and you will be presented with a prompt where you can enter and execute Scrapy commands. You can also pass a URL as an argument to the shell command to directly scrape a web page, like so:

scrapy shell <url>

For example:

scrapy shell 'https://www.urbandictionary.com/define.php?term=YOLO'

This will fetch the web page that contains the Urban Dictionary definitions of the word YOLO and make its HTML available in the response object.

Alternatively, once you enter the shell, you can use the fetch command to fetch a web page.

fetch('https://www.urbandictionary.com/define.php?term=YOLO')

You can also start the shell with the nolog parameter to make it not display logs:

scrapy shell --nolog

Working with the shell

In this example, I fetched the “https://www.urbandictionary.com/define.php?term=YOLO” URL and saved the html to the test_output.html file.

(scrapy_env) mihai@DESKTOP-0RN92KH:~/myproject$ scrapy shell --nolog
[s] Available Scrapy objects:
[s]   scrapy     scrapy module (contains scrapy.Request, scrapy.Selector, etc)
[s]   crawler    <scrapy.crawler.Crawler object at 0x7f1eef80f6a0>
[s]   item       {}
[s]   settings   <scrapy.settings.Settings object at 0x7f1eef80f4c0>
[s] Useful shortcuts:
[s]   fetch(url[, redirect=True]) Fetch URL and update local objects (by default, redirects are followed)
[s]   fetch(req)                  Fetch a scrapy.Request and update local objects
[s]   shelp()           Shell help (print this help)
[s]   view(response)    View response in a browser
>>> response  # response is empty
>>> fetch('https://www.urbandictionary.com/define.php?term=YOLO')
>>> response
<200 https://www.urbandictionary.com/define.php?term=Yolo>
>>> with open('test_output.html', 'w') as f:
...     f.write(response.text)
...
118260

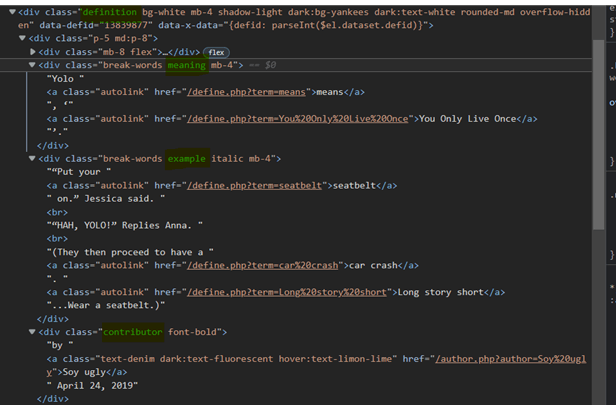

Now let’s inspect test_output.html and identify the selectors we would need in order to extract the data for our Urban Dictionary scraper.

We can observe that:

- Every word definition container has the “definition” class.

- The meaning of the word is found inside the div with the class “meaning”.

- Examples for the word are found inside the div with the class “example”.

- Information about the post author and date is found within the div with the class “contributor”.

Now let’s test some selectors in the Scrapy Shell:

To get references to every definition container, we can use CSS or XPath selectors:

You can learn more about XPath selectors here: https://www.webscrapingapi.com/the-ultimate-xpath-cheat-sheet

definitions = response.css('div.definition')
definitions = response.xpath('//div[contains(@class,"definition")]')

We should extract the meaning, example and post information from every definition container. Let’s test some selectors with the first container:

>>> first_def = definitions[0]
>>> meaning = first_def.css('div.meaning').xpath(".//text()").extract()
>>> meaning
['Yolo ', 'means', ', ‘', 'You Only Live Once', '’.']
>>> meaning = "".join(meaning)
>>> meaning
'Yolo means, ‘You Only Live Once’.'
>>> example = first_def.css('div.example').xpath(".//text()").extract()
>>> example = "".join(example)
>>> example
'"Put your seatbelt on." Jessica said.\r"HAH, YOLO!" Replies Anna.\r(They then proceed to have a car crash. Long story short...Wear a seatbelt.)'
>>> post_data = first_def.css('div.contributor').xpath(".//text()").extract()
>>> post_data
['by ', 'Soy ugly', ' April 24, 2019']
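The contributor fragments can be turned into a clean author name and a real date object. This is one possible approach, assuming the list always has the three-part shape shown above and that dates follow the "Month day, year" pattern with English month names; parse_contributor is a hypothetical helper.

```python
from datetime import datetime


def parse_contributor(fragments):
    """Split ['by ', '<author>', ' <date>'] into (author, date)."""
    if len(fragments) < 3:
        # Unexpected shape: let the caller decide what to do
        return None, None
    author = fragments[1].strip()
    # '%B %d, %Y' matches strings such as 'April 24, 2019'
    date = datetime.strptime(fragments[2].strip(), '%B %d, %Y').date()
    return author, date


author, date = parse_contributor(['by ', 'Soy ugly', ' April 24, 2019'])
print(author, date.isoformat())
```

Checking the length first avoids an IndexError on entries where the contributor block is missing a piece.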

By using the Scrapy shell, we were able to quickly find a general selector that suits our needs.

definition.css('div.<meaning|example|contributor>').xpath(".//text()").extract()

# returns a list with all the text found inside the <meaning|example|contributor> div

ex: ['Yolo ', 'means', ', ‘', 'You Only Live Once', '’.']

To learn more about Scrapy selectors, check out the documentation: https://docs.scrapy.org/en/latest/topics/selectors.html

Implementing the Urban Dictionary scraper

Great job! Now that you've gotten the hang of using the Scrapy Shell and understand the inner workings of a Scrapy project, it's time to dive into the implementation of our Urban Dictionary scraper. By now, you should be feeling confident and ready to take on the task of extracting all those hilarious (and sometimes questionable) word definitions from the web. So without further ado, let's get started on building our scraper!

Defining an Item

First, we’ll implement an Item (see items.py):

import scrapy


class UrbanDictionaryItem(scrapy.Item):
    meaning = scrapy.Field()
    author = scrapy.Field()
    date = scrapy.Field()
    example = scrapy.Field()

This structure will hold the scraped data from the Spider.

Defining a Spider

This is how we’ll define our Spider (see /spiders):

import scrapy

from ..items import UrbanDictionaryItem


class UrbanDictionarySpider(scrapy.Spider):
    name = 'urban_dictionary'
    start_urls = ['https://www.urbandictionary.com/define.php?term=Yolo']

    def parse(self, response):
        definitions = response.css('div.definition')
        for definition in definitions:
            item = UrbanDictionaryItem()
            item['meaning'] = definition.css('div.meaning').xpath(".//text()").extract()
            item['example'] = definition.css('div.example').xpath(".//text()").extract()
            # The contributor text looks like ['by ', '<author>', ' <date>']
            author = definition.css('div.contributor').xpath(".//text()").extract()
            item['date'] = author[2]
            item['author'] = author[1]
            yield item
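Since the goal is to look up several words, start_urls can be generated rather than typed by hand. The sketch below builds the lookup URLs with the standard library; the word list and the build_urls helper are assumptions made for illustration, and the URL pattern is the one used above.

```python
from urllib.parse import urlencode

BASE_URL = 'https://www.urbandictionary.com/define.php'


def build_urls(words):
    # urlencode escapes spaces and other special characters in each term
    return [f"{BASE_URL}?{urlencode({'term': word})}" for word in words]


urls = build_urls(['Yolo', 'touch grass'])
for url in urls:
    print(url)
```

The resulting list could be assigned to the spider's start_urls attribute, and parse would then run once per word.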

To run the urban_dictionary spider, use the following command:

scrapy crawl urban_dictionary

# the results should appear in the console (most probably at the top of the logs)

Creating a Pipeline

At this point, the data is unsanitized.

{'author': 'Soy ugly',
 'date': ' April 24, 2019',
 'example': ['“Put your ',
             'seatbelt',
             ' on.” Jessica said.\n',
             '“HAH, YOLO!” Replies Anna.\n',
             '(They then proceed to have a ',
             'car crash',
             '. ',
             'Long story short',
             '...Wear a seatbelt.)'],
 'meaning': ['Yolo ', 'means', ', ‘', 'You Only Live Once', '’.']}

We want to modify the “example” and “meaning” fields to hold strings, not lists. To do that, we’ll write a Pipeline (see pipelines.py) that will transform the lists into strings by concatenating the fragments.

class SanitizePipeline:
    def process_item(self, item, spider):
        # Sanitize the 'meaning' field
        item['meaning'] = "".join(item['meaning'])
        # Sanitize the 'example' field
        item['example'] = "".join(item['example'])
        # Sanitize the 'date' field
        item['date'] = item['date'].strip()
        return item

# ex: ['Yolo ', 'means', ', ‘', 'You Only Live Once', '’.'] turns into 'Yolo means, ‘You Only Live Once’.'
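The joined strings can still contain \r or \n characters that came from the page markup. If you prefer single-line text, the join step could be extended along these lines. This is an optional sketch, not part of the pipeline above; clean_text is a hypothetical helper.

```python
import re


def clean_text(fragments):
    """Join text fragments and collapse runs of whitespace into one space."""
    joined = "".join(fragments)
    return re.sub(r'\s+', ' ', joined).strip()


print(clean_text(['“Put your ', 'seatbelt', ' on.” Jessica said.\n', '“HAH, YOLO!” Replies Anna.\n']))
```

Whether to flatten line breaks is a judgment call: it makes the JSON output tidier, but it also throws away the original line structure of the example.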

Enabling the Pipeline

After we define the Pipeline, we need to enable it. If we don’t, then our UrbanDictionaryItem objects won’t be sanitized. To do that, add it to your settings.py (see settings.py) file:

ITEM_PIPELINES = {
    'myproject.pipelines.SanitizePipeline': 1,
}

While you’re at it, you could also specify an output file for the scraped data to be placed in.

FEEDS = {
    'file:///tmp/items.json': {'format': 'json'},
}

# each key in FEEDS is the output URI; this will put the scraped data in /tmp/items.json

Optional: Implementing a Proxy Middleware

Web scraping can be a bit of a pain. One of the main issues we often run into is that many websites require JavaScript rendering in order to fully display their content. This can cause huge problems for us as web scrapers, since our tools often don't have the ability to execute JavaScript like a regular web browser does, which can lead to incomplete data being extracted. Worse, our IP can get banned from the website for making too many requests in a short period of time.

Our solution to this problem is WebScrapingApi. With our service, you can simply make requests to the API and it will handle all the heavy lifting for you. It will execute JavaScript, rotate proxies, and even handle CAPTCHAs, ensuring that you can scrape even the most stubborn of websites with ease.

A proxy middleware will forward every fetch request made by Scrapy to the proxy server. The proxy server will then make the request for us and then return the result.

First, we’ll define a ProxyMiddleware class inside the middlewares.py file.

import base64


class ProxyMiddleware:
    def process_request(self, request, spider):
        # Set the proxy for the request
        request.meta['proxy'] = "http://proxy.webscrapingapi.com:80"
        request.meta['verify'] = False

        # Set the proxy authentication for the request
        proxy_user_pass = "webscrapingapi.proxy_type=residential.render_js=1:<API_KEY>"
        encoded_user_pass = base64.b64encode(proxy_user_pass.encode()).decode()
        request.headers['Proxy-Authorization'] = f'Basic {encoded_user_pass}'

In this example, we used the WebScrapingApi proxy server.

webscrapingapi.proxy_type=residential.render_js=1 is the proxy authentication username, <API_KEY> the password.

You can get a free API_KEY by creating a new account at https://www.webscrapingapi.com/
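The Proxy-Authorization value is just the username:password pair encoded in Base64 and prefixed with the word Basic. The sketch below shows the encoding and how to reverse it for debugging; the credentials are placeholders and basic_auth_header is a hypothetical helper.

```python
import base64


def basic_auth_header(username, password):
    """Build the value of a Basic Proxy-Authorization header."""
    raw = f"{username}:{password}".encode()
    return "Basic " + base64.b64encode(raw).decode()


header = basic_auth_header("user", "secret")
print(header)

# Decoding the part after 'Basic ' recovers the original pair
decoded = base64.b64decode(header.split(" ", 1)[1]).decode()
print(decoded)
```

Base64 is an encoding, not encryption, so treat the header value with the same care as the API key itself.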

After we define the ProxyMiddleware, all we need to do is enable it in the DOWNLOADER_MIDDLEWARES setting in the settings.py file.

DOWNLOADER_MIDDLEWARES = {
    'myproject.middlewares.ProxyMiddleware': 1,
}

And that’s it. As you can see, web scraping with Scrapy can simplify a lot of our work.

Wrapping up

We did it! We built a scraper that can extract definitions from Urban Dictionary and we learned so much about Scrapy along the way. From creating custom items to utilizing middlewares and pipelines, we've proven how powerful and versatile web scraping with Scrapy can be. And the best part? There's still so much more to discover.

Congratulations on making it to the end of this journey with me. You should now feel confident in your ability to tackle any web scraping project that comes your way. Just remember, web scraping doesn't have to be daunting or overwhelming. With the right tools and knowledge, it can be a fun and rewarding experience. If you ever need a helping hand, don't hesitate to reach out to us here at https://www.webscrapingapi.com/. We know all about web scraping and are more than happy to assist you in any way we can.
