Top 7 PhantomJS Alternatives Every Developer Must Know

WebScrapingAPI on Oct 31, 2022

If you're getting into JavaScript coding, you have probably heard of PhantomJS. Although it is now obsolete, it was an OG in its time.

Introduced to the world in January 2011, PhantomJS quickly changed how developers worked with websites.

Ariya Hidayat created PhantomJS, and Vitaly Slobodin later became its developer and maintainer. Sadly, development effectively stopped in April 2017, when he decided to step down. He cited several reasons for his decision, which we'll cover later in this blog.

Now that PhantomJS is no longer maintained, you need to know about its alternatives. We'll get to those, too. First, though, you should understand what PhantomJS was all about.

What is PhantomJS?

PhantomJS was a headless browser generally used for web automation, i.e., automating manual tasks on the web.

Developers also used PhantomJS to run JavaScript on web pages and scrape website data. You could scrape most of the data that modern web scraping tools like WebScrapingAPI can.

Now, what is a headless browser?

A headless browser is a browser without a Graphical User Interface (GUI). Simply put, it is unlike the regular, windowed versions of Google Chrome, Safari, and Mozilla Firefox. You control it programmatically, without ever opening the page you want to work on in a visible window.

The reasons developers preferred headless browsers were:

- Speed.

- Less load on the system.

- Scraping data from websites.

- Unit testing.

Many people have wondered why PhantomJS was discontinued if it was this good. The answer lies in Vitaly Slobodin's email announcing his departure.

In that email, he mentions that Chrome is faster and more stable than PhantomJS. He also highlights how difficult it is to work on PhantomJS alone.

These are some of the key reasons he had to step down.

7 Fantastic PhantomJS Alternatives

Now that you know why PhantomJS died, it is time to learn about some of its alternatives, so you can keep using headless browsers. These tools have also developed a lot in the last five years and offer even more functionality.

Here is our list of 7 fantastic PhantomJS alternatives you can start using today:

- Headless Chrome

- Selenium

- CasperJS

- Zombie.js

- Browsersync

- HtmlUnit

- WebScrapingAPI

1. Headless Chrome

Headless Chrome is the number one alternative on our list because Vitaly Slobodin himself highlighted it.

Huge numbers of developers use this headless browser regularly, and it covers the features and capabilities that PhantomJS offered.

We all know that Google Chrome is at the forefront of web browsers. Many browsers, such as Opera, Vivaldi, and Google Chrome itself, are built on Chromium. For those who don't know, Chromium is the open-source browser project from Google that Chrome is based on.

Headless Chrome launched around the same time PhantomJS was discontinued. It first shipped as part of Chrome 59, and every version of Chrome since has Headless Chrome built in. At the time of writing, Chrome is past its 105th version, so Google has had plenty of time to experiment with and improve Headless Chrome.
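Because headless mode is built into Chrome itself, you can try it without any extra library. The Python sketch below assumes a Chrome or Chromium binary on your PATH named `google-chrome` (the name varies by platform, so treat it as an assumption) and uses Chrome's `--headless` and `--dump-dom` flags to print a page's DOM after its JavaScript has run. The helper names `build_chrome_command` and `dump_dom` are hypothetical.

```python
import shutil
import subprocess


def build_chrome_command(url: str, binary: str = "google-chrome") -> list[str]:
    """Build a command that prints the rendered DOM of `url` with headless Chrome.

    The default binary name is an assumption: it is often "google-chrome" or
    "chromium" on Linux, and a full path to the executable on other systems.
    """
    return [
        binary,
        "--headless",     # run Chrome without opening a window
        "--disable-gpu",  # historically recommended for headless runs
        "--dump-dom",     # print the DOM to stdout after scripts have run
        url,
    ]


def dump_dom(url: str, binary: str = "google-chrome", timeout: float = 30.0) -> str:
    """Launch headless Chrome and return the serialized DOM of `url`."""
    if shutil.which(binary) is None:
        raise FileNotFoundError(f"Chrome binary not found: {binary}")
    result = subprocess.run(
        build_chrome_command(url, binary),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise if Chrome exits with an error
    )
    return result.stdout
```

For example, `dump_dom("https://example.com")` returns the rendered HTML as a string. Building the argument list in its own function keeps the command easy to inspect and test without launching the browser.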

Pros:

- Supports lots of features.

- Uses less memory than a full, windowed browser.

- Debugging is straightforward with Chrome DevTools, which can attach to a headless session.

- Installation is relatively quick and easy.

- Better speed and stability.

- Large, active community.

- Regular updates.

Cons:

- Few to speak of. Headless Chrome is close to perfect, and many developers prefer it over the alternatives.

2. Selenium

Selenium was created back in 2004, almost two decades ago. It is similar to PhantomJS because it also automates web applications and helps test the various parts of a web page.

When you open the Selenium website, you see a green-and-white site with "Selenium automates browsers" written at the top. The website makes it clear from the start that the primary purpose of this tool is automation.

When you scroll down a bit, you see three ways Selenium can help you. They are:

- Browser-based regression automation.

- Creating bug reproduction and automation scripts.

- Running tests on multiple machines simultaneously.

Selenium covers these three purposes through three different projects: Selenium WebDriver, Selenium IDE, and Selenium Grid. Every developer will have different reasons for using a browser automation tool, and the website does an excellent job of highlighting them right at the top.

Mind you, Selenium comes with its pros and cons.
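Under the hood, Selenium WebDriver speaks the W3C WebDriver protocol: a client sends JSON over HTTP to a browser driver or to Selenium Grid. The sketch below uses only Python's standard library to show the "New Session" request that asks for a headless Chrome. It assumes a Grid or ChromeDriver listening at `http://localhost:4444` (Grid's usual default port); the helper names `new_session_payload` and `start_session` are hypothetical.

```python
import json
import urllib.request


def new_session_payload(headless: bool = True) -> dict:
    """Build a W3C WebDriver "New Session" body that requests Chrome."""
    args = ["--headless"] if headless else []
    return {
        "capabilities": {
            "alwaysMatch": {
                "browserName": "chrome",
                # ChromeDriver reads its vendor-specific options from this key.
                "goog:chromeOptions": {"args": args},
            }
        }
    }


def start_session(server: str = "http://localhost:4444") -> str:
    """POST the payload to a WebDriver server and return the new session id.

    Assumes a Selenium Grid or standalone ChromeDriver is reachable at `server`.
    """
    body = json.dumps(new_session_payload()).encode("utf-8")
    request = urllib.request.Request(
        f"{server}/session",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        # W3C servers wrap the result in a top-level "value" object.
        return json.load(response)["value"]["sessionId"]
```

In everyday use, Selenium's client libraries (such as the `selenium` package for Python) build and send this request for you; the raw payload just shows what WebDriver does behind the scenes.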

Pros:

- Automates browsers.

- Offers multiple services, each with its purpose.

- It's open source and actively maintained.

- Setting up is easy.

Cons:

- No dedicated support in case you need help.

- It doesn't support mobile applications.

3. CasperJS

CasperJS is a navigation scripting and testing utility that runs on top of a headless browser such as PhantomJS or SlimerJS. Its primary purpose is to navigate, script, and test web pages. CasperJS is generally used for UI testing, while other headless browsers are used for unit testing. It automates tasks such as filling out forms, clicking links, taking screenshots, and downloading resources.

Pros:

- High-level third-party integration

- Written in JavaScript.

- Learning how to use CasperJS is easy.

Cons:

- Not for unit testing.

- At times, screenshots are not accurate.

4. Zombie.js

Zombie.js is another headless browser known for its insane speed (at least, that's what its website says). It is a full-stack tool, so you can use it for both front-end and back-end testing. It runs on Node.js, so it fits naturally into JavaScript projects. Many developers prefer it because it is a lightweight framework that tests client-side code quickly.

Pros:

- Integration is easy, as it runs on Node.js.

- Adding it to your framework is also pretty easy.

- It is blazing fast.

- Lightweight. It puts a negligible load on your machine.

Cons:

- Can't take screenshots

- Documentation isn't complete.

- No support is available.

- It can't load many sites correctly.

5. Browsersync

Browsersync is a headless browser, but at the same time, it is not. Let me explain. You can use it entirely from the command line to test web pages or, if you want a GUI for assistance, through its browser-based UI. Browsersync gets more than 2 million downloads a month. That's a significant number, and if so many developers trust it, it must be doing something right. Big names like Google and Adobe also use Browsersync.

Pros:

- It is fast and free.

- URLs are saved.

- Option to choose between a Graphical User Interface (GUI) and a Command-Line Interface (CLI).

- It runs smoothly on Windows, macOS, and Linux.

- Open-source, so it is constantly updated.

- It does not need a browser plugin.

- Works flawlessly on desktop and mobile devices.

Cons:

- Setting up Browsersync on Windows can be a bit challenging.

6. HtmlUnit

Written by Mike Bowler and released under the Apache 2.0 license, HtmlUnit is a headless, or GUI-less, browser. It is written in Java and has good JavaScript support.

You can fill out forms, click links, and do almost anything else that is possible in a browser with it. The website says that HtmlUnit's JavaScript support is constantly improving, which is good news for developers.

It is also said to work well with complex AJAX libraries and supports the HTTP and HTTPS protocols.

Pros:

- Free and easy setup.

- Handles complex libraries effectively.

- Well suited to testing web applications.

- It can also retrieve information from websites.

- It also works on Android.

Cons:

- It offers limited features, so it is not a good option for people who want many features.

7. WebScrapingAPI

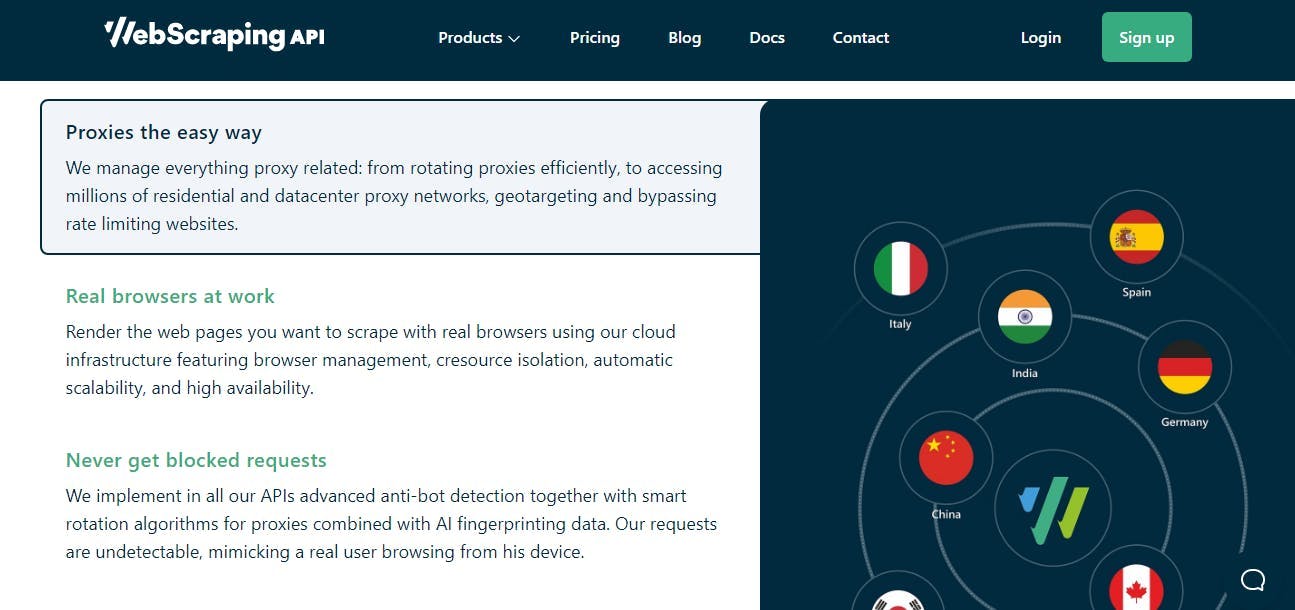

Most of the PhantomJS alternatives in today's blog can also extract data from websites. Although they do it at an average level, tools like WebScrapingAPI take everything to the next level.

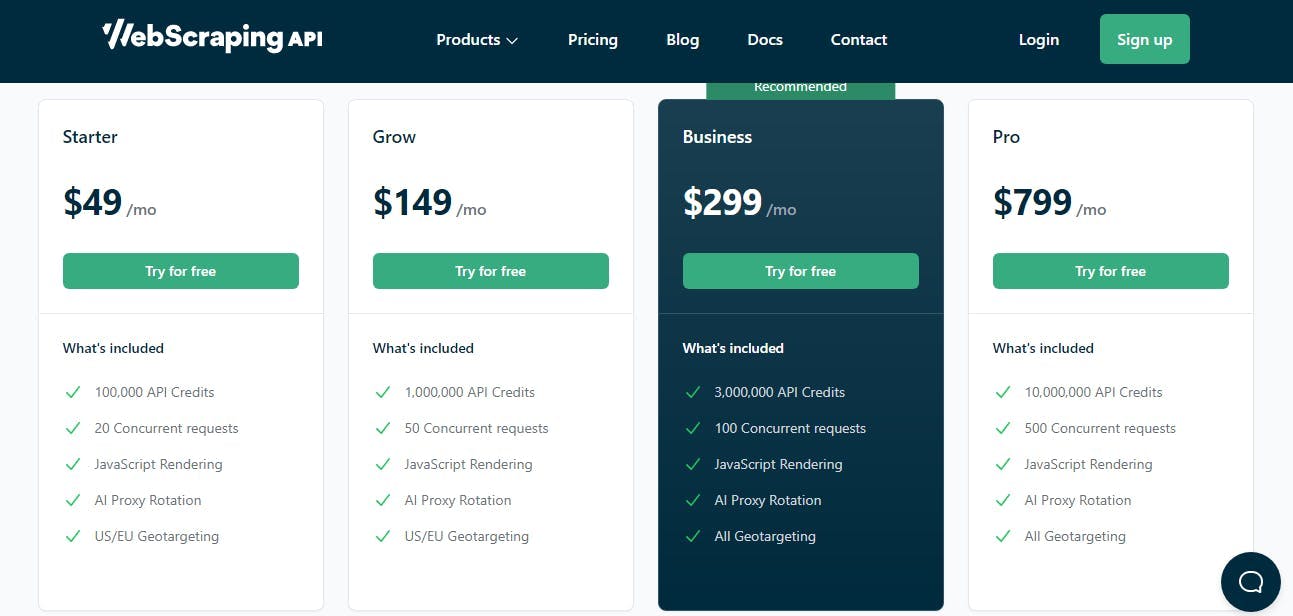

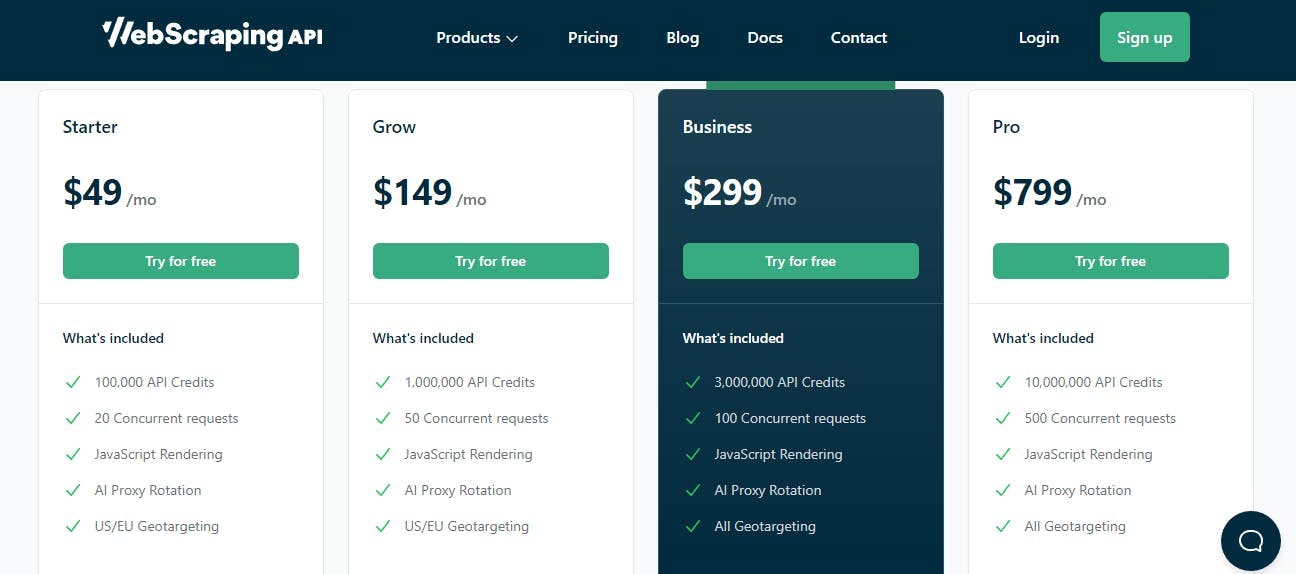

WebScrapingAPI is not just any web scraping tool. It is easily one of the best, as it offers so much for a small price of $49 per month. You can choose the pricing plan that gives you the best ROI.

Generally, the more you pay for a web scraping tool, the more features and API calls you get. Many of these tools differ only slightly in features, yet they charge almost double what WebScrapingAPI does.

More than 10,000 established companies rely on this tool to get everything done without distracting busy business owners from their primary goals. Deloitte, Perrigo, and InfraWare are just some of the names that choose WebScrapingAPI as their go-to tool for extracting data that adds value.

The way WebScrapingAPI works is simple. It collects the HTML of any web page through a simple API and presents it in an easy-to-understand way, because not everyone is an expert at deciphering complex data.

Many web scraping tools get the job done at first but then get blocked by the website. WebScrapingAPI takes care of this problem: IP blocks and CAPTCHAs become a thing of the past when this tool is at your disposal.
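Calling a scraping API like this usually boils down to a single HTTP GET request. The Python sketch below assumes an endpoint of `https://api.webscrapingapi.com/v1` that takes `api_key` and `url` query parameters; check the official documentation before relying on these names. The helpers `build_request_url` and `fetch_html` are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumed endpoint; confirm it against the official API documentation.
API_ENDPOINT = "https://api.webscrapingapi.com/v1"


def build_request_url(api_key: str, target_url: str) -> str:
    """Return the GET URL that asks the API to fetch `target_url`.

    The parameter names "api_key" and "url" are assumptions.
    """
    params = {"api_key": api_key, "url": target_url}
    # urlencode percent-encodes the target URL so its own ?, & and = survive.
    return f"{API_ENDPOINT}?{urllib.parse.urlencode(params)}"


def fetch_html(api_key: str, target_url: str, timeout: float = 60.0) -> str:
    """Fetch the raw HTML of `target_url` through the scraping API."""
    with urllib.request.urlopen(build_request_url(api_key, target_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

URL-encoding the target address matters: without it, any `?` or `&` in the page URL would be mistaken for the API's own query parameters.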

Pros:

- 99.99% uptime means you almost never have to wait to extract essential data from the website of your choice.

- Enterprise customers benefit significantly from geotargeting, with access to more than 195 locations.

- You get constant support from the WebScrapingAPI team, so you never have to worry about issues.

- Any business size can benefit from the four different plans.

Cons:

- We couldn't find a single disadvantage of using WebScrapingAPI.

WebScrapingAPI is our top alternative

Now that you've read the blog, you may still be unsure, because choosing among so many good options is not easy. But don't worry: we have picked the best option for you, so you don't have to spend your time and money finding it.

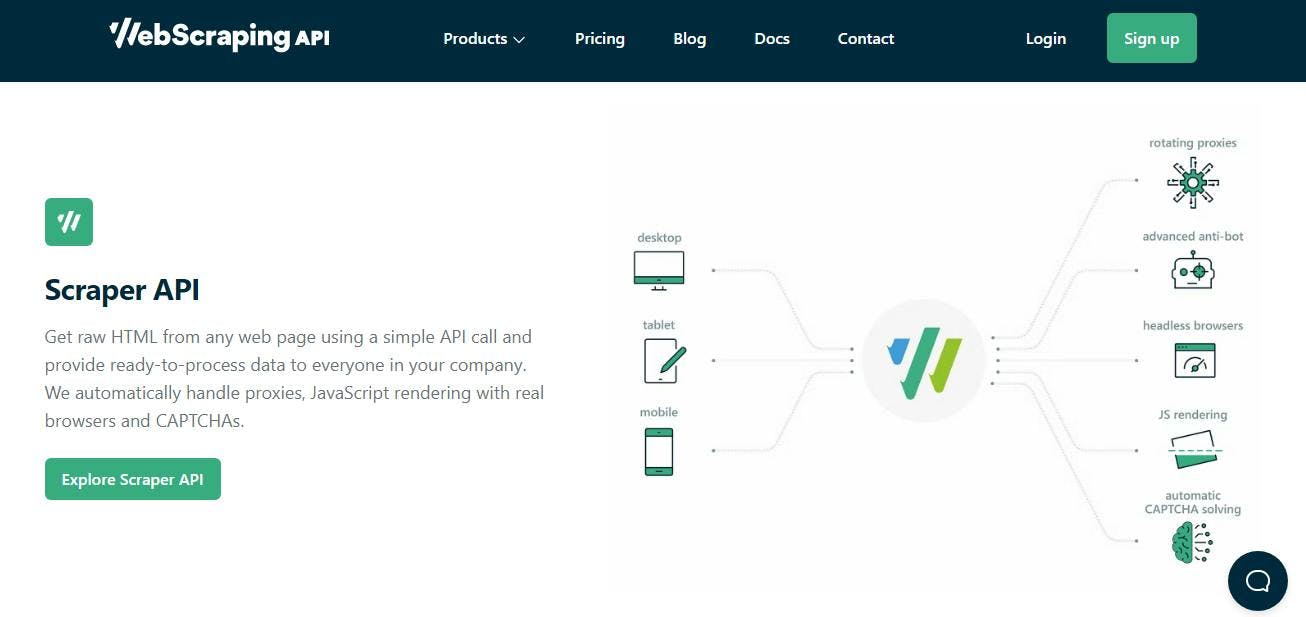

Products

- ScraperAPI

Our ScraperAPI tool can help you retrieve data from a web page without any fuss. You can quickly and easily obtain raw HTML from any online page using our user-friendly API.

Additionally, we automatically manage JavaScript rendering, CAPTCHAs, and proxies so you can concentrate on acquiring the data you require. ScraperAPI is your tool if you need to collect data for analysis or research.

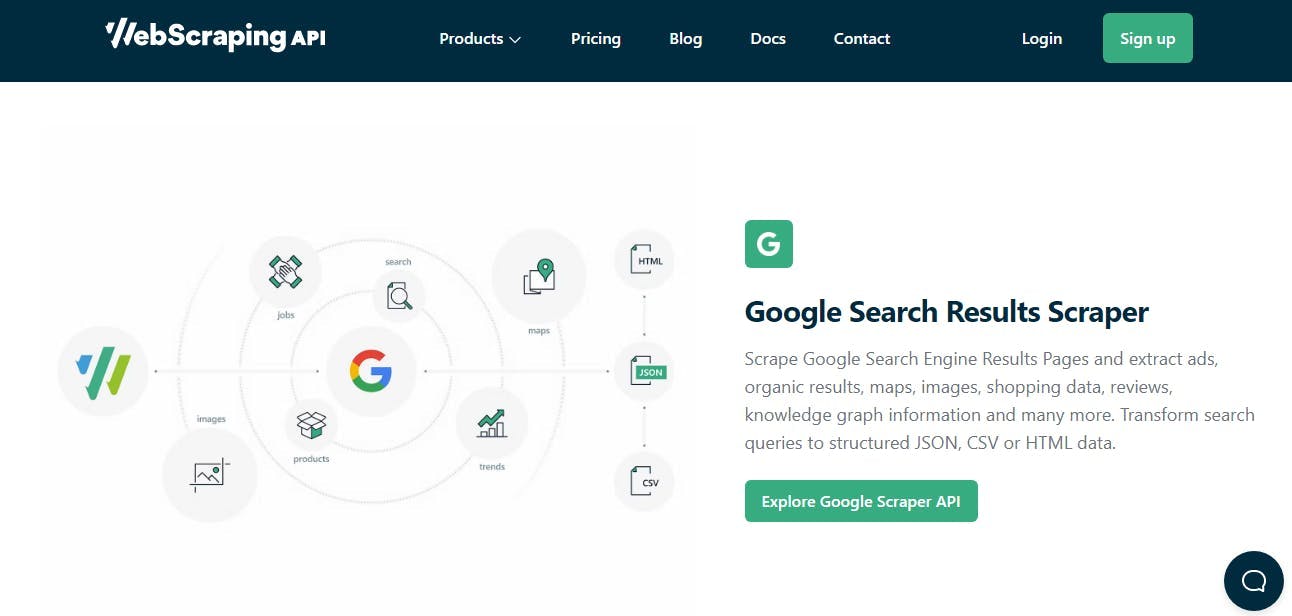

- Google Search Engine Results Scraper

You can scrape SERPs using WebScrapingAPI to find information on advertisements, organic results, maps, photos, shopping data, reviews, knowledge graphs, and more. Search results can also be converted into structured JSON, CSV, or HTML data. This makes it simple to obtain the data you require, so you can concentrate on using it to improve your company.

For companies and individuals who want to get the most out of their data, WebScrapingAPI is a great tool. Its user-friendly interface and robust functionality make it ideal for extracting data from SERPs.
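Once search results arrive as JSON, turning them into CSV takes only a few lines. The sketch below uses Python's `json` and `csv` modules; the `organic_results`, `position`, `title`, and `link` keys are a hypothetical schema chosen for illustration, not WebScrapingAPI's documented response format.

```python
import csv
import io
import json


def organic_results_to_csv(serp_json: str) -> str:
    """Flatten the organic results of a SERP JSON document into CSV text.

    The "organic_results", "position", "title" and "link" keys are a
    hypothetical schema used only for this example.
    """
    data = json.loads(serp_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["position", "title", "link"],
        extrasaction="ignore",  # silently drop fields we did not ask for
        lineterminator="\n",
    )
    writer.writeheader()
    for result in data.get("organic_results", []):
        writer.writerow(result)
    return buffer.getvalue()
```

Given `{"organic_results": [{"position": 1, "title": "Example, Inc.", "link": "https://example.com", "snippet": "..."}]}`, the function returns a `position,title,link` header followed by `1,"Example, Inc.",https://example.com`. The `csv` module quotes the title because it contains a comma, and `extrasaction="ignore"` drops the unrequested `snippet` field.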

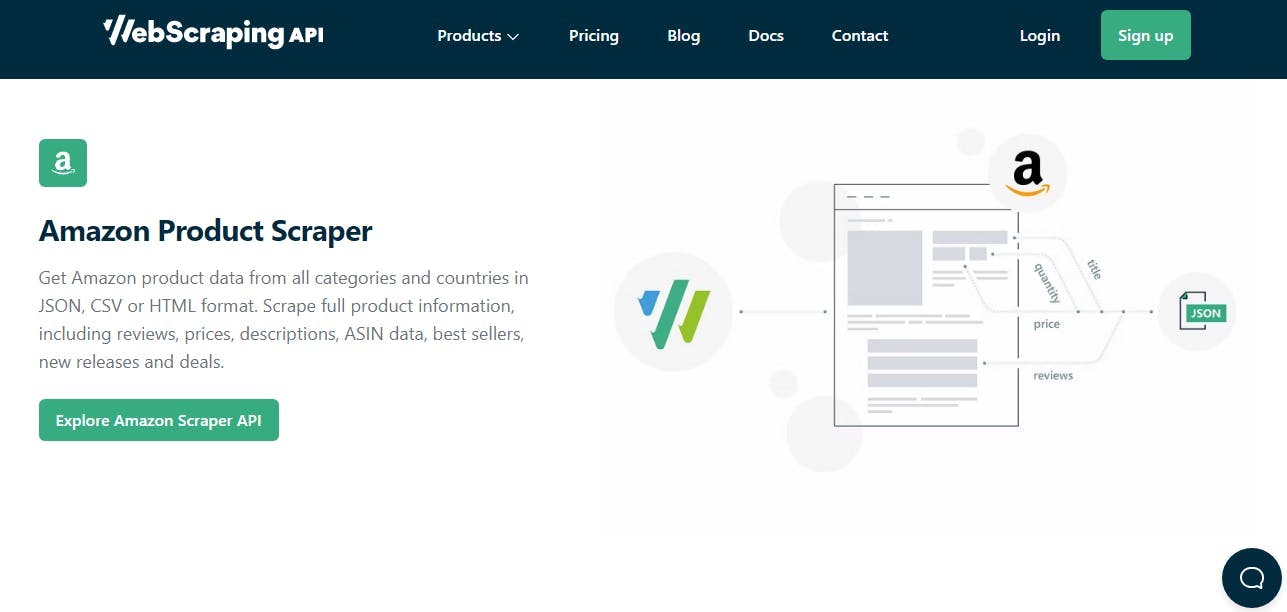

- Amazon Product Scraper

WebScrapingAPI is the ideal tool for anyone looking to gather Amazon product data. With it, you can obtain complete product details in JSON, CSV, or HTML format across all categories and countries. This information includes reviews, prices, descriptions, ASIN data, best sellers, new releases, and deals.

Features:

- 360-degree web scraping: The Web Scraper API fully supports all web scraping tasks and use cases, such as market analysis, price monitoring, transportation costs, real estate, financial data, and many more.

- Getting formatted data out: With our custom extraction rules, you can get structured JSON data tailored to your needs with just one API call. Rapid data flow gives your business a competitive advantage.

- JavaScript interactions: To collect data accurately, interact with JavaScript-heavy websites like a pro by clicking, scrolling, and running custom JS code on the target page while you wait for components to load.

- Security: You can build automated data extraction flows on any website to find potentially dangerous information or compromised data.

- Data images: Incorporate high-resolution screenshots of the target website's pages or sections into your tools or applications. The Web Scraper API can return screenshots, structured JSON, and raw HTML.

- Scaling for businesses: You avoid unnecessary hardware and software infrastructure costs, because our cloud infrastructure makes collecting precise data at a large scale simple.
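To make the idea behind custom extraction rules more concrete, here is a local stand-in built on Python's `html.parser`: each rule maps an output field to an HTML tag name, and the parser returns the matching text as JSON. This tag-based rule format and the names `RuleExtractor` and `extract` are hypothetical and much simpler than the selector-based rules a hosted service might accept; the sketch also assumes reasonably well-formed HTML in which every matched tag has a closing tag.

```python
import json
from html.parser import HTMLParser


class RuleExtractor(HTMLParser):
    """Collect the text of elements named by simple tag-based rules.

    `rules` maps an output field to a lowercase tag name, e.g. {"title": "h1"}.
    Hypothetical format; matched tags must have closing tags.
    """

    def __init__(self, rules: dict[str, str]) -> None:
        super().__init__()
        self.rules = rules
        self.results: dict[str, list[str]] = {field: [] for field in rules}
        self._depth = {field: 0 for field in rules}  # nesting depth per field
        self._parts: dict[str, list[str]] = {field: [] for field in rules}

    def handle_starttag(self, tag, attrs):
        for field, wanted in self.rules.items():
            if tag == wanted:
                if self._depth[field] == 0:
                    self._parts[field] = []  # start collecting a new match
                self._depth[field] += 1

    def handle_data(self, data):
        # Text belongs to every field whose element is currently open.
        for field, depth in self._depth.items():
            if depth > 0:
                self._parts[field].append(data)

    def handle_endtag(self, tag):
        for field, wanted in self.rules.items():
            if tag == wanted and self._depth[field] > 0:
                self._depth[field] -= 1
                if self._depth[field] == 0:
                    self.results[field].append("".join(self._parts[field]).strip())


def extract(html: str, rules: dict[str, str]) -> str:
    """Apply `rules` to `html` and return the matches as a JSON string."""
    parser = RuleExtractor(rules)
    parser.feed(html)
    parser.close()
    return json.dumps(parser.results)
```

For instance, `extract("<h1>Blue Mug</h1><span>$12</span><span>$15</span>", {"name": "h1", "prices": "span"})` returns `{"name": ["Blue Mug"], "prices": ["$12", "$15"]}`.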

Pricing:

Depending on your needs, WebScrapingAPI offers a variety of pricing options. The starter plan starts at $49 per month, while the enterprise plan, which includes custom-volume API credits, the Amazon search API, the product extraction API, priority email support, and a dedicated account manager, starts at $299 per month.

Conclusion

When compared to the other options, WebScrapingAPI wins. Why? The tool is packed with features, and not just any features: ones people actually use. It is a platform that automates extracting both structured and unstructured data from web pages, which can be crucial for data management.

WebScrapingAPI provides mass web crawling, clean code, 99.99% uptime, the latest architecture to boost performance, a range of value-loaded plans, and the trust of 10,000+ businesses globally.
