From Sentiment Analysis to Marketing: The Many Benefits of Web Scraping Twitter

Raluca Penciuc on Apr 13 2023

Twitter is a popular microblogging and social networking website that allows users to post and interact with messages known as "tweets." These tweets can contain a variety of information, including text, images, and links, making them a valuable source of data for various use cases.

From individual researchers to companies, web scraping Twitter can have many practical applications: trends and news monitoring, consumer sentiment analysis, advertising campaign improvements, etc.

Although Twitter provides an API for you to access the data, it presents some caveats that you should be aware of:

- rate-limiting: you can only make a certain number of requests within a given time period. If you exceed these limits, your API access may be temporarily suspended;

- data availability: you have access to a limited set of data, such as tweets, user profiles, and direct messages. Some data, such as deleted tweets, is not available through the API.

In this article, we will discuss the process of web scraping Twitter using TypeScript and Puppeteer. We will cover setting up the necessary environment, locating and extracting data, and the potential uses of this data.

We will also discuss why using a professional scraper is better for web scraping Twitter than using the Twitter API alone. This article provides a step-by-step guide to scraping Twitter effectively, making it easy for you to gather the data you need.

Prerequisites

Before we begin, let's make sure we have the necessary tools in place.

First, download and install Node.js from the official website, making sure to use the Long-Term Support (LTS) version. This will also automatically install Node Package Manager (NPM) which we will use to install further dependencies.

For this tutorial, we will be using Visual Studio Code as our Integrated Development Environment (IDE) but you can use any other IDE of your choice. Create a new folder for your project, open the terminal and run the following command to set up a new Node.js project:

npm init -y

This will create a package.json file in your project directory, which will store information about your project and its dependencies.

Next, we need to install TypeScript and the type definitions for Node.js. TypeScript offers optional static typing which helps prevent errors in the code. To do this, run in the terminal:

npm install typescript @types/node --save-dev

You can verify the installation by running:

npx tsc --version

TypeScript uses a configuration file called tsconfig.json to store compiler options and other settings. To create this file in your project, run the following command:

npx tsc --init

Make sure that the value for “outDir” is set to “dist”. This way we will separate the TypeScript files from the compiled ones. You can find more information about this file and its properties in the official TypeScript documentation.

Now, create an “src” directory in your project, and a new “index.ts” file inside it. This is where we will keep the scraping code. To execute TypeScript code you have to compile it first, so to make sure that we don’t forget this extra step, we can use a custom-defined command.

Head over to the “package.json” file, and edit the “scripts” section like this:

"scripts": {

"test": "npx tsc && node dist/index.js"

}

This way, when you want to run the script, you just have to type “npm run test” in your terminal.

Finally, to scrape the data from the website we will be using Puppeteer, a headless browser library for Node.js that allows you to control a web browser and interact with websites programmatically. To install it, run this command in the terminal:

npm install puppeteer

Puppeteer is highly recommended when you need complete data, as many websites today generate their content dynamically. If you’re curious, you can check out the Puppeteer documentation before continuing to see everything it is capable of.

Data location

Now that you have your environment set up, we can start looking at extracting the data. For this article, I chose to scrape the Twitter profile of Netflix: https://twitter.com/netflix.

We’re going to extract the following data:

- the profile name;

- the profile handle;

- the user bio;

- the user location;

- the user website;

- the user join date;

- the user following count;

- the user followers count;

- information about the user's tweets:

  - author name

  - author handle

  - publishing date

  - text content

  - media (videos or photos)

  - reply count

  - retweet count

  - like count

  - view count.

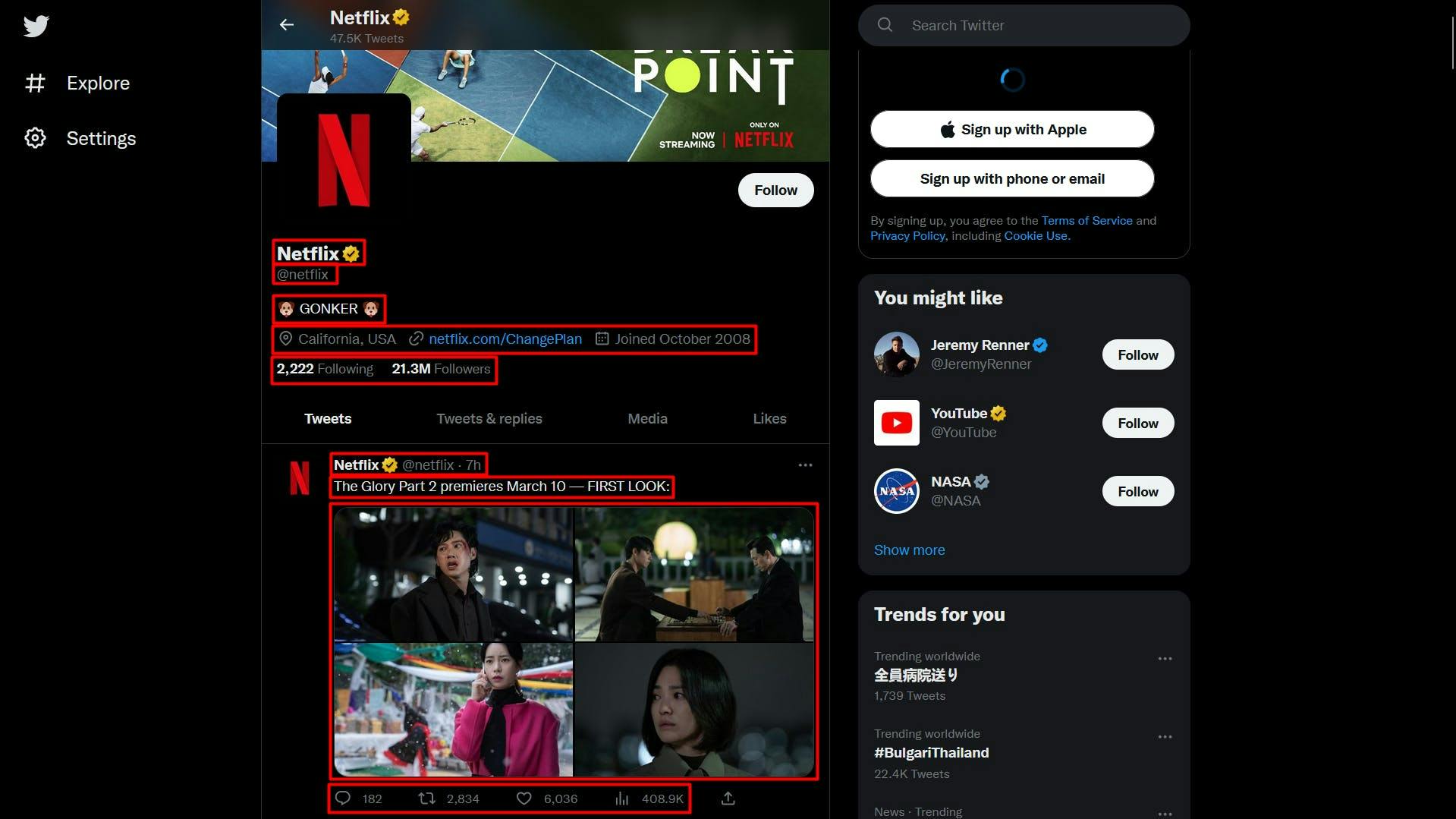

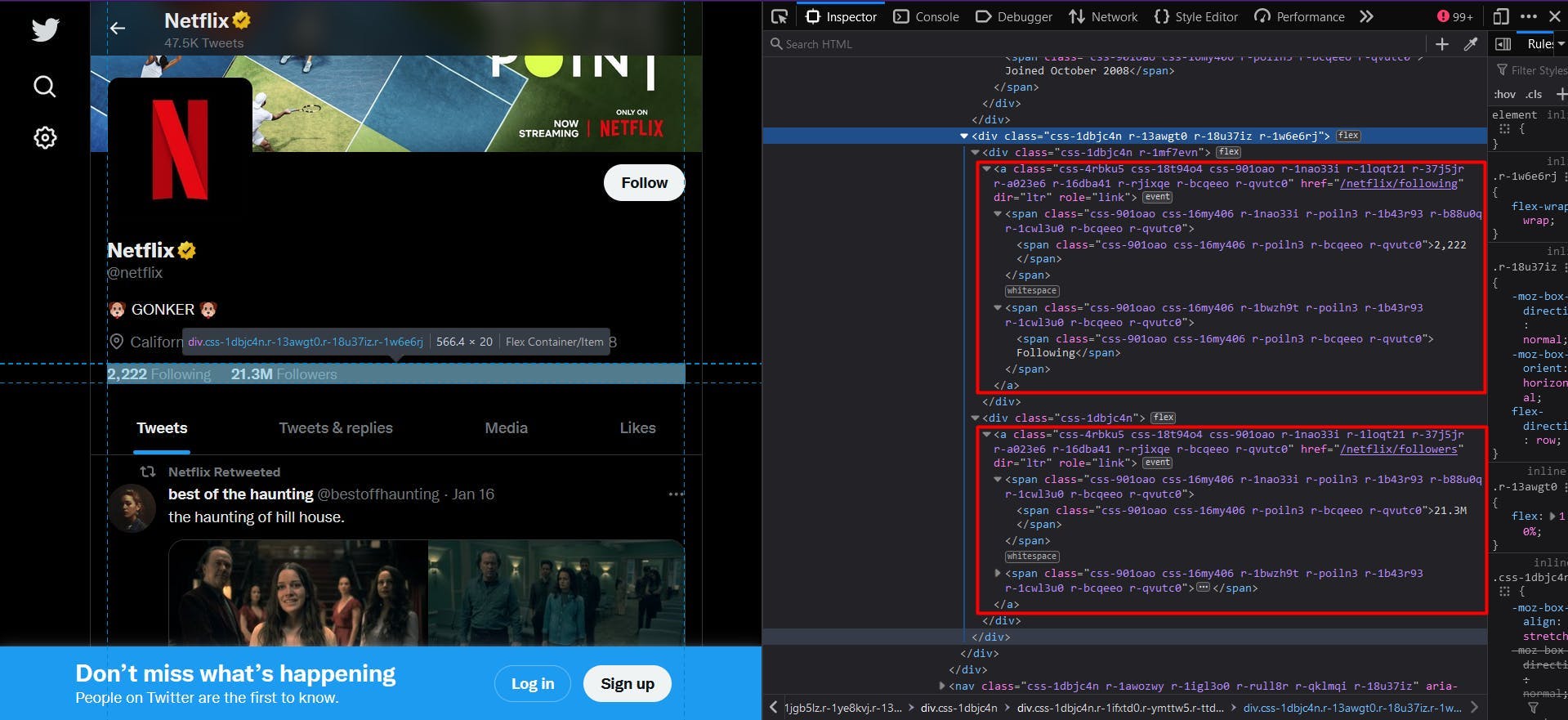

You can see all this information highlighted in the screenshot below:

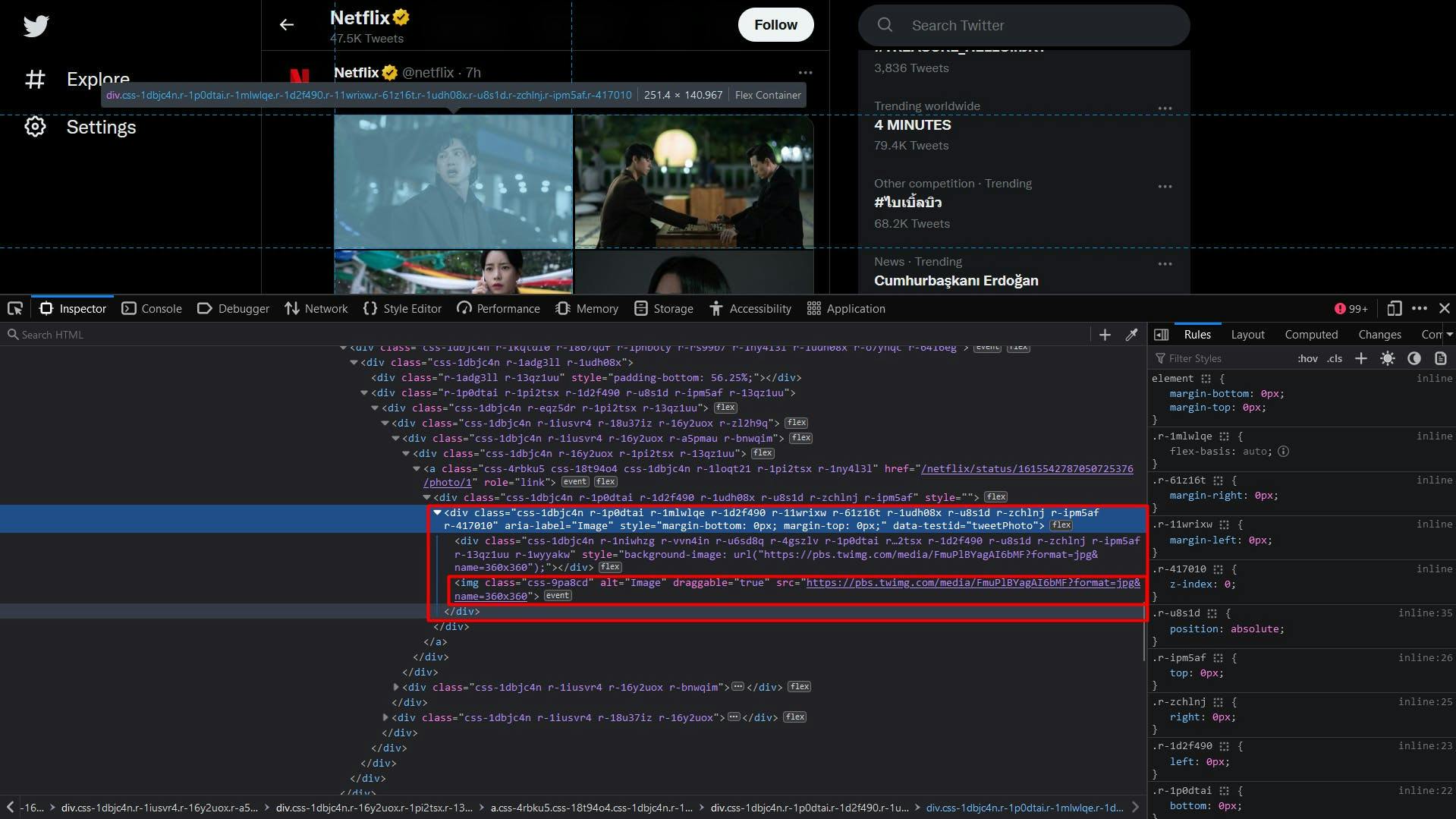

By opening the Developer Tools on each of these elements, you will be able to see the CSS selectors that we will use to locate the HTML elements. If you’re fairly new to how CSS selectors work, feel free to refer to this beginner guide.

Extracting the data

Before writing our script, let’s verify that the Puppeteer installation went alright:

import puppeteer from 'puppeteer';

async function scrapeTwitterData(twitter_url: string): Promise<void> {

// Launch Puppeteer

const browser = await puppeteer.launch({

headless: false,

args: ['--start-maximized'],

defaultViewport: null

})

// Create a new page

const page = await browser.newPage()

// Navigate to the target URL

await page.goto(twitter_url)

// Close the browser

await browser.close()

}

scrapeTwitterData("https://twitter.com/netflix")

Here we open a browser window, create a new page, navigate to our target URL and then close the browser. For the sake of simplicity and visual debugging, I open the browser window maximized in non-headless mode.

Now, let’s take a look at the website’s structure and extract the previous list of data gradually:

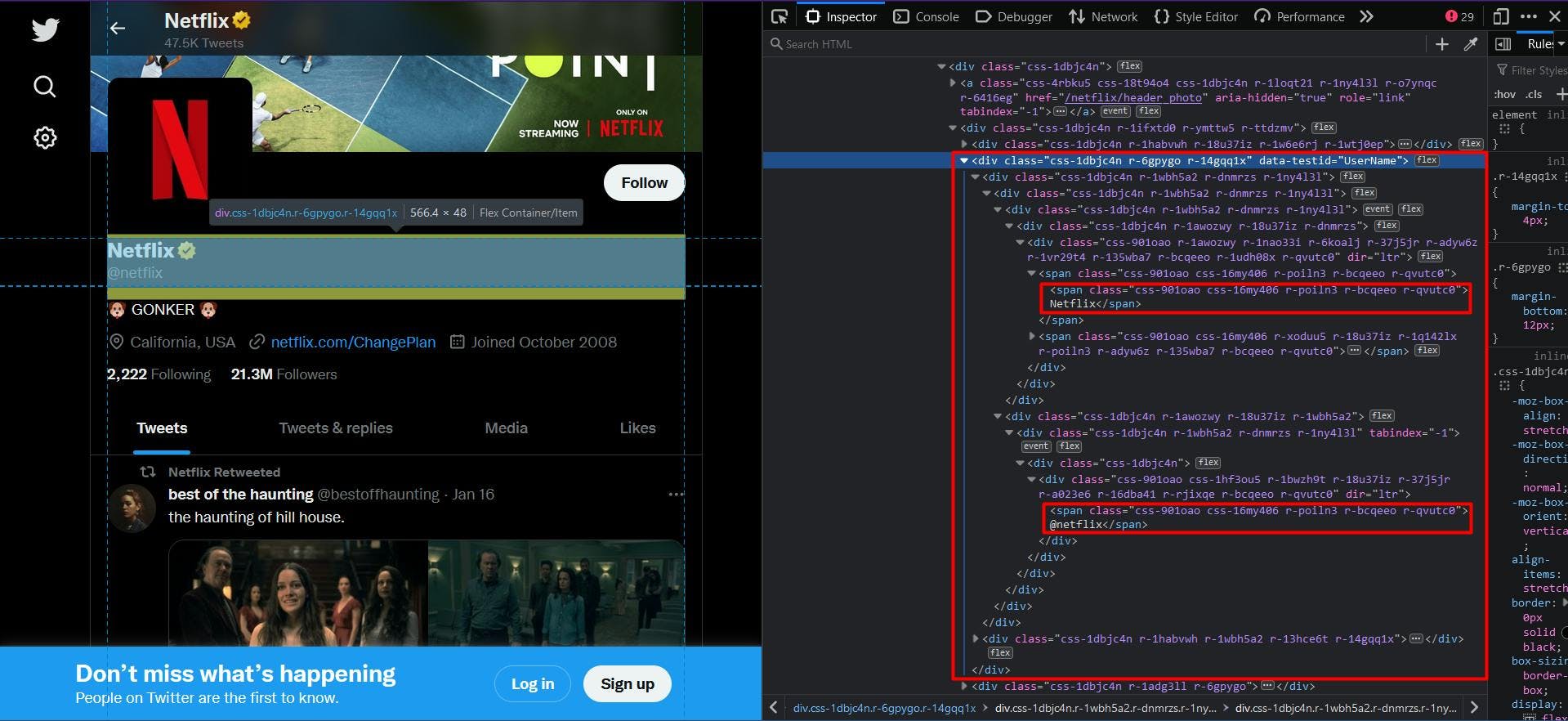

At first glance, you may have noticed that the website’s structure is pretty complex. The class names are randomly generated and very few HTML elements are uniquely identified.

Luckily for us, as we navigate through the parent elements of the targeted data, we find the attribute “data-testid”. A quick search in the HTML document can confirm that this attribute uniquely identifies the element we target.

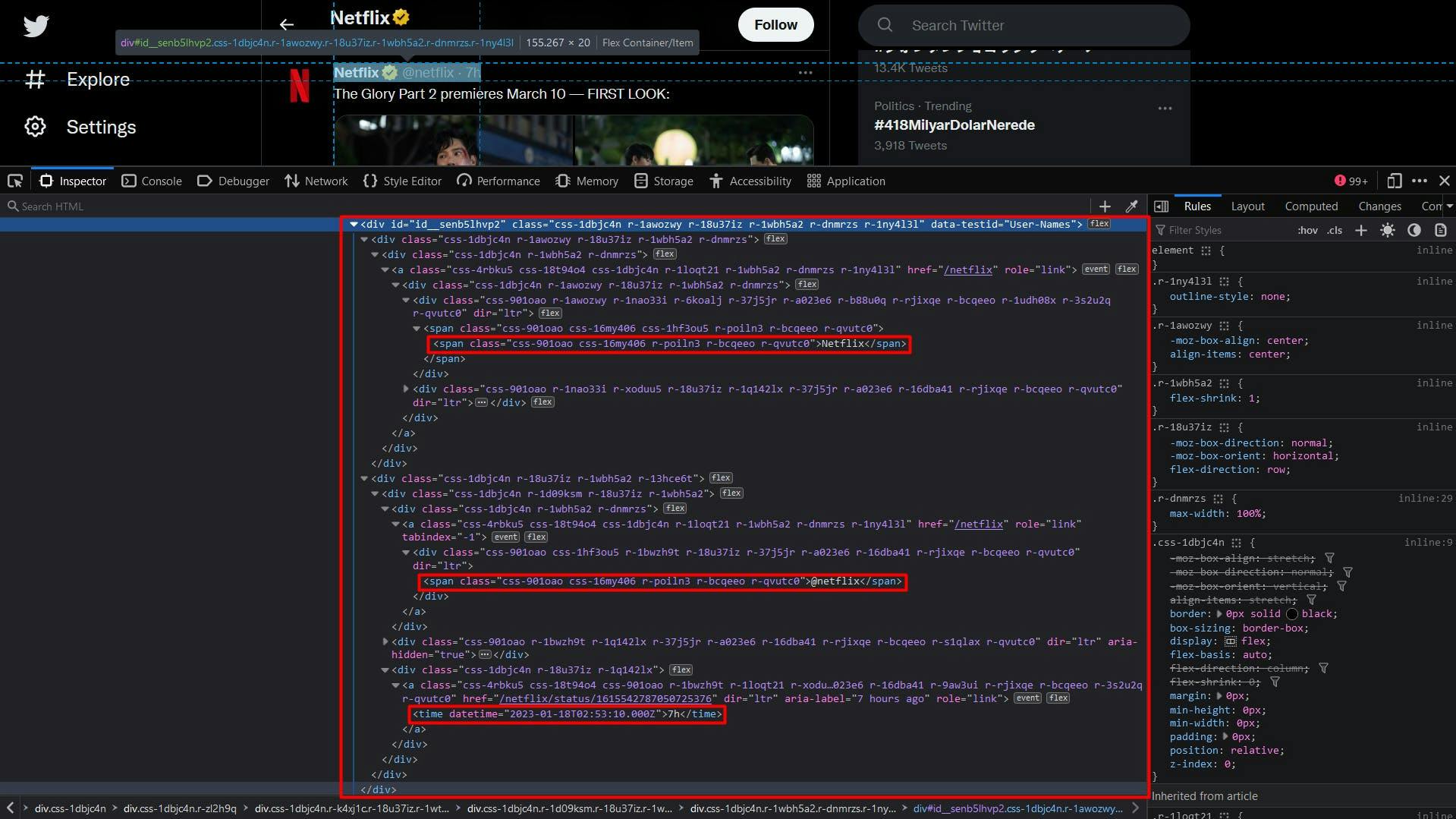

Therefore, to extract the profile name and handle, we will extract the “div” element that has the “data-testid” attribute set to “UserName”. The code will look like this:

// Extract the profile name and handle

const profileNameHandle = await page.evaluate(() => {

const nameHandle = document.querySelector('div[data-testid="UserName"]')

// textContent can be null, so fall back to an empty string to keep the result a plain string
return nameHandle?.textContent ?? ""

})

const profileNameHandleComponents = profileNameHandle.split('@')

console.log("Profile name:", profileNameHandleComponents[0])

console.log("Profile handle:", '@' + profileNameHandleComponents[1])

Because both profile name and profile handle have the same parent, the final result will appear concatenated. To fix this, we use the “split” method to separate the data.

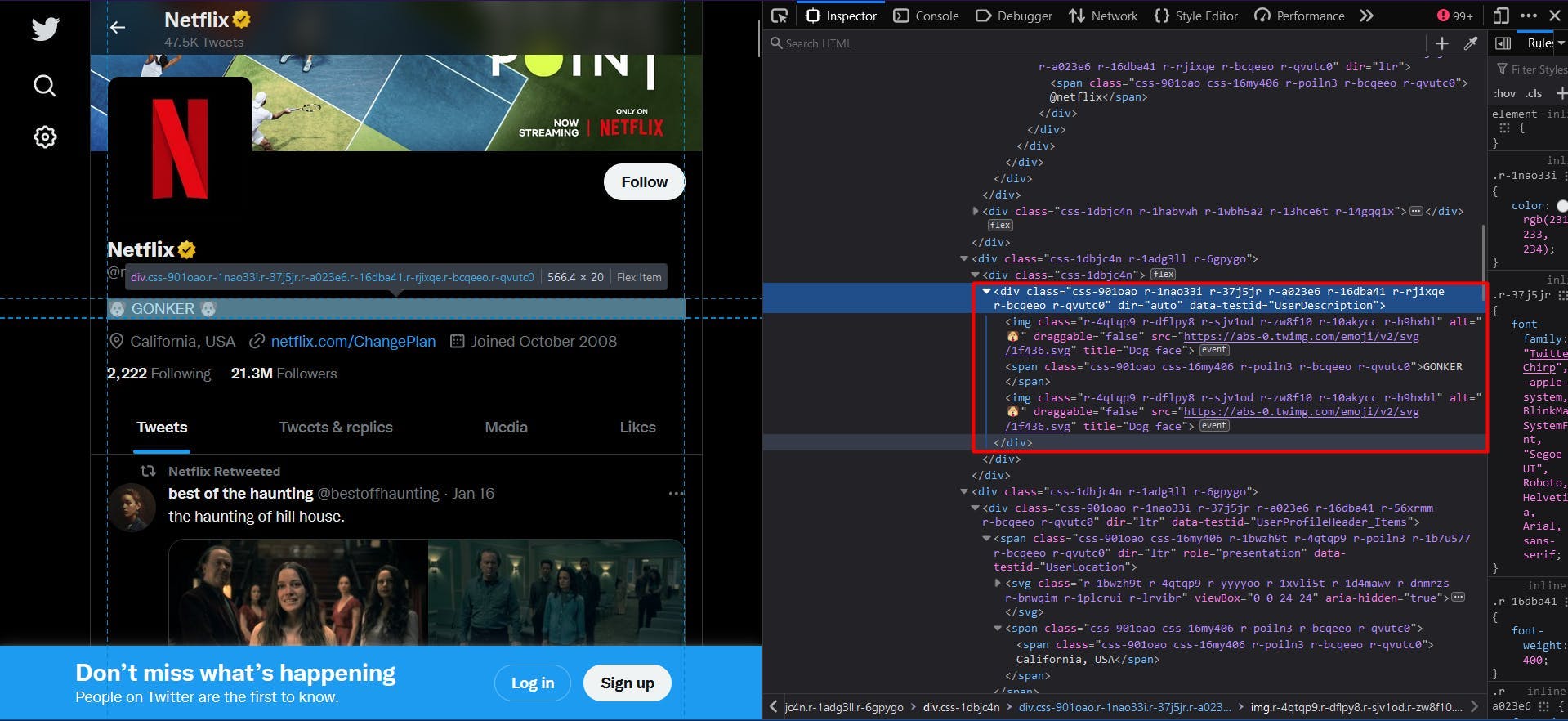
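The “split” step above assumes that the text always contains exactly one “@”. As a minimal sketch of a more defensive variant, the hypothetical helper below (the name `splitNameAndHandle` and the `ProfileIdentity` type are illustrative, not part of the original script) splits on the last “@” and returns a null handle when none is found. It assumes that handles never contain “@” themselves.

```typescript
// Hypothetical helper (not part of the original script): splits the
// concatenated "UserName" text into a display name and a handle.
interface ProfileIdentity {
  name: string
  handle: string | null
}

function splitNameAndHandle(text: string): ProfileIdentity {
  // Use the last '@' so a display name that itself contains '@' stays intact
  const at = text.lastIndexOf('@')
  if (at === -1) {
    // No handle found: return the whole text as the name
    return { name: text.trim(), handle: null }
  }
  return {
    name: text.slice(0, at).trim(),
    handle: '@' + text.slice(at + 1).trim(),
  }
}

console.log(splitNameAndHandle('Netflix@netflix'))
// prints { name: 'Netflix', handle: '@netflix' }
```

Because the function works on a plain string, it can be applied to the value returned by “page.evaluate” without touching the browser-side code.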

We then apply the same logic to extract the profile’s bio. In this case, the value for the “data-testid” attribute is “UserDescription”:

// Extract the user bio

const profileBio = await page.evaluate(() => {

const bio = document.querySelector('div[data-testid="UserDescription"]')

// textContent can be null, so fall back to an empty string
return bio?.textContent ?? ""

})

console.log("User bio:", profileBio)

The final result is described by the “textContent” property of the HTML element.

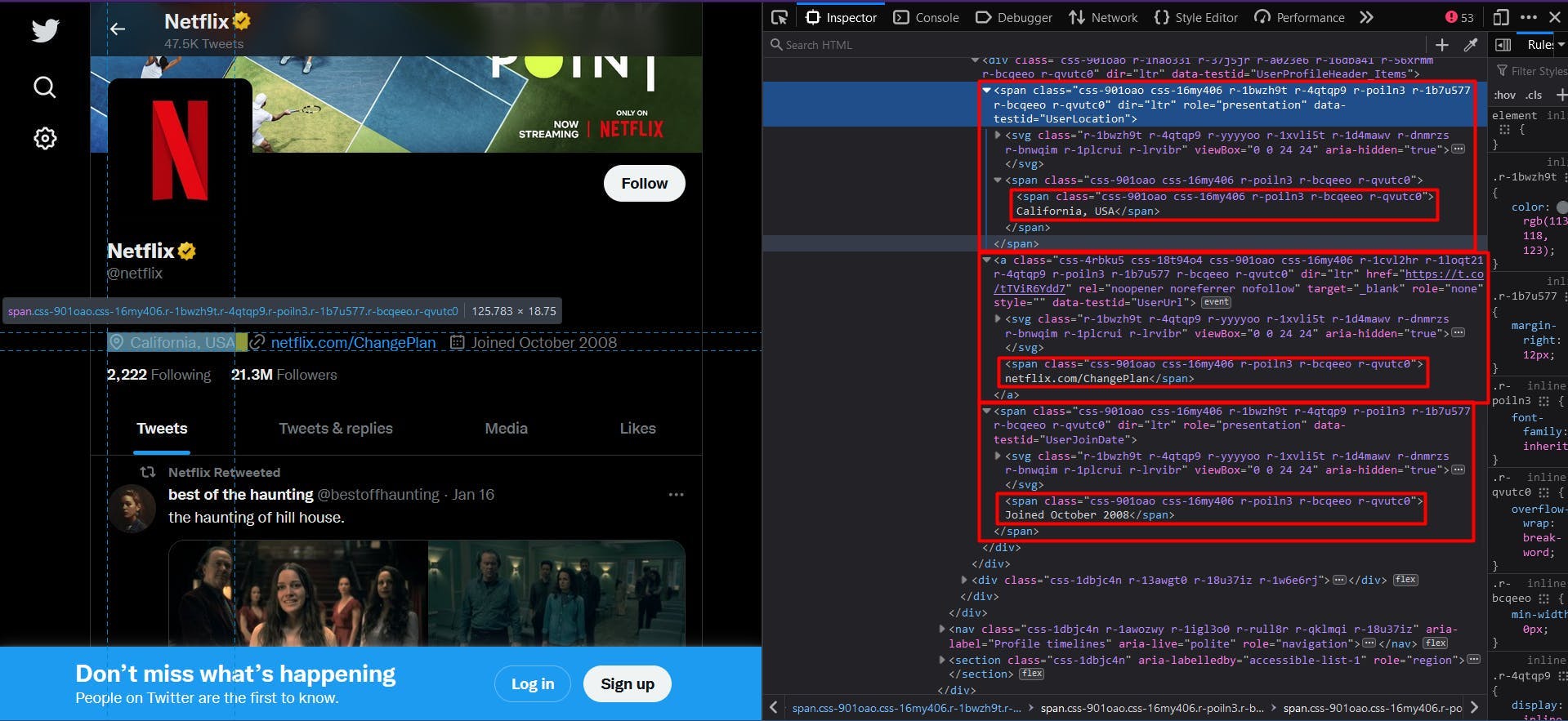

Moving on to the next section of the profile’s data, we find the location, the website and the join date under the same structure.

// Extract the user location

const profileLocation = await page.evaluate(() => {

const location = document.querySelector('span[data-testid="UserLocation"]')

return location ? location.textContent : ""

})

console.log("User location:", profileLocation)

// Extract the user website

const profileWebsite = await page.evaluate(() => {

const location = document.querySelector('a[data-testid="UserUrl"]')

return location ? location.textContent : ""

})

console.log("User website:", profileWebsite)

// Extract the join date

const profileJoinDate = await page.evaluate(() => {

const location = document.querySelector('span[data-testid="UserJoinDate"]')

return location ? location.textContent : ""

})

console.log("User join date:", profileJoinDate)

To get the number of following and followers, we need a slightly different approach. Check out the screenshot below:

There is no “data-testid” attribute and the class names are still randomly generated. A solution would be to target the anchor elements, as they provide a unique “href” attribute.

// Extract the following count

const profileFollowing = await page.evaluate(() => {

const location = document.querySelector('a[href$="/following"]')

return location ? location.textContent : ""

})

console.log("User following:", profileFollowing)

// Extract the followers count

const profileFollowers = await page.evaluate(() => {

const location = document.querySelector('a[href$="/followers"]')

return location ? location.textContent : ""

})

console.log("User followers:", profileFollowers)

To make the code available for any Twitter profile, we defined the CSS selector to target the anchor elements whose “href” attribute ends with “/following” or “/followers” respectively.

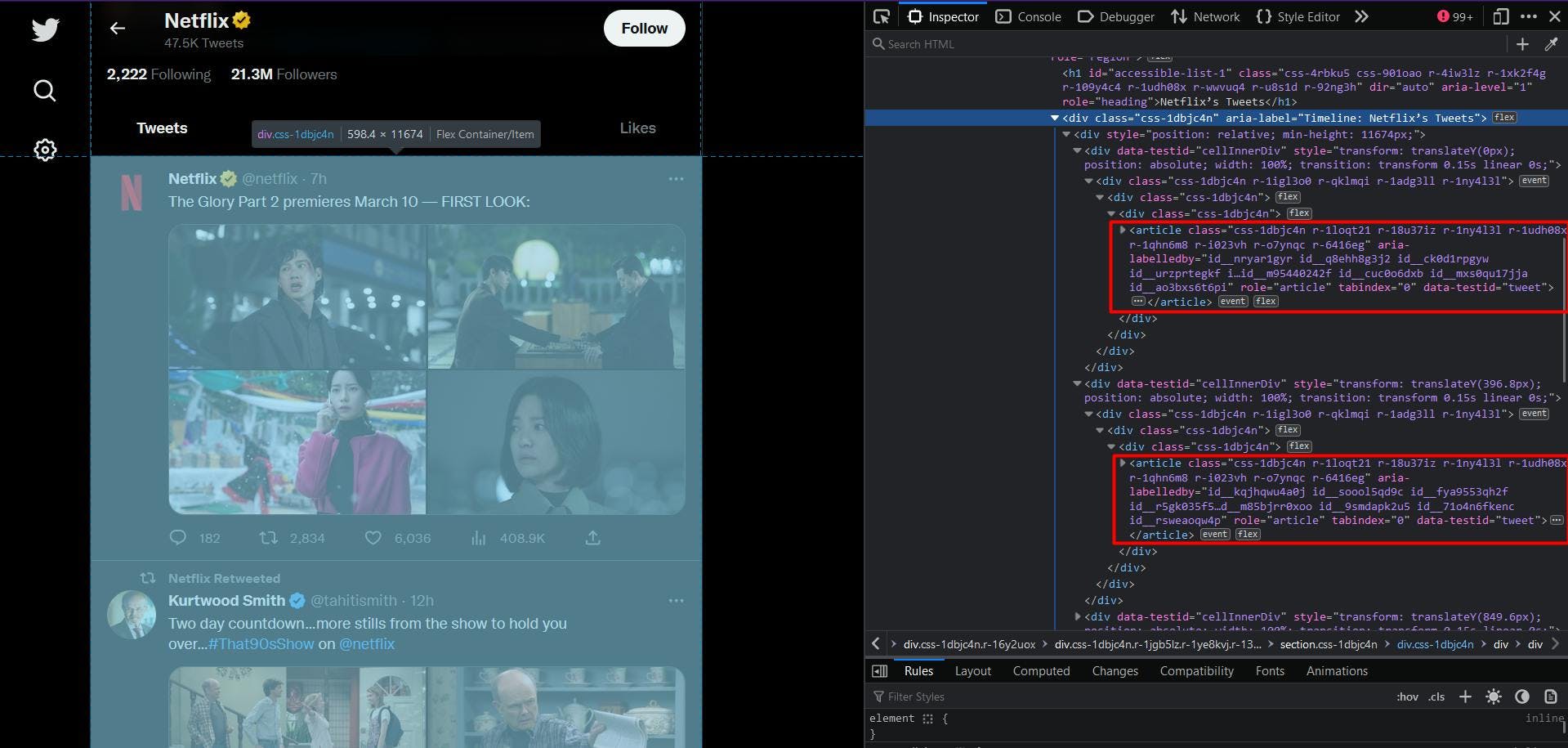

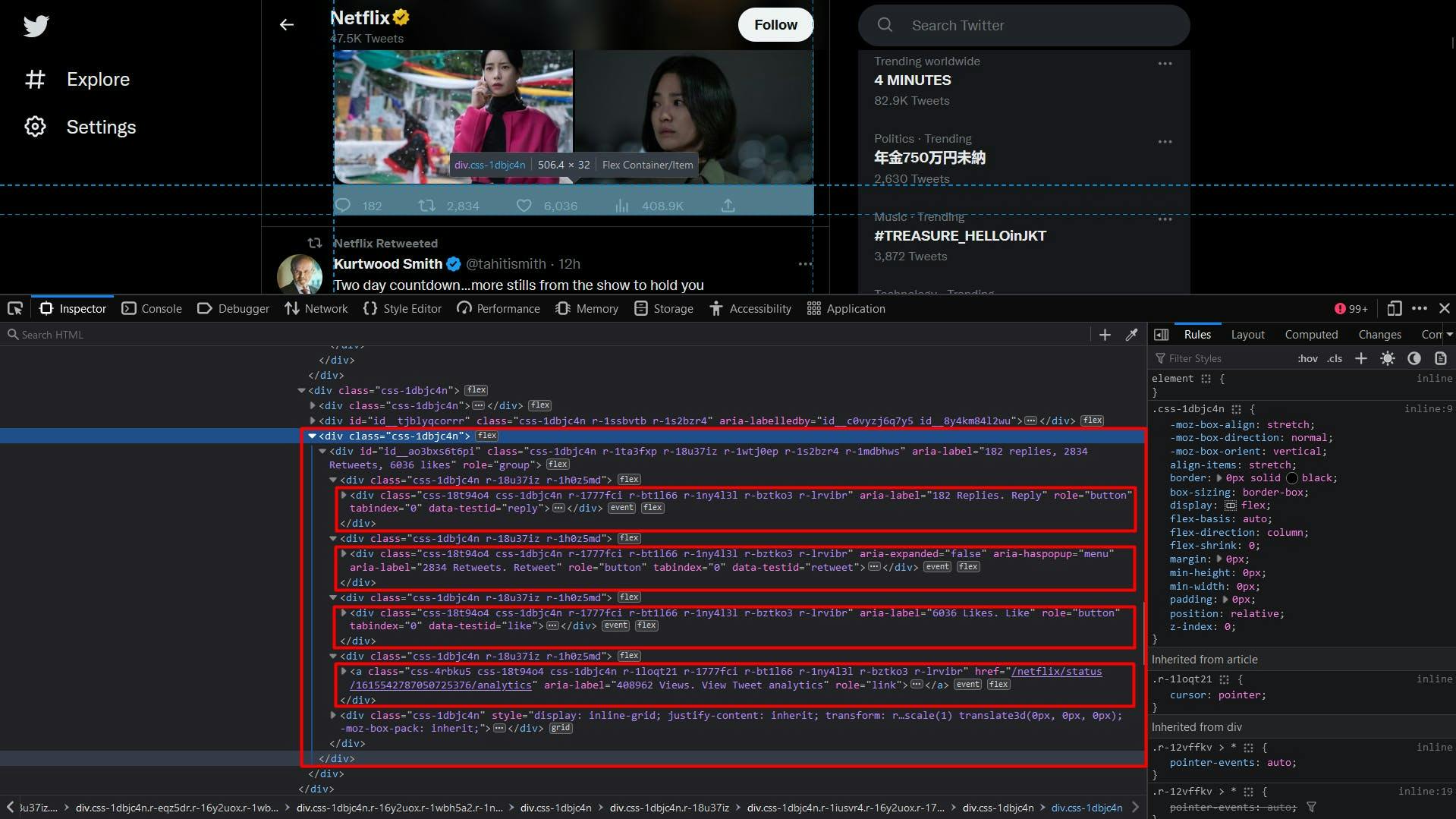
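The following and followers values come back as display text such as “2,222 Following” or “21.3M Followers”. If you need numbers instead, a small conversion step can help. The sketch below is one possible approach: `parseAbbreviatedCount` is a hypothetical helper, and the K/M/B suffix table is an assumption about how large counts are abbreviated.

```typescript
// Assumed suffixes for abbreviated counts (an assumption, not confirmed by the page)
const SUFFIX_MULTIPLIERS: Record<string, number> = { K: 1e3, M: 1e6, B: 1e9 }

// Hypothetical helper: converts text such as "21.3M Followers" or
// "2,222 Following" to a number, or returns null if no number is found.
function parseAbbreviatedCount(text: string): number | null {
  // Leading number (thousands separators and decimals allowed),
  // optionally followed directly by a K/M/B suffix
  const match = text.trim().match(/^([\d,]*\.?\d+)([KMB])?/i)
  if (!match) return null
  const value = parseFloat((match[1] ?? '').replace(/,/g, ''))
  const suffix = (match[2] ?? '').toUpperCase()
  const multiplier = SUFFIX_MULTIPLIERS[suffix] ?? 1
  // Round to avoid floating-point noise such as 21300000.000000004
  return Math.round(value * multiplier)
}

console.log(parseAbbreviatedCount('21.3M Followers')) // prints 21300000
console.log(parseAbbreviatedCount('2,222 Following')) // prints 2222
```

Keep in mind that an abbreviated value like “21.3M” is already rounded by the website, so the converted number is only an approximation.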

Moving on to the list of tweets, we can again easily identify each one of them using the “data-testid” attribute, as highlighted below:

The code is no different from what we have done so far, with the exception of using the “querySelectorAll” method and converting the result to a JavaScript array:

// Extract the user tweets

const userTweets = await page.evaluate(() => {

const tweets = document.querySelectorAll('article[data-testid="tweet"]')

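const tweetsArray = Array.from(tweets)

// DOM elements cannot be serialized back to Node.js, so return their text content instead
return tweetsArray.map(t => t.textContent)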

})

console.log("User tweets:", userTweets)

However, even though the CSS selector is surely correct, you may have noticed that the resulting list is almost always empty. That’s because the tweets are loaded a few seconds after the page has been loaded.

The simple solution to this problem is to add an extra waiting time after we navigate to the target URL. One option is to play around with a fixed amount of seconds, while another is to wait until a specific CSS selector appears in the DOM:

await page.waitForSelector('div[aria-label^="Timeline: "]')

So, here we instruct our script to wait until a “div” element whose “aria-label” attribute starts with “Timeline: ” appears on the page. And now the previous snippet should work as expected.
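Both waiting options can be wrapped in small helpers. The sketch below is illustrative only: `sleep`, `waitForTimeline` and the `SelectorWaiter` interface are hypothetical names, and the interface is a minimal structural stand-in so the example compiles without importing Puppeteer. A real Puppeteer page is expected to satisfy it, since its “waitForSelector” method accepts a “timeout” option.

```typescript
// Minimal structural type so the sketch compiles without importing Puppeteer
interface SelectorWaiter {
  waitForSelector(selector: string, options?: { timeout?: number }): Promise<unknown>
}

const TIMELINE_SELECTOR = 'div[aria-label^="Timeline: "]'

// Option 1: a fixed delay (simple, but either too short or wastefully long)
const sleep = (ms: number): Promise<void> =>
  new Promise(resolve => setTimeout(resolve, ms))

// Option 2 (hypothetical helper): resolves to true once the timeline appears,
// or false if waiting fails, for example because the timeout expired
async function waitForTimeline(page: SelectorWaiter, timeoutMs = 30000): Promise<boolean> {
  try {
    await page.waitForSelector(TIMELINE_SELECTOR, { timeout: timeoutMs })
    return true
  } catch {
    return false
  }
}
```

Returning a boolean instead of throwing lets the calling code decide whether to retry, skip the tweets, or stop the script.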

Moving on, we can identify the data about the tweet’s author just like before, using the “data-testid” attribute.

In the algorithm, we will iterate through the list of HTML elements and apply the “querySelector” method on each one of them. This way, we can better ensure that the selectors that we use are unique, as the targeted scope is much smaller.

// Extract the user tweets

const userTweets = await page.evaluate(() => {

const tweets = document.querySelectorAll('article[data-testid="tweet"]')

const tweetsArray = Array.from(tweets)

return tweetsArray.map(t => {

const authorData = t.querySelector('div[data-testid="User-Names"]')

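// textContent can be null, so fall back to an empty string
const authorDataText = authorData?.textContent ?? ""

const authorComponents = authorDataText.split('@')

// Guard against a missing '@' so that split is never called on undefined
const authorComponents2 = (authorComponents[1] ?? "").split('·')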

return {

authorName: authorComponents[0],

authorHandle: '@' + authorComponents2[0],

date: authorComponents2[1],

}

})

})

console.log("User tweets:", userTweets)

The data about the author will appear concatenated here as well, so to make sure that the result makes sense, we apply the “split” method on each section.

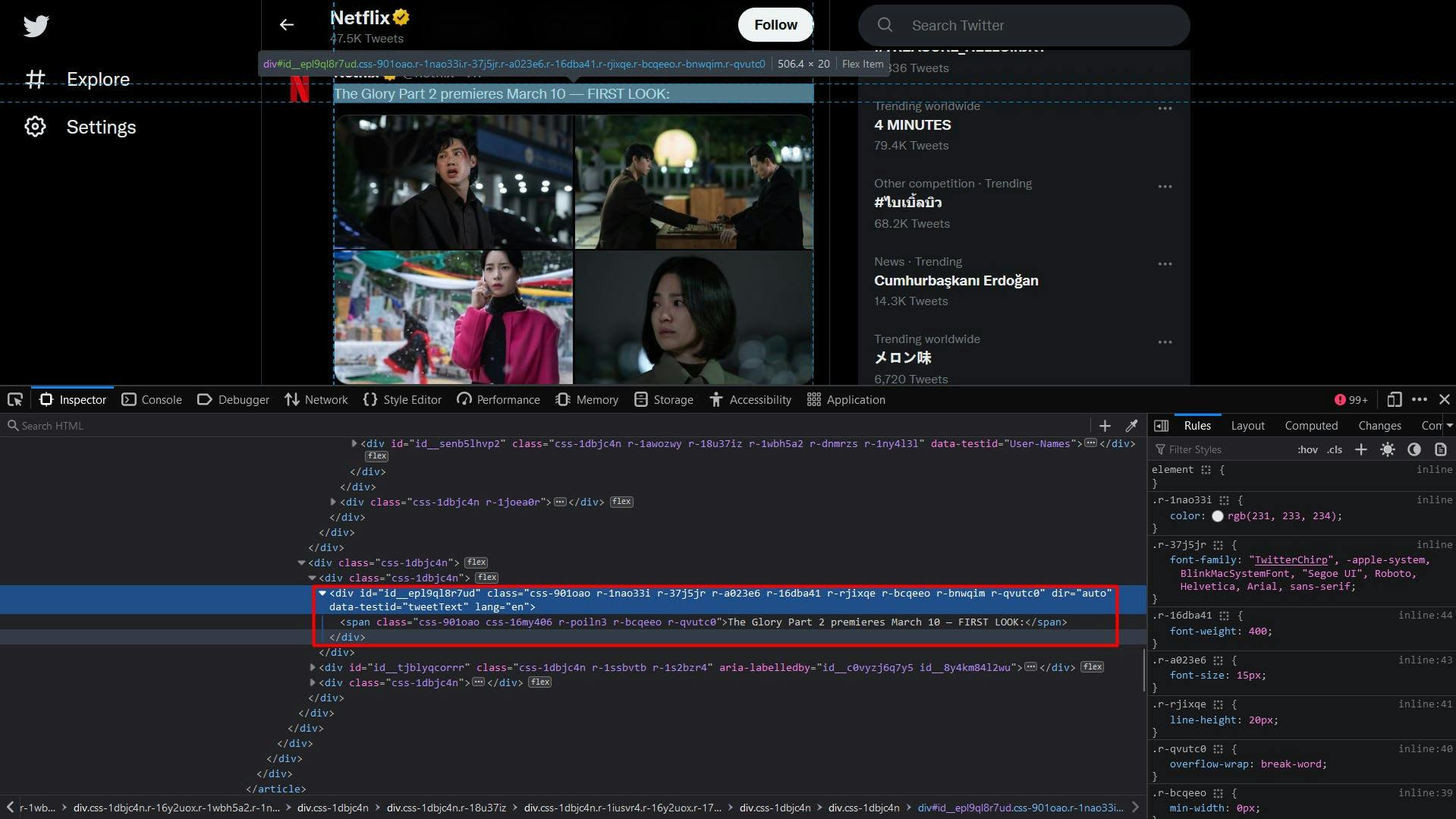
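The same parsing can also be done outside the browser on the raw text. As a minimal sketch, the hypothetical helper below (`parseAuthorLine` and the `TweetAuthor` type are illustrative names) takes text in the form “Netflix@netflix·9h” and returns null instead of throwing when the “@” part is missing.

```typescript
interface TweetAuthor {
  authorName: string
  authorHandle: string
  date: string
}

// Hypothetical helper: parses text such as "Netflix@netflix·9h".
// Returns null when the expected '@' part is missing.
function parseAuthorLine(text: string): TweetAuthor | null {
  const at = text.indexOf('@')
  if (at === -1) return null
  // Everything after the '@' holds the handle and, optionally, the date
  const rest = text.slice(at + 1)
  const dot = rest.indexOf('·')
  const handle = dot === -1 ? rest : rest.slice(0, dot)
  const date = dot === -1 ? '' : rest.slice(dot + 1)
  return {
    authorName: text.slice(0, at).trim(),
    authorHandle: '@' + handle.trim(),
    date: date.trim(),
  }
}

console.log(parseAuthorLine('Netflix@netflix·9h'))
// prints { authorName: 'Netflix', authorHandle: '@netflix', date: '9h' }
```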

The text content of the tweet is pretty straightforward:

const tweetText = t.querySelector('div[data-testid="tweetText"]')

For the tweet’s photos, we will extract a list of “img” elements, whose parents are “div” elements with the “data-testid” attribute set to “tweetPhoto”. The final result will be the “src” attribute of these elements.

const tweetPhotos = t.querySelectorAll('div[data-testid="tweetPhoto"] > img')

const tweetPhotosArray = Array.from(tweetPhotos)

const photos = tweetPhotosArray.map(p => p.getAttribute('src'))

And finally, the statistics section of the tweet. The number of replies, retweets and likes are accessible in the same way, through the value of the “aria-label” attribute, after we identify the element with the “data-testid” attribute.

To get the number of views, we target the anchor element whose “aria-label” attribute ends with the “Views. View Tweet analytics” string.

const replies = t.querySelector('div[data-testid="reply"]')

// getAttribute can return null, so fall back to an empty string
const repliesText = replies?.getAttribute("aria-label") ?? ''

const retweets = t.querySelector('div[data-testid="retweet"]')

const retweetsText = retweets?.getAttribute("aria-label") ?? ''

const likes = t.querySelector('div[data-testid="like"]')

const likesText = likes?.getAttribute("aria-label") ?? ''

const views = t.querySelector('a[aria-label$="Views. View Tweet analytics"]')

const viewsText = views?.getAttribute("aria-label") ?? ''

Because the label also contains non-numeric text, we use the “split” method to extract and return the numerical value only. The complete code snippet to extract tweets data is shown below:

// Extract the user tweets

const userTweets = await page.evaluate(() => {

const tweets = document.querySelectorAll('article[data-testid="tweet"]')

const tweetsArray = Array.from(tweets)

return tweetsArray.map(t => {

// Extract the tweet author, handle, and date

const authorData = t.querySelector('div[data-testid="User-Names"]')

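// textContent can be null, so fall back to an empty string
const authorDataText = authorData?.textContent ?? ""

const authorComponents = authorDataText.split('@')

// Guard against a missing '@' so that split is never called on undefined
const authorComponents2 = (authorComponents[1] ?? "").split('·')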

// Extract the tweet content

const tweetText = t.querySelector('div[data-testid="tweetText"]')

// Extract the tweet photos

const tweetPhotos = t.querySelectorAll('div[data-testid="tweetPhoto"] > img')

const tweetPhotosArray = Array.from(tweetPhotos)

const photos = tweetPhotosArray.map(p => p.getAttribute('src'))

// Extract the tweet reply count

const replies = t.querySelector('div[data-testid="reply"]')

// getAttribute can return null, so fall back to an empty string
const repliesText = replies?.getAttribute("aria-label") ?? ''

// Extract the tweet retweet count

const retweets = t.querySelector('div[data-testid="retweet"]')

const retweetsText = retweets?.getAttribute("aria-label") ?? ''

// Extract the tweet like count

const likes = t.querySelector('div[data-testid="like"]')

const likesText = likes?.getAttribute("aria-label") ?? ''

// Extract the tweet view count

const views = t.querySelector('a[aria-label$="Views. View Tweet analytics"]')

const viewsText = views?.getAttribute("aria-label") ?? ''

return {

authorName: authorComponents[0],

authorHandle: '@' + authorComponents2[0],

date: authorComponents2[1],

text: tweetText ? tweetText.textContent : '',

media: photos,

replies: repliesText.split(' ')[0],

retweets: retweetsText.split(' ')[0],

likes: likesText.split(' ')[0],

views: viewsText.split(' ')[0],

}

})

})

console.log("User tweets:", userTweets)

After running the whole script, your terminal should show something like this:

Profile name: Netflix

Profile handle: @netflix

User bio:

User location: California, USA

User website: netflix.com/ChangePlan

User join date: Joined October 2008

User following: 2,222 Following

User followers: 21.3M Followers

User tweets: [

{

authorName: 'best of the haunting',

authorHandle: '@bestoffhaunting',

date: '16 Jan',

text: 'the haunting of hill house.',

media: [

'https://pbs.twimg.com/media/FmnGkCNWABoEsJE?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmnGkk0WABQdHKs?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmnGlTOWABAQBLb?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmnGlw6WABIKatX?format=jpg&name=360x360'

],

replies: '607',

retweets: '37398',

likes: '170993',

views: ''

},

{

authorName: 'Netflix',

authorHandle: '@netflix',

date: '9h',

text: 'The Glory Part 2 premieres March 10 -- FIRST LOOK:',

media: [

'https://pbs.twimg.com/media/FmuPlBYagAI6bMF?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmuPlBWaEAIfKCN?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmuPlBUagAETi2Z?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmuPlBZaEAIsJM6?format=jpg&name=360x360'

],

replies: '250',

retweets: '4440',

likes: '9405',

views: '656347'

},

{

authorName: 'Kurtwood Smith',

authorHandle: '@tahitismith',

date: '14h',

text: 'Two day countdown...more stills from the show to hold you over...#That90sShow on @netflix',

media: [

'https://pbs.twimg.com/media/FmtOZTGaEAAr2DF?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmtOZTFaUAI3QOR?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmtOZTGaAAEza6i?format=jpg&name=360x360',

'https://pbs.twimg.com/media/FmtOZTGaYAEo-Yu?format=jpg&name=360x360'

],

replies: '66',

retweets: '278',

likes: '3067',

views: ''

},

{

authorName: 'Netflix',

authorHandle: '@netflix',

date: '12h',

text: 'In 2013, Kai the Hatchet-Wielding Hitchhiker became an internet sensation -- but that viral fame put his questionable past squarely on the radar of authorities. \n' +

'\n' +

'The Hatchet Wielding Hitchhiker is now on Netflix.',

media: [],

replies: '169',

retweets: '119',

likes: '871',

views: '491570'

}

]
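Note that the counts in the output above are strings, and missing values show up as empty strings. If you want to aggregate or sort by these values, converting them to numbers is a natural next step. The sketch below is one way to do it: `leadingCount` is a hypothetical helper, and the handling of thousands separators is an assumption.

```typescript
// Hypothetical helper: turns the first token of an aria-label such as
// "656347 Views. View Tweet analytics" into a number.
// Returns null for empty, missing, or non-numeric input.
function leadingCount(label: string | null): number | null {
  if (!label) return null
  const firstToken = label.trim().split(' ')[0] ?? ''
  // Assumption: large values may contain thousands separators
  const digits = firstToken.replace(/,/g, '')
  return /^\d+$/.test(digits) ? Number(digits) : null
}

console.log(leadingCount('656347 Views. View Tweet analytics')) // prints 656347
console.log(leadingCount('')) // prints null
```

Using null for missing values keeps them distinguishable from a genuine count of zero.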

Scaling up

While scraping Twitter may seem easy at first, the process can become more complex and challenging as you scale up your project. The website implements various techniques to detect and prevent automated traffic, so a scaled-up scraper may start getting rate-limited or even blocked.

One way to overcome these challenges and continue scraping at large scale is to use a scraping API. These kinds of services provide a simple and reliable way to access data from websites like twitter.com, without the need to build and maintain your own scraper.

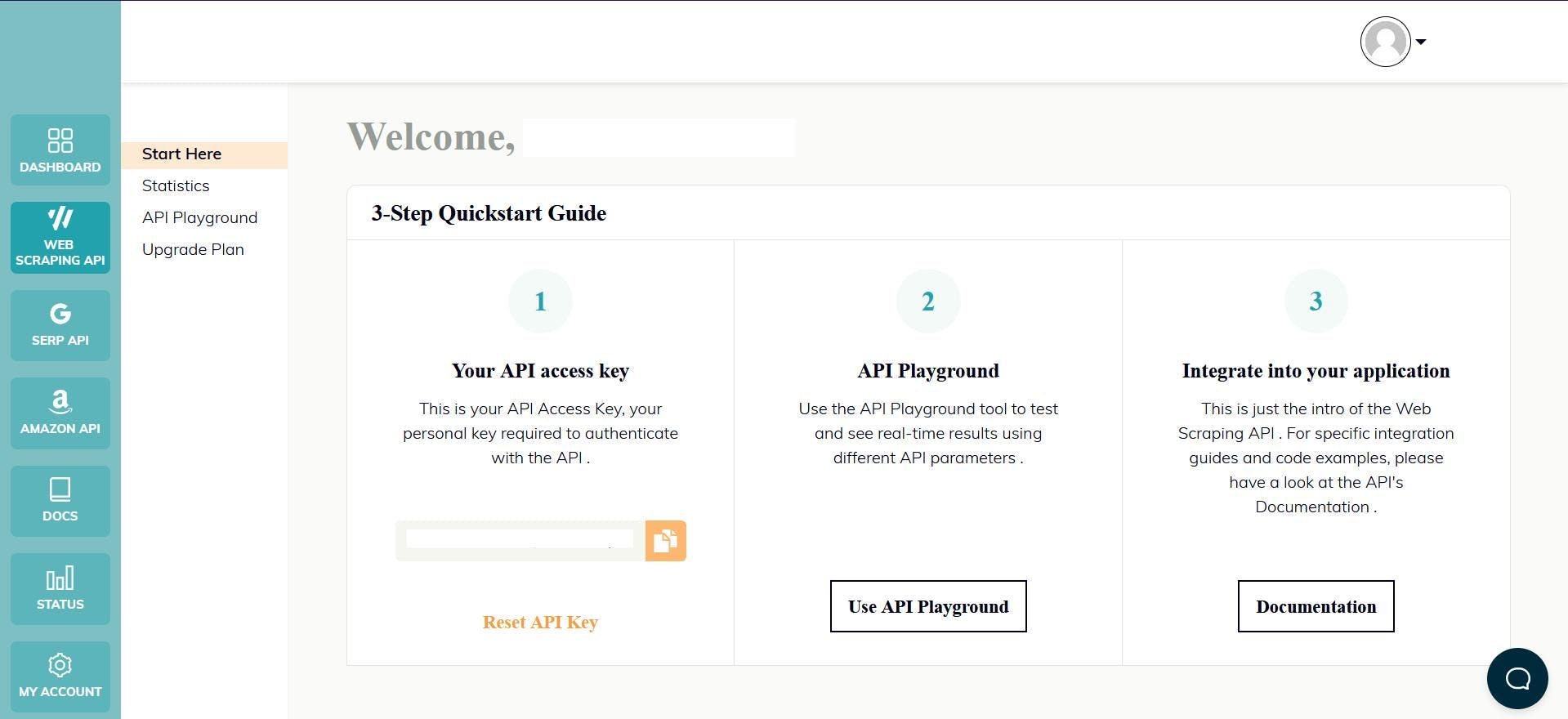

WebScrapingAPI is an example of such a product. Its proxy rotation mechanism helps avoid blocks, and its extended knowledge base makes it possible to randomize the browser data so that requests look like they come from a real user.

The setup is quick and easy. All you need to do is register an account, so you’ll receive your API key. It can be accessed from your dashboard, and it’s used to authenticate the requests you send.

As you have already set up your Node.js environment, we can make use of the corresponding SDK. Run the following command to add it to your project dependencies:

npm install webscrapingapi

Now all that’s left to do is to send a GET request so we receive the website’s HTML document. Note that this is not the only way you can access the API.

import webScrapingApiClient from 'webscrapingapi';

const client = new webScrapingApiClient("YOUR_API_KEY");

async function exampleUsage() {

const api_params = {

'render_js': 1,

'proxy_type': 'residential',

'wait_for_css': 'div[aria-label^="Timeline: "]',

'timeout': 30000

}

const URL = "https://twitter.com/netflix"

const response = await client.get(URL, api_params)

if (response.success) {

console.log(response.response.data)

} else {

console.log(response.error.response.data)

}

}

exampleUsage();

By enabling the “render_js” parameter, we send the request using a headless browser, just as you did earlier in this tutorial.

After receiving the HTML document, you can use another library to extract the data of interest, like Cheerio. Never heard of it? Check out this guide to help you get started!

Conclusion

This article has presented a comprehensive guide on how to web scrape Twitter effectively using TypeScript. We covered the steps of setting up the necessary environment, locating and extracting data, and the potential uses of this information.

Twitter is a valuable source of data for those looking to gain insights into consumer sentiment, social media monitoring, and business intelligence. However, it's important to note that using the Twitter API alone may not be enough to access all the data you need, and that's why using a professional scraper is a better solution.

Overall, web scraping Twitter can provide valuable insights and can be a great asset for any business or individual looking to gain a competitive edge.
