Top 5 Node-Fetch Alternatives For Making HTTP Requests

WebscrapingAPI on Oct 20 2022

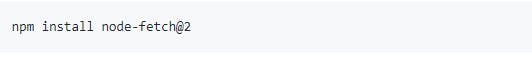

Those on team node-fetch know how easy the module is to install. A straightforward npm install node-fetch gives you everything you need right away, and you do not have to work with XMLHttpRequest directly.

Learning how to fetch data can be overwhelming as thousands of solutions are out there. Each solution claims to be better than the other. Some solutions offer cross-platform support, while others focus on developer experience.

Web applications need to communicate with web servers frequently to acquire various resources. You might need to post data to, or fetch data from, an API or external server.

In this blog, I will be taking you through node-fetch. You will understand what it is and how it can be used. I have also included node-fetch alternatives that you may opt for.

Without further ado, let’s dive in!

Node-fetch

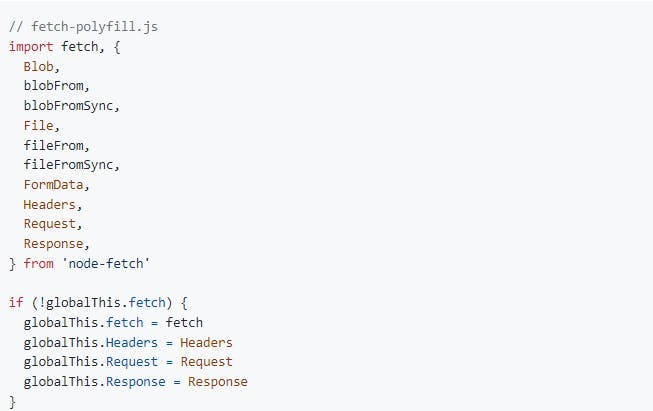

Node-Fetch is a small module that adds the Fetch API to Node.js.

With fetch (in the browser or via node-fetch), you can combine the await and .then() syntax to make turning the response stream into JSON a little more pleasant: the data variable ends up holding the parsed JSON without the need for an ugly intermediary variable.
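The one-liner described above might look like the following minimal sketch. The getJson helper, the fetchImpl parameter, and the example URL are assumptions made for illustration and are not part of node-fetch. By default the sketch uses the global fetch built into Node.js 18 and later; with node-fetch v2 you would pass require('node-fetch') as the second argument.

```javascript
// Minimal sketch: await + .then() to parse JSON in one expression.
// `getJson` and `fetchImpl` are hypothetical names for this example.
// On Node.js 18+ the global fetch is used by default; with node-fetch v2,
// call getJson(url, require('node-fetch')) instead.
async function getJson(url, fetchImpl = globalThis.fetch) {
  // `data` receives the parsed JSON directly, with no intermediate `res` variable.
  const data = await fetchImpl(url).then((res) => res.json());
  return data;
}

// Usage (performs a real network request, so it is left commented out):
// getJson('https://api.github.com/users/github').then(console.log);
```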

Furthermore, node-fetch supports handy extensions such as a response size limit, a redirect limit, and explicit errors for debugging.

In Node.js, you can use several libraries, including node-fetch, to post or fetch data, while client-side JavaScript can use the Fetch API to do the same.

To get the Google home page, for example, you first load the node-fetch module. The only argument you pass to the fetch() method is the address of the server you are sending the HTTP request to.

Because node-fetch is promise-based, you can chain a handful of .then() calls to handle the response and the data from the request.
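A promise chain of that kind could be sketched as follows. The fetchHomePage helper and its fetchImpl parameter are hypothetical names introduced here; the 80-character preview is just an arbitrary choice to keep the output short.

```javascript
// Sketch of a promise chain with fetch(). `fetchHomePage` is a hypothetical helper.
// With node-fetch v2: pass require('node-fetch') as `fetchImpl`.
// On Node.js 18+, the built-in global fetch is used by default.
function fetchHomePage(url, fetchImpl = globalThis.fetch) {
  return fetchImpl(url)                 // the URL is the only required argument
    .then((res) => res.text())          // read the body as a string
    .then((body) => body.slice(0, 80))  // keep a short preview of the HTML
    .catch((err) => {
      console.error('Request failed:', err.message);
      throw err;                        // let the caller decide what to do next
    });
}

// Usage (network call, commented out):
// fetchHomePage('https://www.google.com/').then(console.log);
```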

The url parameter simply contains the actual URL of the resource you want to get. If it is not an absolute URL, the function will fail.

When you want to use fetch() for something other than a standard GET request, you pass the optional options parameter, but we'll go over that in more detail later.
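As a rough illustration of both points, the sketch below checks that a URL is absolute and builds an options object for a JSON POST request. The buildJsonPost helper is hypothetical, and httpbin.org in the usage comment is only an example endpoint.

```javascript
// Sketch: building the optional `options` argument for a non-GET request.
// `buildJsonPost` is a hypothetical helper, not part of node-fetch.
function buildJsonPost(url, payload) {
  // new URL() throws a TypeError for relative URLs such as '/users',
  // similar to how fetch() in Node.js fails on non-absolute URLs.
  const absolute = new URL(url);
  return {
    url: absolute.href,
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(payload),
    },
  };
}

// Usage (network call, commented out):
// const { url, options } = buildJsonPost('https://httpbin.org/post', { name: 'Ada' });
// fetch(url, options).then((res) => res.json()).then(console.log);
```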

The method returns a Response object, which provides useful methods and HTTP response information such as:

- text() - returns the response body as a string.

- json() - parses the response body into a JSON object and throws an error if it cannot be parsed.

- status and statusText - these fields contain information about the HTTP status code.

- ok - equals true if the status is a 2xx status code (a successful request).

- headers - an object holding the response headers; its get() method may be used to obtain a single header.
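To see these members without touching the network, you can build a Response by hand. The sketch below assumes Node.js 18 or later, where the Response class is available globally (node-fetch also exports a compatible Response class). The describeResponse helper and the sample body are made up for illustration.

```javascript
// Sketch: inspecting a Response. The Response class is global in Node.js 18+.
// `describeResponse` is a hypothetical helper; the sample values are made up.
async function describeResponse(res) {
  return {
    ok: res.ok,                                    // true for 2xx statuses
    status: res.status,                            // numeric status code
    statusText: res.statusText,                    // status message
    contentType: res.headers.get('content-type'),  // single header lookup
    data: await res.json(),                        // rejects if the body is not valid JSON
  };
}

const sample = new Response(JSON.stringify({ id: 1 }), {
  status: 200,
  statusText: 'OK',
  headers: { 'Content-Type': 'application/json' },
});

describeResponse(sample).then((info) => console.log(info));
```

Note that a response body can only be read once, so calling json() or text() a second time on the same Response fails.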

Popularity

- 8 MM npm weekly downloads

- +6.8K Modules that depend on node-fetch

- +383 Forks

- 3.8K GitHub stars

- 38 contributors

Features of node-fetch

- Stay consistent with the window.fetch API.

- Make conscious trade-offs when following the WHATWG fetch spec and stream spec implementation details, and document known differences.

- Use native promises and async functions.

- On both the request and the response, use native Node streams for the body.

- Decode content encoding (gzip/brotli/deflate) correctly and automatically convert string output (including res.json() and res.text()) to UTF-8.

- Provide useful extensions such as a response size limit, a redirect limit, and explicit errors for debugging.

Pros

- With an install size of about 150 KB, Node-fetch is perhaps the most lightweight HTTP request module.

- It offers impressive features such as JSON mode, a promise API, browser compatibility, request cancellation, the ability to replace its promise library, and decoding of modern web encodings such as gzip/deflate.

- It adheres to the most recent JavaScript HTTP request patterns and remains the most downloaded module after request, with about 8 million downloads each week (surpassing Got, Axios, and Superagent).

Why You Need a Node-fetch Alternative

- It does not support HTTP/2 or cookies

- Node-fetch does not allow RFC-compliant caching

- Node-fetch does not retry on failure.

- It does not support progress events, metadata errors, advanced timeouts, or hooks.
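To give a sense of what the missing retry support means in practice, here is a minimal sketch of the kind of wrapper you would have to write by hand. The fetchWithRetry function and its retries, delayMs, and fetchImpl options are hypothetical names, and retrying on 5xx responses is a design choice of this sketch rather than anything node-fetch prescribes.

```javascript
// Sketch: a hand-rolled retry wrapper around fetch().
// `fetchWithRetry`, `retries`, `delayMs` and `fetchImpl` are hypothetical names.
async function fetchWithRetry(
  url,
  { retries = 2, delayMs = 200, fetchImpl = globalThis.fetch } = {}
) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt += 1) {
    try {
      const res = await fetchImpl(url);
      // fetch() only rejects on network errors, so 5xx is treated as retryable too.
      if (res.status < 500) return res;
      lastError = new Error(`Server responded with ${res.status}`);
    } catch (err) {
      lastError = err;
    }
    if (attempt < retries) {
      // Fixed delay between attempts; real code might use exponential backoff.
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw lastError;
}

// Usage (network call, commented out):
// fetchWithRetry('https://example.com/', { retries: 3 }).then((res) => console.log(res.status));
```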

My top node-fetch alternatives

Here is a list of my top 5 node-fetch alternatives you can use in your solutions

- Axios

- Got

- Superagent

- Request

- WebscrapingAPI

I will go through each of them for you to better understand what they are and what they offer.

Let’s dive in!

1. Axios

Axios is a promise-based HTTP client for Node.js and the browser. Like SuperAgent, it automatically parses JSON responses. Its ability to make concurrent requests with axios.all sets it apart even more.

To install Axios, run npm install axios.

You can make a request by passing the relevant config to axios.
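A config-driven request could look roughly like the sketch below. It assumes Axios has been installed with npm install axios; the module is required inside the functions only so that the file still loads when the package is missing. The buildConfig, sendRequest, and getAll helpers are hypothetical names. For concurrent requests the sketch uses Promise.all, which axios.all simply wraps.

```javascript
// Sketch: an Axios request described by a single config object.
// Assumes `npm install axios`; axios is required lazily inside the functions
// so this file still loads when the package is absent.
// `buildConfig`, `sendRequest` and `getAll` are hypothetical helper names.
function buildConfig(url, payload) {
  return {
    method: 'post',
    url,
    data: payload,                          // plain objects are serialized to JSON
    headers: { Accept: 'application/json' },
    timeout: 5000,                          // milliseconds
  };
}

async function sendRequest(config) {
  const axios = require('axios');
  const response = await axios(config);
  return response.data;                     // already parsed when the server returns JSON
}

// Concurrent requests: axios.all() is a thin wrapper over Promise.all().
async function getAll(urls) {
  const axios = require('axios');
  const responses = await Promise.all(urls.map((url) => axios.get(url)));
  return responses.map((res) => res.data);
}

// Usage (network calls, commented out):
// sendRequest(buildConfig('https://httpbin.org/post', { name: 'Ada' })).then(console.log);
```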

Because of its simplicity, some developers prefer Axios to built-in APIs. However, many people overestimate the need for such a library. node-fetch is fully capable of reproducing Axios' essential functionality.
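As a rough illustration of that claim, the sketch below reproduces two Axios conveniences on top of fetch: rejecting on non-2xx responses and returning parsed JSON. The requestJson helper and its fetchImpl parameter are hypothetical; pass require('node-fetch') for node-fetch v2, or rely on the global fetch in Node.js 18 and later.

```javascript
// Sketch: reproducing two Axios conveniences on top of fetch().
// `requestJson` is a hypothetical helper name.
async function requestJson(url, options = {}, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(url, options);
  // Axios rejects on non-2xx statuses by default; fetch() does not.
  if (!res.ok) {
    throw new Error(`Request failed with status ${res.status}`);
  }
  // Axios parses JSON automatically; with fetch() it is one explicit call.
  return res.json();
}

// Usage (network call, commented out):
// requestJson('https://api.github.com/users/github').then(console.log);
```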

Popularity

- +4.4 MM npm downloads

- +15.6K Modules depend on it

- +57K GitHub stars

- 71 contributors

- +4.4K Forks

Features

- Make XMLHttpRequests in the browser

- Supports Promise API

- Make HTTP requests in node.js

- Intercept response and request

- Cancel requests

- Transform response and request data

- JSON data automatic transforms

- Automatic data object serialization

- Client-side support

Pros

- Axios allows you to fully set up and customize your requests by feeding it a single configuration object. It can monitor the progress of POST requests and automatically transform JSON data.

- Axios is also the most used front-end HTTP request module. It is very popular and adheres to the most recent JavaScript patterns. It handles request cancellation, redirects, gzip/deflate, metadata errors, and hooks.

Cons

- Axios does not support HTTP/2, the stream API, or Electron. It also does not retry on failure, and it only runs on Node.js versions with built-in promise support; older versions require a promise library such as Q or Bluebird.

2. Got

Got is yet another user-friendly and robust HTTP request library for Node.js. It was initially designed as a lightweight replacement for the popular Request package. Its documentation includes a thorough table showing how Got compares against other libraries.

To install Got, run npm install got.

Got has an option for JSON payload handling.

Unlike SuperAgent and Axios, Got does not automatically parse JSON. To enable this capability, { json: true } is passed as an option in the code.
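The { json: true } form belongs to older Got releases. As far as I can tell, from Got 10 onward the json option carries the request payload and responseType: 'json' asks Got to parse the response, so a current-style sketch might look like the one below. It assumes Got has been installed with npm install got; buildGotOptions and postJson are hypothetical helper names.

```javascript
// Sketch: JSON handling with Got. Assumes `npm install got`.
// Got 12+ is ESM-only, so it is loaded with a dynamic import() here.
// `buildGotOptions` and `postJson` are hypothetical helper names.
function buildGotOptions(payload) {
  return {
    json: payload,          // request body, serialized as JSON (Got 10+)
    responseType: 'json',   // ask Got to parse the response body as JSON
  };
}

async function postJson(url, payload) {
  const { default: got } = await import('got');
  const response = await got.post(url, buildGotOptions(payload));
  return response.body;     // a parsed object because of responseType: 'json'
}

// Usage (network call, commented out):
// postJson('https://httpbin.org/post', { hello: 'world' }).then(console.log);
```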

According to its documentation, Got was created because Request is bloated (it weighs several megabytes: 4.46 MB vs. 302 KB for Got).

Popularity

- +6.2 MM npm downloads

- +2.5K Modules depend on Got

- +280 Forks

- +5K GitHub stars

- 71 contributors

Features

- Supports HTTP/2

- Supports PromiseAPI and StreamAPI

- Retries on failure

- Follows redirects

Pros

- Compared to the other alternatives, Got supports more features and is growing in popularity because it is user-friendly, has a small install size, and is up to date with the latest JavaScript patterns.

Cons

- Got does not have browser support

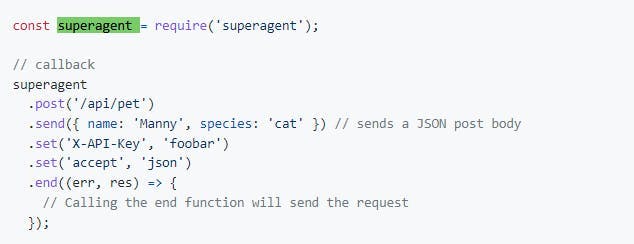

3. SuperAgent

SuperAgent is a tiny HTTP request library that can be used in Node.js and browsers to make AJAX requests.

SuperAgent has thousands of plugins available to carry out tasks like preventing caching, transforming server payloads, and adding a suffix or prefix to URLs.

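To install SuperAgent, run npm install superagent.

In Node.js, you require the module and chain calls such as get(), query(), and set() before sending the request.

You can also extend its functionality by writing your own plugin. SuperAgent can also parse JSON data for you.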
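The sketch below shows what basic usage in Node.js and a tiny custom plugin might look like. It assumes SuperAgent has been installed with npm install superagent. The prefix plugin and the getUser helper are hypothetical names written for this example, and api.example.com is a placeholder base URL.

```javascript
// Sketch: SuperAgent usage in Node.js plus a tiny custom plugin.
// Assumes `npm install superagent`; required lazily so the file loads without it.
// `prefix` and `getUser` are hypothetical names; the base URL is a placeholder.
function prefix(base) {
  // A SuperAgent plugin is a function that receives the request object.
  return (request) => {
    if (request.url.startsWith('/')) {
      request.url = base + request.url;
    }
    return request;
  };
}

async function getUser(id) {
  const superagent = require('superagent');
  const res = await superagent
    .get(`/users/${id}`)
    .use(prefix('https://api.example.com'))  // apply the plugin
    .query({ verbose: 'true' })              // adds ?verbose=true
    .set('Accept', 'application/json');
  return res.body;                           // JSON responses are parsed automatically
}

// Usage (network call, commented out):
// getUser(42).then(console.log);
```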

Popularity

- 2.5 MM npm downloads

- +6.4K Modules depend on SuperAgent

- +1.2K Forks

- +14K stars on GitHub

- 182 contributors

Pros

- SuperAgent is well known for providing a fluent interface for performing HTTP requests, a plugin architecture, and ready-made plugins for many popular features (for example, superagent-prefix, which adds a prefix to every URL).

- Superagent also offers a stream and promise API, request cancellation, retries when a request fails, supports gzip/deflate, and handles progress events.

Cons

- Superagent's build is presently failing. It also does not offer upload progress tracking like XMLHttpRequest

- It does not support timers, metadata errors, or hooks.

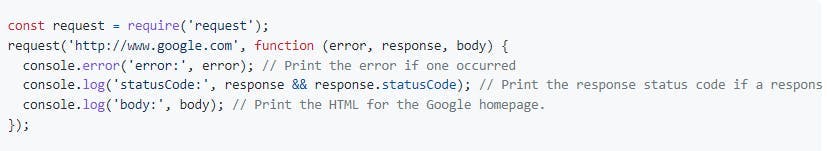

4. Request

Request is one of the most popular simple HTTP clients for Node.js, and it was among the first modules published to the npm registry.

It has over 14 million downloads each week and is built to be the simplest way to perform HTTP requests in Node.js.

A file can also be streamed to a POST or PUT request. When you do this, Request checks the file extension against a mapping of file extensions to content types and sets the matching Content-Type header.

You can also customize HTTP headers like User-Agent in the options object.
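Both ideas could be sketched as follows, assuming the deprecated request package is still installed (npm install request). The helper names buildRequestOptions, getWithCallback, and uploadFile are hypothetical, as are the file path and URLs in the usage comments.

```javascript
// Sketch: custom headers and file streaming with the (deprecated) request package.
// Assumes `npm install request`; required lazily so the file loads without it.
// `buildRequestOptions`, `getWithCallback` and `uploadFile` are hypothetical names.
const fs = require('fs');

function buildRequestOptions(url, userAgent) {
  return {
    url,
    headers: { 'User-Agent': userAgent },  // custom header set in the options object
  };
}

function getWithCallback(options, callback) {
  const request = require('request');
  request(options, (error, response, body) => {
    if (error) return callback(error);
    callback(null, { statusCode: response.statusCode, body });
  });
}

function uploadFile(path, url) {
  const request = require('request');
  // Stream the file into a PUT request; the Content-Type is inferred
  // from the file extension when no header provides one.
  return fs.createReadStream(path).pipe(request.put(url));
}

// Usage (network calls, commented out):
// getWithCallback(buildRequestOptions('https://example.com/', 'my-app/1.0'), console.log);
// uploadFile('data.json', 'https://example.com/upload.json');
```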

Popularity

- +9 MM npm downloads

- +6.4K Modules depend on Request

- +3.2K Forks

- +25.2K stars on GitHub

- 126 contributors

Features

- It supports HTTPS

- It follows redirects by default.

Pros

- It is easy to get started with Request and easy to use.

- It is a popular and widely used module for making HTTP calls

Cons

- It has been fully deprecated since 2020, and no new changes are expected.

5. WebScrapingAPI

I have to say WebScrapingAPI has offered me practical solutions to challenges I have faced while fetching data from the web. WebScrapingAPI has saved me time and money, helping me focus on building my product.

WebScrapingAPI is an enterprise-grade, scalable, easy-to-use API that helps you collect and manage HTML data. You also get your whole web scraping solution under one API, which keeps your code clean.

The most basic request you can make to the API sets just two arguments: the API key, which is your access key, and the URL of the website you wish to scrape.
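A basic request of that shape might be built as in the sketch below. The endpoint and the parameter names api_key and url are assumptions based on the description above, so check the official documentation before relying on them. The buildScrapeUrl helper and the YOUR_API_KEY placeholder are hypothetical.

```javascript
// Sketch: building a basic WebScrapingAPI request URL.
// The endpoint and the parameter names `api_key` and `url` are assumptions
// based on the description above; verify them against the official docs.
const API_ENDPOINT = 'https://api.webscrapingapi.com/v1';

function buildScrapeUrl(apiKey, targetUrl) {
  const requestUrl = new URL(API_ENDPOINT);
  requestUrl.searchParams.set('api_key', apiKey);
  requestUrl.searchParams.set('url', targetUrl); // percent-encoded automatically
  return requestUrl.toString();
}

// Usage (network call, needs a real key, so it is commented out):
// fetch(buildScrapeUrl('YOUR_API_KEY', 'https://example.com/'))
//   .then((res) => res.text())
//   .then((html) => console.log(html.length));
```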

Understanding what capabilities WebScrapingAPI offers is critical to getting the most out of your web scraping journey. You can find this information in the extensive documentation, which includes code samples in several programming languages.

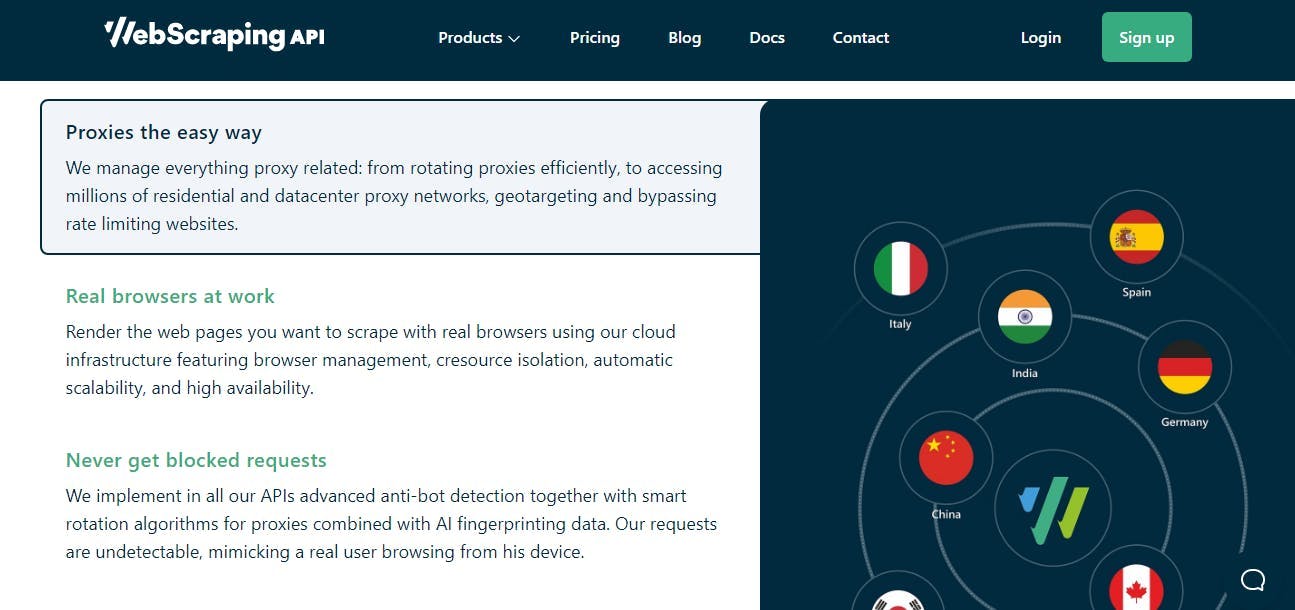

Many times I was faced with countermeasures that detected my bot and blocked it from doing my bidding. That is because you cannot scrape all websites. Some employ countermeasures like browser fingerprinting and CAPTCHAs, which are a bummer.

Dealing with bot-detecting technologies might be challenging, yet WebScrapingAPI offers solutions from CAPTCHAs to IP blocking and automated retries, managing it all. You merely need to concentrate on your objectives. They handle everything else.

It has excellent technical capabilities, with over 100 million proxies ensuring you don’t get blocked. This matters because some websites can only be scraped from certain locations worldwide, and you need a proxy to access their data from there.

Since managing a proxy pool is difficult, WebScrapingAPI does everything for you. It has millions of rotating proxies to ensure you remain undetected. It also allows you access to geo-restricted content using a specific IP address.

This API offers JavaScript rendering. You can activate JavaScript rendering using real browsers. After activating it, you get to see anything that is displayed to users. That includes single-page applications using AngularJS, React, or other libraries.

You get exactly what the users see. What better competitive advantage could you have?

Moreover, the API’s infrastructure is built on Amazon Web Services, offering you access to extensive, secure, and reliable global mass data.

In my honest opinion, using WebScrapingAPI is a win.

Pros

- Built on AWS

- Affordable pricing

- Speed-obsessed architecture

- Every package has JavaScript rendering

- High-quality services, uptime, and absolute stability

- Over 100 million rotating proxies to reduce blocking

- Customizable features

Cons

None so far.

Pricing

- The starting plan for using this API is $49 per month.

- Free trial options

WebScrapingAPI is a good option if you feel like you don’t have the time to build the web scraper from scratch. Go ahead and check it out.

Why WebScrapingAPI is my Top Recommendation:

I recommend WebScrapingAPI because it offers straightforward web scraping solutions for everyone in one API. It also has one of the best user interfaces, making it easy to scrape data.

The API is powerful enough to get your job done.

Let’s take a moment and consider all the data at your disposal. Don’t forget you can get your hands on your competitors' prices and offer your customers better deals.

WebScrapingAPI offers you pricing optimization. How? Let me put it this way. Your business can grow significantly by having a better view of your competition. As prices fluctuate in your industry, you can use data from this API to know how your business will survive.

WebScrapingAPI comes in handy when searching for an item you want to purchase. You can use the data to compare prices from various suppliers and choose the best deal.

Moreover, you don’t have to worry about being blocked. Why? Because this API makes sure you fetch the data you need without blockers. With millions of rotating proxies, you remain undetected and can access geo-restricted content using a specific IP address.

How cool is that?

The API’s infrastructure is also built on Amazon Web Services, offering you access to extensive, secure, and reliable global mass data. Hence, companies like Steelseries, Deloitte, and Wunderman Thompson trust this API for their data needs and web scraping services.

Moreover, it is only $49 per month. I am obsessed with its speed. And thanks to its global rotating proxy network, more than 10,000 users already rely on its services. That is why I would recommend using WebScrapingAPI for fetching data.
