HTTP Headers 101: How to Use Them for Effective Web Scraping

Raluca Penciuc on Feb 03 2023

Web scraping is an incredible tool for extracting valuable information from the internet, but let's be real, it can be pretty frustrating when your scraping scripts get blocked.

It's like a game of cat and mouse, with website owners always coming up with new ways to keep you out. But there's a secret weapon in your toolbox that can give you the upper hand: HTTP headers and cookies.

These two elements play a critical role in how your scraping scripts interact with websites, and mastering them can mean the difference between a blocked scrape and a successful one.

In this tutorial, we'll uncover the secrets of HTTP headers and cookies and show you how to use them to make your scraping efforts as human-like as possible.

You'll learn about the most common headers used in web scraping, how to grab headers and cookies from a real browser, and how to use custom headers to bypass security measures. So, let's dive in and see how we can take our scraping game to the next level!

Understanding HTTP headers

HTTP headers are key-value pairs that you send as part of an HTTP request or response. Each header's name and value are separated by a colon (usually followed by a space), and header names (keys) are case-insensitive.
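To see both rules in action, here is a minimal sketch using Python's standard-library http.client.parse_headers, which reads "Name: value" lines from a binary stream and returns a message object with case-insensitive lookups. Only the first colon separates the name from the value, so values such as URLs survive intact. The header values are sample data.

```python
import io
from http.client import parse_headers

# A raw header block (without the request line), terminated by an empty line
raw = (
    b"Host: httpbin.org\r\n"
    b"User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)\r\n"
    b"Referer: https://www.google.com/\r\n"
    b"\r\n"
)

# parse_headers() expects a binary file-like object and returns an HTTPMessage
headers = parse_headers(io.BytesIO(raw))

# Lookups ignore case, because HTTP header names are case-insensitive
print(headers["user-agent"])  # Mozilla/5.0 (Windows NT 10.0; Win64; x64)
print(headers["REFERER"])     # https://www.google.com/
```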

You can group HTTP headers into different categories, depending on their function and the direction in which you send them. These categories include:

- general headers: apply to both request and response messages

- request headers: contain more information about the resource you want to fetch, or about the client making the request

- response headers: hold additional information about the response, such as its location or the server providing it

- entity headers: contain information about the body of the resource, such as its size or MIME type

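- extension headers: additional, often non-standard headers that are not part of the core HTTP specification; recipients that don't recognize them generally ignore them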

HTTP headers provide a wide range of information, including the type of request you are making, the browser you are using, and any additional information that the server needs to process the request.

They also allow you to provide authentication and security information, control caching and compression, and specify the preferred language and character set of the response.

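For example, here are the headers that Chrome sends when accessing youtube.com. The entries starting with a colon (:authority, :method, :path, :scheme) are HTTP/2 pseudo-headers, which carry the information that HTTP/1.1 puts in the request line and the Host header: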

```
:authority: www.youtube.com
:method: GET
:path: /
:scheme: https
accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9
accept-encoding: gzip, deflate, br
accept-language: en-US,en;q=0.9
cache-control: no-cache
pragma: no-cache
referer: https://www.google.com/
sec-ch-ua: "Not_A Brand";v="99", "Google Chrome";v="109", "Chromium";v="109"
sec-ch-ua-arch: "x86"
sec-ch-ua-bitness: "64"
sec-ch-ua-full-version: "109.0.5414.75"
sec-ch-ua-full-version-list: "Not_A Brand";v="99.0.0.0", "Google Chrome";v="109.0.5414.75", "Chromium";v="109.0.5414.75"
sec-ch-ua-mobile: ?0
sec-ch-ua-model: ""
sec-ch-ua-platform: "Windows"
sec-ch-ua-platform-version: "15.0.0"
sec-ch-ua-wow64: ?0
sec-fetch-dest: document
sec-fetch-mode: navigate
sec-fetch-site: same-origin
sec-fetch-user: ?1
upgrade-insecure-requests: 1
user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36
x-client-data: CIm2yQEIorbJAQjEtskBCKmdygEI5PDKAQiWocsBCKr2zAEI7oLNAQibiM0BCLqIzQEI9YjNAQ==
Decoded:
message ClientVariations {
  // Active client experiment variation IDs.
  repeated int32 variation_id = [3300105, 3300130, 3300164, 3313321, 3324004, 3330198, 3357482, 3359086, 3359771, 3359802, 3359861];
}
```
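The decoded view of x-client-data above is produced by Chrome DevTools. As a sketch of how it is derived, assuming (as the decoded output suggests) that the header is a base64-encoded protobuf message whose only field is the repeated varint field 1 (variation_id), you can reproduce the IDs with the standard library alone:

```python
import base64


def decode_varint(data: bytes, pos: int) -> tuple[int, int]:
    """Decode one protobuf base-128 varint starting at pos; return (value, new_pos)."""
    result = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift  # the low 7 bits carry the payload
        if not byte & 0x80:  # a clear high bit marks the last byte of the varint
            return result, pos
        shift += 7


def decode_client_variations(header_value: str) -> list[int]:
    """Extract variation IDs, assuming every field is field 1 with varint wire type."""
    data = base64.b64decode(header_value)
    ids = []
    pos = 0
    while pos < len(data):
        key, pos = decode_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if field_number != 1 or wire_type != 0:
            raise ValueError(f"unexpected field {field_number} (wire type {wire_type})")
        value, pos = decode_varint(data, pos)
        ids.append(value)
    return ids


x_client_data = "CIm2yQEIorbJAQjEtskBCKmdygEI5PDKAQiWocsBCKr2zAEI7oLNAQibiM0BCLqIzQEI9YjNAQ=="
print(decode_client_variations(x_client_data))
# [3300105, 3300130, 3300164, 3313321, 3324004, 3330198, 3357482, 3359086, 3359771, 3359802, 3359861]
```

The sketch raises an error on any other field, so it is only meant for values shaped like the one in this example.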

Understanding web cookies

Web cookies, or HTTP cookies, are small pieces of data that a website stores in the user’s browser. After that, every time the user sends a new request to the website, the browser includes the cookies automatically.

Each cookie is identified by its name (together with the domain and path it belongs to), and cookies generally fall into two categories:

- session cookies: they are temporary and expire when the user closes the browser

- persistent cookies: they have a specific expiration date and remain on the user's device until they expire or are deleted by the user.
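The difference between the two shows up in the Set-Cookie response header: a cookie with an Expires or Max-Age attribute is persistent, while one without either is a session cookie. Here is a minimal sketch with Python's http.cookies module; the cookie names and values are made up for illustration.

```python
from http.cookies import SimpleCookie


def classify_set_cookie(set_cookie_value: str) -> dict[str, str]:
    """Map each cookie name in a Set-Cookie value to 'session' or 'persistent'."""
    jar = SimpleCookie()
    jar.load(set_cookie_value)
    kinds = {}
    for name, morsel in jar.items():
        # Morsel attributes default to "", so a missing Expires/Max-Age is falsy
        has_lifetime = bool(morsel["expires"] or morsel["max-age"])
        kinds[name] = "persistent" if has_lifetime else "session"
    return kinds


print(classify_set_cookie("session_id=abc123; Path=/; HttpOnly"))
# {'session_id': 'session'}
print(classify_set_cookie("theme=dark; Max-Age=31536000; Path=/"))
# {'theme': 'persistent'}
```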

Websites use web cookies to personalize the user’s experience: remembering login information, storing shopping cart contents, understanding how users interact with the website, and delivering targeted advertising.

Taking youtube.com as an example again, here is the Cookie header that the browser sends with its request:

cookie: CONSENT=YES+srp.gws-20210816-0-RC3.ro+FX+801; __Secure-3PAPISID=jG4abs_wYhyzcDG5/A2yfWlePlb1U9fglf; VISITOR_INFO1_LIVE=pJuwGIYiJlE; __Secure-3PSIDCC=AEf-XMRV_MjLL0AWdGWngxFHvNUF3OIpk3_jdeUwRiZ76WZ3XsSY0Vlsl1jM9n7FLprKTqFzvw; __Secure-3PSID=RAi8PYLbf3qLvF1oEav9BnHK_eOXwimNM-0xwTQPj1-QVG1Xwpz17T4d-EGzT6sVps1PjQ.; YSC=4M3JgZEwyiA; GPS=1; DEVICE_INFO=ChxOekU1TURJMk1URTBOemd5TWpJeU5qVTJOdz09EOvgo54GGOvgo54G; PREF=tz=Europe.Bucharest&f6=40000000&f4=4000000; CONSISTENCY=ACHmjUr7DnoYSMf5OL-vaunKYfoLGz1lWYRUZRepFyIBDRpp_jrEa85E4wgRJLJ2j15l688hk9IVQu7fIjYXo7sdsZamArxVHTMuChgHd22PkX_mbfifnMjyp4OX2swyQJRS-8PE6cOCt_6129fGyBs; amp_adc4c4=Ncu7lbhgeiAAYqecSmyAsS.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1gn4emd4v.1gn4en556.0.4.4
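Unlike Set-Cookie, the Cookie request header carries only name=value pairs separated by semicolons. The following sketch turns such a header into a dictionary. It splits each pair on its first "=" only, because values such as PREF above can themselves contain "=" signs.

```python
def parse_cookie_header(header_value: str) -> dict[str, str]:
    """Split a Cookie request header into a {name: value} dictionary."""
    cookies = {}
    for pair in header_value.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        # partition() splits on the first "=" only, so values may contain "="
        name, _, value = pair.partition("=")
        cookies[name] = value
    return cookies


sample = "YSC=4M3JgZEwyiA; GPS=1; PREF=tz=Europe.Bucharest&f6=40000000&f4=4000000"
print(parse_cookie_header(sample)["PREF"])  # tz=Europe.Bucharest&f6=40000000&f4=4000000
```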

The importance of headers and cookies in web scraping

In web scraping, you can use headers and cookies to bypass security measures and access restricted content, as well as to provide information that can help identify your scraping script as a legitimate browser.

For example, by specifying the correct User-Agent header (more details in the following section), you can make your script appear as if it is a Chrome browser, which can help you avoid detection by the website.

Also, by storing and sending cookies, your scraper can access content that is only available to logged-in users. And since websites use cookies to personalize what they show, sending the right cookies can help you extract the same data that a real visitor would see.

Besides playing with HTTP headers and cookies, consider some pretty useful web scraping best practices listed in this guide.

Common headers used in web scraping

There are many different headers that can be used in web scraping, but some of the most commonly used are:

User-Agent

It identifies the browser and operating system of the client making the request. Servers use this information to provide content and features appropriate for that client.

The User-Agent string contains information such as the browser name, version, and platform. For example, a User-Agent string for Google Chrome on Windows might look like this:

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36

In web scraping, by specifying a User-Agent that belongs to a common web browser, you can make your script appear as a browser that is commonly used by legitimate users, thus making it less likely to be blocked.
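As an illustration with Python's standard library (urllib is used here only because it needs no extra packages): by default, urllib identifies itself as "Python-urllib/<version>", which is easy for a server to flag, but you can override the User-Agent per request. Nothing is sent over the network in this sketch.

```python
import urllib.request

CHROME_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"
)

# The default opener advertises itself as "Python-urllib/<version>"
default_opener = urllib.request.build_opener()
print(dict(default_opener.addheaders)["User-agent"])  # prints Python-urllib/<version>

# Override the User-Agent for a single request; urlopen() only adds its
# default User-agent when the request does not already carry one
request = urllib.request.Request(
    "https://httpbin.org/headers",
    headers={"User-Agent": CHROME_UA},
)
# urllib normalizes header names with str.capitalize(), hence "User-agent"
print(request.get_header("User-agent"))
```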

Accept

It is used to specify the types of content that the browser is willing to accept in response to an HTTP request, such as text, images, audio, or video. An Accept header may look like this:

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

In web scraping, by specifying the correct Accept header, you can ensure that your script receives the correct data. For example, if you want to scrape an HTML page, you can specify the Accept header as text/html.

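However, some websites can use this header to detect scrapers. An Accept value that differs from what real browsers send (for example, a bare text/html) can stand out, so use it carefully and keep it consistent with the browser you are imitating.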
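Browsers rank the entries in an Accept header with q ("quality") weights between 0 and 1, and a missing q means 1. The following sketch parses such a header and orders the entries by preference. It is a simplification: it ignores parameters other than q (such as v=b3), and real content negotiation also weighs how specific each media type is. The same parser works for Accept-Language.

```python
def parse_quality_list(header_value: str) -> list[tuple[str, float]]:
    """Parse a comma-separated header with optional q weights, best match first."""
    entries = []
    for item in header_value.split(","):
        token, *params = [part.strip() for part in item.split(";")]
        quality = 1.0  # q defaults to 1 when it is omitted
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                quality = float(value)
        entries.append((token, quality))
    # sorted() is stable, so entries with equal weight keep their original order
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


print(parse_quality_list("text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8"))
# [('text/html', 1.0), ('application/xhtml+xml', 1.0), ('image/webp', 1.0), ('application/xml', 0.9), ('*/*', 0.8)]
```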

Accept-Language

This header specifies the preferred language of the content that the browser is willing to accept in response to an HTTP request. The Accept-Language header is used by servers to determine which language to send to the client.

A browser requesting an HTML page in English might send an Accept-Language header that looks like this:

Accept-Language: en-US,en;q=0.9
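If you want your scraper to request a specific locale, a hypothetical helper like the one below can build this header from an ordered list of language tags. It lowers the q weight by 0.1 for each subsequent tag; that step size is an arbitrary choice for this sketch, not a rule browsers follow.

```python
def build_accept_language(languages: list[str]) -> str:
    """Build an Accept-Language value, most preferred language first."""
    parts = []
    for index, language in enumerate(languages):
        if index == 0:
            parts.append(language)  # the first entry keeps the implicit q=1
        else:
            quality = max(10 - index, 1) / 10  # 0.9, 0.8, ... never below 0.1
            parts.append(f"{language};q={quality:.1f}")
    return ",".join(parts)


print(build_accept_language(["en-US", "en"]))  # en-US,en;q=0.9
```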

Cookie

Clients use this header to send previously stored cookies back to the server with each request (servers set cookies through the Set-Cookie response header). A Cookie header may look like this:

Cookie: session_id=1234567890; user_id=johndoe

In web scraping, you can use this header to pass session cookies and access content that is available only to logged-in users. Another use case would be to use persistent cookies to get personalized results.
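To send cookies yourself, join the name=value pairs with "; " into a single Cookie header. Here is a minimal sketch with urllib, reusing the placeholder cookies from the example above; no request is sent until you pass the Request object to urlopen().

```python
import urllib.request


def build_cookie_header(cookies: dict[str, str]) -> str:
    """Serialize cookies into the format used by the Cookie request header."""
    return "; ".join(f"{name}={value}" for name, value in cookies.items())


cookies = {"session_id": "1234567890", "user_id": "johndoe"}
request = urllib.request.Request(
    "https://httpbin.org/headers",
    headers={"Cookie": build_cookie_header(cookies)},
)
print(request.get_header("Cookie"))  # session_id=1234567890; user_id=johndoe
```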

Referer

This header specifies the URL of the previous webpage from which a link to the current page was followed. It's used by servers to track the origin of the request and to understand the context of the request.

For example, if a user clicks on a link from a webpage to another webpage, the browser sends a request to the second webpage with the URL of the first webpage in the Referer header. So the request to the second webpage would have a Referer header that looks like this:

Referer: https://www.example.com/

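In web scraping, you can use the Referer header to access websites that do not allow direct requests. Some sites reject requests that arrive without a Referer, or with one from an unexpected origin, so setting it to a plausible previous page can help.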
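One way to keep Referer values realistic while crawling is to set the header to the page on which you found each link, just as a browser does. This sketch uses urllib.parse.urljoin to resolve relative links; the helper name and URLs are illustrative.

```python
from urllib.parse import urljoin


def next_request(current_url: str, href: str) -> tuple[str, dict[str, str]]:
    """Return the absolute URL for a link and the headers for following it."""
    target = urljoin(current_url, href)  # resolve relative links against the page URL
    return target, {"Referer": current_url}


url, headers = next_request("https://www.example.com/", "/products?page=2")
print(url)      # https://www.example.com/products?page=2
print(headers)  # {'Referer': 'https://www.example.com/'}
```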

Retrieving headers and cookies from a real browser

But enough theory. Let’s see how you can extract HTTP headers and web cookies that are sent to a website.

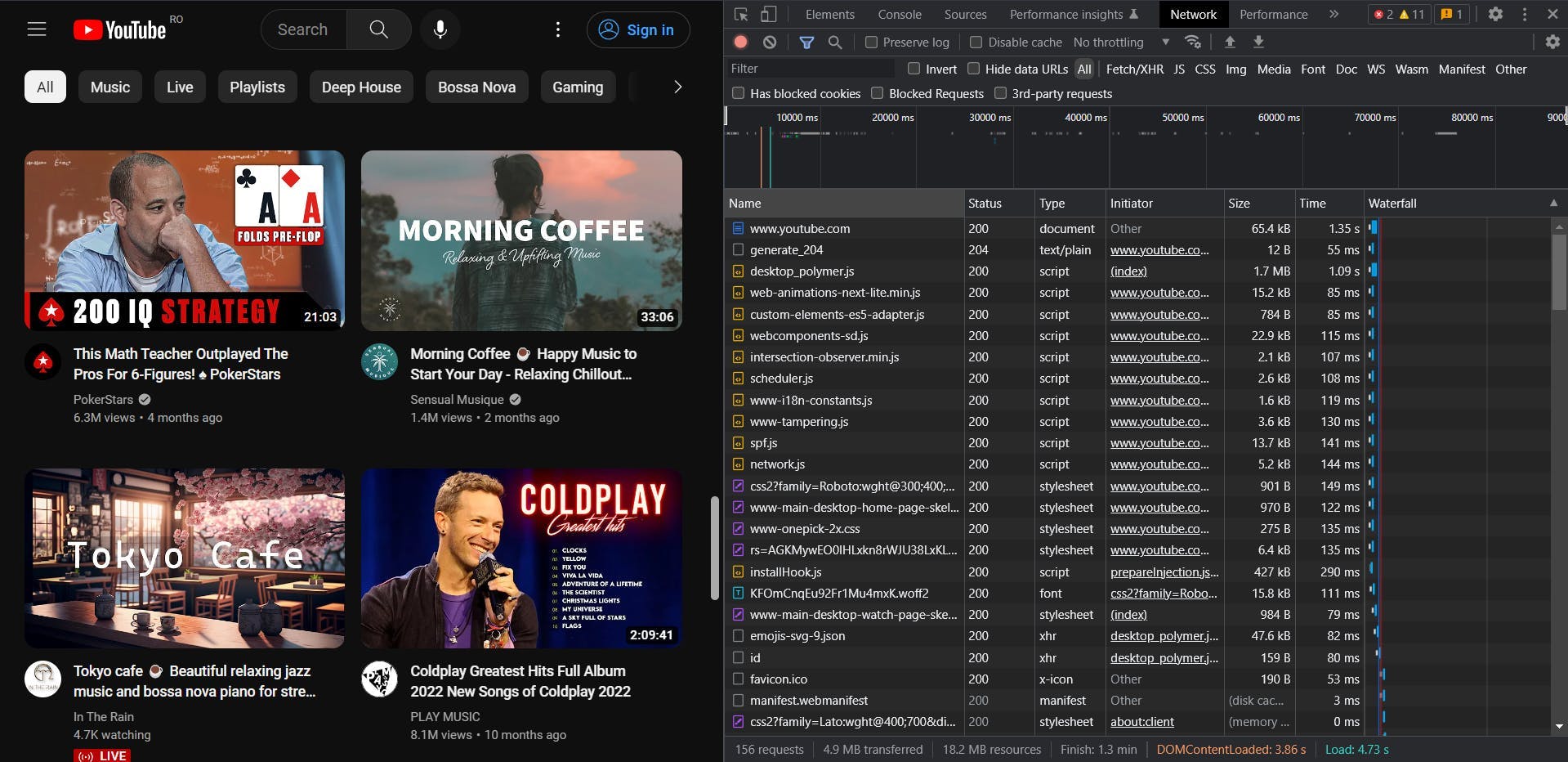

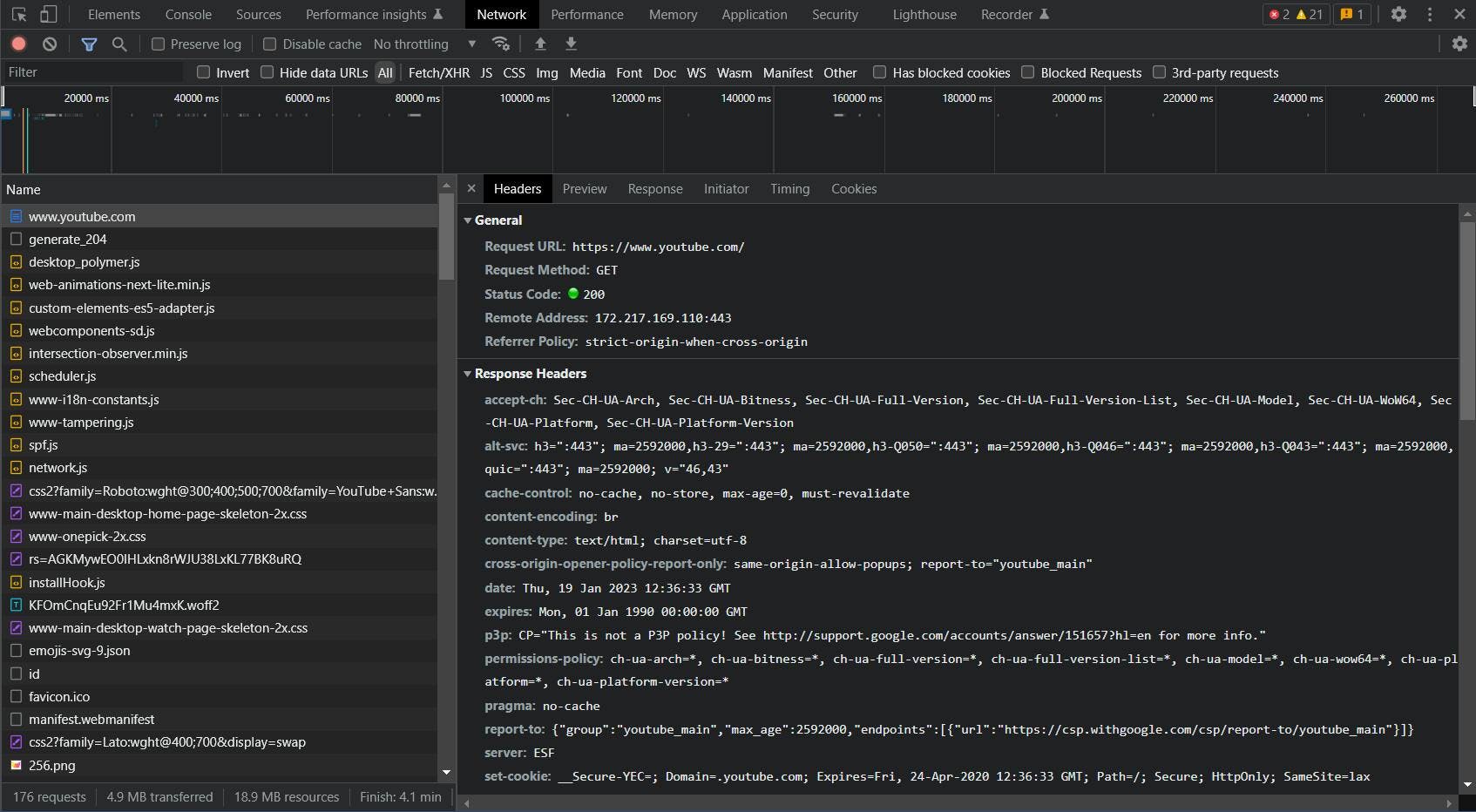

Back to our example: navigate to youtube.com in your real browser. Then right-click anywhere on the page and choose the “Inspect” option. When the Developer Tools open, go to the “Network” tab.

After refreshing the page, you should see the requests loading in real time. Click the first (main) request, and a panel with its details will appear.

Here you can see all the details of the request you sent: URL, method, status code, remote address, and most importantly: the request and response headers that we were looking for.
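If you copy the request headers from DevTools as plain text, you can turn them into a dictionary for your scraper. This sketch assumes you copied only the header lines, one "name: value" pair per line. It skips the HTTP/2 pseudo-headers (:authority, :method, :path, :scheme), because they are not regular headers and your HTTP client derives that information from the URL and method.

```python
def devtools_dump_to_dict(dump: str) -> dict[str, str]:
    """Turn 'name: value' lines copied from DevTools into a headers dictionary."""
    headers = {}
    for line in dump.splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ":authority"
        if not line or line.startswith(":"):
            continue
        # Split on the first colon only, so values like URLs stay intact
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip()] = value.strip()
    return headers


copied = """
:authority: www.youtube.com
:method: GET
accept-language: en-US,en;q=0.9
referer: https://www.google.com/
"""
print(devtools_dump_to_dict(copied))
# {'accept-language': 'en-US,en;q=0.9', 'referer': 'https://www.google.com/'}
```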

Using custom headers in web scraping


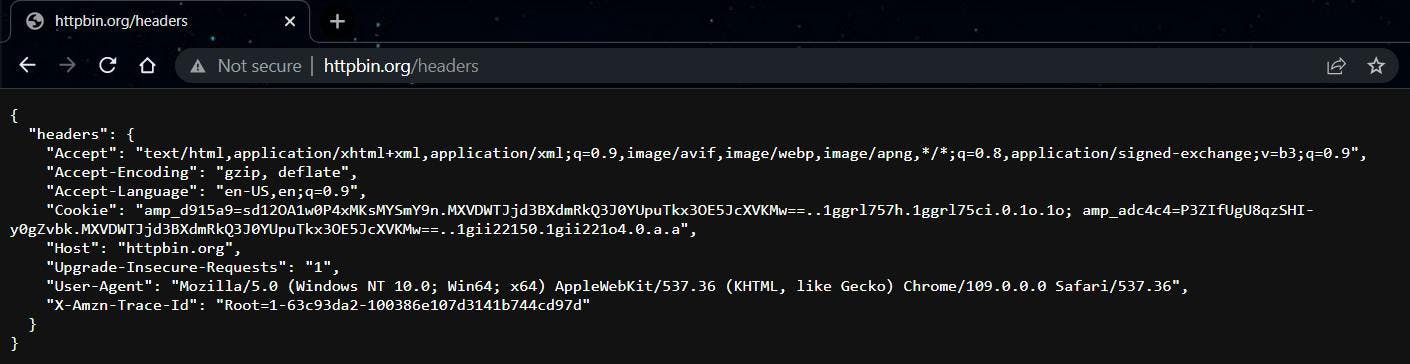

Now let’s see how we can use these headers to improve our scrapers. For this part, we’ll use a simple mirroring service that echoes back the headers we send: https://httpbin.org/headers.

Copy the content of the “headers” object and let’s start writing the code.
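Before reusing that object, you may want to drop entries that describe the echo service rather than the browser. In the httpbin.org output, Host simply mirrors the target URL, and X-Amzn-Trace-Id appears to be added on httpbin.org's side rather than by the browser. Here is a hypothetical cleanup helper; the set of names to drop is an assumption for this sketch.

```python
# Header names to drop, compared case-insensitively (an assumption for this sketch)
ECHO_ONLY_HEADERS = {"host", "x-amzn-trace-id"}


def clean_copied_headers(headers: dict[str, str]) -> dict[str, str]:
    """Return a copy of headers without entries that came from the echo service."""
    return {
        name: value
        for name, value in headers.items()
        if name.lower() not in ECHO_ONLY_HEADERS
    }


copied = {
    "Host": "httpbin.org",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "X-Amzn-Trace-Id": "Root=1-63c93e34-1ad0141279d49bfc28fb058e",
}
print(clean_copied_headers(copied))
# {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'}
```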

Node.js

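After making sure that your Node.js environment is set up correctly, your project is initialized, and got is installed (npm install got), run the following code. Recent versions of got are ESM-only, so the project must use ES modules (for example, "type": "module" in package.json):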

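```javascript
// got is ESM-only (v12+), so this file must be loaded as an ES module
import got from 'got';

(async () => {
  // Plain GET request using got's default headers
  const response = await got('https://httpbin.org/headers');
  console.log(response.body);
})();
```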

This shows what the most basic GET request looks like. The result should be:

```json
{
  "headers": {
    "Accept-Encoding": "gzip, deflate, br",
    "Host": "httpbin.org",
    "User-Agent": "got (https://github.com/sindresorhus/got)",
    "X-Amzn-Trace-Id": "Root=1-63c93ff5-0c352d6319620b3d6b46df02"
  }
}
```

This looks very different from what we saw in our browser. The User-Agent alone makes it really easy for a server to detect that the request is automated.
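To make that concrete, here is a deliberately naive sketch of the kind of check a server could run on the echoed headers. It flags User-Agent strings that contain the default identifiers of a few HTTP libraries. The marker list is an illustrative assumption; real anti-bot systems combine many more signals.

```python
import json

# Substrings found in the default User-Agent of some common HTTP clients
# (an illustrative, far from complete list)
LIBRARY_UA_MARKERS = ("got (", "python-requests/", "python-urllib/", "curl/")


def looks_automated(httpbin_body: str) -> bool:
    """Naively flag a request whose echoed User-Agent belongs to an HTTP library."""
    user_agent = json.loads(httpbin_body)["headers"].get("User-Agent", "")
    return any(marker in user_agent.lower() for marker in LIBRARY_UA_MARKERS)


got_default = '{"headers": {"Host": "httpbin.org", "User-Agent": "got (https://github.com/sindresorhus/got)"}}'
print(looks_automated(got_default))  # True
```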

Now let’s pass our custom headers and send the request again:

```javascript
import got from 'got';

(async () => {
  // Headers copied from the browser via https://httpbin.org/headers
  const custom_headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-US,en;q=0.9",
    "Cache-Control": "no-cache",
    "Cookie": "amp_d915a9=sd12OA1w0P4xMKsMYSmY9n.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1ggrl757h.1ggrl75ci.0.1o.1o; amp_adc4c4=P3ZIfUgU8qzSHI-y0gZvbk.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1gn51hk3v.1gn51lql7.0.e.e",
    "Host": "httpbin.org",
    "Pragma": "no-cache",
    "Sec-Ch-Ua": "\"Not_A Brand\";v=\"99\", \"Google Chrome\";v=\"109\", \"Chromium\";v=\"109\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "none",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
    // Appears to be added on httpbin.org's side; it can usually be omitted
    "X-Amzn-Trace-Id": "Root=1-63c93e34-1ad0141279d49bfc28fb058e"
  };

  // Send the same GET request, this time with browser-like headers
  const response = await got('https://httpbin.org/headers', {
    headers: custom_headers
  });

  console.log(response.body);
})();
```

Run the script again and you should notice that our request now looks like it was sent from a real Chrome browser, even though we never opened one.

Python

Now let’s try the same thing in Python. Even though the syntax and the libraries are different, the principle is just the same.

```python
import requests  # third-party package: pip install requests

url = 'https://httpbin.org/headers'

# Headers copied from the browser via https://httpbin.org/headers
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
    # requests only decodes "br" responses if a Brotli library (brotli or brotlicffi) is installed
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-US,en;q=0.9",
    "Cache-Control": "no-cache",
    "Cookie": "amp_d915a9=sd12OA1w0P4xMKsMYSmY9n.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1ggrl757h.1ggrl75ci.0.1o.1o; amp_adc4c4=P3ZIfUgU8qzSHI-y0gZvbk.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1gn51hk3v.1gn51lql7.0.e.e",
    "Host": "httpbin.org",
    "Pragma": "no-cache",
    "Sec-Ch-Ua": "\"Not_A Brand\";v=\"99\", \"Google Chrome\";v=\"109\", \"Chromium\";v=\"109\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "none",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
    # Appears to be added on httpbin.org's side; it can usually be omitted
    "X-Amzn-Trace-Id": "Root=1-63c93e34-1ad0141279d49bfc28fb058e"
}

# Send the GET request with the browser-like headers and print the echoed result
response = requests.get(url, headers=headers)
print(response.text)
```

The output should look the same no matter which programming language you use.

Custom Headers in WebScrapingAPI

Any respectable scraping API should let you pass custom HTTP headers and cookies with a request. The exact mechanism differs from API to API, so always consult the official documentation first.

By default, WebScrapingAPI adds its own set of headers to every request; a random User-Agent header is one example.

However, since some websites are more complex (or simply outdated), you have the option to disable this behavior and fully customize your request. Just make sure to grab an API key first, then run the following code:

```javascript
import got from 'got';

(async () => {
  const response = await got("https://api.webscrapingapi.com/v1", {
    searchParams: {
      api_key: "YOUR_API_KEY",
      url: "https://httpbin.org/headers",
      keep_headers: '0',
    },
    headers: {
      "Wsa-Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
      "Wsa-Accept-Encoding": "gzip, deflate, br",
      "Wsa-Accept-Language": "en-US,en;q=0.9",
      "Wsa-Cache-Control": "no-cache",
      "Wsa-Cookie": "amp_d915a9=sd12OA1w0P4xMKsMYSmY9n.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1ggrl757h.1ggrl75ci.0.1o.1o; amp_adc4c4=P3ZIfUgU8qzSHI-y0gZvbk.MXVDWTJjd3BXdmRkQ3J0YUpuTkx3OE5JcXVKMw==..1gn51hk3v.1gn51lql7.0.e.e",
      "Wsa-Pragma": "no-cache",
      "Wsa-Sec-Ch-Ua": "\"Not_A Brand\";v=\"99\", \"Google Chrome\";v=\"109\", \"Chromium\";v=\"109\"",
      "Wsa-Sec-Ch-Ua-Mobile": "?0",
      "Wsa-Sec-Ch-Ua-Platform": "\"Windows\"",
      "Wsa-Sec-Fetch-Dest": "document",
      "Wsa-Sec-Fetch-Mode": "navigate",
      "Wsa-Sec-Fetch-Site": "none",
      "Wsa-Sec-Fetch-User": "?1",
      "Wsa-Upgrade-Insecure-Requests": "1",
      "Wsa-User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",
      "Wsa-X-Amzn-Trace-Id": "Root=1-63c93e34-1ad0141279d49bfc28fb058e"
    }
  })

  console.log(response.body)
})()
```

In this case, according to the API docs, we have to add the “Wsa-” prefix to a header’s name in order to pass it to the request. This measure prevents headers from being passed unintentionally, for example when the API request itself is sent from a browser.
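For comparison, here is a Python sketch that builds the same kind of request with the standard library. It mirrors the endpoint, query parameters (api_key, url, keep_headers) and “Wsa-” prefix from the Node.js example; it is not an official client. Note that urllib normalizes header names (for example, to Wsa-user-agent). That should be harmless because header names are case-insensitive, but check the API docs if in doubt. The request is only built here, not sent.

```python
import urllib.request
from urllib.parse import urlencode

# Endpoint and query parameters taken from the Node.js example
API_ENDPOINT = "https://api.webscrapingapi.com/v1"


def to_wsa_headers(headers: dict[str, str]) -> dict[str, str]:
    """Prefix every header name with "Wsa-" so the API forwards it to the target."""
    return {f"Wsa-{name}": value for name, value in headers.items()}


def build_wsa_request(api_key: str, target_url: str, headers: dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) a GET request to the API with custom headers."""
    query = urlencode({"api_key": api_key, "url": target_url, "keep_headers": "0"})
    return urllib.request.Request(f"{API_ENDPOINT}?{query}", headers=to_wsa_headers(headers))


request = build_wsa_request(
    "YOUR_API_KEY",
    "https://httpbin.org/headers",
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "Accept-Language": "en-US,en;q=0.9"},
)
print(request.full_url)
# Send it with urllib.request.urlopen(request) once you have a real API key
```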

Conclusion

This article has presented an overview of the importance and use of HTTP headers and web cookies in web scraping.

We have discussed what headers and cookies are, how you can use them to access restricted content and make your scraping script appear as a legitimate browser, as well as how they can be used for tracking and analytics.

We also presented some of the common headers used in web scraping and explained how to retrieve headers and cookies from a real browser. Then we used code samples to illustrate how to use them in your scraping scripts.

By understanding and effectively using headers and cookies, you can improve the efficiency of your web scraping efforts. With this knowledge, you can take your scraping skills to the next level and extract valuable information from the web.
