Node Unblocker for Web Scraping

Suciu Dan on Jan 16 2023

We all hate the moments when we try to access a page and the site blocks our request for no good reason. Geoblocking, for example, can be bypassed only by using a proxy.

Node-unblocker helps us to create a custom proxy and have it up and running in a matter of minutes.

What is Node-Unblocker?

Node Unblocker is a general-purpose library for creating a web proxy that intercepts and alters requests and responses.

In web scraping, it is also used to bypass restrictions a site puts in place, such as geo-blocking and IP-based rate limiting, by hiding the client's IP address, and to send authentication tokens.

In short, with this library you can kiss blocked and censored content goodbye.

In this article, we create an Express application with a custom proxy using Node Unblocker, we add a middleware that changes the user agent for each request, discuss the proxy limitations, deploy it to Heroku and compare it to a managed service like WebScrapingAPI.

Prerequisites

Before we start, make sure you have the latest version of Node.js installed. Installing Node.js on each platform (Windows, Linux, Mac) would be the subject of a separate article, so instead of going into detail, check the official website and follow the instructions.

Setting things up

We start by creating a directory for our project named unblocked and initialize a Node.js project inside it:

mkdir unblocked

cd unblocked

npm init

Install dependencies

For this application, we install two libraries: Express, a minimalist framework for Node.js, and Node Unblocker.

npm install express unblocker

Create the base application

Creating the Express app

Because Node Unblocker runs inside an Express instance, we first set up the Hello World example in our application.

Create an index.js file and paste the following code:

const express = require('express')

const app = express()
const port = 8080

app.get('/', (req, res) => {
  res.send('Hello World!')
})

app.listen(process.env.PORT || 8080, () => {
  console.log(`Example app listening on port ${port}`)
})

We can run the application with this command:

node index.js

If we access http://localhost:8080 we see a Hello World message. This means our application is up and running.

Adding Node Unblocker To Express

It’s time to import the Node Unblocker library into our application:

const Unblocker = require('unblocker')

We create a Node Unblocker instance and pass the prefix parameter. You can find the full list of available parameters in the library's documentation.

const unblocker = new Unblocker({ prefix: '/proxy/' })

We register the Node Unblocker instance with Express as a middleware so requests are intercepted:

app.use(unblocker)

We update the Express app listener to add support for websockets:

app.listen(process.env.PORT || 8080, () => {
  console.log(`Example app listening on port ${port}`)
}).on('upgrade', unblocker.onUpgrade)

After following all these steps, our application should look like this:

const express = require('express')
const Unblocker = require('unblocker')

const app = express()
const port = 8080

const unblocker = new Unblocker({ prefix: '/proxy/' })

app.use(unblocker)

app.get('/', (req, res) => {
  res.send('Hello World!')
})

app.listen(process.env.PORT || 8080, () => {
  console.log(`Example app listening on port ${port}`)
}).on('upgrade', unblocker.onUpgrade)

Testing the proxy

Restart the application and access the following URL in your browser:

http://localhost:8080/proxy/https://webscrapingapi.com

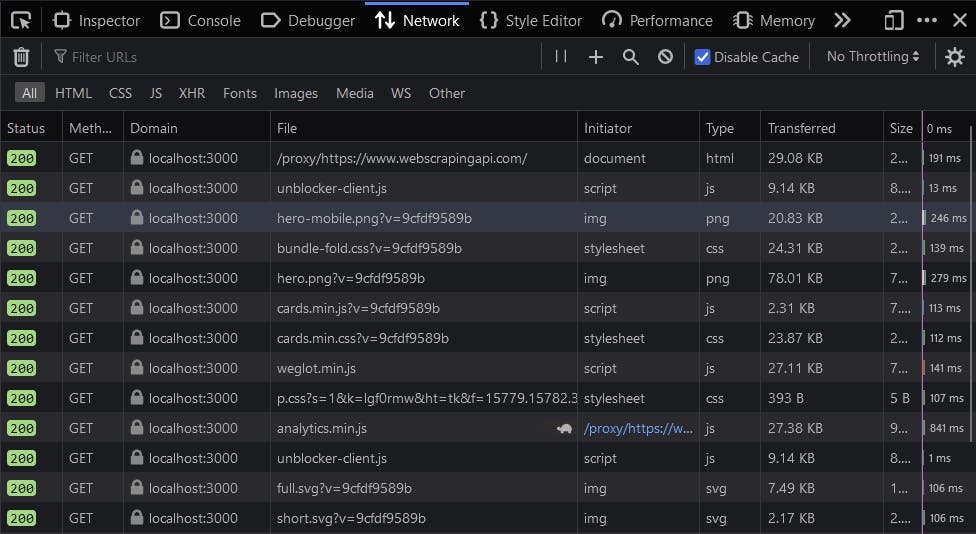

To make sure the proxy works as expected, we open the Developer Tools in the browser and check the Network tab. All requests should go through the proxy.
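The same check can be scripted instead of done by hand in the browser. The following is a minimal sketch, assuming the proxy from this tutorial is listening on http://localhost:8080 with the /proxy/ prefix. The helper names buildProxyUrl and fetchThroughProxy are hypothetical and not part of Node Unblocker; the sketch uses only Node's built-in http module and does not follow redirects.

```javascript
const http = require('node:http')

// Assumed values taken from this tutorial: local proxy address and prefix
const PROXY_ORIGIN = 'http://localhost:8080'
const PROXY_PREFIX = '/proxy/'

// Builds the proxied URL by appending the full target URL after the prefix
function buildProxyUrl(targetUrl, origin = PROXY_ORIGIN, prefix = PROXY_PREFIX) {
  return `${origin}${prefix}${targetUrl}`
}

// Requests a page through the proxy and resolves with the status code and body.
// Redirects are not followed in this simple version.
function fetchThroughProxy(targetUrl) {
  return new Promise((resolve, reject) => {
    http.get(buildProxyUrl(targetUrl), (res) => {
      let body = ''
      res.setEncoding('utf8')
      res.on('data', (chunk) => { body += chunk })
      res.on('end', () => resolve({ status: res.statusCode, body }))
    }).on('error', reject)
  })
}

console.log(buildProxyUrl('https://webscrapingapi.com'))
// → http://localhost:8080/proxy/https://webscrapingapi.com
```

With the proxy running, calling fetchThroughProxy('https://webscrapingapi.com') and logging the resolved status is a quick way to confirm that requests pass through it.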

For any issues the proxy might throw, it’s recommended to enable the debug mode by setting the DEBUG environment variable. Use this command to start the proxy in debug mode:

DEBUG=unblocker:* node index.js

It’s never a good idea to enable this in production so let’s keep it only for the development environment.

Using Middlewares

Node Unblocker is not just a custom proxy solution: it also allows intercepting and altering outgoing requests and incoming responses through middleware.

We can use this feature to block specific resources from loading based on the resource type or domain, update the user agent, replace returned content, or inject authentication tokens in request headers.

You can find a full list of examples in the project's repository.
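As an illustration of the "replace returned content" use case, here is a minimal sketch of a response middleware. It assumes that Node Unblocker passes response middleware a data object whose contentType field holds the MIME type and whose stream field can be swapped for a transformed stream, as the library's README describes. The replaceText factory is a hypothetical name, and the demo at the bottom uses a fake data object rather than a live proxy.

```javascript
const { Transform, Readable } = require('node:stream')

// Returns a response middleware that replaces every occurrence of `search`
// with `replacement` in HTML responses. Other content types are left untouched.
function replaceText(search, replacement) {
  return function replaceInHtml(data) {
    // Assumption: data.contentType is the response MIME type without charset
    if (data.contentType !== 'text/html') return

    const rewriter = new Transform({
      decodeStrings: false,
      transform(chunk, encoding, callback) {
        // Caveat: a match split across two chunks is missed in this simple version
        callback(null, chunk.toString().split(search).join(replacement))
      }
    })

    // Swap the outgoing stream for the rewritten one
    data.stream = data.stream.pipe(rewriter)
  }
}

// Standalone demo with a fake data object instead of a live proxy
const demo = {
  contentType: 'text/html',
  stream: Readable.from(['<h1>Hello World!</h1>'])
}
replaceText('World', 'Proxy')(demo)
demo.stream.on('data', (chunk) => console.log(chunk.toString()))
```

Under the same assumptions, such a function would be registered through the responseMiddleware option of the Unblocker constructor, the counterpart of requestMiddleware.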

Let’s start by creating a middleware for setting a custom user agent. Create a file called user-agent.js and add this code to it:

module.exports = function(userAgent) {
  function setUserAgent(data) {
    data["headers"]["user-agent"] = userAgent
  }

  return setUserAgent
}

This function accepts the custom user agent through the userAgent parameter and returns the setUserAgent function, which writes it to the headers of the data object. Node Unblocker calls setUserAgent for each request.

We import the middleware in index.js:

const userAgent = require('./user-agent')

We set the requestMiddleware parameter in the Unblocker constructor and we should be good to go.

const unblocker = new Unblocker({
  prefix: '/proxy/',
  requestMiddleware: [userAgent("nodeunblocker/1.5")]
})

Our index.js file should look like this:

const express = require('express')

const Unblocker = require('unblocker')

const userAgent = require('./user-agent')

const app = express()

const port = 8080

const unblocker = new Unblocker({

prefix: '/proxy/',

requestMiddleware: [userAgent("nodeunblocker/1.5")]

})

app.use(unblocker)

app.get('/', (req, res) => {

res.send('Hello World!')

})

app.listen(process.env.PORT || 8080, () => {

console.log(`Example app listening on port ${port}`)

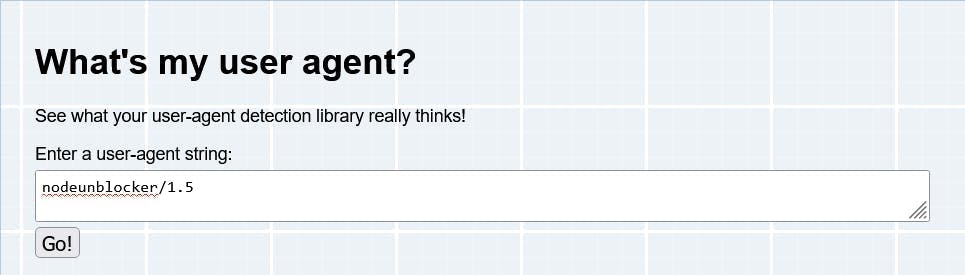

}).on('upgrade', unblocker.onUpgrade)It’s time to check if our code works. We have to change the node-unblocker URL to make sure the headers are properly updated.

Restart the application and open this URL in your browser:

http://localhost:8080/proxy/https://www.whatsmyua.info/

If the site displays nodeunblocker/1.5, our middleware works.
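The middleware above always sends the same user agent. If a different value per request is wanted, one possible variation is a round-robin rotation, sketched below. The rotatingUserAgent name and the sample agent strings are hypothetical; the only assumption about Node Unblocker is the one already used above, that request middleware receives a data object with a headers field.

```javascript
// Returns a request middleware that cycles through the given user agents,
// using the next one in the list for each incoming request (round-robin).
function rotatingUserAgent(userAgents) {
  if (!Array.isArray(userAgents) || userAgents.length === 0) {
    throw new Error('userAgents must be a non-empty array')
  }

  let next = 0

  return function setRotatingUserAgent(data) {
    data.headers['user-agent'] = userAgents[next]
    // Wrap around to the first entry after the last one
    next = (next + 1) % userAgents.length
  }
}

// Standalone demo with fake data objects instead of real proxied requests
const rotate = rotatingUserAgent(['agent-a/1.0', 'agent-b/1.0'])
for (let i = 0; i < 3; i++) {
  const data = { headers: {} }
  rotate(data)
  console.log(data.headers['user-agent'])
}
// prints agent-a/1.0, agent-b/1.0, agent-a/1.0 (one per line)
```

It plugs into the constructor the same way as the fixed version, by placing the result of rotatingUserAgent([...]) in the requestMiddleware array.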

Deploy to Heroku

With our proxy running, it’s time to deploy it to Heroku, a platform as a service (PaaS) that allows us to create, launch, and manage apps fully in the cloud.

Keep in mind that not all providers allow proxies and web scraping apps on their infrastructure. Heroku accepts these kinds of apps as long as the rules from robots.txt are not ignored.

With the legal part discussed, let’s prepare our project for deployment.

Script and Engine

We have to set the start script and the engines in the package.json file.

The `engines` property tells Heroku we require the latest version of Node.js 16 in our environment. The start script is executed when the environment is set up and our application is ready to run.

Our package.json should look like this:

{
  "name": "unblocked",
  "version": "1.0.0",
  "main": "index.js",
  "engines": {
    "node": "16.x"
  },
  "scripts": {
    "start": "node index.js"
  },
  "dependencies": {
    "express": "^4.18.1",
    "unblocker": "^2.3.0"
  }
}

Heroku makes deploying a Node.js application a breeze. Before moving to the next section, make sure you have installed the Heroku CLI and git.

Login and Setup

Use this command to authenticate with Heroku from your local terminal:

heroku login

Create a new Heroku application by running this command:

heroku apps:create

This command returns the app’s ID and a git repository URL. Let’s initialize a local repository and use the ID to add the heroku remote to it:

git init

heroku git:remote -a [YOUR_APP_ID]

Because versioning the node_modules folder is never a good idea, let’s create a .gitignore file and add the folder to it.

Deploy

The last step before our code reaches production is to commit and deploy it. Let’s add all the files, create a commit, and push the master branch to the heroku remote. If your local branch is named main, push main instead.

git add .

git commit -am "Initial commit"

git push heroku master

After a few seconds, the application will be deployed to Heroku. Congratulations! It’s time to access it in our browser and make sure it works.

Use the following URL structure to build the Heroku URL:

[HEROKU_DYNO_URL]/proxy/https://webscrapingapi.com

If you forgot or lost the Dyno URL, you can use this command to get the available information about the current app:

heroku info

Limitations

The ease of setup for this custom proxy comes with a caveat: it works well only for simple sites and it fails for advanced tasks. Some of these limitations are impossible to overcome and will require the use of a different library or third-party services.

A managed service like WebScrapingAPI implements fixes for all these limitations and adds a few extra features like automatic captcha solving, residential proxies, and advanced evasions that prevent services like Akamai, Cloudflare, and Datadome from detecting your requests.

Here’s a list of limitations you should be aware of before you consider using Node Unblocker in your production project.

OAuth Issues

OAuth is the authentication standard preferred by modern sites like Facebook, Google, YouTube, Instagram, and Twitter. Any library that uses postMessage will fail to work with Node Unblocker and, as you may have guessed, the OAuth login flows on these sites typically rely on postMessage.

If you want to give up 57% of the Internet traffic just to use this library, you can go ahead and add it to your project.

Complex Sites

Sites like YouTube, HBO Max, Roblox, Discord, and Instagram don’t work, and there is no timeframe for a release that will make them work.

The community is invited to contribute patches that fix these problems, but until someone creates a pull request, you won’t be able to scrape any data from these sites.

Cloudflare

Cloudflare offers a free detection service that is activated by default on all accounts. Our custom proxy server will be detected in seconds and a captcha prompt will be displayed on the screen.

Around 80% of the sites use the Cloudflare CDN. Having your requests blocked by captcha can be the end of your scraper.
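The proxy cannot solve these challenges, but it can at least notice them. The following is a heuristic sketch, not an official detection API. It assumes that Cloudflare marks challenge pages with the response header cf-mitigated: challenge, and that Node Unblocker's response middleware receives the remote response headers in data.headers and the remote URL in data.url. The function names isCloudflareChallenge and reportChallenges are hypothetical.

```javascript
// Heuristic check based on an assumed header: "cf-mitigated: challenge"
function isCloudflareChallenge(headers) {
  return String(headers['cf-mitigated'] || '').toLowerCase() === 'challenge'
}

// Returns a response middleware that reports challenged URLs through a callback.
// Assumption: data.headers holds the response headers and data.url the remote URL.
function reportChallenges(onChallenge) {
  return function detectChallenge(data) {
    if (isCloudflareChallenge(data.headers)) {
      onChallenge(data.url)
    }
  }
}

// Standalone demo with fake response data instead of a live proxy
const challenged = []
const detect = reportChallenges((url) => challenged.push(url))
detect({ url: 'https://example.com/', headers: { 'cf-mitigated': 'challenge' } })
detect({ url: 'https://example.org/', headers: { 'content-type': 'text/html' } })
console.log(challenged)
// → [ 'https://example.com/' ]
```

Logging challenged URLs this way only tells you how often the scraper is being blocked; it does not get the request past the captcha.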

Maintenance

Even though setting up a custom proxy is easy, the maintenance work will create an extremely large overhead and will take your focus away from your business goals.

You will have to deal with running the proxy instances, setting up an auto-scaling infrastructure, dealing with concurrency, and managing the clusters. The list is endless.

Conclusion

You now have a web proxy running on Heroku and a good understanding of how to set it up, how to deploy it, and its limitations. If you’re planning to use it for a hobby project, Node Unblocker is a good choice.

But these drawbacks and the poor community support prevent you from using it in any production-ready application.

A managed service like WebScrapingAPI, which offers access to a large pool of datacenter, mobile, and residential proxies as well as the ability to play with geolocation, change headers and create cookies with a single parameter, has none of these limitations.
