How to Web Scrape Google Maps Place Results

Andrei Ogiolan on Apr 20 2023

Introduction

Google Maps is one of the most widely used mapping and navigation services in the world, providing users with an easy way to find and explore places, businesses, and points of interest. One of the key features of Google Maps is the ability to search for places and view detailed information about them, such as their location, reviews, photos, and more.

Scraping this data from Google Maps can be useful for a variety of purposes. For example, businesses can use this data to track and analyze the performance of their locations, researchers can use it to study patterns in consumer behavior, and individuals can use it to find and explore new places.

The purpose of this article is to provide a step-by-step guide on how to scrape Google Maps place results with our API using Node.js. We will cover everything from setting up the development environment to extracting relevant data and describe potential issues. By the end of this article, you will have the knowledge and tools you need to scrape Google Maps place results on your own.

Why should you use a professional scraper instead of building your own?

Using a professional scraper can be a better option than creating your own for several reasons. Firstly, professional scrapers are built to handle a wide variety of scraping tasks and are optimized for performance, reliability, and scalability. They are designed to handle large amounts of data and can handle various types of websites and web technologies. This means that professional scrapers can often extract data faster and more accurately than a custom-built scraper.

Additionally, professional scrapers often come with built-in features such as CAPTCHA solving, IP rotation, and error handling, which can make the scraping process more efficient and less prone to errors. They also offer support and documentation which can be helpful when you face any issues.

Another important aspect is that professional scraper providers typically follow the scraping policies of the websites they target and can help you use the data lawfully, which is important to keep in mind when scraping.

Finally, in our particular case, scraping Google Maps place results requires passing a data parameter in the Google Maps URL.

The data parameter usually looks something like this: !4m5!3m4!1s + data_id + !8m2!3d + latitude + !4d + longitude. This may look intimidating at first, because you may have no idea how to get the data_id for a specific place. Google hides this information, and it is not visible on the page when you search for a place in Google Maps. Fortunately, a professional scraper like ours takes care of that by finding this data for you. The later sections cover how to get the data_id and the coordinates, and how to build the data parameter.

Defining our target

What are Google Maps Place results?

Google Maps place results are the results that are displayed when a user searches for a place on Google Maps. These results can include places such as businesses, restaurants, hotels, landmarks, and other points of interest. Each place result includes information such as the place's name, address, phone number, website, reviews, and photos. Place results also include a Google Maps Street View image of the location, and a map showing the location of the place. The place results can also include a link to the place's Google My Business page.

When a user searches for a place on Google Maps, they are presented with a list of place results that match their search query. These results are displayed on the map and in a list format, and they can be filtered by various criteria such as rating, price, and distance.

Scraping these data can be useful for businesses who want to track and analyze the performance of their locations, researchers who want to study patterns in consumer behavior, and individuals who want to find and explore new places.

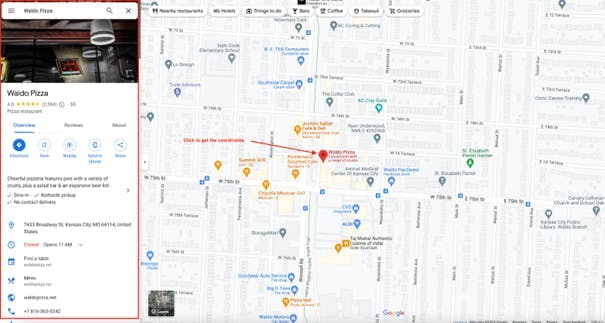

What does our target look like?

Setting up

Before we can start scraping Google Maps place results, we need to make sure the necessary tools are available. The first is Node.js, a JavaScript runtime that lets us run JavaScript outside the browser, which you can install from the official Node.js website. The second is an API key, which you can get by creating an account at https://app.webscrapingapi.com/register and activating the SERP service.

Once you have these things set up, in order to run a Node.js script, you just need to create a js file. You can achieve that by running the following command:

$ touch scraper.js

And now paste the following line in your file:

console.log("Hello World!")

And then run the following command:

$ node scraper.js

If you can now see the message “Hello World!” printed in the terminal, you have successfully installed Node.js and are ready to proceed to the last step.

Now the last step is to get the data_id information about the place you are interested in. This is the time when our API comes into play. Using it is very straightforward and does not require any external libraries to be installed.

First, in a js file, import the Node.js built-in `https` module so you can send requests to our API. This can be done as follows:

const https = require("https");

Second, specify your API key, a search term, and the coordinates of the place you are interested in:

const API_KEY = "<YOUR-API-KEY-HERE>" // You can get one by creating an account - https://app.webscrapingapi.com/register

const query = "Waldo%20Pizza"

const coords = "@38.99313451901278,-94.59368586441806"
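The search term above is percent-encoded by hand. As a minimal sketch, the encoding can be left to `encodeURIComponent`, so any plain-text search term works. `buildSearchPath` is a hypothetical helper name, not part of our API; the query parameters are the ones used in this article, and the coordinates are passed through unchanged, as in the article's own request.

```javascript
// Sketch only: buildSearchPath is a hypothetical helper, not an API function.
// It builds the request path for a type=search call from plain-text inputs.
function buildSearchPath(apiKey, searchTerm, coords) {
  // encodeURIComponent turns "Waldo Pizza" into "Waldo%20Pizza"
  const q = encodeURIComponent(searchTerm);
  // coords is left as-is, matching the "@lat,lng" form used in the article
  return `/v1?engine=google_maps&api_key=${apiKey}&type=search&q=${q}&ll=${coords}`;
}

console.log(
  buildSearchPath("<YOUR-API-KEY-HERE>", "Waldo Pizza", "@38.99313451901278,-94.59368586441806")
);
```

The returned string can be used as the `path` value of the options object.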

Now, what you need to do is to pass this information in an options object so our API gets to understand which place you need to scrape information from:

const options = {

"method": "GET",

"hostname": "serpapi.webscrapingapi.com",

"port": null,

"path": `/v1?engine=google_maps&api_key=${API_KEY}&type=search&q=${query}&ll=${coords}`,

"headers": {}

};

Next, set up a call to our API with all this information:

const req = https.request(options, function (res) {

const chunks = [];

res.on("data", function (chunk) {

chunks.push(chunk);

});

res.on("end", function () {

const body = Buffer.concat(chunks);

const response = JSON.parse(body.toString());

const data_id = response.place_results?.data_id; // optional chaining avoids a TypeError when place_results is missing

if (data_id) {

console.log(data_id);

}

else {

console.log('We could not find a data_id property for your query. Please try using another query')

}

});

});

req.end();

Finally, run the script you have built and wait for your results:

$ node scraper.js

And you should get back the data_id property printed on the screen:

0x87c0ef253b04093f:0xafdfd6dc1d3a2b4e
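The request handler above assumes that the response is always valid JSON and always contains a place_results object. A more defensive version could be sketched as follows. `parseJsonBody` and `extractDataId` are hypothetical helper names, and treating any status code other than 200 as a failure is an assumption about the API, not documented behavior.

```javascript
// Sketch only: both helpers are hypothetical, not part of the API.

// Parse the raw body, failing with a readable message instead of a raw exception.
function parseJsonBody(statusCode, bodyText) {
  // Assumption: a successful call answers with HTTP 200
  if (statusCode !== 200) {
    throw new Error(`Request failed with status ${statusCode}`);
  }
  try {
    return JSON.parse(bodyText);
  } catch (err) {
    throw new Error(`Response was not valid JSON: ${err.message}`);
  }
}

// Read place_results.data_id (the field used in the article), or null if absent.
function extractDataId(response) {
  const placeResults = response && response.place_results;
  return (placeResults && placeResults.data_id) || null;
}

// Local sample so the sketch runs without a network call
const sampleBody = '{"place_results":{"data_id":"0x87c0ef253b04093f:0xafdfd6dc1d3a2b4e"}}';
console.log(extractDataId(parseJsonBody(200, sampleBody)));
```

Inside the `end` handler, the equivalent call would be `extractDataId(parseJsonBody(res.statusCode, body.toString()))`, wrapped in a try/catch.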

And that’s it. At this point you have everything you need to create the data parameter, and you are ready to move on to the next section, which covers the main goal of this article.

Let’s start scraping Google Place Results

Now that your environment is set up, you are ready to start scraping Google Maps place results with Node.js. As mentioned above, scraping place results requires the data parameter. With the data_id and the coordinates at hand, you can build it as follows:

const data_id = "0x87c0ef253b04093f:0xafdfd6dc1d3a2b4e" // the data_id we retrieved earlier

const latitude = '38.99313451901278'

const longitude = '-94.59368586441806'

const data = '!4m5!3m4!1s' + data_id + '!8m2!3d' + latitude + '!4d' + longitude
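The same concatenation can be wrapped in a small function that checks its inputs before building the string. This is a minimal sketch; `buildDataParam` and `isCoordinate` are hypothetical helper names, and the format is the one shown in this article.

```javascript
// Sketch only: hypothetical helpers, not part of the API.

// True when value is a number (or numeric string) within [-limit, limit].
function isCoordinate(value, limit) {
  if (value == null || String(value).trim() === "") return false;
  const n = Number(value);
  return Number.isFinite(n) && Math.abs(n) <= limit;
}

// Build the data parameter in the article's format:
// !4m5!3m4!1s + data_id + !8m2!3d + latitude + !4d + longitude
function buildDataParam(dataId, latitude, longitude) {
  if (!dataId) {
    throw new Error("data_id is required");
  }
  if (!isCoordinate(latitude, 90) || !isCoordinate(longitude, 180)) {
    throw new Error("latitude or longitude is missing or out of range");
  }
  // The original values are interpolated unchanged to keep their precision
  return `!4m5!3m4!1s${dataId}!8m2!3d${latitude}!4d${longitude}`;
}

console.log(
  buildDataParam("0x87c0ef253b04093f:0xafdfd6dc1d3a2b4e", "38.99313451901278", "-94.59368586441806")
);
```

Validating early means a typo in the coordinates produces a clear error locally instead of an unexpected API response.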

Now, you need to modify your options object to tell our API that you are looking for place results. Having this new data parameter, our API will know exactly what place you need to scrape information about:

const options = {

"method": "GET",

"hostname": "serpapi.webscrapingapi.com",

"port": null,

"path": `/v1?engine=google_maps&api_key=${API_KEY}&type=place&data=${data}`, // this time the type is place and there is no query needed

"headers": {}

};

The resulting script should look like this:

const https = require("https");

const API_KEY = "<YOUR-API-KEY-HERE>" // You can get one by creating an account - https://app.webscrapingapi.com/register

const data_id = "0x87c0ef253b04093f:0xafdfd6dc1d3a2b4e" // the data_id we retrieved earlier

const latitude = '38.99313451901278'

const longitude = '-94.59368586441806'

const data = '!4m5!3m4!1s' + data_id + '!8m2!3d' + latitude + '!4d' + longitude

const options = {

"method": "GET",

"hostname": "serpapi.webscrapingapi.com",

"port": null,

"path": `/v1?engine=google_maps&api_key=${API_KEY}&type=place&data=${data}`, // this time the type is place and there is no query needed

"headers": {}

};

const req = https.request(options, function (res) {

const chunks = [];

res.on("data", function (chunk) {

chunks.push(chunk);

});

res.on("end", function () {

const body = Buffer.concat(chunks);

const response = JSON.parse(body.toString());

console.log(response)

});

});

req.end();

After running this script, you should get back a response that looks like this:

{

place_results: {

title: 'Waldo Pizza',

data_id: '0x89c259a61c75684f:0x79d31adb123348d2',

place_id: 'ChIJT2h1HKZZwokR0kgzEtsa03k',

data_cid: '8778389626880739538',

website: 'https://www.stumptowntogo.com/',

gps_coordinates: { latitude: 38.99313451901278, longitude: -94.59368586 },

reviews_link: 'https://serpapi.webscrapingapi.com/v1?engine=google_maps_reviews&data_id=0x89c259a61c75684f:0x79d31adb123348d2',

place_id_search: 'https://serpapi.webscrapingapi.com/v1?engine=google_maps&type=place&device=desktop&data=!4m5!3m4!1s0x89c259a61c75684f:0x79d31adb123348d2!8m2!3d38.99313451901278!4d-94.59368586',

thumbnail: 'https://lh5.googleusercontent.com/p/AF1QipNtnPBJ2Oi_C2YNamHTXyqU9I8mRBarCIvM5g5v=w408-h272-k-no',

rating: 4.6,

reviews: 2594,

price: '$$',

type: [ 'Pizza restaurant' ],

service_options: { dine_in: true, curbsidepickup: true, no_contactdelivery: true },

extensions: [

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object], [Object]

],

open_state: 'Closed',

hours: [

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object]

],

contact_details: {

address: [Object],

action_1: [Object],

menu: [Object],

phone: [Object],

plus_code: [Object]

},

address: '7433 Broadway St, Kansas City, MO 64114',

images: [

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object], [Object],

[Object], [Object]

],

people_also_search_for: [ [Object], [Object], [Object] ],

user_reviews: { summaries: [Array], most_relevant: [Array] },

popular_times: { graph_results: [Object] }

}

}

And that’s it. You have successfully scraped Google Maps place results using our API, and you can now use the data for many purposes, such as data analysis, business analysis, machine learning, and more. For further reference and code samples in six other programming languages, feel free to explore our Google Maps docs.
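Several values in the output above appear as [Object] or [Array] because `console.log` in Node.js only prints nested objects to a limited depth by default. The sketch below shows two ways to work with the full response: printing every level with `util.inspect`, and pulling a few fields into a flat object. `summarizePlace` is a hypothetical helper name, the field names are taken from the sample output above, and the sample object is a trimmed-down stand-in rather than a real API response.

```javascript
const util = require("util");

// Sketch only: summarizePlace is a hypothetical helper, not part of the API.
// It copies a few top-level fields and uses null for anything missing.
function summarizePlace(response) {
  const place = (response && response.place_results) || {};
  const coords = place.gps_coordinates || {};
  return {
    title: place.title ?? null,
    address: place.address ?? null,
    rating: place.rating ?? null,
    reviews: place.reviews ?? null,
    latitude: coords.latitude ?? null,
    longitude: coords.longitude ?? null,
  };
}

// Trimmed-down stand-in shaped like the response shown above
const sampleResponse = {
  place_results: {
    title: "Waldo Pizza",
    address: "7433 Broadway St, Kansas City, MO 64114",
    rating: 4.6,
    reviews: 2594,
    gps_coordinates: { latitude: 38.99313451901278, longitude: -94.59368586 },
    hours: [{ note: "placeholder entry, not real API data" }],
  },
};

console.log(summarizePlace(sampleResponse));

// depth: null makes util.inspect print every nested level instead of [Object]
console.log(util.inspect(sampleResponse, { depth: null }));
```

`JSON.stringify(response, null, 2)` is another option when the goal is to save the full response to a file.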

Limitations of Google Maps Place Results

Google Maps place results, although a powerful way to find and explore places, have some limitations that you should keep in mind. First, the amount of data available for each place is limited, and some data, such as photos and the Place ID, is only available through the Google Maps API, which requires an API key. Additionally, the data in Google Maps place results is dynamic and changes over time, so the data you scrape may become inaccurate or out of date.

Conclusion

In conclusion, scraping Google Maps place results can be a useful tool for businesses, researchers, and individuals to find and explore new places. By following the steps outlined in this article, you should now have the knowledge and tools you need to scrape Google Maps place results with our API and Node.js.
