How to Execute JavaScript With Scrapy

Mihai Maxim on Jan 30 2023

Introduction

Welcome to the exciting world of dynamic website scraping! As you may know from our previous articles, these types of websites can be a bit tricky to navigate with traditional web scraping tools. But fear not! Scrapy, the trusty web scraping assistant, has got your back with a variety of plugins and libraries that make dynamic website scraping a breeze.

In this article, we'll dive into some of the most popular options for scraping those JavaScript-powered websites with Scrapy. And to make things even easier, we'll provide examples of how to use each one so you can confidently tackle any website that comes your way.

If you’re new to Scrapy, don’t worry. You can refer to our guide for an introduction to web scraping with Scrapy.

Headless browsers?

If you're unfamiliar with headless browsers, let me fill you in. Essentially, these are web browsers that operate without a visible interface. Yeah, I know it sounds weird to not be able to see the browser window when you're using it. But trust me, headless browsers can be a real game-changer when it comes to web scraping.

Here's why: unlike plain HTTP clients, which only download the raw HTML a server sends, headless browsers execute JavaScript just as regular browsers do. This means that if you're trying to scrape a website that relies on JavaScript to generate its content, a headless browser can help you out by executing the JavaScript and allowing you to scrape the resulting HTML.
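To make the problem concrete, here is a minimal sketch that uses only Python's standard library. The page markup and the `DivText` helper are made up for illustration. A parser that never runs the script sees an empty element, even though a browser would show the price.

```python
from html.parser import HTMLParser

# Hypothetical page: the visible text is written by JavaScript at load time.
PAGE = """<html><body>
<div id="app"></div>
<script>document.getElementById("app").textContent = "Price: 42";</script>
</body></html>"""


class DivText(HTMLParser):
    """Collect the text found inside <div id=target_id> without running scripts."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.inside = False
        self.text = []

    def handle_starttag(self, tag, attrs):
        if tag == "div" and dict(attrs).get("id") == self.target_id:
            self.inside = True

    def handle_endtag(self, tag):
        if tag == "div":
            self.inside = False

    def handle_data(self, data):
        if self.inside:
            self.text.append(data)


def static_text(html, target_id):
    """Return the text a non-JavaScript parser sees inside the target div."""
    parser = DivText(target_id)
    parser.feed(html)
    return "".join(parser.text).strip()


print(repr(static_text(PAGE, "app")))  # prints ''
```

The string "Price: 42" only exists inside the script, so it reaches the element only once something executes that script. That is the job a headless browser takes over.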

Exploring different solutions

The best strategy for rendering JavaScript with Scrapy depends on your specific needs and resources. If you're on a tight budget, you'll want to choose a solution that is cost-effective. Using a headless browser or a JavaScript rendering library might be the least expensive option, but you’ll still have to deal with the possibility of IP blocks and the cost of maintaining and running the solution.

It is always best to try out a few different options and see which one works best for your use case.

How to execute JavaScript with Scrapy using Splash

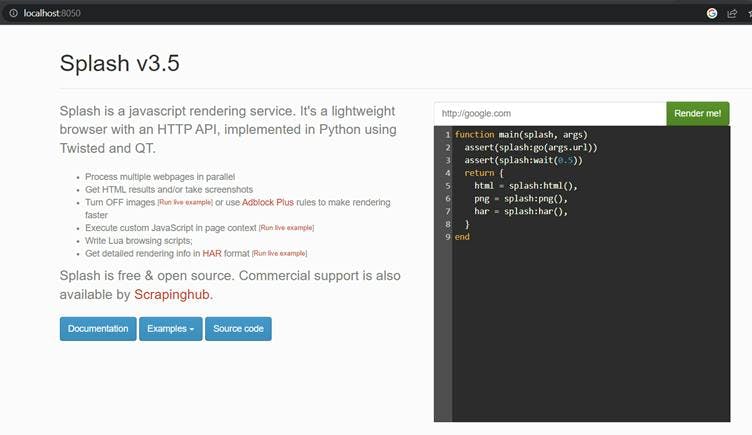

Splash is a lightweight, headless browser designed specifically for web scraping. It is based on the WebKit engine, which is the same engine that powers the Safari browser. The great thing about Splash is that it's easy to configure, especially if you use Docker. It is also integrated with Scrapy through the scrapy-splash middleware.

In order to use the middleware, you’ll first need to install this package with pip:

$ pip install scrapy-splash

Setting up Splash with Docker is easy. All you need to do is run an instance of Splash on your local machine using Docker (https://docs.docker.com/get-docker/).

$ docker run -p 8050:8050 scrapinghub/splash

After that, you should be able to access the local Splash instance at http://localhost:8050/

Splash has a REST API that makes it easy to use with Scrapy or any other web scraping tool. You can test the server by making a fetch request inside the Scrapy shell:

fetch('http://localhost:8050/render.html?url=<target_url>')

To configure the middleware, add the following lines to your settings.py file.

SPLASH_URL = 'http://localhost:8050'

DOWNLOADER_MIDDLEWARES = {
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}

SPIDER_MIDDLEWARES = {
    'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
}

DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

Visit https://github.com/scrapy-plugins/scrapy-splash to learn more about each setting.

The easiest way to render requests with Splash is to use scrapy_splash.SplashRequest inside your spider:

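import scrapy
from scrapy_splash import SplashRequest


class RandomSpider(scrapy.Spider):
    name = 'random_spider'

    def start_requests(self):
        start_urls = [
            '<first_url>',
            '<second_url>',
        ]
        for url in start_urls:
            # 'wait' is the number of seconds Splash waits before returning the page
            yield SplashRequest(url=url, callback=self.parse, args={'wait': 5})

    def parse(self, response):
        # Scrapy expects items (such as dicts), not bare lists,
        # so yield one dict per heading found on the rendered page
        for heading in response.css("h3::text").getall():
            yield {'heading': heading}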

The ‘wait’ argument specifies how long, in seconds, Splash should wait after the page loads before returning the rendered response.
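Before hard-coding a wait value in a spider, it can help to try it against the Splash HTTP API directly. The sketch below builds a render.html URL with `url` and `wait` query parameters. The helper name `splash_render_url` is hypothetical, and the default address assumes the local Docker instance started earlier.

```python
from urllib.parse import urlencode


def splash_render_url(target_url, wait=0, splash_url="http://localhost:8050"):
    """Build a render.html URL for a Splash instance (assumed to run locally)."""
    query = urlencode({"url": target_url, "wait": wait})
    return f"{splash_url}/render.html?{query}"


# The target URL must be percent-encoded, which urlencode takes care of.
print(splash_render_url("https://example.com/", wait=5))
```

The resulting URL can be passed to fetch() in the Scrapy shell to check whether the chosen wait is long enough for the content you need to appear.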

One potential drawback of using Splash is that it requires the use of the Lua scripting language to perform actions such as clicking on buttons, filling out forms, and navigating to pages.
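As a rough illustration of what such a script involves, the sketch below keeps a small Lua script in a Python string and builds the arguments that would accompany it. The selector `button#load-more` and the helper `click_args` are assumptions made up for this example, not part of Splash or scrapy-splash.

```python
# Lua script for Splash's `execute` endpoint: load the page, click one
# element if it exists, then return the rendered HTML.
LUA_CLICK = """
function main(splash, args)
  assert(splash:go(args.url))
  assert(splash:wait(args.wait))
  local button = splash:select(args.selector)
  if button then
    button:mouse_click()
    assert(splash:wait(args.wait))
  end
  return {html = splash:html()}
end
"""


def click_args(selector, wait=1.0):
    """Build the args dict sent to Splash alongside the Lua script."""
    return {"lua_source": LUA_CLICK, "selector": selector, "wait": wait}


# Inside a spider's start_requests (requires scrapy-splash), this would be used as:
#     yield SplashRequest(url, self.parse, endpoint='execute',
#                         args=click_args('button#load-more'))
print(sorted(click_args("button#load-more")))
```

Every key in the args dict is visible to the script as a field of `args`, which is how the selector and wait time reach the Lua code.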

How to execute JavaScript with Scrapy using Selenium

You can use Scrapy with the Selenium webdriver. The scrapy-selenium middleware works by injecting the Selenium webdriver into the request process, so that the resulting HTML is returned to the spider for parsing.

Before you implement this solution, it's important to note that you will need to install a web driver in order to interact with a browser. For example, you will need to install geckodriver in order to use Firefox with Selenium. Once you have a web driver installed, you can then configure Selenium on your Scrapy project settings:

from shutil import which

SELENIUM_DRIVER_NAME = 'firefox'
SELENIUM_DRIVER_EXECUTABLE_PATH = which('geckodriver')
SELENIUM_DRIVER_ARGUMENTS = ['-headless']  # '--headless' if using chrome instead of firefox

DOWNLOADER_MIDDLEWARES = {
    'scrapy_selenium.SeleniumMiddleware': 800,
}

ITEM_PIPELINES = {
    'myproject.pipelines.SanitizePipeline': 1,
}

Then you can configure your spider:

import scrapy
from scrapy_selenium import SeleniumRequest


class RandomSpider(scrapy.Spider):
    name = 'random_spider'

    def start_requests(self):
        start_urls = [
            '<first_url>',
            '<second_url>',
        ]
        for url in start_urls:
            yield SeleniumRequest(url=url, callback=self.parse)

    def parse(self, response):
        # The request is handled by Selenium; its meta gains a 'driver' key
        # holding the Selenium driver that processed the request.
        print(response.request.meta['driver'].title)

        # response.selector works as usual, but contains the HTML
        # rendered by the Selenium driver.
        # Scrapy expects items (such as dicts), not bare lists.
        for text in response.selector.css("#result::text").getall():
            yield {'result': text}

For more information about the available driver methods and attributes, refer to the selenium python documentation:

http://selenium-python.readthedocs.io/api.html#module-selenium.webdriver.remote.webdriver

Selenium requires a web browser to be installed on the machine where it is running, as it is not a standalone headless browser. This makes it harder to deploy and run on multiple machines or in a cloud environment.

How to execute JavaScript with Scrapy using WebScrapingAPI

WebScrapingAPI (WSA) provides an API that will handle all the heavy lifting for you. It can execute JavaScript, rotate proxies, and even handle CAPTCHAs, ensuring that you can scrape websites with ease. Plus, you'll never have to worry about getting your IP banned for sending too many requests. In order to configure Scrapy to work with WebScrapingAPI, we’ll configure a proxy middleware that will tunnel all the fetch requests through WSA.

To achieve this, we will configure Scrapy to connect to the WSA proxy server:

# add this to your middlewares.py file
import base64


class WSAProxyMiddleware:
    def process_request(self, request, spider):
        # Set the proxy for the request
        request.meta['proxy'] = "http://proxy.webscrapingapi.com:80"
        request.meta['verify'] = False

        # Set the proxy authentication for the request
        proxy_user_pass = "webscrapingapi.render_js=1:<API_KEY>"
        encoded_user_pass = base64.b64encode(proxy_user_pass.encode()).decode()
        request.headers['Proxy-Authorization'] = f'Basic {encoded_user_pass}'

And enable the middleware:

DOWNLOADER_MIDDLEWARES = {
    'myproject.middlewares.WSAProxyMiddleware': 1,
}

Here, webscrapingapi.render_js=1 is the proxy authentication username and <API_KEY> is the password.

You can get a free API_KEY by creating a new account at https://www.webscrapingapi.com/

By specifying the render_js=1 parameter, you will enable WebScrapingAPI's capability of accessing the targeted web page using a headless browser which allows JavaScript page elements to render before delivering the final scraping result back to you.
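Because the parameter travels inside the proxy username, it can be useful to check exactly what the Proxy-Authorization header carries. The sketch below encodes and decodes a standard Basic credential using only the standard library. The helper names and the DUMMY_KEY value are placeholders for illustration, not part of WebScrapingAPI.

```python
import base64
from typing import Tuple


def proxy_auth_header(username: str, password: str) -> str:
    """Return the value of a Basic Proxy-Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return f"Basic {token}"


def decode_proxy_auth(header: str) -> Tuple[str, str]:
    """Recover (username, password) from a Basic header value."""
    scheme, token = header.split(" ", 1)
    if scheme != "Basic":
        raise ValueError(f"unsupported scheme: {scheme}")
    # Split on the first colon only, since a password may contain colons.
    username, _, password = base64.b64decode(token).decode().partition(":")
    return username, password


header = proxy_auth_header("webscrapingapi.render_js=1", "DUMMY_KEY")
print(decode_proxy_auth(header))
```

Decoding the header this way is a quick check that the username still contains render_js=1 after any string formatting in your middleware.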

You can also tell WSA to perform a specific action while processing your URL by specifying the js_instructions parameter. For example, this sequence could be used to click on a button:

js_instructions=[
    {"action":"click","selector":"button#log-in-button"}
]

And that’s it, WSA will now automatically make all the requests for you.

Wrapping up

Scraping dynamic websites can be a tough task, but with the right tools, it becomes much more manageable. In this article, we looked at three different options for scraping dynamic websites with Scrapy. Headless browsers like Splash and Selenium allow you to execute JavaScript and render web pages just like a regular browser. However, if you want to take the easy route, using an API like WebScrapingAPI can also be a great solution. It handles all the complex tasks for you and allows you to easily extract data from even the most difficult websites. No matter which option you choose, it's important to consider your specific needs and choose the solution that best fits your project. Thank you for reading, and happy scraping!
