Data Scraping Apps: A New Solution to Retrieve Valuable Data from Multiple Websites

WebscrapingAPI on Nov 08 2022

What are data scraping apps?

Data scraping apps collect information from the internet for research, analytics, or education. They are one of several ways to scrape the web; others include installing browser extensions, writing your own code, and using online services. Knowing the available web scraping options can help you decide which one is the best fit for your career or business.

This post looks at what web scraping tools do and how they can be used, and then lists several web scraping programs worth checking out.

Numerous connection attempts from a single IP address may cause the website you're targeting to block you. However, there is some good news: several scraping tools provide safeguards against this. This article will show you the best scraping tools.

Data scraping is the process of obtaining information from a system using an automated tool that impersonates an application user or a web browser. The technique is nothing new. Scraping may even be the only option for programmers who need data from a legacy system whose original interfaces have been lost and cannot be replaced.

Scraping generally refers to a programmatic technique for obtaining data from a website. The scraping program behaves much like a human user, clicking buttons and reading the results. Scraping has numerous legitimate applications. One example is web crawlers, which power search engines. Another is services like Skyscanner, which search dozens of travel websites for the best deals.

Fintech organizations also use it, extracting customers' account transactions from bank websites when no Application Programming Interfaces (APIs) are available to connect to the data. The efficiency and flexibility of screen scraping are worth considering: if the sites are not designed to prevent it, the procedure can capture massive volumes of data from them.

By interacting with web pages and the software and databases behind them, a scraper can accumulate massive datasets at machine speed.

What are data scraping apps used for?

Data scraping apps can help you gather important information from the internet quickly and over long periods.

For instance, if you're collecting data on a popular term, you might use a scraping rule that only collects posts in which social media users include that keyword as a hashtag or in a headline. This helps you quickly filter through the material to find what you want.
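The keyword filter described above can be sketched in a few lines of Python. This is only an illustration: the `mentions_keyword` helper and the sample posts are made up for the example, and a real scraper would apply the same check to posts it has already collected.

```python
import re


def mentions_keyword(post: str, keyword: str) -> bool:
    """Return True if the post uses the keyword as a hashtag (case-insensitive)."""
    # \b stops "#python" from also matching longer tags such as "#pythonic".
    pattern = rf"#{re.escape(keyword)}\b"
    return re.search(pattern, post, flags=re.IGNORECASE) is not None


# Hypothetical sample posts standing in for scraped social media content.
posts = [
    "Loving the new #Python release!",
    "python is a kind of snake",
    "Weekend plans: #hiking and #python meetups",
]

# Keep only the posts that use the keyword as a hashtag.
matching = [post for post in posts if mentions_keyword(post, "python")]
```

Only the first and third posts end up in `matching`; the second mentions the word but not as a hashtag, so it is filtered out.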

You can also configure a data scraping program to keep collecting data while you are away from your computer, which helps you complete lengthy searches.

Applications of data scraping apps

Data scraping may be used for a variety of purposes, including:

- Cost tracking in e-commerce

- Identifying investment possibilities

- Analyzing web data from social media

- Making use of machine learning methods

- Regularly collecting web data

- Investigating new ideas in a domain

- Contact information extraction

- Keeping track of news sources

- Generating sales leads

Selecting data scraping apps

Scraping libraries (Requests, Cheerio, BeautifulSoup, and others), frameworks like Scrapy and Selenium, purpose-built scraper APIs (ScrapingBee API, Smartproxy's SERP API), and ready-made scraper solutions (Octoparse, ParseHub, and others) may all be used to scrape the web. Python is the most common language for data gathering; many web scrapers are written in it.

Different tools address different parts of the scraping process. Scraping frameworks are comprehensive toolkits, while standalone libraries typically need other packages to complete your scraper. Ready-made scrapers, on the other hand, require no coding knowledge.
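To give a feel for the parsing step a library handles, here is a minimal sketch that pulls links out of a page. It uses only Python's standard-library `html.parser` so that it runs without installing anything; the `LinkExtractor` class, the `extract_links` helper, and the sample HTML are made up for this example. In practice you would typically pair an HTTP library with a dedicated parser instead.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# Hypothetical page content; a real scraper would download this first.
SAMPLE_HTML = """
<html><body>
  <a href="/products/1">Product one</a>
  <a href="/products/2">Product two</a>
  <a>No link here</a>
</body></html>
"""


def extract_links(html: str) -> list[str]:
    """Parse the HTML and return the links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


links = extract_links(SAMPLE_HTML)
```

Note that this covers parsing only. Downloading pages, following links, and storing results are the parts a full framework adds on top.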

Top 7 data scraping apps

Given the variety of data scraping apps available on the market, choosing the right one to meet your company's demands may take time and effort. Here is an analysis of the top seven data scraping apps to help you focus your search.

1. Common Crawl

Common Crawl is a registered nonprofit whose data is free to use. It is a no-code platform that offers resources for professionals who want to study data analysis techniques or teach them to others. Instead of extracting live data from the internet, it provides an open repository of crawl data that contains text extracts and page metadata.

The Common Crawl data is stored on Amazon Web Services Public Data Sets and on various academic cloud platforms worldwide. It comprises petabytes of data gathered over 12 years of crawling. Raw web page data, extracted metadata, and text extracts are all included in the collection.

The Amazon-hosted Common Crawl dataset is available for free, and you can run your analysis jobs against it on Amazon's cloud platform.

Textual features approach

The key assumption of this approach is that the wording of URLs pointing to small and large images differs significantly. For example, URLs of small images frequently include terms like symbol, image, small, finger, up, downward, and pixels. URLs of large images, on the other hand, often lack these terms and contain others instead.

In this context, an n-gram is a contiguous sequence of n characters from the image URL. If the assumption holds, a supervised learning algorithm trained on these n-grams should be able to distinguish between the two groups.
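As a rough illustration of the n-gram idea, the sketch below splits a URL into overlapping character n-grams and counts them. The function names and the default of n = 3 are choices made for this example, not details from the approach itself; the resulting counts are the kind of features a classifier could be trained on.

```python
from collections import Counter


def char_ngrams(url: str, n: int) -> list[str]:
    """Return every contiguous run of n characters in the URL, in order."""
    if n <= 0:
        raise ValueError("n must be positive")
    return [url[i:i + n] for i in range(len(url) - n + 1)]


def ngram_counts(url: str, n: int = 3) -> Counter:
    """Count the character n-grams of a lowercased URL."""
    return Counter(char_ngrams(url.lower(), n))


# Hypothetical image URL used only to show the shape of the features.
counts = ngram_counts("https://example.com/img/thumb_small.png")
```

A URL shorter than n characters simply yields no n-grams, so the helper is safe to call on arbitrary input.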

Non-textual features approach

An alternative, non-textual technique relies on information collected from the image's HTML rather than from the text of its URL. The features are selected to capture clues about the image's dimensions.

For example, the first five features correspond to various image file extensions and were chosen because most real-world photographs are in JPG or PNG format, whereas BMP and GIF files are typically icons and cartoons. Furthermore, a real-world photo is more likely to include alt text or a caption than a background image or a banner.
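A minimal sketch of such non-textual features might look like the following. The exact feature set is an assumption made for illustration: the five extension flags in `EXTENSIONS` and the single `has_alt` flag are hypothetical, and the `image_features` helper is not taken from any published implementation.

```python
import os
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical choice of five extension features (assumption for this sketch).
EXTENSIONS = ("jpg", "jpeg", "png", "bmp", "gif")


class _ImgParser(HTMLParser):
    """Remember the attributes of the first <img> tag encountered."""

    def __init__(self):
        super().__init__()
        self.attrs = None

    def handle_starttag(self, tag, attrs):
        if tag == "img" and self.attrs is None:
            self.attrs = dict(attrs)


def image_features(img_html: str) -> dict[str, int]:
    """Turn an <img> element into binary features: file extension and alt text."""
    parser = _ImgParser()
    parser.feed(img_html)
    attrs = parser.attrs or {}
    # Use only the URL path so query strings do not hide the extension.
    path = urlparse(attrs.get("src") or "").path
    ext = os.path.splitext(path)[1].lstrip(".").lower()
    features = {f"ext_{e}": int(ext == e) for e in EXTENSIONS}
    features["has_alt"] = int(bool(attrs.get("alt")))
    return features


photo = image_features('<img src="https://example.com/photos/beach.JPG?size=l" alt="Beach at sunset">')
icon = image_features('<img src="/icons/arrow.gif">')
```

Here `photo` gets the `ext_jpg` and `has_alt` flags set, while `icon` gets only `ext_gif`, which matches the intuition described above.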

A hybrid strategy

The hybrid method seeks to improve efficiency by using both textual and non-textual characteristics.

Pricing: Free

2. Sequentum

Sequentum is a cloud-based web scraping application that collects data through custom-built web apps and their application programming interface (API). It offers both automatic and configurable functions.

With Content Grabber, Sequentum's scraping product, you can browse web pages visually and click on the content you want to extract. It then processes the collected information according to your instructions, which you can change at any time.

Sequentum, a point-and-click web scraping program, offers a dependable and scalable solution for gathering information from complex websites. Sequentum Enterprise is installed on-premises on Microsoft Windows systems, so you can carry out the work without relying on a third-party provider.

Because you keep complete control of the infrastructure, it can meet the most stringent security and privacy standards.

Features

- A user-friendly visual editor that automatically detects and configures the necessary commands.

- Supports simple macro-style automation for creating agents, or gives you complete control over how each input is handled within your agent.

- Excellent versatility in developing agents, with no coding necessary. Almost anything is possible.

- Agent and command templates for easy reuse, including agent templates for major websites and command templates such as a full-featured website crawler.

- Enterprise-level monitoring, logging, error handling, and recovery capabilities.

- Tools for centrally managing schedules, database connections, proxies, notifications, and script libraries.

- Provides complete agents that may be white-labeled and supplied royalty-free.

- Advanced API for integrating with third-party software.

Pricing: $69 - $299/month

3. Frontera

Frontera is an open-source framework developed to aid in building web crawlers. Data creation, crawling strategies, and add-ons for working with other programming languages and libraries are all built-in elements of Frontera. Consider Frontera for large-scale data collection projects.

Features

- The crawl frontier framework manages workers, Scrapy wrappers, and message bus components, and it also monitors the crawler's progress toward its goals.

- Frontera has components that make it possible to use Scrapy to build a fully functional web crawler. Although it was created with Scrapy in mind, you may use it with any other crawling framework or system.

- Canonical URL handling: it determines each document's canonical URL and uses it.
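The crawl frontier and canonical URL ideas in the list above can be illustrated with a toy example. This sketch is not Frontera's API: the `SimpleFrontier` class and its deliberately simplistic canonicalization rule (drop the fragment and any trailing slash) are assumptions made purely to show the concept of deciding what to crawl next without visiting the same page twice.

```python
from collections import deque
from urllib.parse import urldefrag


class SimpleFrontier:
    """Toy crawl frontier: a FIFO queue of URLs plus a set of URLs already seen."""

    def __init__(self, seeds):
        self._queue = deque()
        self._seen = set()
        for url in seeds:
            self.add(url)

    @staticmethod
    def canonicalize(url: str) -> str:
        # Simplistic rule for illustration: drop the #fragment and trailing slash.
        url, _fragment = urldefrag(url)
        return url.rstrip("/")

    def add(self, url: str) -> bool:
        """Schedule a URL unless its canonical form was already seen."""
        canonical = self.canonicalize(url)
        if canonical in self._seen:
            return False
        self._seen.add(canonical)
        self._queue.append(canonical)
        return True

    def next_url(self):
        """Return the next URL to crawl, or None when the frontier is empty."""
        return self._queue.popleft() if self._queue else None


frontier = SimpleFrontier(["https://example.com/"])
duplicate_added = frontier.add("https://example.com#top")  # same page, skipped
new_added = frontier.add("https://example.com/about")
```

A production frontier would also handle prioritization, politeness delays, and persistence, which is where a dedicated framework earns its keep.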

Pricing: $170 - $230/month

4. Mozenda

Mozenda is web scraping software that requires no coding. The company provides customer support by phone and email. You can host the cloud-based application remotely on a server for your company.

Because it has a point-and-click interface, you can select the content you want on a website and then run agents to collect it.

Users can also examine, organize, and run reports on the data gathered from websites. Mozenda automatically recognizes content arranged in lists on user-specified websites and lets users create agents to collect this information.

Features

- Content extraction from websites, PDFs, text documents, and images

- Exporting information as Excel, CSV, XML, JSON, or TSV files

- Automated data preparation for analysis and visualization
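To show what two of the export formats in the list above look like in practice, here is a small sketch that writes the same records as CSV and as JSON using Python's standard library. It does not use Mozenda in any way; the `records` data and both helper functions are made up for the example.

```python
import csv
import io
import json

# Hypothetical scraped records.
records = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget", "price": "24.50"},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows as CSV text with a header taken from the first row's keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize rows as pretty-printed JSON text."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```

CSV suits spreadsheets and flat tables, while JSON keeps nested structure intact, which is why tools commonly offer both.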

Pricing: $99 - $199/month

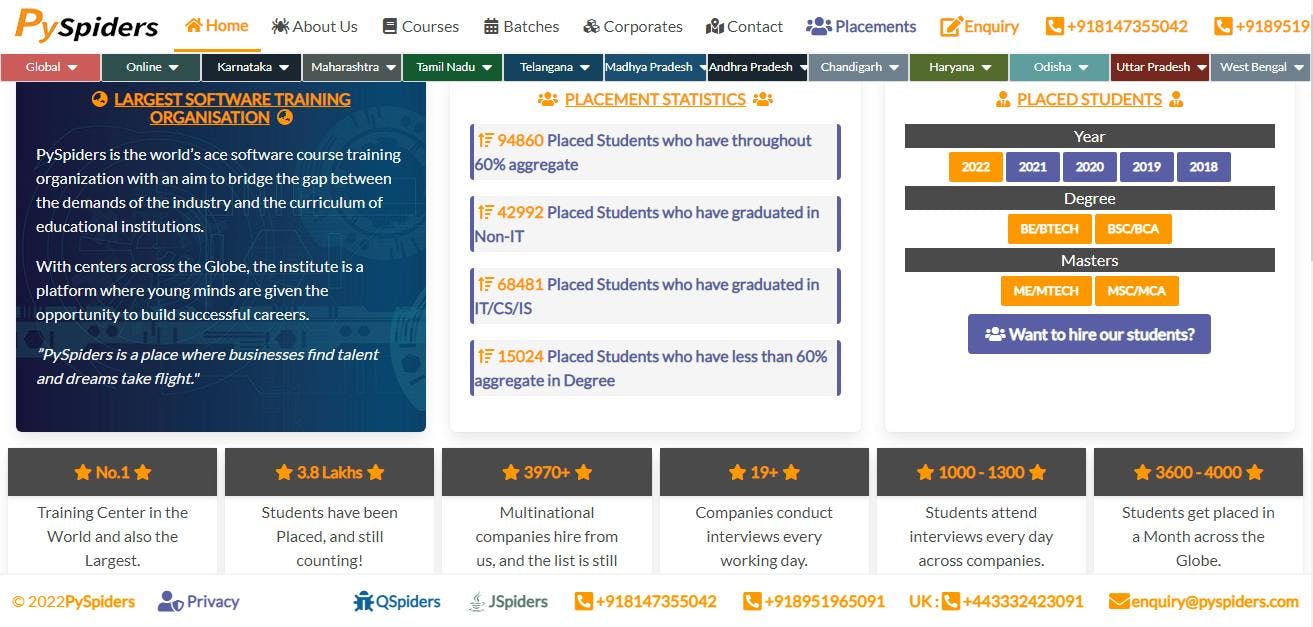

5. Pyspider

Pyspider is a web crawling framework written in Python. It has built-in SQL functionality that you can customize with extra code. Its features include an API for writing scripts, a task monitor, a dashboard for viewing results, and project management.

Pyspider should not be confused with PySpiders, a similarly named training institute with locations worldwide that teaches programming courses and aims to bridge the gap between the needs of industry and academic institutions.

Pyspider describes itself as a powerful spider (web crawler) system in Python. It is developed on GitHub in the binux/pyspider repository, where anyone with a GitHub account can contribute.

Pricing: $39 - $899/month

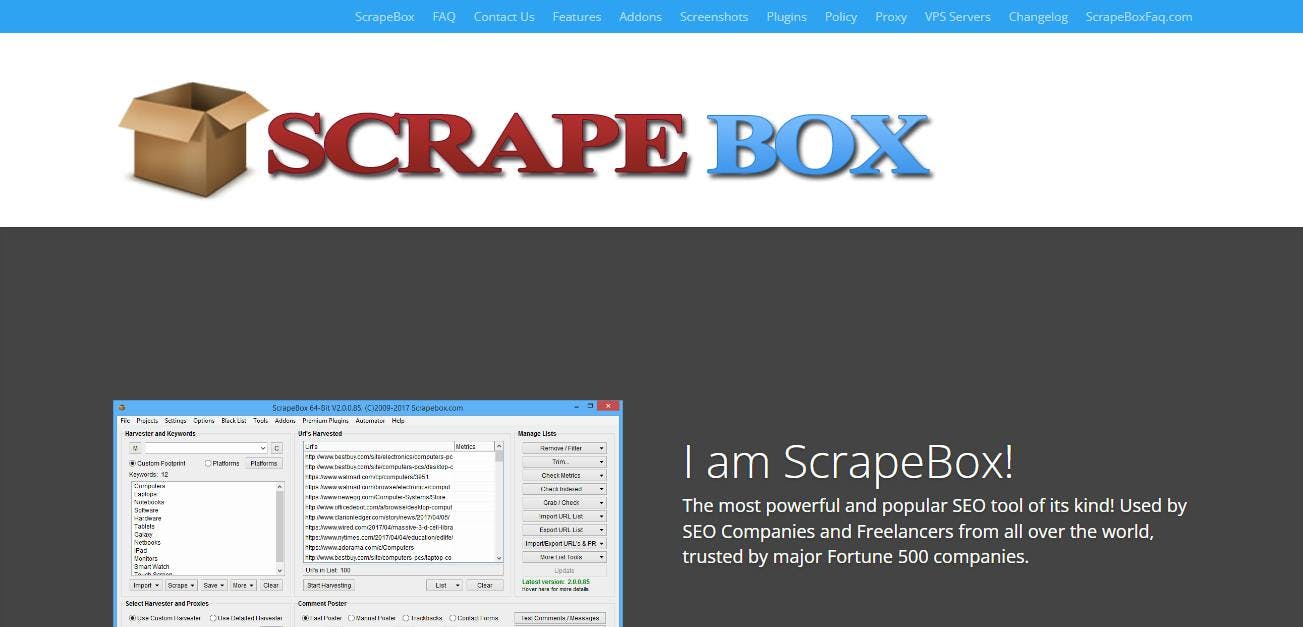

6. ScrapeBox

ScrapeBox is a desktop program that crawls the internet to gather search engine optimization data. It can collect keyword information on your local computer. ScrapeBox offers resources including videos, manuals, and around-the-clock customer support. It has over 30 add-on features and customizable capabilities.

Sitting in your taskbar, ScrapeBox acts as a personal SEO and marketing assistant, ready to automate various activities such as harvesting URLs, researching competitors, building links, gathering further findings, sorting lists, and more.

Anyone may use this free program: no purchase, opt-in, or serial number is needed. It also provides hundreds of video lessons on data scraping.

Features

- Fast Multi-Threaded Operation

Rapid operation with numerous connections active at once.

- Highly Modifiable

A wide range of extension and customization possibilities to meet your demands.

- Excellent Value

Plenty of features at a low cost to enhance your SEO.

- Many Add-Ons

More than 30 free add-ons extend ScrapeBox with many more functions.

- Great Help

There are many help videos, manuals, and tech support professionals on hand around-the-clock.

- Tested

Originally released in 2009 and regularly updated, ScrapeBox is still going strong in 2022.

- Harvesting Search Engines

With the robust and customizable URL harvester, you can gather thousands of URLs from over 30 search engines, including Google, Yahoo, and Bing.

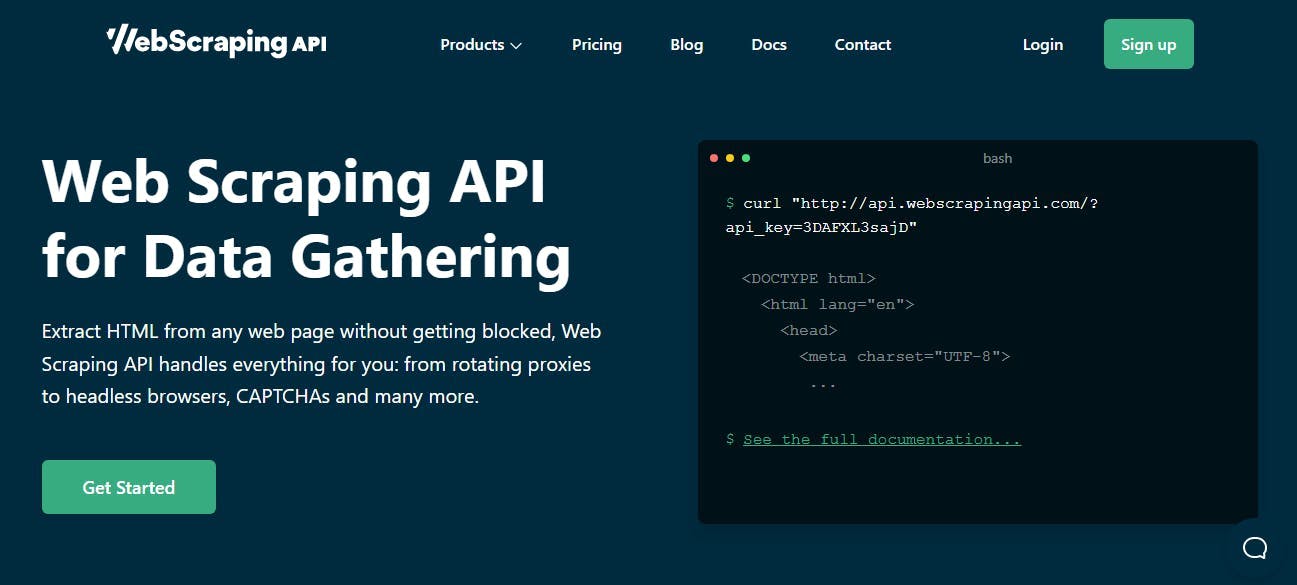

7. WebScrapingAPI

With WebScrapingAPI, you can extract any web content without breaking any rules. It retrieves the HTML of any web page through a straightforward API. It provides structured data that can be used to gather and verify financial, human resources, and real estate data and to keep track of crucial market information.
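As a rough sketch of what calling such an API involves, the snippet below only builds the request URL and does not send anything. The endpoint and the `api_key`, `url`, and `render_js` parameter names are assumptions made for illustration, so check them against the official WebScrapingAPI documentation before relying on them; `build_request_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; verify against the official documentation.
API_ENDPOINT = "https://api.webscrapingapi.com/v1"


def build_request_url(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Build a GET URL asking the API to fetch target_url (parameter names assumed)."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        # Assumed flag for requesting JavaScript rendering.
        params["render_js"] = "1"
    # urlencode percent-encodes the target URL so it survives as a single parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url(
    "YOUR_API_KEY", "https://example.com/page?id=1", render_js=True
)
```

The resulting URL can then be fetched with any HTTP client, and the response body would contain the HTML of the target page.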

Features

- Structured HTML responses

- 100M+ rotating proxies

- The latest anti-bot-detection tools manage VPNs, routers, and CAPTCHAs for you, and the API works with any programming language, so you can run thorough scraping operations on any website you want.

- Unrestricted bandwidth

- Per-request customization of JavaScript rendering

- Using our advanced capabilities, you can adjust ports, IP mappings, persistent sessions, and other options to tailor your requests to your particular requirements.

- Fast, enterprise-grade scraping

Pricing: $49 - $799/month

Final Pick

If you're looking for a top-notch independent data provider for web content scraping, WebScrapingAPI has you covered. The tool's Python module makes it simple to test against web applications.

- JavaScript Rendering

Interact with JavaScript-heavy websites like a pro by enabling scrolling and page navigation to get exact information from your web scraping jobs.

- Complete Web Scraping

The Web Scraper API supports all data scraping tasks and use cases, including market research, competitor monitoring, travel fare information, real estate investment, financial records, and more.

- Formatted Data

Depending on your specific requirements, you can get formatted JSON data, along with custom extraction, in a single API request. A fast flow of data will give your company a competitive edge.

Register for a free 30-day trial to check out the robust WebScrapingAPI package. You can also look at the pricing to help you choose the package that best suits your company's needs.

Conclusion

The main takeaway from this article is that you should choose the web data scraping tool that best suits your needs.

Data scraping can be a little challenging at first, so we have written tutorials to assist you.

Visit our blog if you'd like to discuss data scraping, ask questions, suggest features, or report bugs.

