Bypass Restrictions and Scrape Data Effectively with Proxies and C# HttpClient

Suciu Dan on Apr 13 2023

Web scraping is a powerful tool that allows you to extract valuable data from websites for various purposes. However, many websites block scraping attempts to protect their data. One way to bypass these blocks is by using a proxy server.

In this article, we will explore how to use a proxy with `HttpClient`, the built-in .NET class for making HTTP requests in C#. We will cover the prerequisites, setup, and tips for debugging and troubleshooting.

Additionally, we'll go over how to send a request, authenticate with a proxy, and rotate proxies between requests. By the end of this article, you'll have a good understanding of how to use a proxy with C# HttpClient and how it can benefit your web scraping efforts.

What are proxies?

Proxies, also referred to as proxy servers, act as middlemen between a client (such as a web browser or scraper) and a target server (such as a website). The client sends a request to the proxy, which then forwards it to the target server.

Once the target server responds, the proxy sends the response back to the client. In web scraping, the target website sees the proxy's IP address instead of the scraper's, which makes the scraper harder to detect and block.

Using multiple proxies further reduces the risk of detection and blocking. Some proxy providers even offer the option to rotate IPs, providing additional protection against having your requests blocked.
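In .NET, `HttpClient` does not decide on its own whether to use a proxy: for each request it asks the `IWebProxy` configured on its handler where the request should go. The sketch below shows that decision offline, using the sample proxy address that appears later in this article. `NextHop` is our own helper name, and no traffic is sent. A request to a public site is routed to the proxy, while `BypassProxyOnLocal = true` lets requests to localhost skip it.

```csharp
using System;
using System.Net;

// Sample proxy address; this code only inspects configuration and sends nothing.
var proxy = new WebProxy("http://5.9.139.204:24000")
{
    // Send requests for local addresses (e.g. localhost) directly instead of through the proxy
    BypassProxyOnLocal = true
};

// Returns the next hop for a request: the proxy, or the destination itself when bypassed.
Uri? NextHop(Uri destination) => proxy.GetProxy(destination);

Console.WriteLine(NextHop(new Uri("https://api.ipify.org")));  // the proxy's address
Console.WriteLine(NextHop(new Uri("http://localhost:5000/"))); // localhost itself (bypassed)
```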

Creating a C# Project

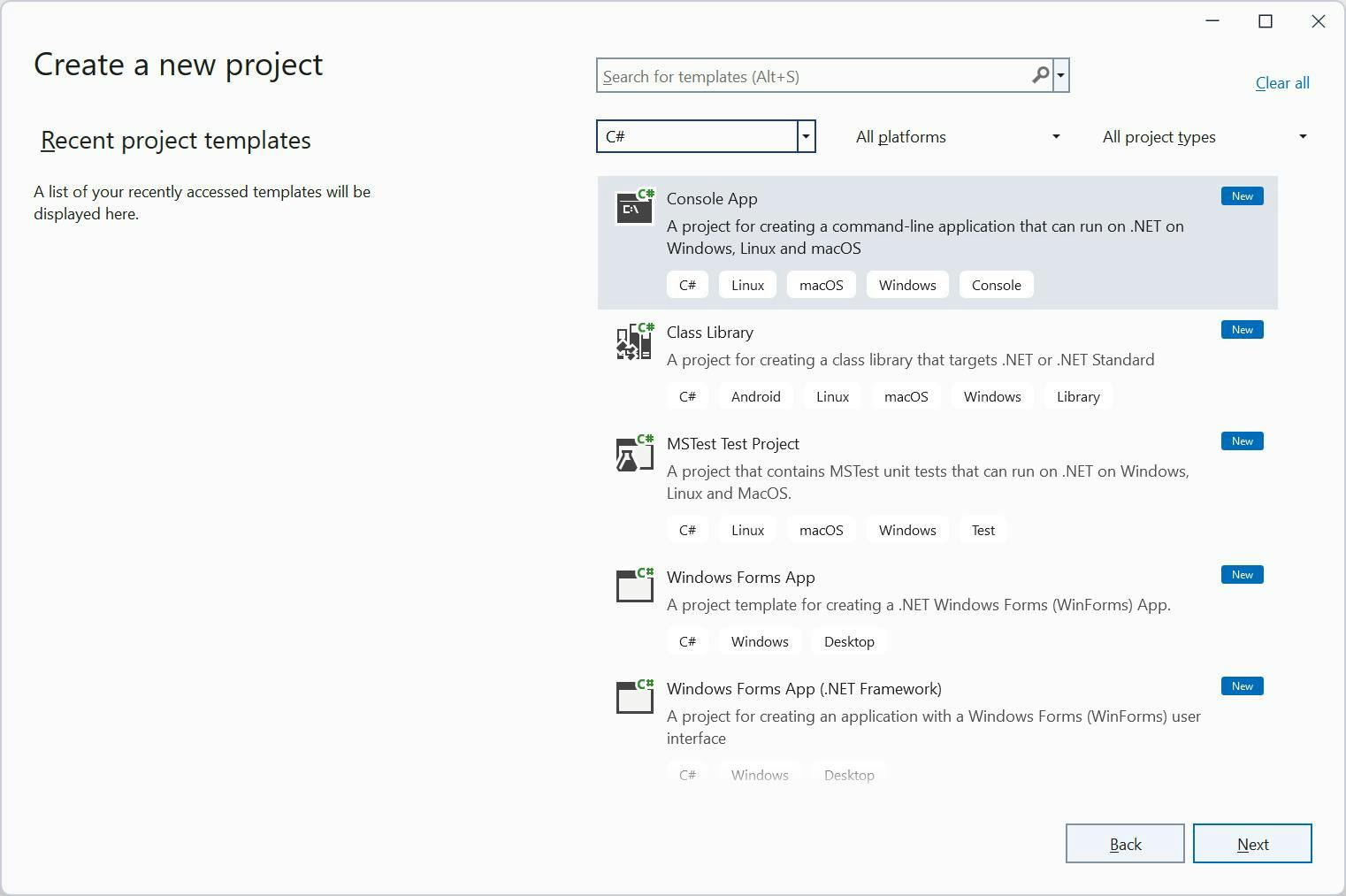

This article uses Visual Studio 2022 for Windows and .NET 7.0 (the code also runs on .NET 6.0). To follow along, open Visual Studio 2022 and create a new project.

In Visual Studio, select C# from the All Languages dropdown. If the Console App template is not visible, use the Search for templates input to locate it. Then click Next.

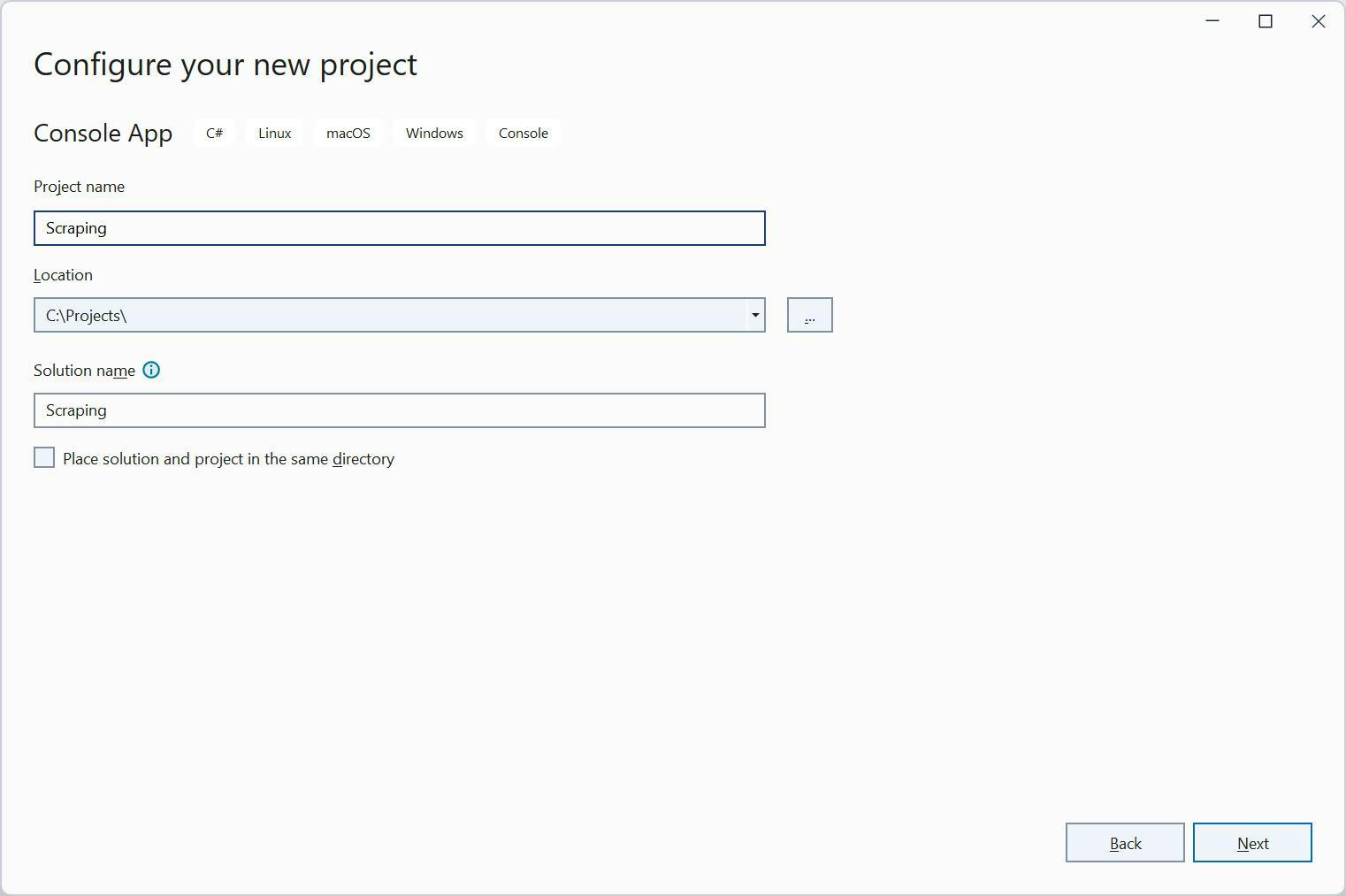

Choose the location for your project on the next screen. Then, click Next to proceed to the Additional Information screen. Ensure that you have .NET 7.0 selected before clicking Create.

After the project is created, you will see the Hello World code on the screen:

```csharp
// See https://aka.ms/new-console-template for more information
Console.WriteLine("Hello, World!");
```

Making an HTTP Request

Let’s make our first request with `HttpClient`. Replace the template code with the following:

```csharp
using var client = new HttpClient();
var result = await client.GetStringAsync("https://api.ipify.org?format=json");
Console.WriteLine(result);
```

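This code uses the `HttpClient` class from the `System.Net.Http` namespace (imported implicitly by the .NET console template, so no `using` directive is needed) to send an HTTP GET request to the https://api.ipify.org/ endpoint and retrieve the response as a string. The `format=json` query parameter asks ipify to return the address as JSON.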

Here is a breakdown of the code:

- `using var client = new HttpClient();`: This line creates a new instance of the `HttpClient` class and assigns it to the variable `client`. The `using` declaration disposes the client automatically when it goes out of scope, which here is the end of the program.

- `var result = await client.GetStringAsync("https://api.ipify.org?format=json");`: This line calls the `GetStringAsync()` method to send a GET request to the ipify endpoint, reads the response body as a string, and stores it in the variable `result`. The `await` keyword waits for the request to complete without blocking the thread; the program continues with the next line once the response has arrived.

- `Console.WriteLine(result);`: This line writes the contents of `result`, the response to the GET request, to the console.

Save the code and run it. You will see your IP address in the terminal.
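Because of `format=json`, the response is a small JSON object rather than a bare address. If you only need the address itself, a minimal sketch using the built-in `System.Text.Json` parser can extract it. The `ExtractIp` helper is our own, and the sample body below uses a documentation IP address rather than a real response.

```csharp
using System;
using System.Text.Json;

// Pulls the "ip" property out of an ipify JSON response such as {"ip":"203.0.113.7"}.
static string ExtractIp(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    return doc.RootElement.GetProperty("ip").GetString()
        ?? throw new FormatException("The 'ip' property is null.");
}

// Offline demonstration with a sample response body.
Console.WriteLine(ExtractIp("{\"ip\":\"203.0.113.7\"}")); // 203.0.113.7
```

In the program above, you would call `ExtractIp(result)` once `GetStringAsync` returns.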

Using Proxies with HttpClient

Before we get back to coding, visit the Free Proxy List website and select a proxy that is closest to your location. For this example, I will select a proxy located in Germany. Write down the IP address and the port of the selected proxy.

To use a proxy with `HttpClient`, we need to create an `HttpClientHandler` instance. On this instance, we set two properties: `Proxy`, which holds the proxy URL and port, and `ServerCertificateCustomValidationCallback`. That’s a long name for a property, but it is an important one.

Setting `ServerCertificateCustomValidationCallback` to `HttpClientHandler.DangerousAcceptAnyServerCertificateValidator` tells the handler to accept any server certificate, ignoring HTTPS certificate errors. You might be wondering why you have to do this.

A plain forwarding proxy tunnels HTTPS traffic with the HTTP `CONNECT` method and never touches the certificate. Some proxies, however, intercept HTTPS traffic: they decrypt it to inspect or modify it, then re-encrypt it with their own certificate before passing it on. As a result, the certificate your client receives is issued by the proxy, not by the target website.

By default, `HttpClient` (like most HTTP libraries) validates the certificate it receives. If the certificate was not issued by a trusted authority or does not match the requested host name, the request fails with an exception. This is where the certificate errors come from.

Ignoring certificate errors lets the request go through even when the proxy substitutes its own certificate. Keep in mind that it also removes the protection HTTPS gives you against man-in-the-middle attacks, so use it only with proxies you trust and never for sensitive traffic.
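Before switching certificate checks off, it can help to see what the proxy actually presents. The sketch below is a debugging aid of our own (the `LogAndValidate` name is hypothetical): it assigns a custom `ServerCertificateCustomValidationCallback` that prints the policy errors together with the certificate's subject and issuer, then accepts the certificate only if there are no errors. If the issuer names your proxy provider rather than a public certificate authority, the proxy is most likely re-signing the traffic.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Security;
using System.Security.Cryptography.X509Certificates;

// Debugging aid: prints certificate details for each HTTPS connection,
// then accepts only certificates that passed validation.
static bool LogAndValidate(HttpRequestMessage request, X509Certificate2? certificate,
                           X509Chain? chain, SslPolicyErrors errors)
{
    Console.WriteLine($"{request.RequestUri?.Host}: {errors}");
    Console.WriteLine($"  Subject: {certificate?.Subject}");
    // An issuer that names the proxy rather than a public CA suggests the proxy re-signs traffic
    Console.WriteLine($"  Issuer:  {certificate?.Issuer}");
    return errors == SslPolicyErrors.None;
}

using var handler = new HttpClientHandler
{
    Proxy = new WebProxy("http://5.9.139.204:24000"),
    ServerCertificateCustomValidationCallback = LogAndValidate
};
```

Once you know the errors come from the proxy, you can decide whether to trust it and switch to `DangerousAcceptAnyServerCertificateValidator`.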

It’s time to write the code. Let’s start with the HttpClientHandler instance:

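```csharp
using System.Net;

using var httpClientHandler = new HttpClientHandler
{
    // Route requests through this proxy (IP address and port)
    Proxy = new WebProxy("http://5.9.139.204:24000"),
    // Accept any server certificate, ignoring HTTPS certificate errors
    ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
};
```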

We need to provide the HttpClient class with an instance of the HttpClientHandler. The modified client code should appear as follows:

```csharp
using var client = new HttpClient(httpClientHandler);
```

The entire code should look like this:

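```csharp
using System.Net;

// Configure the handler to send requests through the proxy
using var httpClientHandler = new HttpClientHandler
{
    Proxy = new WebProxy("http://5.9.139.204:24000"),
    // Ignore HTTPS certificate errors caused by proxies that re-sign traffic
    ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
};

// The client uses the handler, and therefore the proxy, for every request
using var client = new HttpClient(httpClientHandler);

var result = await client.GetStringAsync("https://api.ipify.org?format=json");

// Prints the IP address the target server sees: the proxy's, not yours
Console.WriteLine(result);
```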

Running the code prints the proxy's IP address instead of yours. You can open the ipify URL in your browser and compare the results. Free proxies go offline often, so if the request fails or hangs, pick another proxy from the list.
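Since a free proxy may be dead or very slow, it helps to fail fast instead of waiting on a hung request. The sketch below shows one way to do this: set a short `HttpClient.Timeout` and catch the two exceptions `HttpClient` throws in these situations. `HttpRequestException` covers connection failures and error status codes, and `TaskCanceledException` covers timeouts. The helper names and the timeout value you pass in are our own choices.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Creates a client that sends every request through the given proxy and gives up after `timeout`.
static HttpClient CreateProxiedClient(string proxyUrl, TimeSpan timeout)
{
    var handler = new HttpClientHandler
    {
        Proxy = new WebProxy(proxyUrl),
        ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
    };
    // The client owns the handler and disposes it together with itself.
    return new HttpClient(handler) { Timeout = timeout };
}

// Returns the response body, or null if the proxy is unreachable, returns an error status, or times out.
static async Task<string?> TryGetStringAsync(HttpClient client, string url)
{
    try
    {
        return await client.GetStringAsync(url);
    }
    catch (HttpRequestException ex)
    {
        Console.WriteLine($"Request failed: {ex.Message}");
    }
    catch (TaskCanceledException)
    {
        Console.WriteLine($"Request timed out after {client.Timeout.TotalSeconds} s");
    }
    return null;
}
```

For example, `using var client = CreateProxiedClient("http://5.9.139.204:24000", TimeSpan.FromSeconds(10));` followed by `var result = await TryGetStringAsync(client, "https://api.ipify.org?format=json");` gives you `null` instead of an unhandled exception when the proxy is down.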

Let’s do authentication

When you sign up for a premium proxy service, you receive a username and password to use in your application for authentication.

It’s time to replace the WebProxy definition with this one:

```csharp
Proxy = new WebProxy
{
    Address = new Uri("http://5.9.139.204:24000"),
    Credentials = new NetworkCredential(
        userName: "PROXY_USERNAME",
        password: "PROXY_PASSWORD"
    )
},
```

By replacing the placeholder credentials, updating the proxy URL, and executing the code, you will see that the IP address printed is different from the one used by your computer. Give it a try!
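Hard-coding credentials makes them easy to leak through source control. The sketch below reads them from environment variables instead. The variable names `PROXY_USERNAME` and `PROXY_PASSWORD` are our own choice, mirroring the placeholders above, and `CreateAuthenticatedProxy` is a hypothetical helper name.

```csharp
using System;
using System.Net;

// Builds an authenticated WebProxy, reading the credentials from environment variables.
static WebProxy CreateAuthenticatedProxy(string proxyUrl)
{
    string user = Environment.GetEnvironmentVariable("PROXY_USERNAME")
        ?? throw new InvalidOperationException("PROXY_USERNAME is not set.");
    string pass = Environment.GetEnvironmentVariable("PROXY_PASSWORD")
        ?? throw new InvalidOperationException("PROXY_PASSWORD is not set.");

    return new WebProxy
    {
        Address = new Uri(proxyUrl),
        Credentials = new NetworkCredential(user, pass)
    };
}
```

Inside the handler initializer, the `Proxy` line then becomes `Proxy = CreateAuthenticatedProxy("http://5.9.139.204:24000"),`.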

Rotating Proxies

Rotating proxies is beneficial as it helps to avoid detection and prevents websites from blocking your IP address. Websites can monitor and block IP addresses that make excessive requests in a short time frame or those connected to scraping activities.

We can use the Free Proxy List website to build a list of proxies and pick one at random for each request. This way, consecutive requests are likely to come from different IP addresses, which makes the traffic look less suspicious to the target website.

Free proxy lists change frequently, so the proxies you find on the website will likely differ from the ones I compiled. I picked five proxies and defined them in a `List` like this:

```csharp
List<string> proxiesList = new List<string> {
    "http://65.108.230.238:45977",
    "http://163.116.177.46:808",
    "http://163.116.177.31:808",
    "http://20.111.54.16:80",
    "http://185.198.61.146:3128"
};
```

Let's choose a random index from this list. The proxy stored at that index is what we'll pass to the `WebProxy` constructor:

```csharp
var random = new Random();
int index = random.Next(proxiesList.Count);
```

What we need to do now is to combine all the parts. The final version of your scraper code should appear as follows:

```csharp
using System.Net;

// Free proxies from the Free Proxy List website (replace with your own)
List<string> proxiesList = new List<string> {
    "http://65.108.230.238:45977",
    "http://163.116.177.46:808",
    "http://163.116.177.31:808",
    "http://20.111.54.16:80",
    "http://185.198.61.146:3128"
};

// Pick a random proxy for this request
var random = new Random();
int index = random.Next(proxiesList.Count);

using var httpClientHandler = new HttpClientHandler
{
    Proxy = new WebProxy(proxiesList[index]),
    ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
};

using var client = new HttpClient(httpClientHandler);

var result = await client.GetStringAsync("https://api.ipify.org?format=json");

Console.WriteLine(result);
```
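The program above picks one proxy per run. To rotate between several requests within a single run, note that an `HttpClientHandler`'s properties, including `Proxy`, cannot be changed after it has sent its first request, so each proxy needs its own handler and client. The sketch below builds one client per proxy and hands them out in round-robin order. Round-robin is our substitution for the random pick used above, and `CreateRotation` is a hypothetical helper name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Net.Http;

// Builds one HttpClient per proxy and returns a function that hands them out
// in round-robin order. Not thread-safe: call it from one thread at a time.
static Func<(string Proxy, HttpClient Client)> CreateRotation(IReadOnlyList<string> proxies)
{
    if (proxies.Count == 0)
        throw new ArgumentException("At least one proxy is required.", nameof(proxies));

    var clients = proxies
        .Select(proxy => (Proxy: proxy, Client: new HttpClient(new HttpClientHandler
        {
            Proxy = new WebProxy(proxy),
            ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
        })))
        .ToList();

    int next = 0;
    return () =>
    {
        var entry = clients[next];
        next = (next + 1) % clients.Count; // wrap around after the last proxy
        return entry;
    };
}
```

Create the rotation once with `var nextClient = CreateRotation(proxiesList);`, then call `var (proxy, client) = nextClient();` before each `GetStringAsync`. The clients are meant to live for the whole program, which also lets each one reuse its connections.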

Using WebScrapingAPI Proxies

Opting for premium proxies from a service like WebScrapingAPI is a better choice than using free proxies because they are more dependable, faster, and offer enhanced security. Premium proxies are less susceptible to getting blocked by websites and have a lower response time.

In comparison, free proxies can be slow and unreliable, may contain malware, and tend to have a high failure rate because target sites often block them.

Interested in giving WebScrapingAPI a try? No problem, simply sign up for our 14-day free trial. You can use the 5,000 credits to test all the available features.

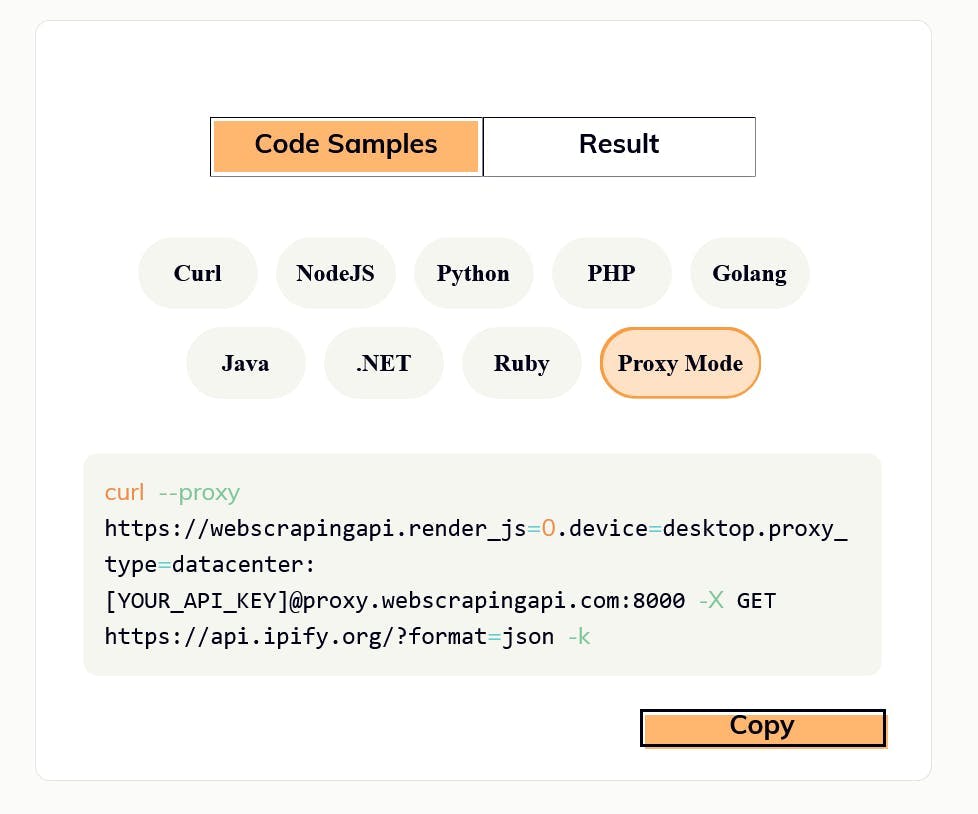

Once you have an account, go to the API Playground and choose the Proxy Mode tab in the Code Samples section.

Now we'll use the Proxy URL generated in the Proxy Mode tab with our C# implementation.

Update the proxy URL and the credentials in the httpClientHandler to look like this:

```csharp
using var httpClientHandler = new HttpClientHandler
{
    Proxy = new WebProxy
    {
        Address = new Uri("http://proxy.webscrapingapi.com:80"),
        Credentials = new NetworkCredential(
            userName: "webscrapingapi.render_js=0.device=desktop.proxy_type=datacenter",
            password: "YOUR_API_KEY"
        )
    },
    ServerCertificateCustomValidationCallback = HttpClientHandler.DangerousAcceptAnyServerCertificateValidator
};
```

You can use the username to enable or disable specific API features. Remember to also set your API key as the password. The full list of parameters is available in the WebScrapingAPI documentation.
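Judging by the example above, the username is the prefix `webscrapingapi` followed by `.parameter=value` pairs. Assuming that pattern holds for other parameters (check the documentation for exact names and allowed values), a small helper can assemble the string for you. `BuildProxyUsername` is our own hypothetical helper, not part of any WebScrapingAPI SDK.

```csharp
using System;
using System.Linq;

// Hypothetical helper: builds a proxy-mode username, assuming the "webscrapingapi"
// prefix followed by ".key=value" pairs seen in the example above.
static string BuildProxyUsername(params (string Key, string Value)[] parameters) =>
    string.Join(".", parameters.Select(p => $"{p.Key}={p.Value}").Prepend("webscrapingapi"));

string username = BuildProxyUsername(("render_js", "0"), ("device", "desktop"), ("proxy_type", "datacenter"));
Console.WriteLine(username); // webscrapingapi.render_js=0.device=desktop.proxy_type=datacenter
```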

Every time you execute this code, you will receive a different IP address as WebScrapingAPI rotates IPs with every request. You can learn more about this feature by reading the documentation on Proxy Mode.
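To check rotation yourself, you can send a few requests and count how many distinct addresses come back. The sketch below assumes you pass in a function that fetches the ipify JSON, for example `() => client.GetStringAsync("https://api.ipify.org?format=json")`; `CollectIpsAsync` is our own helper name. If a single run shows fewer distinct addresses than expected, keep in mind that `HttpClient` reuses its connection to the proxy, and how rotation behaves over a reused connection depends on the provider.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Threading.Tasks;

// Calls `fetchJson` `count` times and returns the distinct "ip" values it saw.
static async Task<HashSet<string>> CollectIpsAsync(Func<Task<string>> fetchJson, int count)
{
    var ips = new HashSet<string>();
    for (int i = 0; i < count; i++)
    {
        string json = await fetchJson();
        using JsonDocument doc = JsonDocument.Parse(json);
        ips.Add(doc.RootElement.GetProperty("ip").GetString() ?? "");
    }
    return ips;
}
```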

You also have the option to switch between datacenter and residential proxies. You can find more details about this in the Proxies section of our documentation.

Conclusion

Using a proxy is an essential aspect of web scraping, as it enables you to conceal your IP address and access restricted websites. The `HttpClient` class in .NET is a powerful tool for extracting data, and when combined with a reliable proxy, you can achieve efficient and fast data extraction.

By subscribing to a premium proxy service like WebScrapingAPI, you will have access to a wide range of features, including IP rotation and the option to switch between datacenter and residential proxies.

We hope that this article has given you a useful understanding of using a proxy with HttpClient and how it can benefit your scraping needs. Feel free to sign up for our 14-day free trial to test our service and explore all its features.
