Web Scraping using R: The Ultimate Guide with Steps

WebscrapingAPI on Oct 19 2022

Web scraping is a popular technique that professionals use to extract data from web pages. Many websites do not let users save their data for private use, so the data often has to be copied and pasted by hand, which is time-consuming and tedious.

This is where web scraping comes in. It extracts data from web pages automatically, using a piece of software known as a "web scraper." The scraper extracts and loads data from web pages according to the user's needs.

A web scraper can be custom-built for a single project, or it can be configured to work with many different web pages. Many tools can do web scraping, and many programming languages have good support for it.

One such language is R, which is a popular choice for web scraping. Do you want to scrape the web using R? Read on to learn how.

The R Programming Language: A Brief Definition

Before you dive into web scraping in R, it helps to know a little about R itself. R is a well-known open-source programming language for statistical computing and graphics, and it can also scrape data effectively. It was first presented in 1993 and released as open-source software in 1995.

R was designed by Ross Ihaka and Robert Gentleman, and its first stable version, R 1.0.0, was released in 2000. It was created to let users turn ideas into software quickly and faithfully. R is one of the most widespread languages among data scientists, and some of its well-known application areas are:

- Machine Learning

- Banking

- E-Commerce

- Finance

- Other Sectors That Work With Large Amounts of Data

Alongside SPSS and SAS, R is one of the most widely used analytics tools. It has an active support community of around 2 million users. Some businesses that use R, and what they use it for, include:

- Trulia - predicting house prices and local crime rates

- Facebook - analyzing status updates and the social network graph

- Foursquare - powering its recommendation engine

- Google - predicting economic activity and improving the efficiency of online advertising

Among other languages, R's main competitor is Python. Both have active communities and offer web scraping tools. The difference becomes clear when you look at their target audiences. Python has easy-to-learn syntax and a broad set of general-purpose features.

Using R to scrape a web page might look intimidating at first, because the language focuses on statistical analysis. However, R also offers a large set of built-in data analysis and visualization tools. These make web scraping projects easier, because such projects usually involve large amounts of data.

Web Scraping In R: Important Things You Should Keep in Mind

Before you start web scraping in R, there are a few things you should understand.

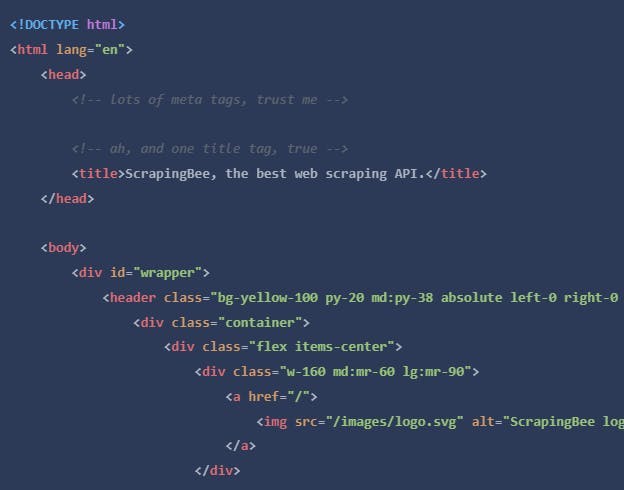

- Understanding Web Scraping and HTML Fundamentals

To scrape the web with R, you first need to understand the fundamentals of web scraping and HTML. Learn how to view a page's HTML code in your browser, and get familiar with the basic concepts of HTML and markup languages. This lays the groundwork for scraping data.

Once you know these basics, scraping with R becomes much easier than you might think. The following topics will help with web scraping in R.

- HTML Basics

Tim Berners-Lee first proposed the World Wide Web in the late 1980s as a platform of documents linked to one another. HTML has been its foundation ever since, and it underlies every web page. When you type a site's address into your browser, the browser downloads the page and renders it.

So how exactly do you scrape the web with R? Before anything else, you need to learn how a web page is structured and what it is composed of. A web page may display attractive images and colors, but the underlying HTML document is plain text.

The HTML document is the technical representation of a web page. It tells the browser which elements to display and how to display them. To extract data from a web page successfully, you need to analyze and understand its HTML document.

- HTML Tags and Elements

When you look at HTML code, you will come across markers such as <title>, </title>, <body>, and </body>. These are HTML tags, special markers in the HTML document. Each tag serves a specific purpose, and the web browser interprets each one differently.

For instance, <title> gives the browser the title of the web page, and <body> contains the page's primary content. Most tags come as opening and closing pairs with content in between, such as <p>Text</p>. Others are self-closing and stand on their own, such as <br>. Which form a tag takes depends on the tag type and its use case.

Tags can also carry attributes, which provide extra information relevant to the tag they belong to. For example, the href attribute of an <a> tag holds the link's URL. Once you understand the core concepts of an HTML file, such as tags, elements, tables, and the document tree, it becomes much easier to locate the parts you are interested in.

So, what's the main takeaway? An HTML page is a structured document with a tag hierarchy, and your scraper can use that hierarchy to extract the information you need.
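To see the tag hierarchy and attributes from R, here is a minimal sketch. It assumes the rvest package (version 1.0 or later) is installed, and R 4.1 or later for the native |> pipe. The HTML document is made up for illustration. The sketch reads the page into a document tree, walks from <body> to its child tags, and pulls attribute values out of specific tags.

```r
library(rvest)

# A small, made-up HTML document used only for illustration
page <- read_html('
<html>
  <head><title>Demo page</title></head>
  <body>
    <h1>Products</h1>
    <a href="/item/1">First item</a>
    <a href="/item/2">Second item</a>
    <img src="logo.png" alt="Logo">
  </body>
</html>')

# Text content of the <title> element inside <head>
title <- page |> html_element("title") |> html_text()

# Tag names of the element children of <body>, in document order
body_tags <- page |> html_element("body") |> html_children() |> html_name()

# Attribute values: the href of every <a> tag, and the alt text of <img>
links <- page |> html_elements("a") |> html_attr("href")
img_alt <- page |> html_element("img") |> html_attr("alt")

print(title)
print(body_tags)
print(links)
print(img_alt)
```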

- Parsing a Web Page With R Programming

Now it's time to scrape a target web page with R. Keep in mind that this first example only scratches the surface of the HTML content. Rather than extracting data into data frames, it simply prints the page's complete raw HTML code.

To fetch all the elements of a web page and see what they look like, you can use readLines(). It reads every line of the page's HTML into R, giving you a plain-text representation of the page to explore in your development environment.

If you store that result in a variable called flat_html and print it, the R console shows the raw HTML of the page, one line of HTML per element.
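A minimal sketch of this step follows. So that it runs without a network connection, it reads a small, made-up HTML string through textConnection(). With a real site, you would pass the page URL to readLines() instead, as noted in the comment. The result, flat_html, is a plain character vector with one element per line of HTML.

```r
# Made-up HTML text standing in for a downloaded page
html_source <- "<html>
<head><title>Demo page</title></head>
<body><p>Hello, world!</p></body>
</html>"

# For a live page you would read the URL directly, for example:
# flat_html <- readLines("https://example.com", warn = FALSE)
con <- textConnection(html_source)
flat_html <- readLines(con)
close(con)

# Each element of flat_html is one raw line of HTML text
print(flat_html)
```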

Keep in mind that this example is just an experiment. It can be a fun exercise, and you can use the same approach to scrape multiple pages of a website, such as IMDb, on your own machine.

Whether you scrape a single page or many, the approach works as long as you do it correctly. However, although the output of readLines() looks like HTML, it is not a parsed HTML document. readLines() reads the text correctly, but it doesn't take the document structure into account: each line is just a string.
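The following sketch makes the difference concrete. It assumes rvest 1.0 or later and uses a made-up two-line HTML string. With readLines(), extracting the paragraphs needs a hand-written regular expression, and it returns the raw tags. With rvest's read_html(), the same paragraphs are addressed as <p> elements of the document tree.

```r
library(rvest)

# Made-up HTML; both paragraphs sit on the same line of text
html_source <- "<html><head><title>Demo page</title></head>
<body><p>First paragraph</p><p>Second paragraph</p></body></html>"

# readLines() view: a character vector of raw lines with no structure
con <- textConnection(html_source)
flat_html <- readLines(con)
close(con)

# Finding paragraphs in flat text requires a fragile regular expression
flat_matches <- regmatches(flat_html, gregexpr("<p>[^<]*</p>", flat_html))
flat_paragraphs <- unlist(flat_matches)

# Parsed view: the document tree knows what a <p> element is
parsed_paragraphs <- read_html(html_source) |> html_elements("p") |> html_text()

print(length(flat_html))   # number of raw text lines
print(flat_paragraphs)     # matches still include the <p> tags
print(parsed_paragraphs)   # only the text content of each <p>
```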

This is just an illustration of what scraping a web page with R looks like. Real-world scraping code is usually much more complicated, but several R libraries make the work a lot simpler.

Getting to Know CSS

While HTML provides the structure and content of a web page, CSS (Cascading Style Sheets) describes how the page should be styled. Without CSS, a web page looks plain. "Styling" covers a wide range of things rather than one single property.

Styling can refer to the color of HTML elements or their positioning. Like HTML, CSS is too broad to cover every concept here. Before scraping with R, however, you should learn about IDs and classes. This section focuses on classes.

When you build a website, you want similar components to look the same. For instance, you might have several items in a list that should all share the same color, such as red.

One way to accomplish that is to add a style attribute carrying the color information to the HTML tag of each line of text:

<p style="color:red">Text 1</p>

<p style="color:red">Text 2</p>

<p style="color:red">Text 3</p>

Here, the style attribute applies a CSS rule to the <p> tags. Inside the quotes is the property-value pair "color:red". The color property refers to the color of the text inside the <p> tags, and red defines what that color should be. Repeating the same style on every tag quickly becomes tedious. Instead, a class can be defined once in a stylesheet and then applied to each element with the class attribute.
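For scraping, the classes and IDs used for styling are useful because they double as hooks for selecting elements. Here is a minimal sketch, assuming rvest 1.0 or later. The markup below replaces the repeated style attributes with a single CSS rule for a hypothetical class named red-text, and gives one paragraph a hypothetical id of intro. The selector ".red-text" matches every element with that class, while "#intro" matches the one element with that id.

```r
library(rvest)

# Made-up markup: one CSS rule for the class "red-text" replaces the
# repeated style="color:red" attributes; "intro" is a unique id
page <- read_html('
<html>
  <head><style>.red-text { color: red; }</style></head>
  <body>
    <p id="intro">Introduction</p>
    <p class="red-text">Text 1</p>
    <p class="red-text">Text 2</p>
    <p class="red-text">Text 3</p>
  </body>
</html>')

# ".red-text" selects every element whose class is red-text
red_paragraphs <- page |> html_elements(".red-text") |> html_text()

# "#intro" selects the single element whose id is intro
intro <- page |> html_element("#intro") |> html_text()

print(red_paragraphs)
print(intro)
```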

The rvest Library

Besides CSS, you also need a good understanding of the rvest package, one of the most important R packages for web scraping. rvest is maintained by the well-known Hadley Wickham and lets users harvest, or scrape, data from web pages. It is part of the tidyverse, so it works well with the other packages in that collection. rvest is inspired by Python's web scraping library Beautiful Soup.
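A typical rvest workflow is to read a page, select nodes with CSS selectors, and then extract their text or tables. The sketch below assumes rvest 1.0 or later, which provides html_elements() and html_text2(). It uses a made-up HTML snippet instead of a live page, so the titles, years, and the movie class name are all hypothetical.

```r
library(rvest)

# Made-up page content; with a live site you would call read_html()
# with the page URL instead of a literal HTML string
page <- read_html('
<html><body>
  <h2 class="movie">The Example</h2>
  <h2 class="movie">Another Example</h2>
  <table>
    <tr><th>Title</th><th>Year</th></tr>
    <tr><td>The Example</td><td>2001</td></tr>
    <tr><td>Another Example</td><td>2010</td></tr>
  </table>
</body></html>')

# Step 1: select nodes with a CSS selector and extract their text
titles <- page |> html_elements("h2.movie") |> html_text2()

# Step 2: convert the HTML <table> into a data frame (a tibble);
# the <th> row becomes the column names
movies <- page |> html_element("table") |> html_table()

print(titles)
print(movies)
```

With a real site, the main change is passing the page URL to read_html(). The selectors then have to match that site's actual markup, which you can inspect in your browser's developer tools.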

Using Web Scraping API Software: How Exactly Will It Help?

To obtain data stored on different websites, you need the right web scraping tool. A good tool not only helps with further analysis but also speeds up the scraping work considerably. Among web scraper tools, Web Scraping API is currently one of the best on the market.

The software comes in free and paid versions and works with various browsers, including Google Chrome. You can use it for common web scraping scenarios such as scraping data from Wikipedia with R or downloading files over FTP with R.

Over 10,000 companies use WebScrapingAPI to scrape over 50 million each month. The software is built with state-of-the-art technology that helps targets load within a matter of seconds and returns an instant API response.

You can also use it for sentiment analysis and JavaScript rendering, and it comes with anti-bot detection features. It also offers rotating proxies: the software manages all of the proxies and rotates them automatically.

Pros:

- It's simple to use

- It has an easy registration process

- It comes with the Amazon Scraper API

- Companies can use the tool for price comparison, financial data, lead generation, etc.

Cons:

- It's a paid tool

Scrape Websites with Web Scraping API

The internet is full of data sets that people can use for their personal projects. Sometimes you can access an API and request the data directly through R. That is not always possible, however, and even when it is, the data may not come in a clean format. This is where web scraping comes in.

Web scraping lets you get the data you want to analyze by finding it in the site's HTML code. To do the scraping, you need a proper tool, such as Web Scraping API. This effective software is used by numerous businesses and makes web scraping work 3 times faster.

It has excellent customer support, and you can choose a pricing plan that fits your budget.

Get Web Scraping API now and get started!
