Scrapebox Alternatives: The Top 5 Web Scraping Tools to Use

WebscrapingAPI on Nov 07 2022

If you're fascinated by web scrapers and need a solution that can effectively scrape website data, you've come to the right place.

Scrapebox is an automated data extraction tool. It offers a straightforward architecture for web scraping. However, Scrapebox is prone to errors and spamming, so it is worth considering a Scrapebox alternative.

Finding a good Scrapebox alternative might not be what you want, but it might be what you need.

But what exactly is Scrapebox? What does it do? What is the best Scrapebox alternative? Well, I’ve got you covered with the answers!

Let’s dive in!

Scrapebox

Scrapebox is a straightforward, system-independent architecture for web scraping. It uses the Vagrant VirtualBox interface with Puppet provisioning. You can build and run scrapers that turn online material into structured data, all without altering your primary system.

Scrapebox is a shared infrastructure used to run scrapers and web crawlers. It can generate structured data from various online domains, which can then be used to power applications and data catalogs.

Installation

Install Vagrant on your host computer's operating system first. Vagrant launches virtual machines within VirtualBox on your host computer's operating system.

This ensures that all developers use the same runtime environment: Vagrant starts from a shared image and configures it with Puppet.

Here are the steps you need to follow:

- Type vagrant up to launch the virtual machine.

- Wait a few minutes for installation and setup to complete.

- SSH into the virtual machine with vagrant ssh.

- Finish by opening the virtual environment and going to the synchronized folder.

Scraping

Spiders crawl websites and gather information from the pages. Every spider is tailored to a particular website or group of websites. You can see the available spiders by running scrapy list.

You can begin crawling with the scrapy crawl command followed by a spider's name. Scraped data is usually saved as JSON under '<project root>/feed.json'. Data can also be produced as CSV or XML, or sent straight to a web service or database.
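To make the output formats concrete, here is a minimal sketch in plain Python of writing the same scraped items as JSON, CSV, or XML. It uses only the standard library rather than the framework's own exporters, and the function name export_items and the sample items are assumptions made for illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def export_items(items, fmt="json"):
    """Serialize a list of flat dicts (scraped items) to JSON, CSV, or XML text."""
    if fmt == "json":
        return json.dumps(items, indent=2)
    if fmt == "csv":
        buffer = io.StringIO()
        # Use the union of keys, in first-seen order, as the CSV header.
        fieldnames = list(dict.fromkeys(key for item in items for key in item))
        writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
        writer.writeheader()
        writer.writerows(items)
        return buffer.getvalue()
    if fmt == "xml":
        root = ET.Element("items")
        for item in items:
            node = ET.SubElement(root, "item")
            for key, value in item.items():
                ET.SubElement(node, key).text = str(value)
        return ET.tostring(root, encoding="unicode")
    raise ValueError(f"unsupported format: {fmt}")


# Hypothetical scraped items.
items = [
    {"title": "Example page", "url": "https://example.com/"},
    {"title": "Another page", "url": "https://example.com/other"},
]
print(export_items(items, "csv"))
```

The same list of items feeds every format, which is why a scraper can switch its output target without changing the crawling logic.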

Features

- Search Engine scraping

- Keyword scraping

- Proxy harvesting

- Web page meta scraping

- Email scraping

- Comment scraping

- Phone number scraping

Pros

- Provides easy-to-use tools to search the web for long-tail keywords related to your topic.

- Platform customization allows you to select the features beneficial to your business.

- Versatile platform that can meet all of your requirements.

- Ease of use and understanding for beginners.

- Works with Windows XP, Vista, 7, 8, 10, and 11, Apple Mac, and other operating systems.

Cons

- Optimal for people with basic knowledge of data scraping

- Scraping returns no results or a lot of errors

- Results are often scraped from irrelevant and unreliable sites

- Most websites will restrict you since they do not want spammers scraping their pages.

- All your emails will be transferred to spam, deleted, or banned.

- Marks your domain as a spam advertiser.

- It is pricier than other tools

Pricing

One-time purchase of $197, which is quite pricey.

Top 5 Web Scraping Tools To Try Now

Scrapebox may not offer you the best solution to your data scraping problems. But I've got you covered with Scrapebox alternatives you can use. I have also included my favorite tool, which I've found to be the best based on its speed, architecture, price, proxy mode, and JavaScript rendering.

Here is a list of my Top 5 Scrapebox alternatives:

- Agenty

- Scraper API

- Outwit Hub

- Scrapy

- WebScrapingAPI

I will explain each of them and what it has to offer: installation, features, pros, cons, and pricing.

Let's dive in!

1. Agenty

Agenty is a no-code web scraping tool. You can extract data from any website. You can use it when you need quality data for your AI algorithm or want to track your competitors' prices. The software and built-in API give you a good web scraping experience on the cloud.

A scraping agent is a collection of settings for scraping a specific website, such as fields, selectors, headers, and so on.
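As a rough illustration of what such a collection of settings might look like, the sketch below models an agent as a small data class. The class name ScrapingAgent, its attributes, and the example selectors are all hypothetical and are not taken from Agenty's own configuration format.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ScrapingAgent:
    """Hypothetical container for the settings that describe one scraping job."""

    name: str
    start_urls: List[str]
    # Maps an output field name to the CSS selector that locates it.
    selectors: Dict[str, str] = field(default_factory=dict)
    # Extra HTTP headers to send with every request.
    headers: Dict[str, str] = field(default_factory=dict)

    @property
    def fields(self) -> List[str]:
        """Names of the fields this agent extracts."""
        return list(self.selectors)


agent = ScrapingAgent(
    name="product-prices",
    start_urls=["https://example.com/products?page=1"],
    selectors={"title": "h1.product-title", "price": "span.price"},
    headers={"User-Agent": "example-agent/0.1"},
)
print(agent.fields)
```

Keeping the settings separate from the crawling code is what lets one agent be reused across many similarly structured pages.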

The scraping agent may collect data from

- Sitemaps

- RSS feeds

- Public websites

- Web APIs

- JSON pages

- Password-protected websites

- XML pages and a variety of other web resources.

Installation

The Chrome extension, accessible in the Chrome store, may be used to generate the scraping agent.

Scraping

A single scraping agent can gather information from many similarly structured pages, whether there are 100 or millions of them. You only need to submit the URLs using one of the input types available in the agent, or you can use the more advanced capabilities.

Features

- Point to click

- Batch URL crawling

- Advanced scripting

- Integrations

- Crawling history

- Crawl website with logins

- Anonymous web scraping

- Scheduling

Pros

- Provides clear scraping instructions

- Time efficient

- Great customer service

- Affordable pricing

Cons

- Hidden costs

- Problems logging in

Pricing

The basic plan starts at $29 per month

2. Scraper API

Scraper API is multi-language software that simplifies web scraping. It is compatible with Bash, Python/Scrapy, PHP, Node, Ruby, and Java.

Scraper API is a developer-friendly API that allows you to scrape HTML from web pages. Because it fetches the pages for you, you don't have to worry about retrieving them yourself. This means you won't have to deal with CAPTCHAs, browsers, proxies, or anti-bot systems.

All you have to deal with are data processing duties, which begin with parsing data from downloaded web pages.

All that is asked of you is a simple API call. The service routes your requests through a vast pool of locations and IP addresses. Pricing is based on successful API requests, and bandwidth use is unlimited.
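A minimal sketch of what such a call could look like is shown below. It only builds the request URL and sends nothing over the network. The endpoint and the parameter names (api_key, url, country_code) are assumptions here and should be checked against the provider's documentation; build_request_url is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm it in the provider's documentation.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key, target_url, **options):
    """Return the GET URL that asks the API to fetch target_url on our behalf."""
    params = {"api_key": api_key, "url": target_url, **options}
    # urlencode percent-encodes the target URL so it survives as one parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


print(build_request_url("YOUR_API_KEY", "https://example.com/", country_code="us"))
```

The response to a request like this would be the page's HTML, which is where your own parsing code takes over.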

Scraping

The new Async Scraper endpoint lets you submit web scraping jobs at scale without specifying timeouts or retries, and gives each job a separate status endpoint from which to retrieve the data.

This makes your web scrapers more resilient, regardless of how complicated the sites' anti-scraping techniques are.
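On the client side, working with a status endpoint usually comes down to polling until the job is done. The sketch below shows that pattern with a simulated endpoint. The helper wait_for_job and the status and response field names are illustrative assumptions, not a documented schema.

```python
import time


def wait_for_job(get_status, poll_interval=1.0, max_polls=30, sleep=time.sleep):
    """Poll get_status() until the job reports it is finished, then return its result.

    get_status is any callable returning a dict such as
    {"status": "running"} or {"status": "finished", "response": ...}.
    """
    for _ in range(max_polls):
        job = get_status()
        if job.get("status") == "finished":
            return job.get("response")
        if job.get("status") == "failed":
            raise RuntimeError("scraping job failed")
        sleep(poll_interval)
    raise TimeoutError("job did not finish in time")


# Simulated status endpoint: two "running" replies, then the finished payload.
replies = iter([
    {"status": "running"},
    {"status": "running"},
    {"status": "finished", "response": "<html>...</html>"},
])
print(wait_for_job(lambda: next(replies), poll_interval=0))
```

In real use, get_status would wrap an HTTP request to the job's status URL.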

Features

- Supports POST/PUT Requests

- Sessions

- Custom Headers

- JavaScript Rendering

- Proxy Mode

- Geographic Location

Pros

- Scrape text files and images

- You can set HTTP headers

- Fast and Reliable

- Built to scale

- Anti-bot detection bypassing to reduce blocks

Cons

- Smaller plans come with limitations

- You may sometimes experience blocks

Pricing

The starting package is $49 per month

3. Outwit Hub

Outwit Hub is a Firefox extension obtained from the Firefox add-ons store. Once installed and active, you may immediately scrape content from websites.

The contents of a Web page are displayed simply and visually, needing no programming skills or significant technical understanding. You can easily extract links, photos, email addresses, RSS news, and data tables.
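For readers curious about what this kind of extraction involves under the hood, here is a small standard-library sketch that pulls links, image sources, and email addresses out of an HTML snippet. It is not how OutWit Hub is implemented; the class name PageExtractor, the simplified email pattern, and the sample HTML are assumptions for illustration.

```python
import re
from html.parser import HTMLParser

# Deliberately simple pattern; real-world email matching is messier.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class PageExtractor(HTMLParser):
    """Collect link targets, image sources, and email addresses from HTML."""

    def __init__(self):
        super().__init__()
        self.links, self.images, self.emails = [], [], set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            href = attrs["href"]
            if href.startswith("mailto:"):
                self.emails.add(href[len("mailto:"):])
            else:
                self.links.append(href)
        elif tag == "img" and attrs.get("src"):
            self.images.append(attrs["src"])

    def handle_data(self, data):
        # Also pick up addresses that appear as plain text.
        self.emails.update(EMAIL_RE.findall(data))


html = """
<p>Contact <a href="mailto:info@example.com">us</a> or sales@example.com.</p>
<a href="https://example.com/docs">Docs</a> <img src="/logo.png">
"""
extractor = PageExtractor()
extractor.feed(html)
print(extractor.links, extractor.images, sorted(extractor.emails))
```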

It offers excellent "Fast Scrape" features that swiftly scrape data from a list of URLs you feed it. Outwit Hub does not require any programming abilities to extract data from websites.

The scraping procedure is relatively simple to learn. You can refer to their tutorials to get started with web scraping with the program.

Outwit Hub also provides tailor-made scraper services.

Features

- Automatic multi-page browsing

- Table and list extraction

- Email extraction

- Data structure recognition

Pros

- Fast data extraction

- Store images

Cons

OutWit Hub lacks proxy rotation and anti-captcha capabilities. Thus while the tool is accessible and straightforward, it is restricted in the pages it can scrape.
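To show what proxy rotation means in practice, the sketch below pairs each URL with the next proxy in round-robin order, so consecutive requests would leave from different addresses. The proxy addresses are placeholders from a documentation-only IP range, and assign_proxies is a hypothetical helper that makes no network requests.

```python
from itertools import cycle

# Placeholder proxy addresses (TEST-NET-1 range, reserved for documentation).
PROXIES = [
    "http://192.0.2.10:8080",
    "http://192.0.2.11:8080",
    "http://192.0.2.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]


urls = [f"https://example.com/page/{n}" for n in range(1, 5)]
for url, proxy in assign_proxies(urls, PROXIES):
    print(proxy, "->", url)
```

With three proxies and four URLs, the fourth request wraps around to the first proxy again.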

Pricing

There is a free Light version, but the PRO version starts at 95€.

4. Scrapy

Scrapy is a high-level web crawling and scraping framework for crawling websites and extracting data sets from their pages. You may use it for various tasks, including data mining, monitoring, and automated testing.

Zyte (previously Scrapinghub) and many other contributors maintain Scrapy. It requires Python 3.7 or above and runs on Windows, Linux, macOS, and BSD.

One of Scrapy's most appealing features is that the requests it sends are scheduled and handled asynchronously. The scraper does not have to work through pages one at a time, waiting for each to finish.

Instead, it can request several pages concurrently and complete its tasks as quickly as possible. Furthermore, if it runs into a problem on one page, its work on other pages is not affected.
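The idea can be illustrated with Python's built-in asyncio module. This is a simplified stand-in and not Scrapy's actual engine: the fetch function fakes a network request, and the URLs are made up. It shows several pages being processed concurrently while a failure on one page leaves the others untouched.

```python
import asyncio


async def fetch(url):
    """Stand-in for a network request; a real crawler would do I/O here."""
    await asyncio.sleep(0.01)
    if "broken" in url:
        raise ValueError(f"could not parse {url}")
    return f"contents of {url}"


async def crawl(urls):
    # Schedule every request at once. return_exceptions=True collects a
    # failure as a result instead of discarding the other pages' results.
    results = await asyncio.gather(*(fetch(u) for u in urls), return_exceptions=True)
    return dict(zip(urls, results))


urls = [
    "https://example.com/a",
    "https://example.com/broken",
    "https://example.com/c",
]
for url, result in asyncio.run(crawl(urls)).items():
    status = "error" if isinstance(result, Exception) else "ok"
    print(status, url)
```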

Features

- Built-in support for selecting and extracting data with CSS selectors and XPath

- Open source and free web scraping tool

- Extracts data from websites automatically

- Exports data in CSV, JSON, and XML

Pros

- Fast and powerful

- Easily extensible

- Portable, written in Python

Cons

- Time-consuming

- Requires basic knowledge of computers

Pricing

- Free

5. WebScrapingAPI

My favorite web scraping tool is WebScrapingAPI. This API has given the most reliable and straightforward solutions for my scraping problems. Let me add that you get all solutions under one API with an easy-to-navigate UI.

WebScrapingAPI is used to scrape data from the web, search engine results pages, and Amazon. You are served by a team of professionals who ensure you get the best solutions. You never have to deal with unprofessionalism.

Furthermore, it is a straightforward and efficient REST API interface for scraping web pages at scale. It enables users to scrape websites effortlessly and extract HTML code.

To provide the highest level of service for its clients, the API handles tasks that would otherwise need to be developed by a programmer.

Features

Here are a few of the features that make this my reliable web scraping tool:

- Amazon Web Services (AWS)

The API's architecture is built on AWS, so AWS and its worldwide data centers provide the foundation for WebScrapingAPI. This means everything is linked via its top-tier network. AWS reduces hops and distance, resulting in quick and safe data delivery.

- Architecture obsessed with speed

WebScrapingAPI employs cutting-edge technology. This ensures that your target website loads in a flash, and you receive HTML content immediately. No one wants a slow API. You get results with total resource separation, automated scalability, and uptime.

- API for Scraper

With the Web Scraping API capability, you can obtain data from websites with far less risk of being blocked. IP rotation is the feature that makes this possible.

- API for Amazon Product Data

You can also use the Amazon Product Data API function to extract data in JSON format. This capability is recommended for a secure JavaScript rendering process.

- API for Google Search Results

The Search Console API allows you to access the most useful insights and actions in your Search Console account, such as updating your sitemaps, displaying your verified sites, and monitoring your search stats.

- JavaScript Rendering

Setting the render_js parameter in your request makes WebScrapingAPI visit the targeted website via a headless browser. JavaScript page components can then render before the complete scraping result is returned. No more stress over enabling JavaScript.

- Rotating proxies

Access the one-of-a-kind, massive pool of IPs from hundreds of ISPs, which supports real-world devices and automated IP rotation to improve dependability and prevent IP bans.
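As a hedged sketch of how the JavaScript rendering option from the feature list could be switched on, the snippet below builds a request URL with and without it. Nothing is sent over the network. The base URL, the parameter names api_key and url, and the spelling and value of render_js are assumptions to verify against the official documentation; build_scrape_url is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed base URL; confirm it against the official documentation.
BASE_URL = "https://api.webscrapingapi.com/v1"


def build_scrape_url(api_key, target_url, render_js=False):
    """Build a request URL, optionally asking for headless-browser rendering."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render_js"] = 1  # assumed parameter name and value
    return f"{BASE_URL}?{urlencode(params)}"


print(build_scrape_url("YOUR_API_KEY", "https://example.com/", render_js=True))
```

Leaving render_js off keeps requests lighter for static pages, so it makes sense to enable it only for sites that build their content in the browser.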

How can you resist all the features that come with WebScrapingAPI? Remember, all solutions are under one API!

Pros

- Customizable features

- EVERY package offers JavaScript rendering

- High service uptime

- All packages are affordable

- 100+ million rotating proxies to reduce blocking

- AWS architecture

Cons

No problems are currently found.

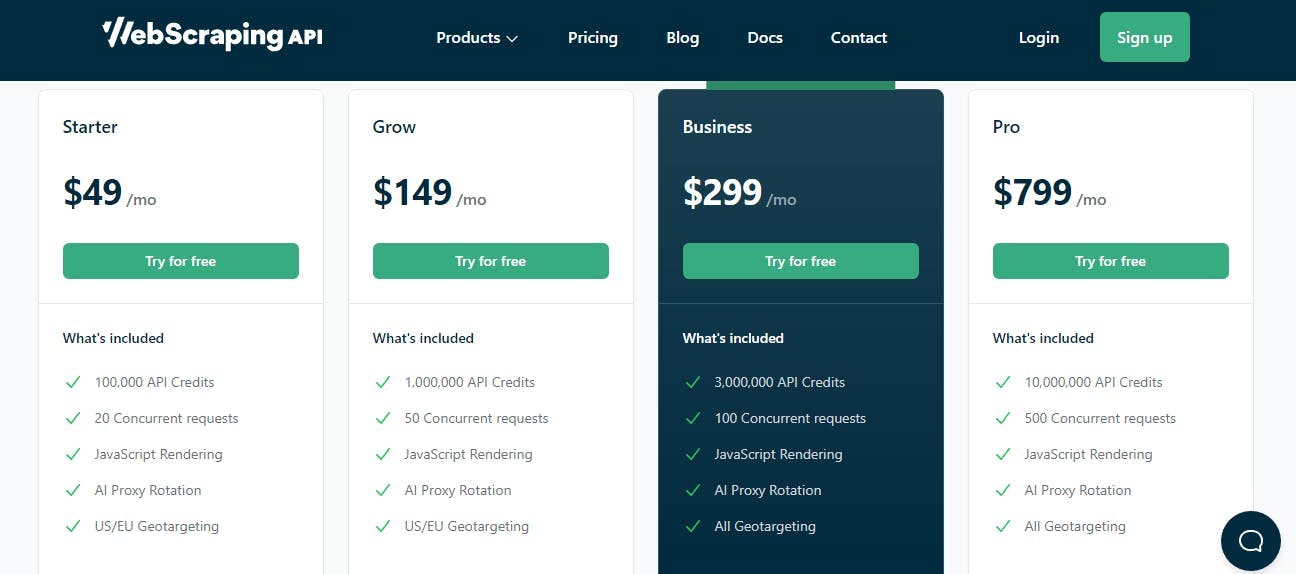

Pricing

- The starting package is $49 per month

- All packages have a 14-day trial

Why WebScrapingAPI Is The Best ScrapeBox Alternative

WebScrapingAPI is my top Scrapebox alternative. Here’s why.

JavaScript rendering, IP rotation, CAPTCHA handling, and other features are available. When you try to scrape a website, you may run into a number of challenges, and WebScrapingAPI handles them for you.

Web scraping APIs (WSAPI) enable businesses to expand their existing web-based systems. They provide a well-thought-out collection of services that support mobile applications and developers, help build new business platforms, and improve partner interaction.

Web scraping APIs provide clean, organized data from existing websites for use by other applications. They expose data that can be tracked, changed, and managed. Their built-in architecture lets developers accommodate website changes, such as a site migrating to a new environment, without changing the collection algorithm.

Because of these benefits, major companies such as Infraware, SteelSeries, Deloitte, and others rely on WebScrapingAPI solutions.

To try out the comprehensive WebScrapingAPI package, sign up for a free 30-day trial.

What sets these web scrapers apart is that virtually no web data is out of reach for extraction. Use the information you obtain to grow your business.

For only $49 per month, you can start your web scraping journey with this API. You get access to email support, JavaScript rendering, API calls, proxies, and concurrent requests.

Over 10,000 users are using WebScrapingAPI; join them today.
