5 Best axios Alternative Tools For GET and POST Requests

WebscrapingAPI on Sep 28 2022

Some developers undoubtedly prefer Axios to built-in APIs because of its ease of use. However, many people overestimate the need for such a library, so you might consider an Axios alternative.

With dozens of options available, learning how to fetch data can be intimidating. Each solution claims to be superior to the others. Some provide cross-platform support, while others prioritize developer experience.

Web applications must often communicate with web servers to obtain various resources. You may need to send data to, or get data from, an API or an external server.

Axios fans know how simple it is to install the module. You can add it to your project right away by running npm install axios.

I'll walk you through Axios in this post. You will learn what it is and how to use it. I've also listed Axios alternatives for you to consider.

Without further ado, let's get started!

Axios: Node.js, Browser-based, and Promise-based HTTP client

Axios is a promise-based HTTP client for Node.js and the browser. It makes GET and POST requests straightforward, and you can also use it to send repeated HTTP requests to REST APIs.

You can use it in plain JavaScript or alongside a library like React or Vue.

Sending asynchronous HTTP requests from the JavaScript side is a necessity when creating dynamic web apps.

Axios is a popular JavaScript library for performing these requests, both in the browser and in Node.js, and it has a strong reputation in the community.

How to Make an HTTP Request With axios?

Performing HTTP requests with Axios is simple. You pass an object with all the configuration settings and data to the axios() function.

- First, install Axios by running npm install axios

- Next, pass the relevant configuration to axios()

Let's take a closer look at the configuration options:

- Method: The HTTP method used to send the request.

- URL: The URL of the server to which the request is sent.

- Data: In POST, PUT, and PATCH requests, the data given with this option is sent in the HTTP request body.

Refer to the documentation for further information on the configuration options with Axios request functions.
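The three options above can be sketched as follows. This is a minimal illustration rather than an official snippet: the URL and payload are placeholders, and `buildUserRequest` and `sendUser` are hypothetical helpers. `sendUser` receives the axios function as a parameter so the sketch does not depend on how you import the library.

```javascript
// Build a request configuration using the method, url, and data options.
function buildUserRequest(user) {
  return {
    method: 'post',                        // HTTP method
    url: 'https://example.com/api/users',  // placeholder endpoint (assumption)
    data: user,                            // sent in the request body for POST, PUT, PATCH
  };
}

// Hypothetical helper: `client` is the axios function itself.
// axios(config) returns a promise that resolves to a response object
// whose `data` property holds the parsed response body.
function sendUser(client, user) {
  return client(buildUserRequest(user)).then((response) => response.data);
}
```

With Axios installed, a call might look like `sendUser(require('axios'), { name: 'Ada' }).then(console.log).catch(console.error)`.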

Why is axios Popular?

Currently, the two most popular JavaScript options for sending HTTP requests are the native Fetch API and Axios. Axios has the edge over the Fetch API thanks to some of the capabilities it gives developers out of the box.

I've included a few of them below:

- Request and response interceptors

- Effective error management

- Client-side protection against cross-site request forgery

- Response timeouts

- The ability to cancel requests

- Support for older browsers like Internet Explorer 11

- Automatic JSON data transformation

- Upload progress support

Because of these extensive capabilities, many developers prefer Axios to the Fetch API for making HTTP requests.
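Two of these capabilities, interceptors and timeouts, can be sketched as below. This is an illustration under assumptions: `createApiClient` is a hypothetical factory, the base URL and the 5-second timeout are placeholders, and the axios module is passed in as a parameter. It relies only on `axios.create` and the `interceptors.request.use` / `interceptors.response.use` hooks.

```javascript
// Hypothetical factory: `axios` is the imported axios module.
function createApiClient(axios, token) {
  const client = axios.create({
    baseURL: 'https://example.com/api', // placeholder (assumption)
    timeout: 5000,                      // fail the request if no response arrives within 5 s
  });

  // Request interceptor: attach an Authorization header to every request.
  client.interceptors.request.use((config) => {
    config.headers = config.headers || {};
    config.headers.Authorization = `Bearer ${token}`;
    return config;
  });

  // Response interceptor: unwrap the body and normalize errors in one place.
  client.interceptors.response.use(
    (response) => response.data,
    (error) => Promise.reject(new Error(`Request failed: ${error.message}`))
  );

  return client;
}
```

Because the response interceptor returns `response.data`, callers of this client receive the parsed body directly instead of the full response object.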

Why axios Is Not The Most Reliable HTTP Client

Axios may offer you solutions for your HTTP requests, but it is not always convenient.

Here are some of the cons of using Axios:

- Installation and import are required (it is not native to JavaScript)

- Because it is not a built-in standard, you have to manage potential conflicts yourself

- Third-party libraries add weight and load to the website or application (worth considering)

5 Best axios Alternative Tools For GET and POST Requests

Here is a list of the 5 best Axios alternatives you can use for HTTP requests:

1. Fetch API

2. GraphQL

3. jQuery

4. SuperAgent

5. WebScrapingAPI

I will go through each of them to give you a better view of what they entail.

1. Fetch API

The Fetch API offers a JavaScript interface for accessing and manipulating parts of the protocol, such as requests and responses.

Though the Fetch API has been available in browsers for a while, it only reached Node.js core in version 18, where it was initially marked experimental.

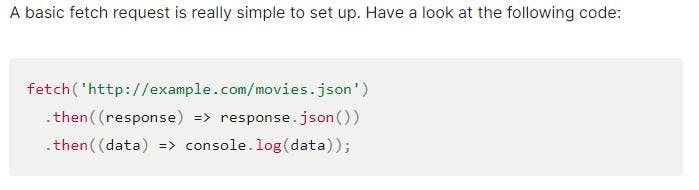

In its simplest form, a request with the Fetch API takes a URL and returns a promise that resolves to a response.

You may also customize the request by passing an optional object after the URL. This allows you to adjust things like the request method, headers, and body.
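A minimal sketch of both forms follows. The helper names `getJson` and `postJson` are hypothetical, and the `fetchImpl` parameter is an addition for this sketch: it defaults to the global `fetch` and only exists so the functions can be exercised with a stand-in.

```javascript
// GET: fetch(url) resolves to a Response; response.json() parses the body.
function getJson(url, fetchImpl = fetch) {
  return fetchImpl(url).then((response) => response.json());
}

// POST: the optional second argument sets the method, headers, and body.
function postJson(url, payload, fetchImpl = fetch) {
  return fetchImpl(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(payload), // fetch does not serialize objects for you
  }).then((response) => response.json());
}
```

A call such as `postJson('https://example.com/items', { id: 1 })` (placeholder URL) would send the object as a JSON body and resolve to the parsed JSON response.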

Pros

- Native in contemporary browsers (no installation/import required)

- JavaScript standard (Vanilla)

- A lightweight solution

- A modern approach that can take the place of XMLHttpRequest

Cons

- An extra step for JSON conversion is required for both the request and the response.

- Because fetch resolves its promise even for HTTP error statuses such as 404 or 500, you have to check the response and handle errors yourself

- Older browsers are not supported

- Historically available only on the client side (browser); Node.js versions before 18 do not provide it by default
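The error-handling point can be illustrated with a small wrapper. This is a sketch, not a standard API: `fetchJsonOrThrow` is a hypothetical helper, and `fetchImpl` is an injectable stand-in that defaults to the global `fetch`. It relies on the standard `response.ok` flag, which is true only for 2xx statuses.

```javascript
// Turn HTTP error statuses into rejected promises, since fetch only
// rejects on network-level failures.
async function fetchJsonOrThrow(url, options = {}, fetchImpl = fetch) {
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} for ${url}`);
  }
  return response.json();
}
```

Callers can then use a single `catch` for both network failures and HTTP error statuses.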

2. GraphQL

Because HTTP is ubiquitous, it is the most common client-server protocol for GraphQL.

GraphQL should be placed after all authentication middleware. This gives it access to the same session and user information as your HTTP endpoint handlers.

You first need to install a GraphQL server library for your stack.

Your GraphQL HTTP server should handle the GET and POST methods. GraphQL's conceptual model is an entity graph. As a result, entities in GraphQL are not identified by URLs.

Instead, a GraphQL server operates on a single URL/endpoint, and all queries for a given service should be directed at this endpoint.
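From the client side, the single-endpoint model can be sketched like this. It follows the common convention for GraphQL over HTTP: a POST carries a JSON body with `query` and `variables`, while a GET carries them as URL parameters. The endpoint URL and the helper names are placeholders invented for this example.

```javascript
// Placeholder endpoint (assumption): every query goes to the same URL.
const GRAPHQL_ENDPOINT = 'https://example.com/graphql';

// POST: the query and its variables travel in a JSON request body.
function buildGraphQLPost(query, variables = {}) {
  return {
    url: GRAPHQL_ENDPOINT,
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ query, variables }),
    },
  };
}

// GET: the query travels as a URL parameter; variables are JSON-encoded.
function buildGraphQLGetUrl(query, variables = {}) {
  const url = new URL(GRAPHQL_ENDPOINT);
  url.searchParams.set('query', query);
  url.searchParams.set('variables', JSON.stringify(variables));
  return url.toString();
}
```

The result of `buildGraphQLPost` can be handed to any HTTP client, for example `fetch(request.url, request.options)`.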

Pros

- Clients may specify what data they want from the server and receive it predictably.

- It is strongly typed, allowing API users to know what data is available and in what form it exists.

- It can fetch several resources in a single request.

- It avoids under-fetching and over-fetching problems.

Cons

- Regardless of whether or not a query is successful, it typically returns an HTTP status code of 200, with any errors reported in the response body.

- No built-in caching support

- Complexity. If you have a basic REST API and work with data that is generally stable over time, you should stick with REST.
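Because the status code alone does not signal failure, clients usually inspect the response body. The sketch below assumes the conventional GraphQL response shape, with a `data` field and an optional `errors` array; `unwrapGraphQLResponse` is a hypothetical helper name.

```javascript
// Return the data from a parsed GraphQL response body, or throw if the
// server reported errors despite answering with HTTP 200.
function unwrapGraphQLResponse(body) {
  if (Array.isArray(body.errors) && body.errors.length > 0) {
    const messages = body.errors.map((e) => e.message).join('; ');
    throw new Error(`GraphQL error: ${messages}`);
  }
  return body.data;
}
```

This sketch treats any reported error as a failure; a server may return partial `data` alongside `errors`, so some clients choose to keep both.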

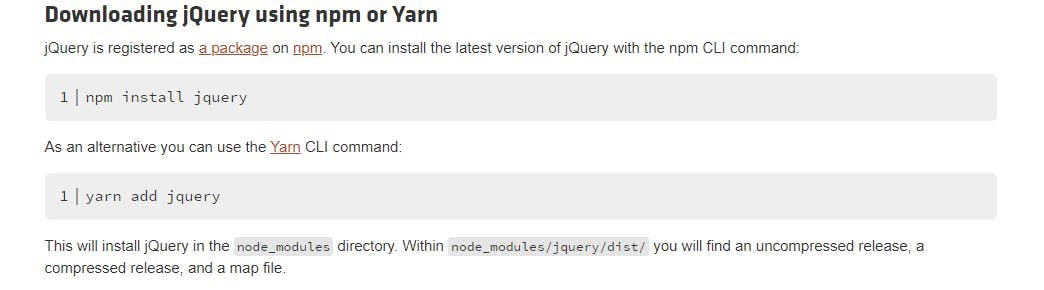

3. jQuery

JavaScript in the browser has a built-in interface for processing HTTP requests, XMLHttpRequest. But sending many requests using this interface is a hassle and requires a lot of code.

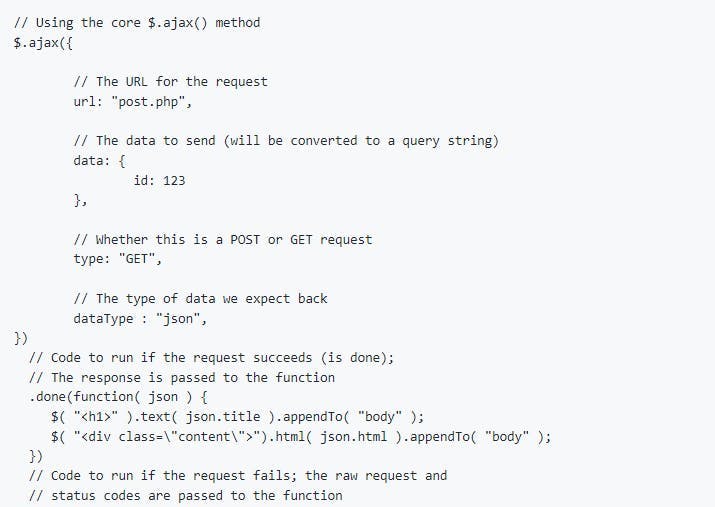

Anyone who has worked with jQuery should be familiar with the $.ajax method. It wraps the built-in interface in a cleaner, more user-friendly abstraction. This allows you to make HTTP requests with fewer lines of code.
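A minimal sketch of a `$.ajax` call is shown below. The URL and payload are placeholders, and `buildAjaxSettings` and `createUserWithJquery` are hypothetical helpers. The jQuery object is passed in as a parameter so the snippet does not assume how the library was loaded on the page.

```javascript
// Settings object for $.ajax: method, url, request body, and response type.
function buildAjaxSettings(payload) {
  return {
    url: 'https://example.com/api/users', // placeholder endpoint (assumption)
    method: 'POST',
    contentType: 'application/json',      // Content-Type of the request body
    data: JSON.stringify(payload),
    dataType: 'json',                     // parse the response as JSON
  };
}

// `$` is the jQuery object; $.ajax returns a promise-like jqXHR object.
function createUserWithJquery($, payload) {
  return $.ajax(buildAjaxSettings(payload));
}
```

On a page that already loads jQuery, `createUserWithJquery($, { name: 'Ada' }).done(console.log).fail(console.error)` would send the request.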

As libraries like jQuery have fallen out of favor in recent years, developers increasingly prefer native JavaScript solutions.

Pros

- jQuery is relatively easy to use. It requires little programming expertise to create eye-catching web pages and animations.

- jQuery is also highly adaptable since it allows users to install plugins.

- jQuery offers quick solutions to common problems.

- jQuery includes UI and effects libraries and can quickly integrate Ajax capabilities into an application.

- jQuery can perform complex JavaScript tasks with minimal code.

- jQuery is free and widely supported across a variety of applications. You can use this library in any application without worrying about licensing or compatibility issues.

Cons

- jQuery has its drawbacks, such as not everything being developed to a single standard.

- jQuery has several versions available. Some versions work well together, while others do not.

- When jQuery is misused as a framework, the development environment can spiral out of control.

- jQuery can be relatively slow, especially for animations, where it can be much slower than CSS.

- Maintaining a jQuery site can quickly become a headache.

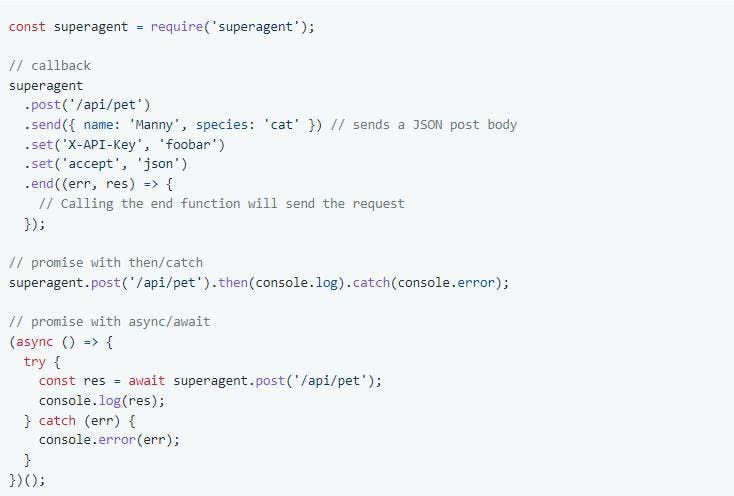

4. SuperAgent

SuperAgent is a small HTTP request library that you can use to make AJAX requests in Node.js and browsers.

It provides both callback-based and promise-based APIs, so you can also use async/await on top of its promises.

SuperAgent has dozens of plugins available for tasks such as cache prevention, server payload transformation, and adding suffixes or prefixes to URLs. They range from no-cache to plugins for monitoring HTTP timings.

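You can install SuperAgent from npm by running npm install superagent.

HTTP requests with SuperAgent use a chainable API: you start with a method such as get or post, add query parameters, headers, or a body, and then handle the response.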
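A minimal sketch of SuperAgent requests is shown below. The URL is a placeholder and the helper names are hypothetical. The `agent` parameter stands for the imported superagent module, and the sketch uses only its `get`, `post`, `query`, `set`, and `send` methods plus the promise interface.

```javascript
// GET with query parameters and a header; res.body holds the parsed JSON.
function searchUsers(agent, name) {
  return agent
    .get('https://example.com/api/users') // placeholder endpoint (assumption)
    .query({ name })                      // appended as ?name=...
    .set('Accept', 'application/json')
    .then((res) => res.body);
}

// POST: send() serializes plain objects as JSON by default.
function createUser(agent, user) {
  return agent
    .post('https://example.com/api/users')
    .send(user)
    .then((res) => res.body);
}
```

With the package installed, `searchUsers(require('superagent'), 'ada').then(console.log)` would run the GET request.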

You can also extend its functionality by writing your own plugin. SuperAgent can also parse JSON data for you.

Pros

- SuperAgent is known for offering a fluent interface for making HTTP requests, along with a plugin architecture and several plugins that provide useful functionality.

- SuperAgent provides a stream API and a promise API, request cancellation, and retries whenever a request fails

Cons

- At the time of writing, the build of SuperAgent is failing. It also does not provide upload progress tracking as XMLHttpRequest does.

- Timers, metadata errors, and hooks are not supported.

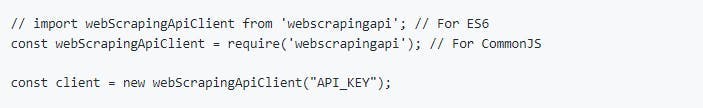

5. WebScrapingAPI

I must say that WebScrapingAPI has provided me with practical solutions. It has solved problems I've encountered when retrieving data from the web. The API has saved me money and time by allowing me to concentrate on developing my product.

WebScrapingAPI is a simple REST API interface for scraping web pages at scale. It allows users to scrape websites and extract HTML code efficiently.

You can access the API in several ways:

1. By using the official API endpoint

2. By using one of their published SDKs:

- WebScrapingAPI NodeJS SDK

- WebScrapingAPI Python SDK

- WebScrapingAPI Rust SDK

- WebScrapingAPI PHP SDK

- WebScrapingAPI Java SDK

- WebScrapingAPI Scrapy SDK

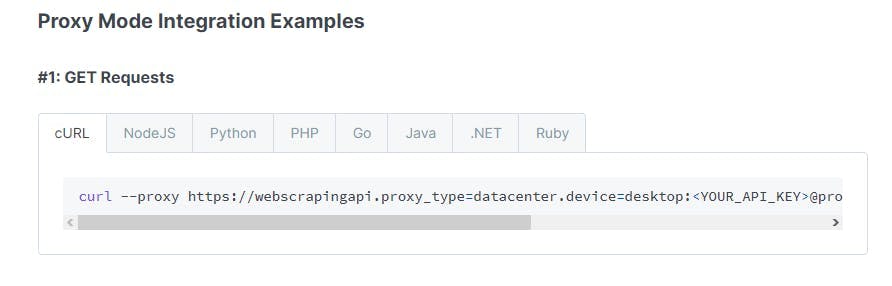

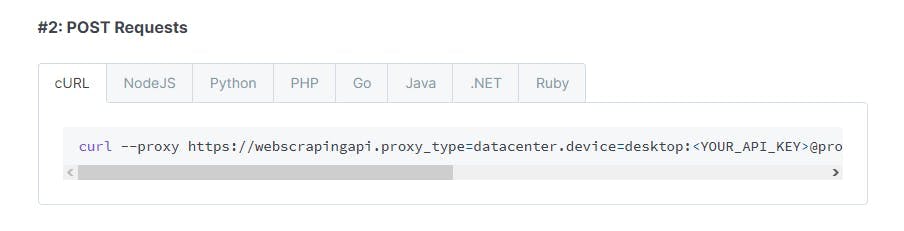

3. By using Proxy Mode

The most basic request you can make to the API sets two parameters: your API access key and the URL of the website you wish to scrape.

The API handles functions that a programmer would otherwise have to develop, so that it can provide the best possible level of service for its clients.

Here are a few of the things WebScrapingAPI takes care of:

- Geolocations

- Solving Captchas

- IP Blocks

- IP Rotations

- Custom cookies

Some webpages use JavaScript to render crucial page components, which means that some content will not be present on the first page load and will not be scraped. By using a headless browser, the API can render this content and extract it for you.

You only need to set render_js=1, and you're ready to go!
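The basic request and the JavaScript-rendering switch can be sketched as a URL builder. Everything specific here is an assumption to check against the official documentation: the endpoint below is a placeholder, the parameter names `api_key` and `url` are inferred from the description above, the rendering flag is assumed to be spelled `render_js`, and `buildScrapeUrl` is a hypothetical helper.

```javascript
// Placeholder (assumption): substitute the official endpoint from the docs.
const API_ENDPOINT = 'https://api.example.com/v1';

// Build the request URL from the access key, the page to scrape, and the
// optional JavaScript-rendering flag.
function buildScrapeUrl(apiKey, targetUrl, { renderJs = false } = {}) {
  const requestUrl = new URL(API_ENDPOINT);
  requestUrl.searchParams.set('api_key', apiKey);  // assumed parameter name
  requestUrl.searchParams.set('url', targetUrl);   // percent-encoded automatically
  if (renderJs) {
    requestUrl.searchParams.set('render_js', '1'); // assumed parameter name
  }
  return requestUrl.toString();
}
```

The resulting URL can be requested with any HTTP client, for example `fetch(buildScrapeUrl(key, 'https://example.com', { renderJs: true }))`.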

The service has over 100 million proxies to help ensure you don't get blocked. This matters because certain websites can only be scraped from specific locations worldwide, and you need a proxy in the right location to access their data.

WebScrapingAPI handles everything for you because keeping a proxy pool is tough. It uses millions of rotating proxies to keep you hidden. It also grants you access to geo-restricted content through a particular IP address.

Moreover, the API's infrastructure is built on AWS, giving you extensive, secure, and reliable access to data at global scale.

You can also use other scraping functionalities of WebScrapingAPI. Some of these may be used by adding a few additional arguments, while others are already built into the API.

In my honest opinion, using WebScrapingAPI is a win.

Pros

- Architecture is built on AWS

- Affordable pricing

- JavaScript rendering

- Millions of rotating proxies to reduce blocking

- Customizable features

Cons

None so far.

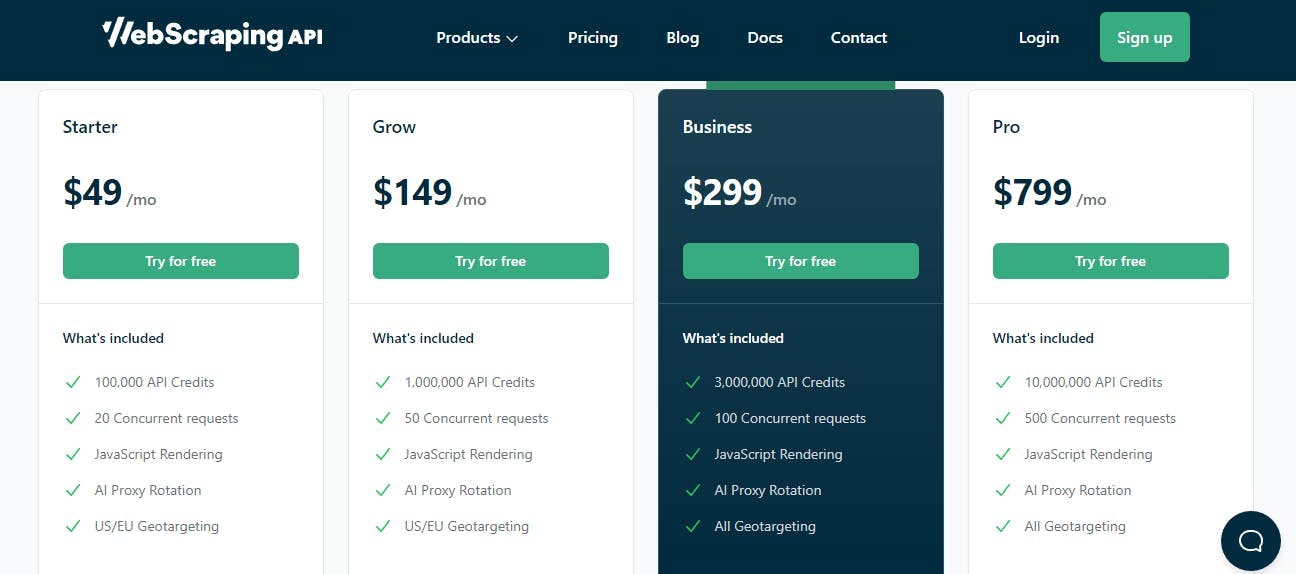

Pricing

- $49 per month.

- Free trial options

Understanding the services offered by WebScrapingAPI is crucial to getting the most out of it on your web scraping journey. You can find this information in the complete documentation, which includes code samples in various programming languages.

Why WebScrapingAPI is my Top Axios Alternative

I recommend WebScrapingAPI for data extraction because it offers straightforward solutions, in a single API, for anyone doing web scraping. You only need to submit your HTTP requests and let it do the rest.

The API gets the job done!

Remember that you can also use scraped data to keep track of competitive rates and offer better deals to your customers. As prices in your industry vary, you can use data from this API to forecast how your business will fare.

How much better can this API get?

WebScrapingAPI's real value lies in letting users customize each request. Adding the appropriate parameters to your request allows you to do practically anything.

You can render JavaScript on selected websites, establish sessions, or parse the HTML page into a JSON format.

The API architecture is also built on Amazon Web Services, giving you comprehensive, secure, and dependable access to data at global scale. As a result, firms such as Steelseries and Wunderman Thompson rely on this API for their data requirements.

Furthermore, it only costs $49 per month. I'm enamored with its speed. It already has over 10,000 users, thanks to its worldwide rotating proxy network. That is why I recommend using WebScrapingAPI to get data.
