Web Crawling With Python: A Detailed Guide on How to Scrape With Python

WebscrapingAPI on Dec 13 2022

Web crawling is a powerful technique for collecting data from the web by discovering all the URLs of one or more domains.

The Python ecosystem offers numerous popular web crawling frameworks and libraries. With the rise of the big data era, the need for web-sourced information has grown.

Many organizations extract external data from the web for a range of purposes: acquiring daily stock prices to build forecasting models, tracking specific market trends, summarizing the latest stories or events, or analyzing the competition.

A web crawler is vital in all of these scenarios. It automatically browses the web and extracts data based on a set of specified rules.

In this blog, we will highlight what web crawling is, how it works, and the various web crawling strategies.

Furthermore, we will help you learn how to develop a basic web crawler in Python using two libraries: Requests and BeautifulSoup.

Lastly, we will explain why you should use a web crawler framework like WebScrapingAPI. So, let's start.

Web Crawler: What Is It?

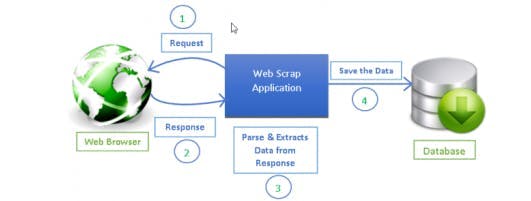

Web scraping and web crawling are two distinct yet related concepts. Web crawling is a crucial part of web scraping, because the scraper code can only process the URLs that the crawler logic finds.

A web crawler starts with a list of URLs to visit, known as the seed. For every URL, the crawler locates the links in the HTML document, filters them according to a few criteria, and adds the new links to a queue.

It extracts either specific pieces of data or the full HTML, which is subsequently processed by a separate pipeline.

Web Crawlers Classification

Based on their structure and the technology they use, web crawlers can be grouped into four types: general web crawlers, focused web crawlers, incremental web crawlers, and deep web crawlers.

Web Crawlers & Their Fundamental Workflow

A general web crawler follows this basic workflow:

Obtain the first URL. The initial URL is the web crawler's entry point; it points to the first web page to be crawled.

While crawling the web page, download its HTML content, then use an HTML parser to extract the URLs of all the pages it links to.

Place all these URLs in a queue. Work through the queue and read the URLs one at a time.

Then, crawl the corresponding web page for every URL and repeat the steps above.

Finally, check whether the stop condition is met. If no stop condition is set, the web crawler will keep crawling until it can no longer find a new URL.
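The workflow above can be sketched in a few lines of Python. This is a minimal illustration, not a production crawler: `FAKE_WEB`, `get_links`, and `crawl` are hypothetical names, and the in-memory dictionary stands in for real pages so that the queue and stop-condition logic can be followed without any network access.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the URLs its page links to.
# A real crawler would download and parse each page instead.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def get_links(url):
    """Stand-in for 'download the page and extract its links'."""
    return FAKE_WEB.get(url, [])


def crawl(seed_url, max_pages=100):
    """Breadth-first crawl from seed_url; returns URLs in visit order."""
    queue = deque([seed_url])  # URLs waiting to be crawled
    seen = {seed_url}          # URLs already queued, to avoid repeats
    visited = []

    # Stop condition: the queue is empty or the page limit is reached.
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


if __name__ == "__main__":
    for page in crawl("https://example.com/"):
        print(page)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, and `max_pages` plays the role of the stop condition.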

Web Crawling: What Environmental Preparation Do You Need To Take?

- Make sure a browser such as Chrome, Internet Explorer, or Safari is installed in your environment.

- Download and install the Python programming language.

- Download a suitable IDE, such as Visual Studio Code.

- Install the required Python packages. For instance, pip is a Python package management tool that helps you find, download, install, and uninstall Python packages.

Strategies For Web Crawling That You Must Know

Typically, web crawlers explore only a subset of web pages, determined by a crawl budget. This budget can be a maximum number of pages per domain, a maximum depth, or a maximum execution time.

Many popular websites provide a robots.txt file that indicates which parts of the site user agents are not allowed to crawl. The sitemap.xml file is the opposite of the robots.txt file: it lists the pages that can be crawled.
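As a rough illustration of how a crawler can honor robots.txt, the sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the `example-crawler` user agent, and the helper names `build_parser` and `is_allowed` are made up for this example; a real crawler would fetch the file from the target site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search
"""


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-crawler"):
    """Return True if user_agent may fetch url according to the rules."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for url in ("https://example.com/about", "https://example.com/private/data"):
        print(url, is_allowed(rules, url))
```

For a live site, `RobotFileParser` also offers `set_url()` and `read()` to download the file instead of parsing an inline string.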

Some of the most popular use cases for web crawlers are:

Use Case 1:

Search engine crawlers such as YandexBot, Bingbot, and Googlebot collect the HTML of a large portion of the web. The collected data is then indexed to make it searchable.

Use Case 2:

Besides collecting HTML, SEO analytics tools also collect metadata such as response time and status code to detect broken pages, and they follow the links between domains to collect backlinks.

Use Case 3:

Price monitoring tools crawl e-commerce websites to find product pages and extract metadata, mainly the price. The product pages are then revisited periodically.

Use Case 4:

Common Crawl maintains an open repository of web crawl data. For instance, there were 2.71 billion web pages stored in the archive as of Oct 2020.

Developing Basic Web Crawler In Python From The Beginning

To develop a basic web crawler in Python, you need at least one library for downloading the HTML from URLs and one HTML parsing library for extracting links.

Python's standard library provides urllib for performing HTTP requests and html.parser for parsing HTML. On GitHub, you can find an example Python crawler built using only these standard libraries.
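To give a feel for the standard-library approach, here is a minimal sketch that downloads a page with `urllib` and extracts its links with `html.parser`. It is not the GitHub example mentioned above; `LinkParser`, `extract_links`, and `download` are hypothetical names chosen for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag, resolved against base_url."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Turn relative links into absolute URLs.
                    self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return the absolute URLs of all links found in an HTML string."""
    parser = LinkParser(base_url)
    parser.feed(html)
    return parser.links


def download(url, timeout=10):
    """Fetch a page and return its body as text (performs a network request)."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


if __name__ == "__main__":
    sample = '<a href="/about">About</a> <a href="https://other.org/x">Other</a>'
    print(extract_links(sample, "https://example.com/"))
```

Calling `extract_links(download(url), url)` combines the two steps for a live page.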

However, the standard Python libraries for HTTP requests and HTML parsing aren't very developer-friendly.

Other well-known libraries, such as Requests and BeautifulSoup, offer a better developer experience.

A basic crawler built on these two libraries follows the workflow described earlier: download a page, parse its HTML, collect the links, and add them to a queue.

Such a crawler is simple to write. However, there are several usability and performance issues to resolve before it can crawl an entire website and scrape its data reliably.

- The web crawler is slow and supports no parallelism. It typically takes around one second to crawl each URL.

- Every time the web crawler sends a request, it waits for the response, and no work is done in the meantime.

- The download logic has no retry mechanism. The URL queue isn't a real queue and becomes inefficient with a large number of URLs.

- The link extraction logic doesn't filter URLs by domain or filter out requests to static files, doesn't handle URLs beginning with a hash sign (#), and doesn't normalize URLs by removing query string parameters.

- The web crawler ignores the robots.txt file and doesn't identify itself.
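Some of the link extraction gaps listed above can be closed with the standard `urllib.parse` module. The sketch below is one possible approach under simple assumptions: `normalize_url`, `STATIC_EXTENSIONS`, and the rule of dropping every query string are illustrative choices, not a universal recipe.

```python
from urllib.parse import urljoin, urlsplit, urlunsplit

# Hypothetical list of file extensions treated as static files.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".pdf", ".zip")


def normalize_url(base_url, href, allowed_domain):
    """Return a cleaned absolute URL, or None if the link should be skipped."""
    # Skip empty links and same-page anchors such as "#top".
    if not href or href.startswith("#"):
        return None

    parts = urlsplit(urljoin(base_url, href))

    # Keep only web links (drops mailto:, javascript:, and so on).
    if parts.scheme not in ("http", "https"):
        return None
    # Stay on the domain being crawled.
    if parts.netloc.lower() != allowed_domain:
        return None
    # Ignore requests to static files.
    if parts.path.lower().endswith(STATIC_EXTENSIONS):
        return None

    # Drop the query string and fragment so duplicates collapse to one URL.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", "", ""))


if __name__ == "__main__":
    base = "https://example.com/a/"
    for href in ("#top", "../b?page=2#x", "https://other.org/", "/style.css"):
        print(href, "->", normalize_url(base, href, "example.com"))
```

Removing all query parameters is a blunt rule: on sites where the query string selects the content (for example pagination), you would keep or whitelist the relevant parameters instead.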

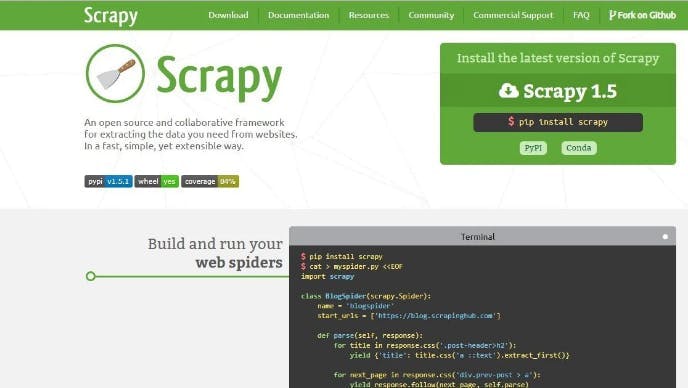

Now, let's look at why you should use Scrapy and how it makes custom web crawling easier.

Web Crawling With Scrapy

Scrapy is one of the most well-known web scraping and crawling Python packages and is highly rated on GitHub.

A significant benefit of Scrapy is that requests are scheduled and handled asynchronously. This means that Scrapy can send another request before the previous one has completed, or perform other work in between.

Scrapy can handle many concurrent requests, but it can also be configured through custom settings to be polite to the websites it crawls.

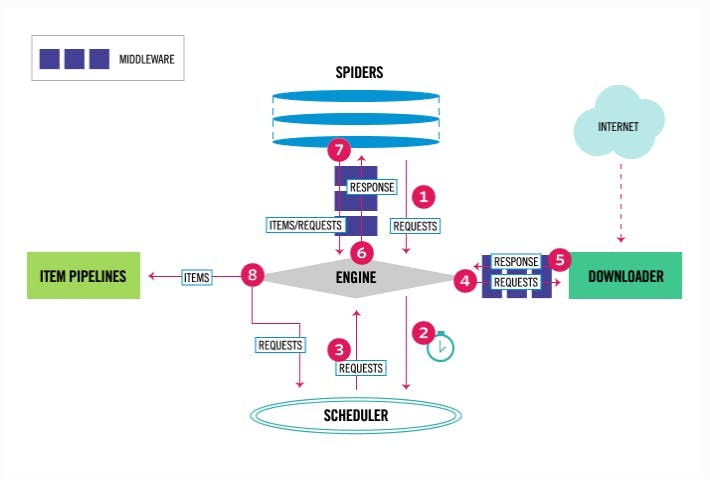

Scrapy has a multi-component architecture. Typically, you will implement at least two classes: a Spider and a Pipeline.

Web scraping can be thought of as an ETL process: you extract data from the web and load it into your own storage.

Spiders extract the data, and pipelines load it into your storage. Transformation can happen in both spiders and pipelines.

However, it's advisable to set up a custom Scrapy pipeline that transforms every item independently. That way, a failure to process one item will not affect the other items.

In addition, you can add spider and downloader middlewares between components.
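As an illustration of per-item transformation, the sketch below shows what a small custom pipeline could look like. A Scrapy item pipeline is a plain Python class with a `process_item` method, so this example needs no imports. The class name `PriceToFloatPipeline` and the `price` field are hypothetical, and the `process_item(self, item, spider)` signature is the long-documented one; check the Scrapy documentation for the version you use.

```python
class PriceToFloatPipeline:
    """Hypothetical pipeline: converts a price string such as '$1,299.00' to a float."""

    def process_item(self, item, spider):
        raw = str(item.get("price", ""))
        cleaned = raw.replace("$", "").replace(",", "").strip()
        try:
            item["price"] = float(cleaned)
        except ValueError:
            # A malformed price affects only this item, not the rest of the crawl.
            item["price"] = None
        return item


if __name__ == "__main__":
    pipeline = PriceToFloatPipeline()
    print(pipeline.process_item({"title": "Laptop", "price": "$1,299.00"}, spider=None))
    print(pipeline.process_item({"title": "Mystery box", "price": "n/a"}, spider=None))
```

In a Scrapy project, a pipeline like this is enabled through the `ITEM_PIPELINES` setting.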

Overview of The Scrapy Architecture

If you've tried Scrapy before, you'll know that a web scraper is defined as a class that inherits from the base Spider class and implements a parse method to handle each response.

Scrapy also offers several generic spider classes, including SitemapSpider, CSVFeedSpider, XMLFeedSpider, and CrawlSpider.

The CrawlSpider class inherits from the base Scrapy Spider class and provides an additional rules attribute that defines how to crawl a specific website.

Every rule uses a LinkExtractor to specify which links are extracted from each web page.

Developing An Exemplar Scrapy Crawler For IMDb

Before attempting to crawl the IMDb website, check the IMDb robots.txt file to see which URL paths are allowed.

The robots file disallows only 26 paths for all user agents. Scrapy reads the robots.txt file beforehand and respects it when the ROBOTSTXT_OBEY setting is set to true.

This is the case for every project created using the Scrapy command startproject.
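For reference, a fragment of a project's settings.py that combines robots.txt compliance with polite crawling might look like the sketch below. The setting names are real Scrapy settings, but the values and the user agent string are illustrative assumptions, not recommendations for IMDb or any other site.

```python
# settings.py (fragment) -- illustrative values only

# Respect the rules published in each site's robots.txt.
ROBOTSTXT_OBEY = True

# Identify the crawler; this name and URL are hypothetical.
USER_AGENT = "example-crawler (+https://example.com/contact)"

# Limit parallel requests to a single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Wait between requests to the same site, in seconds.
DOWNLOAD_DELAY = 0.5

# Let Scrapy adapt the delay to the server's response times.
AUTOTHROTTLE_ENABLED = True
```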

Web Crawling At Scale

With default settings, Scrapy's spiders can crawl around 600 pages per minute on a website like IMDb. At that rate, a single crawler would need more than 50 days to crawl about 45 million pages.

If you need to crawl multiple websites, it's advisable to run a separate crawler for each large website or group of websites.
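The 50-day figure follows directly from the two numbers above. The short calculation below reproduces it; `crawl_days` is a hypothetical helper name.

```python
def crawl_days(total_pages, pages_per_minute):
    """Days needed to crawl total_pages at a constant pages_per_minute rate."""
    minutes = total_pages / pages_per_minute
    return minutes / (60 * 24)


if __name__ == "__main__":
    days = crawl_days(45_000_000, 600)
    print(f"{days:.1f} days")  # prints "52.1 days"
```

Doubling the number of crawlers working on disjoint parts of the site would roughly halve this estimate, assuming the site tolerates the extra load.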

Web Crawling With Python Is Easy With the Right Scrapy Configuration

You can build a Python crawler in two ways: by combining third-party libraries for downloading URLs and parsing HTML, or by using a popular web crawling framework.

Scrapy is an excellent web crawling framework that you can easily extend with your own code. However, you need to know all the places where you can plug in your own code and the settings for every component.

Proper Scrapy configuration becomes even more important when you crawl websites with millions of pages. If you want to learn more about web crawling, pick a popular website and try to crawl it.

Perform Seamless Web Crawling & Web Scraping Using WebScrapingAPI

Although you'll find plenty of open-source crawlers, they may not be capable of crawling complex web pages and websites at a massive scale.

You'll have to modify the underlying code to make it work for your intended page. It may also fail to work on every operating system in your environment. Computational and speed requirements can be another issue.

To overcome such hurdles, WebScrapingAPI lets you crawl multiple pages regardless of your programming language, device, or platform, and stores the content in database systems or simple, readable file formats like .csv.

When it comes to crawling and scraping data from the web, WebScrapingAPI makes things simpler.

You can learn more about our web scraping and crawling capabilities by visiting our website and contacting us. For details on pricing, see the pricing page on our website.
